Like I promised, hereby my official submission for the CoZ bounty, which contains a full and practical implementation of the HoneyBadger algorithm as described in the paper. All sub-protocols are tested and implemented in the top-level HoneyBadger protocol.

The repository can be found here.

Like most other BFT protocols hbbft is very well suited for the land of the wild --which in my opinion the crypto landscape is as today-- it guarantees liveness of the network even when nodes are behaving faulty. But what intrigued me the most is how its constructed --which comes at a high implementation cost in time and effort--. The hbbft algorithm contains 4 protocols which I implemented in modular building blocks. These protocols can be separately tested and implemented.

- Reliable broadcast algorithm (rbc.go)

- Byzantine binary agreement (bba.go)

- Asynchronous common subset (acs.go)

- HoneyBadger protocol (honey_badger.go)

HoneyBadger guarantees that all good nodes output the same set of transactions in each epoch. This makes it really interesting as we don't need to elect new leaders, the network can keep committing transactions instead of wasting time and bandwidth on leader elections. The nodes could keep track of the first output in a certain epoch by one of the consensus nodes and use that to record into a new block, ignoring identical sets in the same epoch.

ok github.com/anthdm/hbbft (cached) coverage: 73.6% of statements

I would be a rock-superstar if it was, but this code is fresh and will most likely need more community adoption and in-depth stress testing in real-world situations to be stamped as "rock solid".

I added an abstract Transport interface which should make it very easy to implement custom transports. I currently implemented LocalTransport for testing.

Consenter will be used for testing hbbft in a more realistic scenario consenter

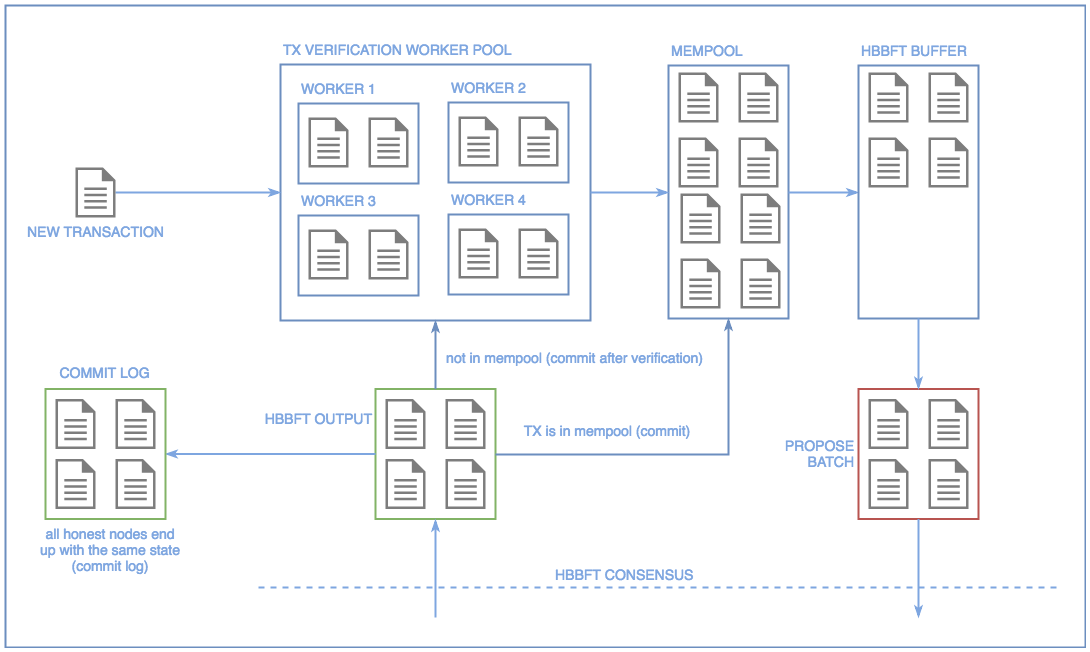

It's possible that during primetime the nodes will be under heavy load --when a token sale is happening for example--. To handle this peak we can make use of dynamic batch sizing. In hbbft the length of the unbounded transaction buffer len(buf) is a very good metric for this. To enable autoscaling to a certain point we can measure the length of the buffer over a specified number of epochs N. When N is reached and batch size B reaches a threshold T we can dynamically scale up the batch size hence increasing the throughput of the node. It's also important to make sure that length of the buffer never reaches 0. The implementation of hbbft as of today will pause and poll for new transactions in the buffer before it will propose a new batch which will hit performance. Ideally is the length of the buffer between > B / 2 and < B. When the length of the buffer is too high we need to scale B down.

Another way that can enhance autoscaling is to dynamically scale up the TX verification worker pool. Both approaches can work together to handle high amounts of load on the node.

- One node receives a new transaction from a client.

- This transactions will feed in to the TX verification worker pool where a random worker will pick it up and verifies the transaction.

- After verification the transaction will be inserted in to the mempool of the node and pushed on top of the hbbft buffer.

- hbbft engine will propose a random batch transactions from its buffer.

- The node will receive output from the hbbft engine. If the transaction is in our mempool we can directly commit the transaction. Otherwise we need to perform verification and feed this transaction to the TX worker pool. After verification the transaction can be commited and appended to the commit log.

- All good / honest nodes will end up with the same commit log.

- number of nodes 4

- tx delay 2ms

- batch size 600

- tx worker pool size 4

===============================================

SERVER (1)

commited 70270 transactions over 33.62288822s

throughput 2129 TX/S

===============================================

===============================================

SERVER (1)

commited 75010 transactions over 35.772351082s

throughput 2143 TX/S

===============================================

===============================================

SERVER (1)

commited 80632 transactions over 38.512462328s

throughput 2121 TX/S

===============================================

===============================================

SERVER (1)

commited 85472 transactions over 40.466456374s

throughput 2136 TX/S

===============================================

===============================================

SERVER (1)

commited 89657 transactions over 42.20158124s

throughput 2134 TX/S

===============================================

===============================================

SERVER (1)

commited 93493 transactions over 43.843495793s

throughput 2174 TX/S

===============================================

The performance of hbbft is very good for small to medium size networks, adding more nodes impact performance drastically, we can counter this by increasing the batch size, but this approach will not scale infinitely. One round of HoneyBadger consensus can contain around 80 messages that are exchanged by participants in the network before reaching consensus on a set of transactions. However the liveness that the algorithm guarantees when nodes are faulty is rock solid --no more 10 minutes+ block delays--.

Keep in mind that these are benches for the algorithm. Real world implementation will suffer by couple of variables:

- number of carrier nodes

- TX verification / processing on the underlying node

- Server architecture

- ..

2018/05/25 22:06:00 Starting benchmark 4 nodes 128 tx size 100 batch size over 5 seconds...

2018/05/25 22:06:05 node (0) processed a total of (117010) transactions in 5 seconds [ 23402 tx/s ]

2018/05/25 22:06:05 node (1) processed a total of (116923) transactions in 5 seconds [ 23384 tx/s ]

2018/05/25 22:06:05 node (2) processed a total of (117010) transactions in 5 seconds [ 23402 tx/s ]

2018/05/25 22:06:05 node (3) processed a total of (116923) transactions in 5 seconds [ 23384 tx/s ]

2018/05/25 22:06:05 Starting benchmark 6 nodes 128 tx size 200 batch size over 5 seconds...

2018/05/25 22:06:10 node (0) processed a total of (89585) transactions in 5 seconds [ 17917 tx/s ]

2018/05/25 22:06:10 node (1) processed a total of (89585) transactions in 5 seconds [ 17917 tx/s ]

2018/05/25 22:06:10 node (2) processed a total of (89585) transactions in 5 seconds [ 17917 tx/s ]

2018/05/25 22:06:10 node (3) processed a total of (89585) transactions in 5 seconds [ 17917 tx/s ]

2018/05/25 22:06:10 node (4) processed a total of (89585) transactions in 5 seconds [ 17917 tx/s ]

2018/05/25 22:06:10 node (5) processed a total of (89585) transactions in 5 seconds [ 17917 tx/s ]

2018/05/25 22:06:10 Starting benchmark 8 nodes 128 tx size 400 batch size over 5 seconds...

2018/05/25 22:06:15 node (0) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (1) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (2) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (3) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (4) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (5) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (6) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 node (7) processed a total of (72259) transactions in 5 seconds [ 14451 tx/s ]

2018/05/25 22:06:15 Starting benchmark 12 nodes 128 tx size 1000 batch size over 5 seconds...

2018/05/25 22:06:20 node (0) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (1) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (2) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (3) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (4) processed a total of (53504) transactions in 5 seconds [ 10700 tx/s ]

2018/05/25 22:06:20 node (5) processed a total of (53504) transactions in 5 seconds [ 10700 tx/s ]

2018/05/25 22:06:20 node (6) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (7) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (8) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (9) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (10) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]

2018/05/25 22:06:20 node (11) processed a total of (52542) transactions in 5 seconds [ 10508 tx/s ]