I recommend being familiar with the basics of Kubernetes Pods before reading this blog. You can check this doc for details about Kubernetes Pods.

StatefulSet is a Kubernetes object designed to manage stateful applications.

Like a Deployment, a StatefulSet scales a set of Pods to the desired number that you define in a config file.

Pods in a StatefulSet run the same containers, as defined in the Pod spec inside the StatefulSet spec.

Unlike a Deployment, every Pod of a StatefulSet owns a sticky and stable identity.

A StatefulSet also provides guarantees about the ordering of deployment, deletion, scaling, and rolling updates for its Pods.

A complete StatefulSet consists of two components:

- A Headless Service used to control the network identity of the StatefulSet's Pods.

- A StatefulSet object used to create and manage its Pods.

The following example demonstrates how to use a StatefulSet to run a ZooKeeper service.

Please note that the following StatefulSet Spec is simplified for demo purposes.

You can check this YAML file for the complete configuration of this ZooKeeper Service.

A ZooKeeper service is a distributed coordination system for distributed applications. It allows you to read and write data and to observe data updates. Data is stored and replicated on each ZooKeeper server, and these servers work together as a ZooKeeper ensemble.

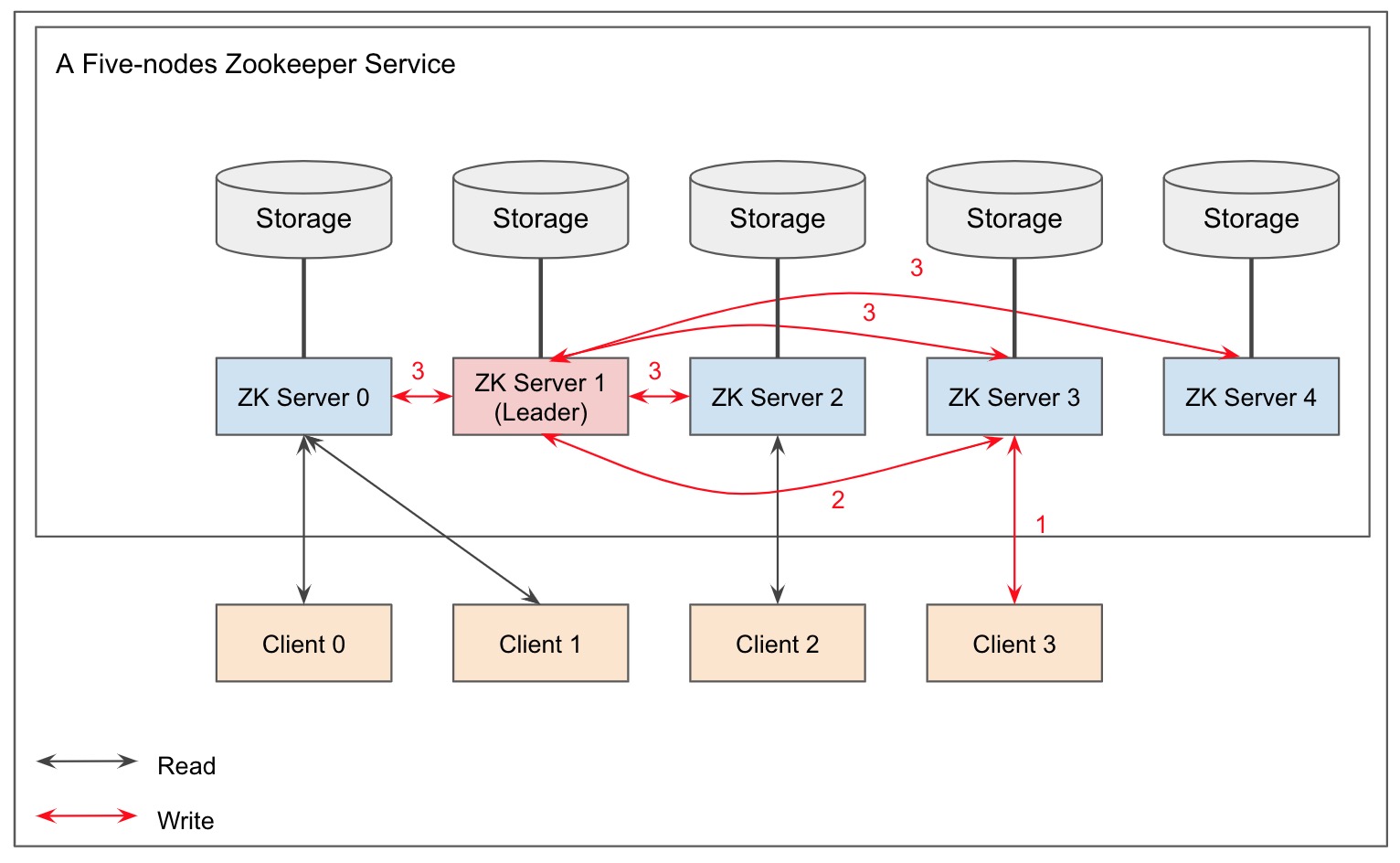

The picture above shows an overview of a ZooKeeper service with five ZooKeeper servers. Each server in a ZooKeeper service has a stable network ID that is used for leader elections. One of the ZooKeeper servers needs to be elected as the leader, which manages the service topology and processes write requests. A StatefulSet is suitable for running such an application because it guarantees each Pod a unique and stable identity.

A Headless Service is responsible for controlling the network domain of a StatefulSet. You create a Headless Service by setting spec.clusterIP to None.

The following spec creates a Headless Service for the ZooKeeper service. This Headless Service is used to manage Pod identity for the StatefulSet shown later in this post.

https://gist.github.com/c2a4b823cc46fd38ea7c2f7194cc6429
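
As a rough sketch of the idea, a Headless Service for this example could look like the following. The name zk-hs and the namespace default match the DNS names used in this post; the label app: zk and the port names are assumptions made for illustration, and the ports are the conventional ZooKeeper server and leader-election ports.

```yaml
# Sketch of a Headless Service for the ZooKeeper example (not the full spec).
apiVersion: v1
kind: Service
metadata:
  name: zk-hs            # matches the zk-hs name used in the DNS examples
  namespace: default
  labels:
    app: zk              # assumed label
spec:
  clusterIP: None        # this is what makes the Service headless
  selector:
    app: zk              # assumed; must match the labels on the StatefulSet's Pods
  ports:
    - name: server             # assumed name; conventional ZooKeeper peer port
      port: 2888
    - name: leader-election    # assumed name; conventional leader-election port
      port: 3888
```

Because clusterIP is None, Kubernetes does not allocate a virtual IP for this Service. It only publishes DNS records for the Pods selected by the label.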

Unlike a ClusterIP Service or a LoadBalancer Service, a Headless Service does not provide load balancing.

A DNS lookup for zk-hs.default.svc.cluster.local returns the IPs of the Pods directly instead of a single virtual IP, so the choice of Pod is left to the client. In my experience, requests to this name always ended up on the first StatefulSet Pod (zk-0 in the example).

Therefore, a Kubernetes Service that provides load balancing or an Ingress is required if you need to load-balance traffic for your StatefulSet.

The following spec demonstrates how to use a StatefulSet to run a ZooKeeper service:

https://gist.github.com/5dbea22c23af3b035690ba6228e627de

The field metadata contains metadata of this StatefulSet, which includes the name of this StatefulSet and the Namespace it belongs to.

You can also put labels and annotations in this field.

The field spec defines the specification of this StatefulSet and the field spec.template defines a template for creating the Pods this StatefulSet manages.

Like a Deployment, a StatefulSet uses the field spec.selector to find which Pods to manage.

You can check this doc for details about the usage of Pod Selector.
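
To make the fields described so far concrete, here is a minimal skeleton of a StatefulSet. It is a sketch rather than the full ZooKeeper configuration: the label app: zk and the container image are placeholders chosen for illustration, while the names zk and zk-hs follow the examples in this post.

```yaml
# Minimal StatefulSet skeleton (illustrative; not a production ZooKeeper spec).
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
  namespace: default
  labels:
    app: zk                    # assumed label
spec:
  serviceName: zk-hs           # the Headless Service that governs this StatefulSet
  replicas: 5                  # desired number of Pods
  selector:
    matchLabels:
      app: zk                  # must match spec.template.metadata.labels
  template:                    # template for the Pods this StatefulSet manages
    metadata:
      labels:
        app: zk
    spec:
      containers:
        - name: zookeeper
          image: example.com/zookeeper:latest   # hypothetical placeholder image
          ports:
            - name: client
              containerPort: 2181               # conventional ZooKeeper client port
```

The labels under spec.selector.matchLabels and spec.template.metadata.labels have to agree. Otherwise the API server rejects the StatefulSet.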

The field spec.replicas specifies the desired number of Pods for the StatefulSet. It is recommended to run an odd number of Pods for some stateful applications like ZooKeeper, for reasons of availability and write efficiency.

For example, a ZooKeeper service marks a data write complete only when more than half of its servers send an acknowledgment back to the leader.

Take a six-Pod ZooKeeper service as an example. The service remains available as long as at least four servers (floor(6/2) + 1) are available, which means your service can tolerate the failure of two servers. However, a five-server service also tolerates the failure of two servers, because it needs only three servers (floor(5/2) + 1) to stay available.

The smaller ensemble also improves write efficiency, as it needs acknowledgments from only three servers to complete a write request.

Therefore, having the sixth server in this case does not give you any additional advantage in terms of write efficiency or server availability.
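
The arithmetic behind this comparison can be written down explicitly, assuming a simple majority quorum. The symbols n (number of servers), q (quorum size), and f (tolerated failures) are introduced here only for illustration.

```latex
\[
q(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1,
\qquad
f(n) = n - q(n)
\]
\[
q(5) = 3,\quad f(5) = 2
\qquad\text{and}\qquad
q(6) = 4,\quad f(6) = 2
\]
```

Going from five to six servers raises the quorum from three to four but leaves the number of tolerated failures at two.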

A StatefulSet Pod is assigned a unique ID (its Pod name) by the StatefulSet controller when it is created.

This ID sticks to the Pod for the life of the StatefulSet, even when the Pod is rescheduled or recreated.

The ID follows the pattern ${statefulSetName}-${ordinal}. For example, Kubernetes will create five Pods with the five unique IDs zk-0, zk-1, zk-2, zk-3, and zk-4 for the above ZooKeeper service.

The ID of a StatefulSet Pod is also its hostname. Its subdomain takes the form ${podID}.${headlessServiceName}.${namespace}.svc.cluster.local, where cluster.local is the cluster domain.

For example, the subdomain of the first ZooKeeper Pod is zk-0.zk-hs.default.svc.cluster.local.

It is recommended to reach a StatefulSet Pod through its subdomain rather than its IP, because the subdomain is unique within the whole cluster and stays the same when the Pod is recreated, whereas the Pod IP can change.
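
As one way to put these stable names to use, a client application could read its ZooKeeper connection string from a ConfigMap. This is only a sketch: the ConfigMap name and the key ZK_CONNECT are hypothetical, and port 2181 is assumed to be the ZooKeeper client port.

```yaml
# Hypothetical ConfigMap that hands stable Pod DNS names to a client application.
apiVersion: v1
kind: ConfigMap
metadata:
  name: zk-client-config       # hypothetical name
  namespace: default
data:
  # Hypothetical key listing the stable DNS names of the first three ZooKeeper Pods.
  ZK_CONNECT: "zk-0.zk-hs.default.svc.cluster.local:2181,zk-1.zk-hs.default.svc.cluster.local:2181,zk-2.zk-hs.default.svc.cluster.local:2181"
```

Because the names do not change when a Pod is recreated, this connection string stays valid across restarts and rescheduling.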

You can choose whether a StatefulSet's Pods are created and deleted in order or in parallel by setting spec.podManagementPolicy to OrderedReady or Parallel. This setting applies to scaling operations; rolling updates are not affected by it.

OrderedReady is the default setting. It creates the Pods in the order 0, 1, 2, ..., N and deletes them in the order N, N-1, ..., 1, 0.

In addition, the controller waits for the current Pod to become Ready or to terminate completely before launching or terminating the next Pod.

Parallel launches or terminates all the Pods simultaneously. It does not rely on the state of the current Pod to launch or terminate the next Pod.
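
In a spec, the policy is a single field. The fragment below is a sketch that omits every other required field of the StatefulSet.

```yaml
# Fragment of a StatefulSet spec; other required fields are omitted.
spec:
  # Omit this field, or set it to OrderedReady, to keep the default ordered behavior.
  podManagementPolicy: Parallel
```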

StatefulSets support two update strategies, RollingUpdate and OnDelete, which you select through spec.updateStrategy.type.

RollingUpdate is the default strategy. It deletes and recreates the Pods of a StatefulSet one at a time when a rolling update occurs.

Doing rolling updates for stateful applications like ZooKeeper is a little bit tricky: the other Pods need enough time to elect a new leader when the StatefulSet controller is recreating the leader.

Therefore, you should consider configuring readinessProbe and readinessProbe.initialDelaySeconds for the containers inside a StatefulSet's Pods to delay the point at which a new Pod becomes Ready, thus delaying the rolling update of the next Pod and giving the other running Pods enough time to update the service topology.

This should give your stateful application, for example a ZooKeeper service, enough time to handle the case where a Pod is lost and then comes back.
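
A sketch of how these settings might fit together is shown below. The TCP check on port 2181 and the timing values are assumptions for illustration, so tune them for your own application.

```yaml
# Fragment of a StatefulSet spec; other required fields are omitted.
spec:
  updateStrategy:
    type: RollingUpdate            # the default strategy, shown here for clarity
  template:
    spec:
      containers:
        - name: zookeeper
          readinessProbe:
            tcpSocket:
              port: 2181           # assumed ZooKeeper client port
            initialDelaySeconds: 15  # illustrative value; delays the first readiness check
            periodSeconds: 5         # illustrative value
            timeoutSeconds: 5        # illustrative value
```

A TCP probe only confirms that the port accepts connections. A probe that checks whether the server has actually rejoined the ensemble would be a stronger signal, but it depends on what the container image provides.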

Like a Deployment, the ideal scenario for running a StatefulSet is to distribute its Pods across different nodes in different zones and avoid running multiple Pods on the same node. The spec.template.spec.affinity field allows you to specify node affinity and inter-pod affinity (or anti-affinity) for the StatefulSet's Pods.

You can check this doc for details about using node/pod affinity in Kubernetes.
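
For instance, a pod anti-affinity rule along the following lines would keep two ZooKeeper Pods off the same node. The label app: zk is an assumption and has to match whatever labels your Pod template uses.

```yaml
# Fragment of a StatefulSet spec; other required fields are omitted.
spec:
  template:
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app           # assumed label key
                    operator: In
                    values:
                      - zk             # assumed label value
              topologyKey: kubernetes.io/hostname   # at most one matching Pod per node
```

Using topology.kubernetes.io/zone as the topologyKey instead would spread the Pods across zones rather than nodes.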

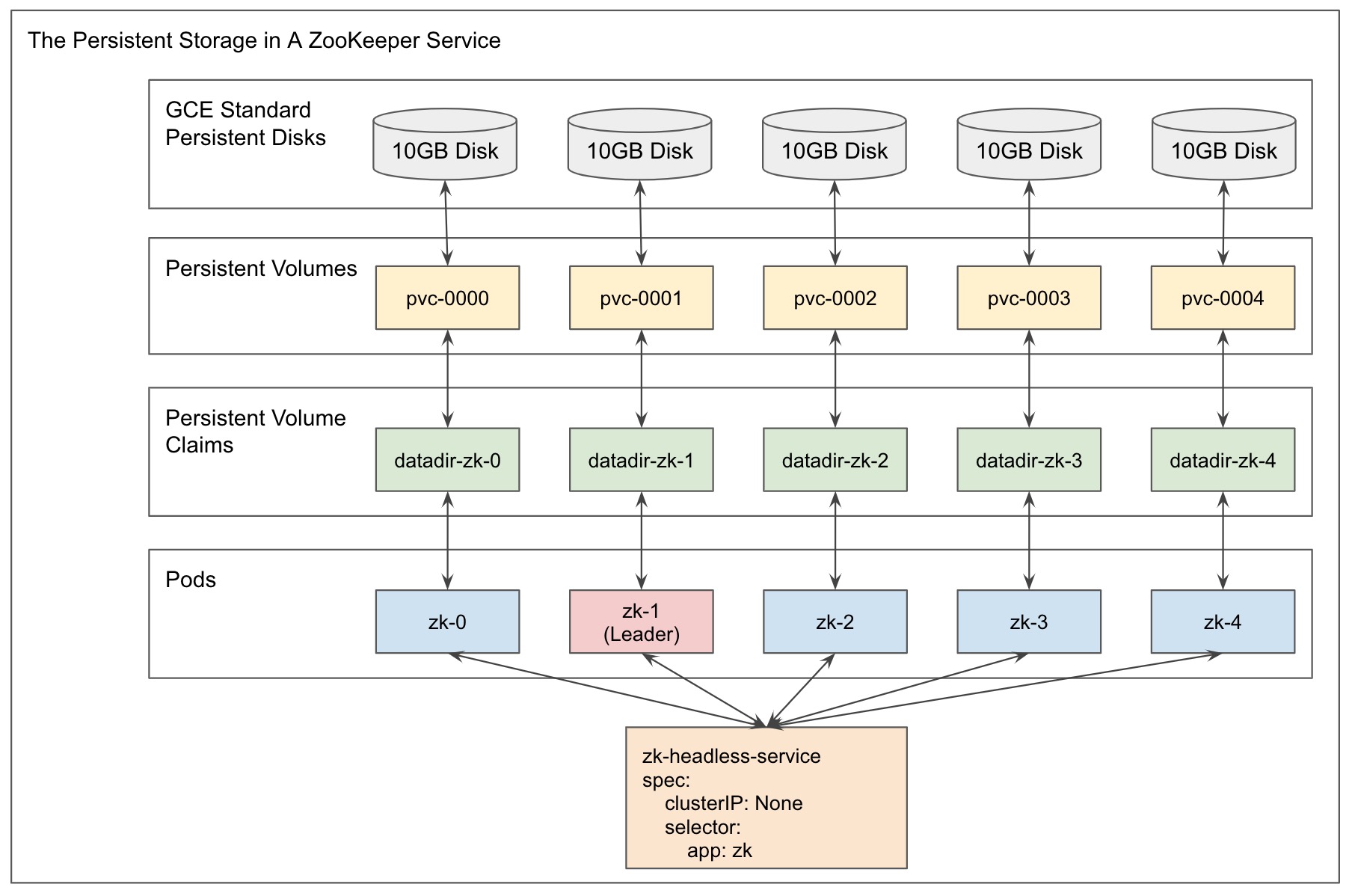

The field spec.volumeClaimTemplates is used to provide stable storage for StatefulSets.

As shown in the picture above, the field spec.volumeClaimTemplates creates a Persistent Volume Claim (datadir-zk-0), a Persistent Volume (pv-0000), and a 10 GB standard persistent disk for Pod zk-0. This storage is tied to the Pod's identity rather than to a particular Pod instance, which means the storage for a StatefulSet Pod is stable and persistent. A StatefulSet Pod does not lose its data when it is terminated and recreated.
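
A volume claim template that would produce a claim named datadir-zk-0 could look like the sketch below. The claim name is built from the template name and the Pod name. The mount path is an assumption, and 10Gi is used as the closest Kubernetes quantity to the 10 GB disk mentioned above.

```yaml
# Fragment of a StatefulSet spec; other required fields are omitted.
spec:
  template:
    spec:
      containers:
        - name: zookeeper
          volumeMounts:
            - name: datadir                  # must match the claim template name below
              mountPath: /var/lib/zookeeper  # assumed data directory
  volumeClaimTemplates:
    - metadata:
        name: datadir            # yields claims datadir-zk-0, datadir-zk-1, ...
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
```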

Check this blog if you are curious about how Kubernetes provides load balancing for your applications through Kubernetes Services.