Last active July 16, 2019 12:10


Benchmark of GaussianProcessRegressor for https://github.com/scikit-learn/scikit-learn/pull/14378


| import time | |

| import numpy as np | |

| import matplotlib.pyplot as plt | |

| from sklearn.gaussian_process import kernels as sk_kern | |

| from sklearn.gaussian_process import GaussianProcessRegressor | |

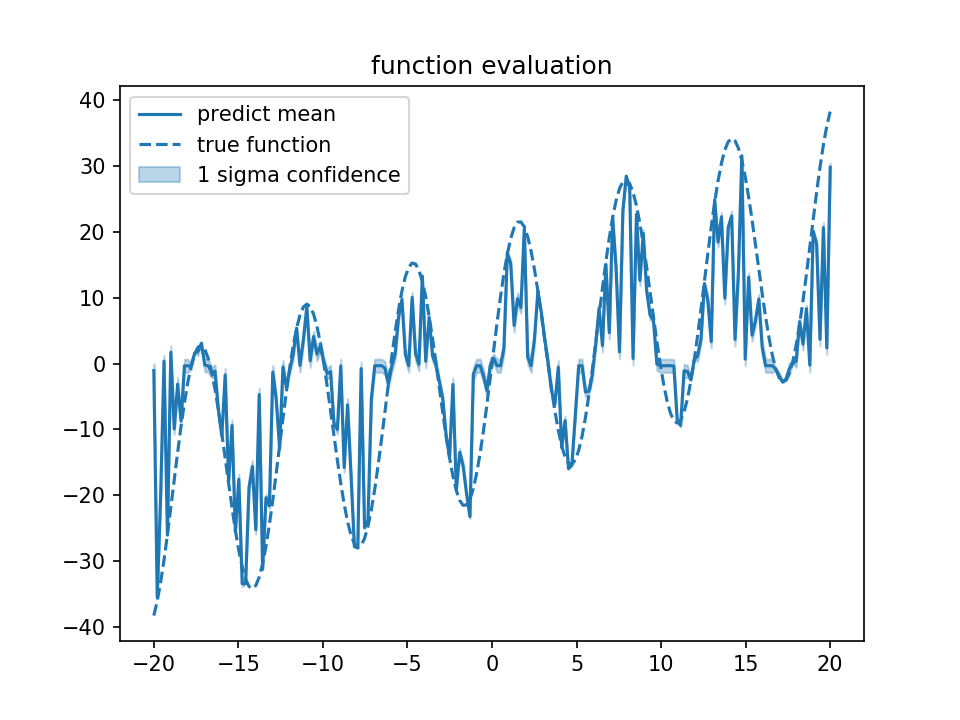

| def objective(x): | |

| return x + 20 * np.sin(x) | |

| def plot_result(x_test, mean, std): | |

| plt.plot(x_test[:, 0], mean, color="C0", label="predict mean") | |

| plt.fill_between(x_test[:, 0], mean + std, mean - std, color="C0", alpha=.3, label="1 sigma confidence") | |

| xx = np.linspace(-20, 20, 200) | |

| plt.plot(xx, objective(xx), "--", color="C0", label="true function") | |

| plt.title("function evaluation") | |

| plt.legend() | |

| plt.savefig("gpr_predict.png", dpi=150) | |

| def main(): | |

| kernel = sk_kern.RBF(1.0, (1e-3, 1e3)) + sk_kern.ConstantKernel(1.0, (1e-3, 1e3)) | |

| clf = GaussianProcessRegressor( | |

| kernel=kernel, | |

| alpha=1e-10, | |

| optimizer="fmin_l_bfgs_b", | |

| n_restarts_optimizer=20, | |

| normalize_y=True) | |

| np.random.seed(0) | |

| x_train = np.random.uniform(-20, 20, 200) | |

| y_train = objective(x_train) + np.random.normal(loc=0, scale=.1, size=x_train.shape) | |

| times = [] | |

| for i in range(10): | |

| start = time.time() | |

| clf.fit(x_train.reshape(-1, 1), y_train) | |

| elapsed = time.time() - start | |

| print(f"elapsed: {elapsed:.3f}s") | |

| times.append(elapsed) | |

| print("score:", np.array(times).mean(), np.array(times).std()) | |

| x_test = np.linspace(-20., 20., 200).reshape(-1, 1) | |

| pred_mean, pred_std = clf.predict(x_test, return_std=True) | |

| plot_result(x_test, pred_mean, pred_std) | |

| if __name__ == '__main__': | |

| main() |


Patch: gpr.py L420

Before vs. after: almost 26.4% faster
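A figure like "26.4% faster" can mean either a reduction in elapsed time or an increase in speed, and the two differ. The following is a minimal sketch of how both could be computed from two lists of per-fit timings like the `times` list the benchmark collects. The helper name `compare_timings` and the sample numbers are hypothetical; they are not the measurements behind the figure above, and the comment does not say which definition it uses.

```python
import statistics


def compare_timings(before, after):
    """Return (time_reduction_pct, speedup_factor) for two lists of elapsed seconds."""
    mean_before = statistics.mean(before)
    mean_after = statistics.mean(after)
    # Share of the original runtime that was saved.
    time_reduction_pct = (mean_before - mean_after) / mean_before * 100.0
    # How many times faster the patched run is.
    speedup_factor = mean_before / mean_after
    return time_reduction_pct, speedup_factor


# Hypothetical timings in seconds, for illustration only.
before = [2.0, 2.1, 1.9]
after = [1.5, 1.6, 1.4]

reduction, speedup = compare_timings(before, after)
print(f"time reduced by {reduction:.1f}%, speedup {speedup:.2f}x")
```

Reporting the mean together with the standard deviation, as the benchmark script already does, helps show whether a difference of this size exceeds run-to-run noise.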