Last active

August 9, 2023 01:41

NLP Concepts with spaCy. Code examples released under CC0 https://creativecommons.org/choose/zero/, other text released under CC BY 4.0 https://creativecommons.org/licenses/by/4.0/

| { | |

| "cells": [ | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "# NLP concepts with spaCy\n", | |

| "\n", | |

| "“Natural Language Processing” is a field at the intersection of computer science, linguistics and artificial intelligence which aims to make the underlying structure of language available to computer programs for analysis and manipulation. It’s a vast and vibrant field with a long history! New research and techniques are being developed constantly.\n", | |

| "\n", | |

| "The aim of this notebook is to introduce a few simple concepts and techniques from NLP—just the stuff that’ll help you do creative things quickly, and maybe open the door for you to understand more sophisticated NLP concepts that you might encounter elsewhere.\n", | |

| "\n", | |

| "We'll be using a library called [spaCy](https://spacy.io/), which strikes a good balance between being powerful and state-of-the-art and being easy for newcomers to understand.\n", | |

| "\n", | |

| "(Traditionally, most NLP work in Python was done with a library called [NLTK](http://www.nltk.org/). NLTK is a fantastic library, but it’s also a writhing behemoth: large and slippery and difficult to understand. Also, much of the code in NLTK is decades out of date with contemporary practices in NLP.)\n", | |

| "\n", | |

| "This tutorial is written in Python 2.7, but the concepts should translate easily to later versions.\n", | |

| "\n", | |

| "## Natural language\n", | |

| "\n", | |

| "“Natural language” is a loaded phrase: what makes one stretch of language “natural” while another stretch is not? NLP techniques are opinionated about what language is and how it works; as a consequence, you’ll sometimes find yourself having to conceptualize your text with uncomfortable abstractions in order to make it work with NLP. (This is especially true of poetry, which almost by definition breaks most “conventional” definitions of how language behaves and how it’s structured.)\n", | |

| "\n", | |

| "Of course, a computer can never really fully “understand” human language. Even when the text you’re using fits the abstractions of NLP perfectly, the results of NLP analysis are always going to be at least a little bit inaccurate. But often even inaccurate results can be “good enough”—and in any case, inaccurate output from NLP procedures can be an excellent source of the sublime and absurd juxtapositions that we (as poets) are constantly in search of.\n", | |

| "\n", | |

| "## English only (sorta)\n", | |

| "\n", | |

| "(Image: The English Speakers Only Club)\n", | |

| "\n", | |

| "The main assumption that most NLP libraries and techniques make is that the text you want to process will be in English. Historically, most NLP research has been on English specifically; it’s only more recently that serious work has gone into applying these techniques to other languages. The examples in this chapter are all based on English texts, and the tools we’ll use are geared toward English. If you’re interested in working on NLP in other languages, here are a few starting points:\n", | |

| "* [Konlpy](https://github.com/konlpy/konlpy), natural language processing in\n", | |

| " Python for Korean\n", | |

| "* [Jieba](https://github.com/fxsjy/jieba), text segmentation and POS tagging in\n", | |

| " Python for Chinese\n", | |

| "* The [Pattern](http://www.clips.ua.ac.be/pattern) library (like TextBlob, a\n", | |

| " simplified/augmented interface to NLTK) includes POS-tagging and some\n", | |

| " morphology for Spanish in its\n", | |

| " [pattern.es](http://www.clips.ua.ac.be/pages/pattern-es) package.\n", | |

| "\n", | |

| "## English grammar: a crash course\n", | |

| "\n", | |

| "The only thing I believe about English grammar is [this](http://www.writing.upenn.edu/~afilreis/88v/creeley-on-sentence.html):\n", | |

| "\n", | |

| "> \"Oh yes, the sentence,\" Creeley once told the critic Burton Hatlen, \"that's\n", | |

| "> what we call it when we put someone in jail.\"\n", | |

| "\n", | |

| "There is no such thing as a sentence, or a phrase, or a part of speech, or even\n", | |

| "a \"word\"---these are all pareidolic fantasies occasioned by glints of sunlight\n", | |

| "we see reflected on the surface of the ocean of language; fantasies that we\n", | |

| "comfort ourselves with when faced with language's infinite and unknowable\n", | |

| "variability.\n", | |

| "\n", | |

| "Regardless, we may find it occasionally helpful to think about language using\n", | |

| "these abstractions. The following is a gross oversimplification of both how\n", | |

| "English grammar works, and how theories of English grammar work in the context\n", | |

| "of NLP. But it should be enough to get us going!\n", | |

| "\n", | |

| "### Sentences and parts of speech\n", | |

| "\n", | |

| "English texts can roughly be divided into \"sentences.\" Sentences are themselves\n", | |

| "composed of individual words, each of which has a function in expressing the\n", | |

| "meaning of the sentence. The function of a word in a sentence is called its\n", | |

| "\"part of speech\"---i.e., a word functions as a noun, a verb, an adjective, etc.\n", | |

| "Here's a sentence, with words marked for their part of speech:\n", | |

| "\n", | |

| "    I        really  love  entrees        from         the         new        cafeteria.\n", | |

| "    pronoun  adverb  verb  noun (plural)  preposition  determiner  adjective  noun\n", | |

| "\n", | |

| "Of course, the \"part of speech\" of a word isn't a property of the word itself.\n", | |

| "We know this because a single \"word\" can function as two different parts of speech:\n", | |

| "\n", | |

| "> I love cheese.\n", | |

| "\n", | |

| "The word \"love\" here is a verb. But here:\n", | |

| "\n", | |

| "> Love is a battlefield.\n", | |

| "\n", | |

| "... it's a noun. For this reason (and others), it's difficult for computers to\n", | |

| "accurately determine the part of speech for a word in a sentence. (It's\n", | |

| "difficult sometimes even for humans to do this.) But NLP procedures do their\n", | |

| "best!\n", | |

| "\n", | |

| "### Phrases and larger syntactic structures\n", | |

| "\n", | |

| "There are several different ways of talking about larger syntactic structures in sentences. The scheme used by spaCy is called a \"dependency grammar.\" We'll talk about the details of this below.\n" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Installing spaCy\n", | |

| "\n", | |

| "[Follow the instructions here](https://spacy.io/docs/usage/). When using `pip`, make sure to upgrade to the newest version first, with `pip install --upgrade pip`. (This will ensure that at least *some* of the dependencies are installed as pre-built binaries.)\n", | |

| "\n", | |

| "    pip install spacy\n", | |

| " \n", | |

| "(If you're not using a virtual environment, try `sudo pip install spacy`.)\n", | |

| "\n", | |

| "Currently, spaCy is distributed in source form only, so the installation process involves a bit of compiling. On macOS, you'll need to install [Xcode](https://developer.apple.com/xcode/) in order to perform the compilation steps. [Here's a good tutorial for macOS Sierra](http://railsapps.github.io/xcode-command-line-tools.html), though the steps should be similar on other versions.\n", | |

| "\n", | |

| "After you've installed spaCy, you'll need to download the data. Run the following on the command line:\n", | |

| "\n", | |

| "    python -m spacy download en" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Basic usage\n", | |

| "\n", | |

| "Import `spacy` like any other Python module. The `spaCy` code expects all strings to be unicode strings, so make sure you've included `from __future__ import unicode_literals` at the top of your Python 2.7 code—it'll make your life easier, trust me." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 170, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "from __future__ import unicode_literals\n", | |

| "import spacy" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Create a new spaCy object using `spacy.load('en')` (assuming you want to work with English; spaCy supports other languages as well)." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 171, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "nlp = spacy.load('en')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "And then create a `Doc` (document) object by calling the spaCy object with the text you want to work with. Below I've included a few sentences from the Universal Declaration of Human Rights:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 204, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "doc = nlp(\"All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood. Everyone has the right to life, liberty and security of person.\")" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Sentences\n", | |

| "\n", | |

| "If you learn nothing else about spaCy (or NLP), then learn at least that it's a good way to get a list of sentences in a text. Once you've created a document object, you can iterate over the sentences it contains using the `.sents` attribute:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 172, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "All human beings are born free and equal in dignity and rights.\n", | |

| "They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood.\n", | |

| "Everyone has the right to life, liberty and security of person.\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for item in doc.sents:\n", | |

| "    print item.text" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "The `.sents` attribute is a generator, so you can't index or count it directly. To do this, you'll need to convert it to a list first using the `list()` function:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 110, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "sentences_as_list = list(doc.sents)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 111, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "3" | |

| ] | |

| }, | |

| "execution_count": 111, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "len(sentences_as_list)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 112, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood." | |

| ] | |

| }, | |

| "execution_count": 112, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "import random\n", | |

| "random.choice(sentences_as_list)" | |

| ] | |

| }, | |
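The generator behavior described above is ordinary Python, so you can see it without spaCy at all. Here's a minimal sketch, assuming a made-up `fake_sents()` generator function as a stand-in for `doc.sents` (it isn't part of spaCy, and neither is the `try_len()` helper). It's written to run under both Python 2.7 and Python 3.

```python
import random

def fake_sents():
    # Hypothetical stand-in for doc.sents: yields sentences one at a time.
    yield "All human beings are born free and equal in dignity and rights."
    yield "They are endowed with reason and conscience."
    yield "Everyone has the right to life, liberty and security of person."

def try_len(obj):
    # Return len(obj), or None if the object has no length (as with generators).
    try:
        return len(obj)
    except TypeError:
        return None

gen = fake_sents()
length_of_generator = try_len(gen)    # generators can't be counted directly

sentences_as_list = list(gen)         # consuming the generator builds a real list
length_of_list = try_len(sentences_as_list)
second_sentence = sentences_as_list[1]
random_sentence = random.choice(sentences_as_list)

print(length_of_generator)
print(length_of_list)
print(second_sentence)
```

Once the sentences are in a list, indexing, `len()` and `random.choice()` all work the way you'd expect.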

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Words\n", | |

| "\n", | |

| "Iterating over a document yields each word in the document in turn. Words are represented with spaCy [Token](https://spacy.io/docs/api/token) objects, which have several interesting attributes. The `.text` attribute gives the underlying text of the word, and the `.lemma_` attribute gives the word's \"lemma\" (explained below):" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 173, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "All all\n", | |

| "human human\n", | |

| "beings being\n", | |

| "are be\n", | |

| "born bear\n", | |

| "free free\n", | |

| "and and\n", | |

| "equal equal\n", | |

| "in in\n", | |

| "dignity dignity\n", | |

| "and and\n", | |

| "rights right\n", | |

| ". .\n", | |

| "They -PRON-\n", | |

| "are be\n", | |

| "endowed endow\n", | |

| "with with\n", | |

| "reason reason\n", | |

| "and and\n", | |

| "conscience conscience\n", | |

| "and and\n", | |

| "should should\n", | |

| "act act\n", | |

| "towards towards\n", | |

| "one one\n", | |

| "another another\n", | |

| "in in\n", | |

| "a a\n", | |

| "spirit spirit\n", | |

| "of of\n", | |

| "brotherhood brotherhood\n", | |

| ". .\n", | |

| "Everyone everyone\n", | |

| "has have\n", | |

| "the the\n", | |

| "right right\n", | |

| "to to\n", | |

| "life life\n", | |

| ", ,\n", | |

| "liberty liberty\n", | |

| "and and\n", | |

| "security security\n", | |

| "of of\n", | |

| "person person\n", | |

| ". .\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for word in doc:\n", | |

| "    print word.text, word.lemma_" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "A word's \"lemma\" is its most \"basic\" form, the form without any morphology\n", | |

| "applied to it. \"Sing,\" \"sang,\" and \"singing\" are all different \"forms\" of the\n", | |

| "lemma *sing*. Likewise, \"octopi\" is the plural of \"octopus\"; the \"lemma\" of\n", | |

| "\"octopi\" is *octopus*.\n", | |

| "\n", | |

| "\"Lemmatizing\" a text is the process of going through the text and replacing\n", | |

| "each word with its lemma. This is often done in an attempt to reduce a text\n", | |

| "to its most \"essential\" meaning, by eliminating pesky things like verb tense\n", | |

| "and noun number.\n", | |

| "\n", | |

| "Individual sentences can also be iterated over to get a list of words:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 114, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "They\n", | |

| "are\n", | |

| "endowed\n", | |

| "with\n", | |

| "reason\n", | |

| "and\n", | |

| "conscience\n", | |

| "and\n", | |

| "should\n", | |

| "act\n", | |

| "towards\n", | |

| "one\n", | |

| "another\n", | |

| "in\n", | |

| "a\n", | |

| "spirit\n", | |

| "of\n", | |

| "brotherhood\n", | |

| ".\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "sentence = list(doc.sents)[1]\n", | |

| "for word in sentence:\n", | |

| "    print word.text" | |

| ] | |

| }, | |
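To make the idea of lemmatizing from earlier in this section concrete, here's a toy sketch that replaces each word with its lemma using a tiny hand-written lookup table. The `TOY_LEMMAS` table and the `toy_lemmatize()` function are made up for illustration; spaCy's real lemmatizer covers vastly more words, and I'm not claiming this is how it works internally.

```python
# Hand-written table mapping inflected forms to lemmas (illustration only).
TOY_LEMMAS = {
    "sang": "sing",
    "singing": "sing",
    "sings": "sing",
    "octopi": "octopus",
    "beings": "being",
    "are": "be",
    "born": "bear",
    "rights": "right",
}

def toy_lemmatize(words):
    # Look each lowercased word up in the table; unknown words are kept as-is.
    return [TOY_LEMMAS.get(w.lower(), w.lower()) for w in words]

lemmas = toy_lemmatize(["All", "human", "beings", "are", "born", "free"])
print(" ".join(lemmas))
```

Notice how much gets thrown away: tense, number and capitalization are all gone, which is exactly the point of lemmatizing (and exactly why the results can read so strangely).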

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Parts of speech\n", | |

| "\n", | |

| "The `pos_` attribute gives a general part of speech; the `tag_` attribute gives a more specific designation. [List of meanings here.](https://spacy.io/docs/api/annotation)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 115, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "All DET DT\n", | |

| "human ADJ JJ\n", | |

| "beings NOUN NNS\n", | |

| "are VERB VBP\n", | |

| "born VERB VBN\n", | |

| "free ADJ JJ\n", | |

| "and CCONJ CC\n", | |

| "equal ADJ JJ\n", | |

| "in ADP IN\n", | |

| "dignity NOUN NN\n", | |

| "and CCONJ CC\n", | |

| "rights NOUN NNS\n", | |

| ". PUNCT .\n", | |

| "They PRON PRP\n", | |

| "are VERB VBP\n", | |

| "endowed VERB VBN\n", | |

| "with ADP IN\n", | |

| "reason NOUN NN\n", | |

| "and CCONJ CC\n", | |

| "conscience NOUN NN\n", | |

| "and CCONJ CC\n", | |

| "should VERB MD\n", | |

| "act VERB VB\n", | |

| "towards ADP IN\n", | |

| "one NUM CD\n", | |

| "another DET DT\n", | |

| "in ADP IN\n", | |

| "a DET DT\n", | |

| "spirit NOUN NN\n", | |

| "of ADP IN\n", | |

| "brotherhood NOUN NN\n", | |

| ". PUNCT .\n", | |

| "Everyone NOUN NN\n", | |

| "has VERB VBZ\n", | |

| "the DET DT\n", | |

| "right NOUN NN\n", | |

| "to ADP IN\n", | |

| "life NOUN NN\n", | |

| ", PUNCT ,\n", | |

| "liberty NOUN NN\n", | |

| "and CCONJ CC\n", | |

| "security NOUN NN\n", | |

| "of ADP IN\n", | |

| "person NOUN NN\n", | |

| ". PUNCT .\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for item in doc:\n", | |

| "    print item.text, item.pos_, item.tag_" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Extracting words by part of speech\n", | |

| "\n", | |

| "With knowledge of which part of speech each word belongs to, we can make simple code to extract and recombine words by their part of speech. The following code creates a list of all nouns and adjectives in the text:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 175, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "nouns = []\n", | |

| "adjectives = []\n", | |

| "for item in doc:\n", | |

| "    if item.pos_ == 'NOUN':\n", | |

| "        nouns.append(item.text)\n", | |

| "for item in doc:\n", | |

| "    if item.pos_ == 'ADJ':\n", | |

| "        adjectives.append(item.text)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "And below, some code to print out random pairings of an adjective from the text with a noun from the text:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 177, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "equal Everyone\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "print random.choice(adjectives) + \" \" + random.choice(nouns)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Making a list of verbs works similarly:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 178, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "verbs = []\n", | |

| "for item in doc:\n", | |

| "    if item.pos_ == 'VERB':\n", | |

| "        verbs.append(item.text)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Although in this case, you'll notice the list of verbs is a bit unintuitive. We're getting words like \"should\" and \"are\" and \"has\"—helper verbs that maybe don't fit our idea of what verbs we want to extract." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 179, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "[u'are', u'born', u'are', u'endowed', u'should', u'act', u'has']" | |

| ] | |

| }, | |

| "execution_count": 179, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "verbs" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "This is because we used the `.pos_` attribute, which only gives us general information about the part of speech. The `.tag_` attribute allows us to be more specific about the kinds of verbs we want. For example, this code gives us only the verbs in past participle form:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 180, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "only_past = []\n", | |

| "for item in doc:\n", | |

| "    if item.tag_ == 'VBN':\n", | |

| "        only_past.append(item.text)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 181, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "[u'born', u'endowed']" | |

| ] | |

| }, | |

| "execution_count": 181, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "only_past" | |

| ] | |

| }, | |
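The filtering loops above all follow the same pattern, so they can be folded into one small helper. This is only a sketch: the `TAGGED` list is copied by hand from the part-of-speech output printed earlier for the first sentence (so the code runs without spaCy), and `words_with()` is a hypothetical helper name, not a spaCy function.

```python
import random

# (text, pos, tag) triples copied by hand from the output printed earlier
# for the first sentence; with spaCy you'd read these off each token instead.
TAGGED = [
    ("All", "DET", "DT"), ("human", "ADJ", "JJ"), ("beings", "NOUN", "NNS"),
    ("are", "VERB", "VBP"), ("born", "VERB", "VBN"), ("free", "ADJ", "JJ"),
    ("and", "CCONJ", "CC"), ("equal", "ADJ", "JJ"), ("in", "ADP", "IN"),
    ("dignity", "NOUN", "NN"), ("and", "CCONJ", "CC"),
    ("rights", "NOUN", "NNS"), (".", "PUNCT", "."),
]

def words_with(tagged, pos=None, tag=None):
    # Keep words whose coarse part of speech and/or fine-grained tag match.
    return [text for text, p, t in tagged
            if (pos is None or p == pos) and (tag is None or t == tag)]

nouns = words_with(TAGGED, pos="NOUN")
adjectives = words_with(TAGGED, pos="ADJ")
all_verbs = words_with(TAGGED, pos="VERB")
past_participles = words_with(TAGGED, tag="VBN")

# A random adjective-noun pairing, as in the earlier cell.
print(random.choice(adjectives) + " " + random.choice(nouns))
```

Filtering on `pos` casts a wide net; filtering on `tag` is how you narrow it down to, say, only past participles.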

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Larger syntactic units\n", | |

| "\n", | |

| "Okay, so we can get individual words by their part of speech. Great! But what if we want larger chunks, based on their syntactic role in the sentence? The easy way is `.noun_chunks`, which is an attribute of a document or a sentence that yields [spans](https://spacy.io/docs/api/span) of noun phrases, regardless of their position in the document:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 183, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "All human beings\n", | |

| "dignity\n", | |

| "rights\n", | |

| "They\n", | |

| "reason\n", | |

| "conscience\n", | |

| "a spirit\n", | |

| "brotherhood\n", | |

| "Everyone\n", | |

| "life\n", | |

| "the right to life, liberty\n", | |

| "security\n", | |

| "person\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for item in doc.noun_chunks:\n", | |

| "    print item.text" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "For anything more sophisticated than this, though, we'll need to learn about how spaCy parses sentences into its syntactic components." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Understanding dependency grammars" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "\n", | |

| "\n", | |

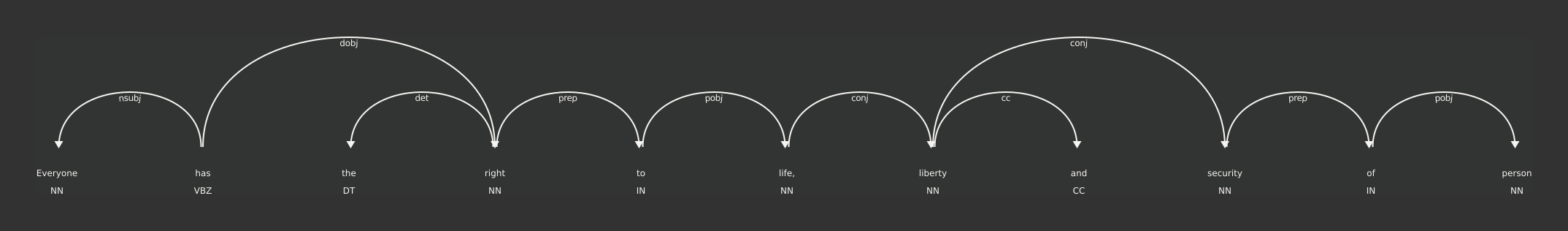

| "[See in \"displaCy\", spaCy's syntax visualization tool.](https://demos.explosion.ai/displacy/?text=Everyone%20has%20the%20right%20to%20life%2C%20liberty%20and%20security%20of%20person&model=en&cpu=1&cph=0)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "The spaCy library parses the underlying sentences using a [dependency grammar](https://en.wikipedia.org/wiki/Dependency_grammar). Dependency grammars look different from the kinds of sentence diagramming you may have done in high school, and even from tree-based [phrase structure grammars](https://en.wikipedia.org/wiki/Phrase_structure_grammar) commonly used in descriptive linguistics. The idea of a dependency grammar is that every word in a sentence is a \"dependent\" of some other word, which is that word's \"head.\" Those \"head\" words are in turn dependents of other words. The finite verb in the sentence is the ultimate \"head\" of the sentence, and is not itself dependent on any other word. (The dependents of a particular head are sometimes called its \"children.\")\n", | |

| "\n", | |

| "The question of how to know what constitutes a \"head\" and a \"dependent\" is complicated. As a starting point, here's a passage from [Dependency Grammar and Dependency Parsing](http://stp.lingfil.uu.se/~nivre/docs/05133.pdf):\n", | |

| "\n", | |

| "> Here are some of the criteria that have been proposed for identifying a syntactic relation between a head H and a dependent D in a construction C (Zwicky, 1985; Hudson, 1990):\n", | |

| ">\n", | |

| "> 1. H determines the syntactic category of C and can often replace C.\n", | |

| "> 2. H determines the semantic category of C; D gives semantic specification.\n", | |

| "> 3. H is obligatory; D may be optional.\n", | |

| "> 4. H selects D and determines whether D is obligatory or optional.\n", | |

| "> 5. The form of D depends on H (agreement or government).\n", | |

| "> 6. The linear position of D is specified with reference to H.\n", | |

| "\n", | |

| "There are different *types* of relationships between heads and dependents, and each type of relation has its own name. Use the displaCy visualizer (linked above) to see how a particular sentence is parsed, and what the relations between the heads and dependents are. (I've listed a few common relations below.)\n", | |

| "\n", | |

| "Every token object in a spaCy document or sentence has attributes that tell you what the word's head is, what the dependency relationship is between that word and its head, and a list of that word's children (dependents). The following code prints out each word in the sentence, the tag, the word's head, the word's dependency relation with its head, and the word's children (i.e., dependent words):" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 148, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "Word: Everyone\n", | |

| "Tag: NN\n", | |

| "Head: has\n", | |

| "Dependency relation: nsubj\n", | |

| "Children: []\n", | |

| "Subtree: [Everyone]\n", | |

| "\n", | |

| "Word: has\n", | |

| "Tag: VBZ\n", | |

| "Head: has\n", | |

| "Dependency relation: ROOT\n", | |

| "Children: [Everyone, liberty, .]\n", | |

| "Subtree: [Everyone, has, the, right, to, life, ,, liberty, and, security, of, person, .]\n", | |

| "\n", | |

| "Word: the\n", | |

| "Tag: DT\n", | |

| "Head: right\n", | |

| "Dependency relation: det\n", | |

| "Children: []\n", | |

| "Subtree: [the]\n", | |

| "\n", | |

| "Word: right\n", | |

| "Tag: NN\n", | |

| "Head: liberty\n", | |

| "Dependency relation: nmod\n", | |

| "Children: [the, to]\n", | |

| "Subtree: [the, right, to, life, ,]\n", | |

| "\n", | |

| "Word: to\n", | |

| "Tag: IN\n", | |

| "Head: right\n", | |

| "Dependency relation: prep\n", | |

| "Children: [life]\n", | |

| "Subtree: [to, life, ,]\n", | |

| "\n", | |

| "Word: life\n", | |

| "Tag: NN\n", | |

| "Head: to\n", | |

| "Dependency relation: pobj\n", | |

| "Children: [,]\n", | |

| "Subtree: [life, ,]\n", | |

| "\n", | |

| "Word: ,\n", | |

| "Tag: ,\n", | |

| "Head: life\n", | |

| "Dependency relation: punct\n", | |

| "Children: []\n", | |

| "Subtree: [,]\n", | |

| "\n", | |

| "Word: liberty\n", | |

| "Tag: NN\n", | |

| "Head: has\n", | |

| "Dependency relation: dobj\n", | |

| "Children: [right, and, security, of]\n", | |

| "Subtree: [the, right, to, life, ,, liberty, and, security, of, person]\n", | |

| "\n", | |

| "Word: and\n", | |

| "Tag: CC\n", | |

| "Head: liberty\n", | |

| "Dependency relation: cc\n", | |

| "Children: []\n", | |

| "Subtree: [and]\n", | |

| "\n", | |

| "Word: security\n", | |

| "Tag: NN\n", | |

| "Head: liberty\n", | |

| "Dependency relation: conj\n", | |

| "Children: []\n", | |

| "Subtree: [security]\n", | |

| "\n", | |

| "Word: of\n", | |

| "Tag: IN\n", | |

| "Head: liberty\n", | |

| "Dependency relation: prep\n", | |

| "Children: [person]\n", | |

| "Subtree: [of, person]\n", | |

| "\n", | |

| "Word: person\n", | |

| "Tag: NN\n", | |

| "Head: of\n", | |

| "Dependency relation: pobj\n", | |

| "Children: []\n", | |

| "Subtree: [person]\n", | |

| "\n", | |

| "Word: .\n", | |

| "Tag: .\n", | |

| "Head: has\n", | |

| "Dependency relation: punct\n", | |

| "Children: []\n", | |

| "Subtree: [.]\n", | |

| "\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for word in list(doc.sents)[2]:\n", | |

| "    print \"Word:\", word.text\n", | |

| "    print \"Tag:\", word.tag_\n", | |

| "    print \"Head:\", word.head.text\n", | |

| "    print \"Dependency relation:\", word.dep_\n", | |

| "    print \"Children:\", list(word.children)\n", | |

| "    print \"Subtree:\", list(word.subtree)\n", | |

| "    print \"\"" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a list of a few dependency relations and what they mean. ([A more complete list can be found here.](http://www.mathcs.emory.edu/~choi/doc/clear-dependency-2012.pdf))\n", | |

| "\n", | |

| "* `nsubj`: this word's head is a verb, and this word is itself the subject of the verb\n", | |

| "* `nsubjpass`: same as above, but for subjects in sentences in the passive voice\n", | |

| "* `dobj`: this word's head is a verb, and this word is itself the direct object of the verb\n", | |

| "* `iobj`: same as above, but indirect object\n", | |

| "* `aux`: this word's head is a verb, and this word is an \"auxiliary\" verb (like \"have\", \"will\", \"be\")\n", | |

| "* `attr`: this word's head is a copula (like \"to be\"), and this is the description attributed to the subject of the sentence (e.g., in \"This product is a global brand\", `brand` is dependent on `is` with the `attr` dependency relation)\n", | |

| "* `det`: this word's head is a noun, and this word is a determiner of that noun (like \"the,\" \"this,\" etc.)\n", | |

| "* `amod`: this word's head is a noun, and this word is an adjective describing that noun\n", | |

| "* `prep`: this word is a preposition that modifies its head\n", | |

| "* `pobj`: this word is a dependent (object) of a preposition" | |

| ] | |

| }, | |
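The head and children relationships in the parse printed above can be reproduced with nothing but a list. Here's a rough sketch: the `PARSE` table is copied by hand from that output (each entry is the word, the index of its head, and the dependency relation), and `find_root()` and `children_of()` are hypothetical helper names, not spaCy functions.

```python
# (text, index of head, dependency relation) for each token, copied by hand
# from the parse printed above. The root points at itself, as in that output.
PARSE = [
    ("Everyone", 1, "nsubj"),
    ("has", 1, "ROOT"),
    ("the", 3, "det"),
    ("right", 7, "nmod"),
    ("to", 3, "prep"),
    ("life", 4, "pobj"),
    (",", 5, "punct"),
    ("liberty", 1, "dobj"),
    ("and", 7, "cc"),
    ("security", 7, "conj"),
    ("of", 7, "prep"),
    ("person", 10, "pobj"),
    (".", 1, "punct"),
]

def find_root(parse):
    # The root is the one token that is its own head.
    for i, (text, head, dep) in enumerate(parse):
        if head == i:
            return i

def children_of(parse, i):
    # A token's children are all the other tokens that name it as their head.
    return [j for j, (text, head, dep) in enumerate(parse)
            if head == i and j != i]

root = find_root(PARSE)
root_children = [PARSE[j][0] for j in children_of(PARSE, root)]
liberty_children = [PARSE[j][0] for j in children_of(PARSE, 7)]

print(PARSE[root][0])
print(root_children)
print(liberty_children)
```

Storing only each word's head is enough: the children lists fall out of it by looking the relationship up in the other direction.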

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Using .subtree for extracting syntactic units\n", | |

| "\n", | |

| "The `.subtree` attribute evaluates to a generator, which you can turn into a list by passing it to `list()`. The result holds the word itself along with all of its syntactic dependents, direct and indirect: essentially, the \"clause\" that the word heads." | |

| ] | |

| }, | |
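If it helps to see what a subtree is made of, here's a small sketch that computes one by hand. The `WORDS` and `HEADS` lists are copied from the parse of the third sentence printed earlier (each entry in `HEADS` is the index of that word's head), and the `subtree()` function is my own illustration of the idea, not spaCy's implementation.

```python
WORDS = ["Everyone", "has", "the", "right", "to", "life", ",",
         "liberty", "and", "security", "of", "person", "."]
# Index of each word's head; the root ("has") points at itself.
HEADS = [1, 1, 3, 7, 3, 4, 5, 1, 7, 7, 7, 10, 1]

def subtree(i):
    # Collect the token itself plus everything that depends on it, directly
    # or indirectly, then sort so the result is in sentence order.
    found = [i]
    for j, head in enumerate(HEADS):
        if head == i and j != i:
            found.extend(subtree(j))
    return sorted(found)

right_subtree = [WORDS[j] for j in subtree(3)]
of_subtree = [WORDS[j] for j in subtree(10)]

print(right_subtree)
print(of_subtree)
```

The subtree of the root is the whole sentence, and the subtree of a word with no dependents is just the word itself.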

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "This function merges a subtree and returns a string with the text of the words contained in it:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 184, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "def flatten_subtree(st):\n", | |

| "    return ''.join([w.text_with_ws for w in list(st)]).strip()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "With this function in our toolbox, we can write a loop that prints out the subtree for each word in a sentence:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 163, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "Word: Everyone\n", | |

| "Flattened subtree: Everyone\n", | |

| "\n", | |

| "Word: has\n", | |

| "Flattened subtree: Everyone has the right to life, liberty and security of person.\n", | |

| "\n", | |

| "Word: the\n", | |

| "Flattened subtree: the\n", | |

| "\n", | |

| "Word: right\n", | |

| "Flattened subtree: the right to life,\n", | |

| "\n", | |

| "Word: to\n", | |

| "Flattened subtree: to life,\n", | |

| "\n", | |

| "Word: life\n", | |

| "Flattened subtree: life,\n", | |

| "\n", | |

| "Word: ,\n", | |

| "Flattened subtree: ,\n", | |

| "\n", | |

| "Word: liberty\n", | |

| "Flattened subtree: the right to life, liberty and security of person\n", | |

| "\n", | |

| "Word: and\n", | |

| "Flattened subtree: and\n", | |

| "\n", | |

| "Word: security\n", | |

| "Flattened subtree: security\n", | |

| "\n", | |

| "Word: of\n", | |

| "Flattened subtree: of person\n", | |

| "\n", | |

| "Word: person\n", | |

| "Flattened subtree: person\n", | |

| "\n", | |

| "Word: .\n", | |

| "Flattened subtree: .\n", | |

| "\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for word in list(doc.sents)[2]:\n", | |

| " print \"Word:\", word.text\n", | |

| " print \"Flattened subtree: \", flatten_subtree(word.subtree)\n", | |

| " print \"\"" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Using the subtree and our knowledge of dependency relation types, we can write code that extracts larger syntactic units based on their relationship with the rest of the sentence. For example, to get all of the noun phrases that are subjects of a verb:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 164, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "subjects = []\n", | |

| "for word in doc:\n", | |

| " if word.dep_ in ('nsubj', 'nsubjpass'):\n", | |

| " subjects.append(flatten_subtree(word.subtree))" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 166, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "[u'All human beings', u'They', u'Everyone']" | |

| ] | |

| }, | |

| "execution_count": 166, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "subjects" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Or every prepositional phrase:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 168, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "prep_phrases = []\n", | |

| "for word in doc:\n", | |

| " if word.dep_ == 'prep':\n", | |

| " prep_phrases.append(flatten_subtree(word.subtree))" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 185, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "[u'in dignity and rights',\n", | |

| " u'with reason and conscience',\n", | |

| " u'towards one another',\n", | |

| " u'in a spirit of brotherhood',\n", | |

| " u'of brotherhood',\n", | |

| " u'to life,',\n", | |

| " u'of person']" | |

| ] | |

| }, | |

| "execution_count": 185, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "prep_phrases" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Entity extraction\n", | |

| "\n", | |

| "A common task in NLP is taking a text and extracting \"named entities\" from it—basically, proper nouns, or names of companies, products, locations, etc. You can easily access this information using the `.ents` property of a document." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 190, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "doc2 = nlp(\"John McCain and I visited the Apple Store in Manhattan.\")" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 192, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "John McCain\n", | |

| "the Apple Store\n", | |

| "Manhattan\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for item in doc2.ents:\n", | |

| " print item" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Entity objects have a `.label_` attribute that tells you the type of the entity. ([Here's a full list of the built-in entity types.](https://spacy.io/docs/usage/entity-recognition#entity-types))" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 208, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "John McCain PERSON\n", | |

| "the Apple Store ORG\n", | |

| "Manhattan GPE\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for item in doc2.ents:\n", | |

| " print item.text, item.label_" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "[More on spaCy entity recognition.](https://spacy.io/docs/usage/entity-recognition)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Loading data from a file\n", | |

| "\n", | |

| "You can load data from a file easily with spaCy. You just have to make sure that the text is a Unicode string (Python 2's `unicode` type) rather than a byte string (`str`). An easy way to do this is to call `.decode('utf8')` on the string after you've read it from the file:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 210, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "doc3 = nlp(open(\"genesis.txt\").read().decode('utf8'))" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "From here, we can see what entities were here with us from the very beginning:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 209, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "earth LOC\n", | |

| "the Spirit of God ORG\n", | |

| "Day PERSON\n", | |

| "Night TIME\n", | |

| "first ORDINAL\n", | |

| "second ORDINAL\n", | |

| "one CARDINAL\n", | |

| "Earth LOC\n", | |

| "morning TIME\n", | |

| "third ORDINAL\n", | |

| "night TIME\n", | |

| "seasons DATE\n", | |

| "two CARDINAL\n", | |

| "earth LOC\n", | |

| "the day DATE\n", | |

| "the night TIME\n", | |

| "\n", | |

| "FAC\n", | |

| "evening TIME\n", | |

| "morning TIME\n", | |

| "fourth ORDINAL\n", | |

| "moveth TIME\n", | |

| "earth LOC\n", | |

| "fifth ORDINAL\n", | |

| "earth LOC\n", | |

| "earth LOC\n", | |

| "sixth ORDINAL\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "for item in doc3.ents:\n", | |

| " print item.text, item.label_" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Further reading and resources\n", | |

| "\n", | |

| "[A few example programs can be found here.](https://github.com/aparrish/rwet-examples/tree/master/spacy)\n", | |

| "\n", | |

| "We've barely scratched the surface of what it's possible to do with spaCy. [There's a great page of tutorials on the official site](https://spacy.io/docs/usage/tutorials) that you should check out!" | |

| ] | |

| } | |

| ], | |

| "metadata": { | |

| "kernelspec": { | |

| "display_name": "Python 2", | |

| "language": "python", | |

| "name": "python2" | |

| }, | |

| "language_info": { | |

| "codemirror_mode": { | |

| "name": "ipython", | |

| "version": 2 | |

| }, | |

| "file_extension": ".py", | |

| "mimetype": "text/x-python", | |

| "name": "python", | |

| "nbconvert_exporter": "python", | |

| "pygments_lexer": "ipython2", | |

| "version": "2.7.6" | |

| } | |

| }, | |

| "nbformat": 4, | |

| "nbformat_minor": 2 | |

| } |
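
The `.subtree` traversal described in the notebook above can be tried without installing spaCy. The sketch below is only an illustration: `Node` is a hypothetical stand-in written for this example (it is not part of spaCy), and it assumes that a subtree lists a word's left dependents, then the word, then its right dependents, so that the words come out in sentence order. `flatten_subtree` is the function from the notebook, and the phrase is built by hand rather than produced by a parser.

```python
class Node(object):
    """Hypothetical stand-in for a spaCy token (not part of spaCy)."""

    def __init__(self, text, ws=" ", lefts=(), rights=()):
        self.text_with_ws = text + ws  # word plus its trailing whitespace
        self.lefts = list(lefts)       # dependents that precede the word
        self.rights = list(rights)     # dependents that follow the word

    @property
    def subtree(self):
        # Left dependents, then the word itself, then right dependents,
        # so the words are yielded in sentence order.
        for child in self.lefts:
            for node in child.subtree:
                yield node
        yield self
        for child in self.rights:
            for node in child.subtree:
                yield node


def flatten_subtree(st):
    # Same function as in the notebook.
    return ''.join([w.text_with_ws for w in list(st)]).strip()


# "in a spirit of brotherhood", wired up by hand for illustration.
brotherhood = Node("brotherhood", ws="")
of = Node("of", rights=[brotherhood])
a = Node("a")
spirit = Node("spirit", lefts=[a], rights=[of])
in_ = Node("in", rights=[spirit])

print(flatten_subtree(in_.subtree))  # in a spirit of brotherhood
print(flatten_subtree(of.subtree))   # of brotherhood
```

Because every node yields its own dependents recursively, asking for the subtree of a preposition returns the whole prepositional phrase, which is why the notebook's `prep` loop collects both the longer phrase and the shorter one nested inside it.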

Great tutorial, but why limit it to Python 2, especially in NLP?

It appears that you could add from __future__ import print_function and add the appropriate parentheses, and then this code would work in both Python 2 and 3.
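
A minimal sketch of that suggestion, covering the two Python 2-specific idioms in the last part of the notebook: the `print` statements and the `.decode('utf8')` call in the file-loading cell. The temporary file and the `sample.txt` name are assumptions made so the example is self-contained; the notebook reads `genesis.txt`. Using `io.open` is one option among several, chosen here because it behaves the same way on both versions.

```python
from __future__ import print_function  # no effect on Python 3

import io
import os
import tempfile

# Write a small UTF-8 file so the sketch can run on its own.
path = os.path.join(tempfile.mkdtemp(), "sample.txt")
with io.open(path, "w", encoding="utf8") as handle:
    handle.write(u"In the beginning \u2026")

# io.open decodes while reading, so no .decode('utf8') call is needed.
with io.open(path, encoding="utf8") as handle:
    text = handle.read()

# print is now a function on both versions.
print("Characters read:", len(text))
print("Type:", type(text).__name__)  # unicode on Python 2, str on Python 3
```

The decoded `text` could then be passed to `nlp()` just as the notebook does with the contents of `genesis.txt`.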

That was great, but I'd like to know how more nuanced software is built using spaCy. Are these all the fundamentals? I'd like to develop a context-free grammar for financial news reporting. How can I get there?

Great stuff..!

Awesome stuff! And spaCy today supports so many languages, even Portuguese! Back around 2015-2016 I couldn't find any Portuguese models. I'll test it.

Thanks for your contribution. This indeed will help some people to get into the most important concepts of NLP.