Created June 24, 2013 14:23

Gist fonnesbeck/5850413


| { | |

| "metadata": { | |

| "name": "2. Data Wrangling with Pandas" | |

| }, | |

| "nbformat": 3, | |

| "nbformat_minor": 0, | |

| "worksheets": [ | |

| { | |

| "cells": [ | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "# Data Wrangling with Pandas\n", | |

| "\n", | |

| "Now that we have been exposed to the basic functionality of Pandas, let's explore some more advanced features that will be useful when addressing more complex data management tasks.\n", | |

| "\n", | |

| "As most statisticians/data analysts will admit, often the lion's share of the time spent implementing an analysis is devoted to preparing the data itself, rather than to coding or running a particular model that uses the data. This is where Pandas and Python's standard library are beneficial, providing high-level, flexible, and efficient tools for manipulating your data as needed.\n" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "import pandas as pd\n", | |

| "import numpy as np\n", | |

| "\n", | |

| "# Set some Pandas options\n", | |

| "pd.set_option('display.notebook_repr_html', False)\n", | |

| "pd.set_option('display.max_columns', 20)\n", | |

| "pd.set_option('display.max_rows', 25)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 1 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Date/Time data handling\n", | |

| "\n", | |

| "Date and time data are inherently problematic. Months have different numbers of days, years have different numbers of days (due to leap years), and time zones vary over space. Yet information about time is essential in many analyses, particularly in time series analysis." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "The built-in `datetime` module handles temporal information down to the microsecond." | |

| ] | |

| }, | |
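A quick way to confirm the microsecond resolution is to inspect `datetime.resolution` and the `microsecond` attribute. The timestamp below is simply the value printed later in this notebook, reused here as a fixed example:

```python
from datetime import datetime, timedelta

# The smallest possible difference between two distinct datetime objects
print(datetime.resolution)  # 0:00:00.000001

# Microseconds are the finest field a datetime stores
stamp = datetime(2013, 6, 24, 13, 44, 48, 183558)
print(stamp.microsecond)  # 183558
```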

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "from datetime import datetime" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 2 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "now = datetime.now()\n", | |

| "now" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 3, | |

| "text": [ | |

| "datetime.datetime(2013, 6, 24, 13, 44, 48, 183558)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 3 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "now.day" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 4, | |

| "text": [ | |

| "24" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 4 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "now.weekday()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 5, | |

| "text": [ | |

| "0" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 5 | |

| }, | |
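`weekday()` counts Monday as 0 and Sunday as 6, so the `0` above means the notebook was run on a Monday. If ISO numbering (Monday = 1 through Sunday = 7) is preferred, `isoweekday()` is the alternative:

```python
from datetime import date

run_day = date(2013, 6, 24)    # the date printed by `now` above
print(run_day.weekday())       # 0 -> Monday (Monday=0 ... Sunday=6)
print(run_day.isoweekday())    # 1 -> Monday (Monday=1 ... Sunday=7)
print(run_day.strftime("%A"))  # weekday name; depends on the current locale
```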

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "In addition to `datetime`, there are simpler `date` and `time` objects for holding only date or only time information, respectively." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "from datetime import date, time" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 6 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "time(3, 24)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 7, | |

| "text": [ | |

| "datetime.time(3, 24)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 7 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "date(1970, 9, 3)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 8, | |

| "text": [ | |

| "datetime.date(1970, 9, 3)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 8 | |

| }, | |
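The two pieces can be joined into a full `datetime` with `datetime.combine`, and split apart again with the `.date()` and `.time()` methods:

```python
from datetime import date, datetime, time

d = date(1970, 9, 3)
t = time(3, 24)

# Merge a calendar date and a time of day into a single datetime
stamp = datetime.combine(d, t)
print(stamp)  # 1970-09-03 03:24:00

# ...and recover the original components
print(stamp.date(), stamp.time())  # 1970-09-03 03:24:00
```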

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Having a custom data type for dates and times is convenient because we can perform operations on them easily. For example, we may want to calculate the difference between two times:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "my_age = now - datetime(1970, 9, 3)\n", | |

| "my_age" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 9, | |

| "text": [ | |

| "datetime.timedelta(15635, 49488, 183558)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 9 | |

| }, | |
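The `timedelta` repr lists days, seconds, and microseconds. Rebuilding the same subtraction with the timestamp printed above shows how those fields relate to a total duration, and that a `timedelta` can also be added back to a `datetime`:

```python
from datetime import datetime, timedelta

now = datetime(2013, 6, 24, 13, 44, 48, 183558)  # value printed earlier
my_age = now - datetime(1970, 9, 3)

# A timedelta is normalized into days, seconds (< 86400) and microseconds
print(my_age.days, my_age.seconds, my_age.microseconds)  # 15635 49488 183558

# total_seconds() folds all three fields into a single float
print(my_age.total_seconds())

# Adding a timedelta to a datetime shifts it in time
print(now + timedelta(days=7))  # 2013-07-01 13:44:48.183558
```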

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "my_age.days/365." | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 10, | |

| "text": [ | |

| "42.83561643835616" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 10 | |

| }, | |
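Dividing by 365 ignores leap years, so the result is only approximate. When a whole number of years is what you want, comparing calendar dates directly avoids the approximation. `age_in_years` below is a hypothetical helper written for illustration, not part of the standard library:

```python
from datetime import date

def age_in_years(born, on):
    """Whole years elapsed between two dates (hypothetical helper)."""
    # Subtract one if the anniversary has not yet occurred in the year of `on`
    before_anniversary = (on.month, on.day) < (born.month, born.day)
    return on.year - born.year - before_anniversary

print(age_in_years(date(1970, 9, 3), date(2013, 6, 24)))  # 42
```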

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "In this section, we will manipulate data collected from ocean-going vessels on the eastern seaboard. Vessel operations are monitored using the Automatic Identification System (AIS), a safety-at-sea navigation technology that vessels are required to maintain. AIS uses transponders to transmit very high frequency (VHF) radio signals containing static information, including ship name, call sign, and country of origin, as well as dynamic information unique to a particular voyage, such as vessel location, heading, and speed.\n", | |

| "\n", | |

| "The International Maritime Organization\u2019s (IMO) International Convention for the Safety of Life at Sea requires functioning AIS capabilities on all vessels of 300 gross tons or greater, and the U.S. Coast Guard requires AIS on nearly all vessels sailing in U.S. waters. The Coast Guard has established a national network of AIS receivers that covers nearly all U.S. waters. AIS signals are transmitted several times each minute, and the network can handle thousands of reports per minute, with updates as often as every two seconds. Therefore, a typical voyage in our study might include the transmission of hundreds or thousands of AIS-encoded signals. This provides a rich source of data that includes both spatial and temporal information.\n", | |

| "\n", | |

| "For our purposes, we will use summarized data that describes the transit of a given vessel through a particular administrative area. The data includes the start and end time of the transit segment, as well as information about the speed of the vessel, how far it travelled, etc." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments = pd.read_csv(\"data/AIS/transit_segments.csv\")\n", | |

| "segments.head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 11, | |

| "text": [ | |

| " mmsi name transit segment seg_length avg_sog min_sog \\\n", | |

| "0 1 Us Govt Ves 1 1 5.1 13.2 9.2 \n", | |

| "1 1 Dredge Capt Frank 1 1 13.5 18.6 10.4 \n", | |

| "2 1 Us Gov Vessel 1 1 4.3 16.2 10.3 \n", | |

| "3 1 Us Gov Vessel 2 1 9.2 15.4 14.5 \n", | |

| "4 1 Dredge Capt Frank 2 1 9.2 15.4 14.6 \n", | |

| "\n", | |

| " max_sog pdgt10 st_time end_time \n", | |

| "0 14.5 96.5 2/10/09 16:03 2/10/09 16:27 \n", | |

| "1 20.6 100.0 4/6/09 14:31 4/6/09 15:20 \n", | |

| "2 20.5 100.0 4/6/09 14:36 4/6/09 14:55 \n", | |

| "3 16.1 100.0 4/10/09 17:58 4/10/09 18:34 \n", | |

| "4 16.2 100.0 4/10/09 17:59 4/10/09 18:35 " | |

| ] | |

| } | |

| ], | |

| "prompt_number": 11 | |

| }, | |
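The `st_time` and `end_time` columns were read in as plain strings such as `2/10/09 16:03`. Assuming they follow a month/day/two-digit-year layout (the rows above suggest this but do not prove it, since day-first would also fit), they can be parsed with `strptime` and subtracted to get each segment's duration. In pandas, the analogous step would be `pd.to_datetime` with a `format` argument; the sketch below sticks to the standard library:

```python
from datetime import datetime

# Assumed layout of st_time/end_time: month/day/2-digit year, 24-hour clock
FMT = "%m/%d/%y %H:%M"

# Start and end times of the first two rows of segments.head() above
rows = [("2/10/09 16:03", "2/10/09 16:27"),
        ("4/6/09 14:31", "4/6/09 15:20")]

durations = []
for st, end in rows:
    start = datetime.strptime(st, FMT)    # "%y" maps "09" to 2009
    finish = datetime.strptime(end, FMT)
    minutes = (finish - start).total_seconds() / 60
    durations.append(minutes)
    print(start, "->", finish, f"{minutes:.0f} min")
```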

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "For example, we might be interested in the distribution of transit lengths, so we can plot them as a histogram:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments.seg_length.hist(bins=500)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 12, | |

| "text": [ | |

| "<matplotlib.axes.AxesSubplot at 0x111d53f10>" | |

| ] | |

| }, | |

| { | |

| "metadata": {}, | |

| "output_type": "display_data", | |

| "png": "iVBORw0KGgoAAAANSUhEUgAAAY0AAAECCAYAAAACQYvcAAAABHNCSVQICAgIfAhkiAAAAAlwSFlz\nAAALEgAACxIB0t1+/AAAIABJREFUeJzt3X9sVfd9//GnuTaxTWLf3FtsK7Fot/hnJhTGNXGRO3Bi\n2ipupSFlMNmblhgVpDk1oJqRqJoU3MjgJjfFLbpuBEEhbTdplawiTfLWLdc/hOkQ1zGOLIWoN25o\nYiXOFdzLBQf/uvb5/sGXmziAfcEfx8fc1+Of7hzfe/P5vHS9F+d9z4UUy7IsREREErBiqRcgIiLL\nh0pDREQSptIQEZGEqTRERCRhKg0REUmYSkNERBKWOt8D3nrrLbq7u5mamqK0tJRnn32WsbExjhw5\nQigUIjc3l4aGBtLT0wHo6Oigs7MTh8NBXV0dJSUlAAwPD9PW1sbk5CQej4eamhoAYrEYx44dY2ho\niKysLHbv3o3T6VzELYuIyN2a80pjdHSU3/3ud/zrv/4rhw4d4pNPPmFgYID29naKi4vxer0UFhbS\n3t4OXC+Grq4uWlpaaGxsxOfzceNrID6fjx07duD1erlw4QIDAwMA+P1+0tLS8Hq9bNmyhRMnTizu\njkVE5K7NWRorV64E4Nq1a0xOTjIxMcGqVavo6+tj8+bNAFRWVhIIBAAIBAJUVFSQmppKTk4OeXl5\nBINBIpEI4+PjFBQUALBp0ybOnj0LMOu1ysvLGRwcXJydiojIgs05nlq5ciU/+MEPeO6550hLS+Op\np56isLCQaDQaHyFlZ2cTjUYBiEQiFBYWxp/vdrsJh8Okpqbicrni510uF+FwGIBwOIzb7QbA4XCQ\nmZnJ6Ogo999/v9mdiojIgs1ZGleuXOH111/n8OHDrFq1ip/97Ge8/fbbsx6TkpKyqAsUERH7mLM0\n3n//fQoLC8nLywNg48aNnD9/nuzsbC5fvozT6SQSiZCdnQ1cv4K4dOlS/PmXLl3C7XbPurL44vkb\nz7l48SIul4vp6WmuXbs251XG//zP/+BwOO5+xyIiScjpdOLxeBb8OnOWRklJCW+88Qajo6Pcd999\nnDt3jurqagC6u7vZunUrPT09bNiwAYCysjJ+/vOf8/3vf59wOMzIyAgFBQWkpKSQkZFBMBikoKCA\nU6dO8dRTT8Wf09PTQ1FREWfOnGHt2rVzLtjhcLB+/foFb1xEJJn09/cbeZ2U+f6W2+7ubrq6upic\nnOSxxx5j+/btTExMzHnLrd/vj99yW1paCnx+y+3ExAQej4fa2lrg+i23R48ejd9yu2fPnjlvufX7\n/SoNg3p7e/nWt7611Mu4JyhLs5SnWf39/VRVVS34deYtDbtRaZilX0xzlKVZytMsU6Whb4QnOf1S\nmqMszVKe9qTSEBGRhKk0klxvb+9SL+GeoSzNUp72pNIQEZGEqTSSnObG5ihLs5SnPak0REQkYSqN\nJKe5sTnK0izlaU8qDRERSZhKI8lpbmyOsjRLedqTSkNERBKm0khymhuboyzNUp72pNIQEZGEqTSS\nnObG5ihLs5SnPak0REQkYSqNJKe5sTnK0izlaU8qDRERSZhKI8lpbmyOsjRLedrTsi6NT65M8MmV\niaVehohI0ljWpREanSQ0OrnUy1jWNDc2R1mapTztKXW+B3z88ce0trbGjz/99FP+/u//nieeeIIj\nR44QCoXIzc2loaGB9PR0ADo6Oujs7MThcFBXV0dJSQkAw8PDtLW1MTk5icfjoaamBoBYLMaxY8cY\nGhoiKyuL3bt343Q6F2O/IiKyAPNeaTz00EO8/PLLvPzyy7S0tHDffffx+OOP097eTnFxMV6vl8LC\nQtrb24HrxdDV1UVLSwuNjY34fD4sywLA5/OxY8cOvF4vFy5cYGBgAAC/309aWhper5ctW7Zw4sSJ\nxduxzKK5sTnK0izlaU93NJ4aHBwkLy+Pr33ta/T1
9bF582YAKisrCQQCAAQCASoqKkhNTSUnJ4e8\nvDyCwSCRSITx8XEKCgoA2LRpE2fPngWY9Vrl5eUMDg4a26CIiJhzR6Vx+vRpKioqAIhGo/ERUnZ2\nNtFoFIBIJILb7Y4/x+12Ew6HiUQiuFyu+HmXy0U4HAYgHA7Hn+NwOMjMzGR0dHQB25JEaW5sjrI0\nS3naU8KlEYvFePvtt9m4ceNNP0tJSTG6KBERsaeES+PcuXP85V/+JVlZWcD1q4vLly8D168usrOz\ngetXEJcuXYo/79KlS7jd7llXFl88f+M5Fy9eBGB6eppr165x//3333YtX/wTSDQanXXc29ur4zs4\nvnHOLutZzsff+ta3bLWe5X6sPBfn932hUqwbn1LPo7W1lXXr1lFZWQnAb37zG+6//362bt3KyZMn\n+eyzz/iHf/gHhoeH+fnPf86hQ4cIh8O89NJL/OIXvyAlJYUf//jH1NXVUVBQQEtLC0899RTr1q3j\n97//PR9++CE7d+7k9OnTBAIB9u7de8t1+P1+1q9fD8A7H18F4LGHHjAQhYjIvau/v5+qqqoFv05C\nVxrj4+MMDg5SXl4eP/f000/zxz/+kX379hEMBnn66acByM/P54knnuD555/H6/VSX18fH1/V19fz\nxhtvsG/fPr7+9a+zbt06AKqqqpiamqKxsZG33nqLZ599dsEbk8SY/lNIMlOWZilPe5r3exoA6enp\nHD9+fNa5jIwM9u/ff8vHV1dXU11dfdP5/Px8Dh48ePMiUlOpr69PZCkiIrKElvU3wmXhdC+8OcrS\nLOVpTyoNERFJmEojyWlubI6yNEt52pNKQ0REEqbSSHKaG5ujLM1Snvak0hARkYSpNJKc5sbmKEuz\nlKc9qTRERCRhKo0kp7mxOcrSLOVpTyoNERFJmEojyWlubI6yNEt52pNKQ0REEqbSSHKaG5ujLM1S\nnvak0hARkYSpNJKc5sbmKEuzlKc9qTRERCRhKo0kp7mxOcrSLOVpTyoNERFJmEojyWlubI6yNEt5\n2lNC/0b4+Pg4r7/+Oh9++CFTU1PU19eTn5/PkSNHCIVC5Obm0tDQQHp6OgAdHR10dnbicDioq6uj\npKQEgOHhYdra2picnMTj8VBTUwNALBbj2LFjDA0NkZWVxe7du3E6nYu0ZRERuVsJXWm8/vrrPPro\no7z88st4vV4efvhh2tvbKS4uxuv1UlhYSHt7O3C9GLq6umhpaaGxsRGfz4dlWQD4fD527NiB1+vl\nwoULDAwMAOD3+0lLS8Pr9bJlyxZOnDixOLuVm2hubI6yNEt52tO8pXHt2jXee+89nnzySQAcDgeZ\nmZn09fWxefNmACorKwkEAgAEAgEqKipITU0lJyeHvLw8gsEgkUiE8fFxCgoKANi0aRNnz54FmPVa\n5eXlDA4Omt+piIgs2LylEQqFyMrKwufz0djYyGuvvcbExATRaDQ+QsrOziYajQIQiURwu93x57vd\nbsLhMJFIBJfLFT/vcrkIh8MAhMPh+HNulNLo6Ki5XcptaW5sjrI0S3na07ylMT09zdDQEOXl5Rw6\ndIhYLMb//d//zXpMSkrKoi3wVr74ZopGo7OOe3t7dXwHx4ODg7Zaj451rOPFOzYhxbrxgcNtXL58\nmcbGRo4fPw7AuXPn6Onp4c9//jMvvvgiTqeTSCRCU1MTra2tnDx5EoCtW7cC0NzczPbt21m9ejVN\nTU0cPnw4vpnz58+zc+dOmpub2bZtG0VFRUxPT7Nr1674f+/L/H4/69evB+Cdj68C8NhDDxiIQkTk\n3tXf309VVdWCX2feKw2n0xn/XGJmZob+/n7Wrl2Lx+Ohu7sbgJ6eHjZs2ABAWVkZp0+fJhaLEQqF\nGBkZoaCgAKfTSUZGBsFgEMuyOHXq1Kzn9PT0AHDmzBnWrl274I2JiIh5815pAHz88cf4fD6uXLnC\nmjVraGhowLKs
OW+59fv98VtuS0tLgc9vuZ2YmMDj8VBbWwtcv+X26NGj8Vtu9+zZc9tbbnWlYVZv\nb6/uUjFEWZqlPM0ydaWRUGnYiUrDLP1imqMszVKeZn1l4ym5t+mX0hxlaZbytCeVhoiIJEylkeRM\n346XzJSlWcrTnlQaIiKSMJVGktPc2BxlaZbytCeVhoiIJEylkeQ0NzZHWZqlPO1JpSEiIglTaSQ5\nzY3NUZZmKU97UmmIiEjCVBpJTnNjc5SlWcrTnlQaIiKSMJVGktPc2BxlaZbytCeVhoiIJEylkeQ0\nNzZHWZqlPO1JpSEiIglTaSQ5zY3NUZZmKU97UmmIiEjCVBpJTnNjc5SlWcrTnlITedBzzz1HRkYG\nK1aswOFwcOjQIcbGxjhy5AihUIjc3FwaGhpIT08HoKOjg87OThwOB3V1dZSUlAAwPDxMW1sbk5OT\neDweampqAIjFYhw7doyhoSGysrLYvXs3TqdzkbYsIiJ3K+ErjQMHDvDyyy9z6NAhANrb2ykuLsbr\n9VJYWEh7eztwvRi6urpoaWmhsbERn8+HZVkA+Hw+duzYgdfr5cKFCwwMDADg9/tJS0vD6/WyZcsW\nTpw4YXibcjuaG5ujLM1SnvaUcGnc+H/8N/T19bF582YAKisrCQQCAAQCASoqKkhNTSUnJ4e8vDyC\nwSCRSITx8XEKCgoA2LRpE2fPnr3ptcrLyxkcHFz4zkRExLiESiMlJYWf/OQn7N+/n7feeguAaDQa\nHyFlZ2cTjUYBiEQiuN3u+HPdbjfhcJhIJILL5Yqfd7lchMNhAMLhcPw5DoeDzMxMRkdHDWxP5qO5\nsTnK0izlaU8Jfabx0ksv8eCDDzI8PMyhQ4d4+OGHZ/08JSVlURZ3O729vfFL12g0Su+f3okf33ij\n6Tix4xtXdXZZj451rOPFOzYhxfry3Gkeb775Ji6XC7/fz4EDB3A6nUQiEZqammhtbeXkyZMAbN26\nFYDm5ma2b9/O6tWraWpq4vDhw/HNnD9/np07d9Lc3My2bdsoKipienqaXbt2cfz48Vv+9/1+P+vX\nrwfgnY+vAvDYQw/c3e5FRJJEf38/VVVVC36decdTExMTjI2NAXDlyhXOnTvHmjVrKCsro7u7G4Ce\nnh42bNgAQFlZGadPnyYWixEKhRgZGaGgoACn00lGRgbBYBDLsjh16tSs5/T09ABw5swZ1q5du+CN\niYiIefNeaYRCIV555RUAHnjgATZu3Mi3v/3teW+59fv98VtuS0tLgc9vuZ2YmMDj8VBbWwtcv+X2\n6NGj8Vtu9+zZc9tbbnWlYdYXR32yMMrSLOVplqkrjTseTy01lYZZ+sU0R1mapTzN+srGU3Jv0y+l\nOcrSLOVpTyoNERFJmEojyeleeHOUpVnK055UGiIikjCVRpLT3NgcZWmW8rQnlYaIiCRMpZHkNDc2\nR1mapTztSaUhIiIJU2kkOc2NzVGWZilPe1JpiIhIwlQaSU5zY3OUpVnK055UGiIikjCVRpLT3Ngc\nZWmW8rQnlYaIiCRMpZHkNDc2R1mapTztSaUhIiIJU2kkOc2NzVGWZilPe1JpiIhIwlQaSU5zY3OU\npVnK055SE3nQzMwML7zwAi6XixdeeIGxsTGOHDlCKBQiNzeXhoYG0tPTAejo6KCzsxOHw0FdXR0l\nJSUADA8P09bWxuTkJB6Ph5qaGgBisRjHjh1jaGiIrKwsdu/ejdPpXKTtiojIQiR0pdHR0UF+fj4p\nKSkAtLe3U1xcjNfrpbCwkPb2duB6MXR1ddHS0kJjYyM+nw/LsgDw+Xzs2LEDr9fLhQsXGBgYAMDv\n95OWlobX62XLli2cOHFiEbYpt6O5sTnK0izlaU/zlsalS5c4d+4cTz75ZLwA+vr62Lx5MwCVlZUE\nAgEAAoEAFRUVpKamkpOTQ15eHsFgkEgkwvj4OAUFBQBs2rSJs2fP3vRa5eXlDA
4Omt+liIgYMW9p\nvPnmm/zjP/4jK1Z8/tBoNBofIWVnZxONRgGIRCK43e7449xuN+FwmEgkgsvlip93uVyEw2EAwuFw\n/DkOh4PMzExGR0cNbE0SobmxOcrSLOVpT3OWxttvv01WVhZ/8Rd/Eb/K+LIbI6uv0hffTNFodNZx\nb2+vju/geHBw0Fbr0bGOdbx4xyakWLdrA+Df//3fOXXqFCtWrGBqaoqxsTEef/xxhoaGOHDgAE6n\nk0gkQlNTE62trZw8eRKArVu3AtDc3Mz27dtZvXo1TU1NHD58OL6R8+fPs3PnTpqbm9m2bRtFRUVM\nT0+za9cujh8/ftsF+/1+1q9fD8A7H18F4LGHHjCThojIPaq/v5+qqqoFv86cVxq1tbX88pe/xOfz\nsXfvXv7qr/6KhoYGysrK6O7uBqCnp4cNGzYAUFZWxunTp4nFYoRCIUZGRigoKMDpdJKRkUEwGMSy\nLE6dOjXrOT09PQCcOXOGtWvXLnhTIiKyOO7oexo3RlFPP/00f/zjH9m3bx/BYJCnn34agPz8fJ54\n4gmef/55vF4v9fX18efU19fzxhtvsG/fPr7+9a+zbt06AKqqqpiamqKxsZG33nqLZ5991uD2ZD6m\nL12TmbI0S3naU0Lf0wB49NFHefTRRwHIyMhg//79t3xcdXU11dXVN53Pz8/n4MGDNy8gNZX6+vpE\nlyEiIkto2X8jfNVKx1IvYVnTvfDmKEuzlKc9LfvSuP8+lYaIyFdl2ZeGLIzmxuYoS7OUpz2pNERE\nJGEqjSSnubE5ytIs5WlPKg0REUmYSiPJaW5sjrI0S3nak0pDREQSptJIcpobm6MszVKe9qTSEBGR\nhKk0kpzmxuYoS7OUpz2pNEREJGEqjSSnubE5ytIs5WlPKg0REUmYSiPJaW5sjrI0S3nak0pDREQS\nptJIcpobm6MszVKe9qTSEBGRhKk0kpzmxuYoS7OUpz3N+W+ET05OcuDAAaampli5ciUbN27k+9//\nPmNjYxw5coRQKERubi4NDQ2kp6cD0NHRQWdnJw6Hg7q6OkpKSgAYHh6mra2NyclJPB4PNTU1AMRi\nMY4dO8bQ0BBZWVns3r0bp9O5yNsWEZG7MeeVxsqVK3nxxRd55ZVXOHDgAF1dXXzyySe0t7dTXFyM\n1+ulsLCQ9vZ24HoxdHV10dLSQmNjIz6fD8uyAPD5fOzYsQOv18uFCxcYGBgAwO/3k5aWhtfrZcuW\nLZw4cWJxdyyzaG5sjrI0S3na07zjqfvuuw+A8fFxZmZmSEtLo6+vj82bNwNQWVlJIBAAIBAIUFFR\nQWpqKjk5OeTl5REMBolEIoyPj1NQUADApk2bOHv2LMCs1yovL2dwcND8LkVExIh5S2NmZoZ/+Zd/\nYefOnXz3u9/la1/7GtFoND5Cys7OJhqNAhCJRHC73fHnut1uwuEwkUgEl8sVP+9yuQiHwwCEw+H4\ncxwOB5mZmYyOjprbocxJc2NzlKVZytOe5i2NFStW8Morr/CLX/yC3//+93zwwQezfp6SkrJoi7ud\nL76ZJsYnZh339vbq+A6OBwcHbbUeHetYx4t3bEKKdeNDhwT86le/wu1287//+78cOHAAp9NJJBKh\nqamJ1tZWTp48CcDWrVsBaG5uZvv27axevZqmpiYOHz4c38j58+fZuXMnzc3NbNu2jaKiIqanp9m1\naxfHjx+/7Rr8fj/r168H4J2Pr5L7wEryHrjvrgMQEUkG/f39VFVVLfh15rzSuHLlCp999hkAV69e\nZWBggDVr1lBWVkZ3dzcAPT09bNiwAYCysjJOnz5NLBYjFAoxMjJCQUEBTqeTjIwMgsEglmVx6tSp\nWc/p6ekB4MyZM6xdu3bBmxIRkcUx55XGhx9+iM/nY2ZmBqfTycaNG3nyySfnveXW7/fHb7ktLS0F\nPr/ldmJiAo/HQ21tLXD9ltujR4/Gb7nds2
fPnLfc6krDrN7eXt2lYoiyNEt5mmXqSuOOxlN2oNIw\nS7+Y5ihLs5SnWV/JeEruffqlNEdZmqU87UmlISIiCVNpJDnTt+MlM2VplvK0J5WGiIgkTKWR5DQ3\nNkdZmqU87UmlISIiCVNpJDnNjc1RlmYpT3tSaYiISMJUGklOc2NzlKVZytOeVBoiIpIwlUaS09zY\nHGVplvK0J5WGiIgkTKWR5DQ3NkdZmqU87UmlISIiCVNpJDnNjc1RlmYpT3tSaYiISMKWfWl8NjnN\nJ1cmlnoZy5bmxuYoS7OUpz0t+9IIX5siNDq51MsQEUkKy740ZGE0NzZHWZqlPO0pdb4HXLx4EZ/P\nRzQaJSsri8rKSiorKxkbG+PIkSOEQiFyc3NpaGggPT0dgI6ODjo7O3E4HNTV1VFSUgLA8PAwbW1t\nTE5O4vF4qKmpASAWi3Hs2DGGhobIyspi9+7dOJ3ORdy2iIjcjXmvNFJTU3nmmWf42c9+xo9+9CP+\n7d/+jeHhYdrb2ykuLsbr9VJYWEh7eztwvRi6urpoaWmhsbERn8+HZVkA+Hw+duzYgdfr5cKFCwwM\nDADg9/tJS0vD6/WyZcsWTpw4sXg7llk0NzZHWZqlPO1p3tJwOp184xvfACArK4tHHnmEcDhMX18f\nmzdvBqCyspJAIABAIBCgoqKC1NRUcnJyyMvLIxgMEolEGB8fp6CgAIBNmzZx9uxZgFmvVV5ezuDg\noPGNiojIwt3RZxojIyMMDw9TVFRENBqNj5Cys7OJRqMARCIR3G53/Dlut5twOEwkEsHlcsXPu1wu\nwuEwAOFwOP4ch8NBZmYmo6OjC9uZJERzY3OUpVnK054SLo3x8XFaW1t55pln4p9d3JCSkmJ8YXP5\n4ptpZsaKF9aNn33x5zqe+3hwcNBW69GxjnW8eMcmpFg3PnCYQywW46c//Snr1q3je9/7HgB79+7l\nwIEDOJ1OIpEITU1NtLa2cvLkSQC2bt0KQHNzM9u3b2f16tU0NTVx+PDh+GbOnz/Pzp07aW5uZtu2\nbRQVFTE9Pc2uXbs4fvz4Ldfi9/tZv349AO98fJXJ6RlWOlbw2EMPLDwNEZF7VH9/P1VVVQt+nXmv\nNCzL4rXXXiM/Pz9eGABlZWV0d3cD0NPTw4YNG+LnT58+TSwWIxQKMTIyQkFBAU6nk4yMDILBIJZl\ncerUqVnP6enpAeDMmTOsXbt2wRsTERHz5r3SeO+993jxxRdZs2ZNfAxVW1tLcXHxnLfc+v3++C23\npaWlwOe33E5MTODxeKitrQWuX8kcPXo0fsvtnj17bnvLra40zOrt7dVdKoYoS7OUp1mmrjTm/Z5G\nSUkJ//Ef/3HLn+3fv/+W56urq6murr7pfH5+PgcPHrx5Eamp1NfXz7cUERFZYvpGeJLTn+TMUZZm\nKU97UmmIiEjCVBpJzvTteMlMWZqlPO1JpSEiIglTaSQ5zY3NUZZmKU97UmmIiEjCVBpJTnNjc5Sl\nWcrTnlQaIiKSsHuiNBwr0L8Tfpc0NzZHWZqlPO3pniiN6Pi0/p1wEZGvwD1RGnL3NDc2R1mapTzt\nSaUhIiIJU2kkOc2NzVGWZilPe1JpiIhIwlQaSU5zY3OUpVnK055UGiIikjCVRpLT3NgcZWmW8rQn\nlYaIiCRs2ZbGJ1cmmJ77nzeXBGhubI6yNEt52tO8/0Z4W1sb586dIysri1dffRWAsbExjhw5QigU\nIjc3l4aGBtLT0wHo6Oigs7MTh8NBXV0dJSUlAAwPD9PW1sbk5CQej4eamhoAYrEYx44dY2hoiKys\nLHbv3o3T6Zx34aHRSaZnVBoiIl+lea80nnjiCX784x/POtfe3k5xcTFer5fCwkLa29uB68XQ1dVF\nS0sLjY2N+Hw+rP9/NeDz+dixYwder5cLFy4wMDAAgN/vJy0tDa/Xy5YtWzhx4oThLcpcNDc2R1ma\npTztad
7SKC0tZdWqVbPO9fX1sXnzZgAqKysJBAIABAIBKioqSE1NJScnh7y8PILBIJFIhPHxcQoK\nCgDYtGkTZ8+evem1ysvLGRwcNLc7EREx6q4+04hGo/ERUnZ2NtFoFIBIJILb7Y4/zu12Ew6HiUQi\nuFyu+HmXy0U4HAYgHA7Hn+NwOMjMzGR0dPTudiN3THNjc5SlWcrTnhb8QXhKSoqJddyRL76ZZmYs\nYrHYrJ998ec6nvt4cHDQVuvRsY51vHjHJqRY1vy3IIVCIX7605/GPwjfu3cvBw4cwOl0EolEaGpq\norW1lZMnTwKwdetWAJqbm9m+fTurV6+mqamJw4cPxzdy/vx5du7cSXNzM9u2baOoqIjp6Wl27drF\n8ePHb7sWv9/P+vXreefjq0xOzwAwOW1x/0oHjz30wMLSEBG5R/X391NVVbXg17mrK42ysjK6u7sB\n6OnpYcOGDfHzp0+fJhaLEQqFGBkZoaCgAKfTSUZGBsFgEMuyOHXq1Kzn9PT0AHDmzBnWrl274E2J\niMjimPdKo7W1lfPnz3P16lWys7PZvn073/zmN+e85dbv98dvuS0tLQU+v+V2YmICj8dDbW0tcP2W\n26NHj8Zvud2zZ8+ct9zqSsOs3t5e3aViiLI0S3maZepKY97vaezdu/eW5/fv33/L89XV1VRXV990\nPj8/n4MHD968gNRU6uvr51uGiIjYwLL9RriYoT/JmaMszVKe9qTSEBGRhKk0kpzp2/GSmbI0S3na\n07IsjU+uTMQ/BL9h1UrHEq1GRCR5LMvSCI1OMjk9+6av++9TadwNzY3NUZZmKU97WpalISIiS0Ol\nkeQ0NzZHWZqlPO1JpSEiIglTaSQ5zY3NUZZmKU97UmmIiEjCVBpJTnNjc5SlWcrTnu6Z0vhscppP\nrkws9TJERO5p90xphK9NERqdXOplLDuaG5ujLM1SnvZ0z5QGgGMFXJ2Izf9AERG5K/dUaUTHp/ls\ncnqpl7GsaG5sjrI0S3na0z1VGiIisrhUGklOc2NzlKVZytOeVBoiIpIw25TGu+++y/PPP8++ffv4\nr//6r6VeTtLQ3NgcZWmW8rQnW5TGzMwMv/zlL2lsbKSlpYXOzk6Gh4eXelkiIvIltiiN999/n7y8\nPHJyckhNTaWiooK+vr67eq3PJqcZuniNS5/pOxuJ0NzYHGVplvK0p9SlXgBAOBzG7XbHj10uF++/\n//7dvda1qev/QFPK9WP3qpUmligiItjkSmMxhK9NcXk8xtDFa3xw6Vr8f2/837oSuU5zY3OUpVnK\n055scaXLan8fAAAE7klEQVThcrm4dOlS/PjSpUu4XK5bPtbpdHJ5JEjGF85l3OZ/o3P8N6PAn+9u\nufeUzMxM+vv7l3oZ9wRlaZbyNMvpdBp5HVuUxiOPPMLIyAihUAiXy8Uf/vAH9uzZc8vHejyer3h1\nIiJyQ4plWdZSLwKu33J74sQJpqenqaqqorq6eqmXJCIiX2Kb0hAREfu7Zz8IFxER81QaIiKSMFt8\nEJ6Id999lzfffDP+mcdTTz211EtaFp577jkyMjJYsWIFDoeDQ4cOMTY2xpEjRwiFQuTm5tLQ0EB6\nejoAHR0ddHZ24nA4qKuro6SkZIl3sHTa2to4d+4cWVlZvPrqqwB3ld3w8DBtbW1MTk7i8XioqalZ\nsj0tpVvl+dvf/pbOzk6ysrIAqKmp4a//+q8B5Tmfixcv4vP5iEajZGVlUVlZSWVl5eK/R61lYHp6\n2vrhD39offrpp9bU1JS1b98+66OPPlrqZS0L9fX11tWrV2ed+/Wvf22dPHnSsizL+t3vfmf95je/\nsSzLsj766CNr37591tTUlPXpp59aP/zhD63p6emvfM128e6771p/+tOfrB/96Efxc3eS3czMjGVZ\nlvXCCy9YwWDQsizLOnjwoHXu3LmveCf2cKs8f/vb31r/+Z//edNjlef8
IpGI9cEHH1iWZVnRaNT6\nwQ9+YH300UeL/h5dFuMpk3/NSDKyvnSvQ19fH5s3bwagsrKSQCAAQCAQoKKigtTUVHJycsjLy7vr\nb+bfC0pLS1m1atWsc3eSXTAYJBKJMD4+TkFBAQCbNm3i7NmzX+1GbOJWecLN709QnolwOp184xvf\nACArK4tHHnmEcDi86O/RZTGeMvnXjCSblJQUfvKTn5CSksJ3vvMdtmzZQjQajX/RJzs7m2j0+tcg\nI5EIhYWF8ee63W7C4fCSrNuu7jS71NTUWV9UdblcyvRL/vu//5vOzk6Kior4p3/6J1atWqU879DI\nyAjDw8MUFRUt+nt0WZSG3L2XXnqJBx98kOHhYQ4dOsTDDz886+cpKSlzPn++nyczZbNw3/nOd/i7\nv/s7xsbG+PWvf82vfvUr/vmf/3mpl7WsjI+P09rayjPPPBP/7OKGxXiPLovx1J38NSMy24MPPghA\nfn4+jz/+OO+//z7Z2dlcvnwZuP6nj+zsbEA5J+JOsnO73Tf9qU2ZzpadnU1KSgqZmZl897vfjU8Q\nlGdiYrEYr776Kn/zN3/Dhg0bgMV/jy6L0vjiXzMSi8X4wx/+QFlZ2VIvy/YmJiYYGxsD4MqVK5w7\nd441a9ZQVlZGd3c3AD09PfE3W1lZGadPnyYWixEKhRgZGYnPOeW6O83O6XSSkZFBMBjEsixOnTrF\n448/voQ7sJdIJALA9PQ0vb29rFmzBlCeibAsi9dee438/Hy+973vxc8v9nt02XwjXH/NyJ0LhUK8\n8sorADzwwANs3LiRb3/72/Pekuf3++O35JWWli7lFpZUa2sr58+f5+rVq2RnZ7N9+3a++c1v3nF2\nN25nnJiYwOPxUFtbu5TbWjI38rxy5QpOp5Nt27bx7rvvcuHCBVJTUyktLeVv//Zv4/N45Tm39957\njxdffJE1a9bEx1C1tbUUFxcv6nt02ZSGiIgsvWUxnhIREXtQaYiISMJUGiIikjCVhoiIJEylISIi\nCVNpiIhIwlQaIiKSMJWGiIgk7P8BidKuliAOOEQAAAAASUVORK5CYII=\n", | |

| "text": [ | |

| "<matplotlib.figure.Figure at 0x10b2cfd90>" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 12 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Though most of the transits appear to be short, there are a few longer distances that make the plot difficult to read. This is where a transformation is useful:" | |

| ] | |

| }, | |
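Taking logs turns ratios into differences, so every tenfold increase in length moves a point the same distance (about 2.3 units) along the axis, which pulls the long right tail in. The sketch below uses the first five `seg_length` values from the table above. Note that `np.log` returns `-inf` for zero (and `nan` for negative values), so zero-length segments, if any exist, would need handling before the transform:

```python
import math

# First five seg_length values from segments.head() above
seg_length = [5.1, 13.5, 4.3, 9.2, 9.2]
logged = [math.log(x) for x in seg_length]

# On the log scale, the ratio between lengths becomes a difference
spread_raw = max(seg_length) / min(seg_length)
spread_log = max(logged) - min(logged)
print(round(spread_raw, 3), round(spread_log, 3))

# Distance on the log axis corresponding to a tenfold increase
print(math.log(10))
```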

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments.seg_length.apply(np.log).hist(bins=500)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 13, | |

| "text": [ | |

| "<matplotlib.axes.AxesSubplot at 0x10d30d8d0>" | |

| ] | |

| }, | |

| { | |

| "metadata": {}, | |

| "output_type": "display_data", | |

| "png": "iVBORw0KGgoAAAANSUhEUgAAAYMAAAECCAYAAAAciLtvAAAABHNCSVQICAgIfAhkiAAAAAlwSFlz\nAAALEgAACxIB0t1+/AAAIABJREFUeJzt3X9s1Pd9x/Hn+cxhjLGvdy14m8fSYQeyKIPFJFHkDEyo\nKpHkDySkTDSVAijrFFO6KKDA0lVKFrWw4SwQ70ylIbWRur8mK1ErMa0KP1KctsKAiaLSBoeEpl5M\nrviOO/+6+/p+7A/nLsY+G2N/v/5+P/B6SFH4fv09/Lqv8b3v+35/v9/z5fP5PCIickcrczuAiIi4\nT8VARERUDERERMVARERQMRAREVQMREQEKJ/ui+3t7XR3d1NdXc2rr74KwMjICG1tbUSjUZYtW8au\nXbuoqKgA4NixY5w4cQK/38/27dtZtWoVAL29vbS3t2NZFo2NjWzduhWATCbDf/7nf3L58mWqq6v5\nzne+QzAYdPL5iohICdMeGWzYsIEXX3zxhnUdHR2sXLmS1tZWGhoa6OjoAMZe8E+ePMmBAwfYvXs3\nkUiEwiUMkUiEHTt20NraypUrV7hw4QIAx48fZ8GCBbS2tvK1r32NH//4xw48RRERuZlpi8E999zD\n4sWLb1h39uxZ1q9fD0BzczNdXV0AdHV10dTURHl5OUuXLqW2tpaenh7i8TipVIr6+noA1q1bx5kz\nZyb9XQ899BDvv/++vc9ORERm5JZnBolEotjKqampIZFIABCPxwmHw8XtwuEwsViMeDxOKBQqrg+F\nQsRiMQBisVjxMX6/n8rKSgYHB2f/bEREZFbmNED2+Xx25RARERdNO0AupaamhuvXrxMMBonH49TU\n1ABj7/j7+/uL2/X39xMOh284Ehi/vvCYa9euEQqFyGazDA8PU1VVNeX3/vnPf47f77/VyCIid7Rg\nMEhjY+O029xyMVi7di2nTp1i8+bNvPPOOzzwwAPF9YcPH+aJJ54gFotx9epV6uvr8fl8LFq0iJ6e\nHurr6zl9+jSbNm0qPuadd97h7rvv5te//jX33XfftN/b7/dz//3332pkEZE72vnz52+6zbRtokOH\nDvG9732Pvr4+nn32WU6ePMmWLVu4dOkSe/bsoaenhy1btgBQV1fHhg0b2Lt3L62trbS0tBTbSC0t\nLfzoRz9iz549/MVf/AVr1qwBYOPGjYyOjrJ7927efvtttm3bNsen7A2dnZ1uR5gR5bSXCTlNyAjK\n6YZpjwyee+65kutfeOGFkusfe+wxHnvssUnr6+rq+MEPfjD5m5eX09LSMpOcIiLiIJ9Jn2dw/Phx\ntYlERG7R+fPn2bhx47Tb6HYUIiKiYuAEU/qIymkvE3KakBGU0w0qBiIiopmBiMjtTjMDERGZERUD\nB5jSR1ROe5mQ04SMoJxuUDEQERHNDEREbneaGYjx+pJp+pJpt2OI3PZUDBxgSh/RhJzRQYue//uj\n2zFmxIT9aUJGUE43qBiIiIhmBuJt7306AMDqP13ichIRc2lmICIiM6Ji4ABT+oim5Cx8zrbXmbA/\nTcgIyukGFQMREdHMQLxNMwORudPMQEREZkTFwAGm9BFNyamZgX1MyAjK6QYVAxER0cxAvE0zA5G5\n08xARERmRMXAAab0EU3JqZmBfUzICMrpBhUDERHRzEC8TTMDkbnTzEBERGZExcABpvQRTcmpmYF9\nTMgIyukGFQMREdHMQLxNMwORudPMQEREZkTFwAGm9BFNyTl+ZtCXTNOXTLuYZmom7E8TMoJyukHF\nQIwSHbSIDlpuxxC57WhmIJ42cWagGYLIrdPMQEREZkTFwAGm9BFNyanrDOxjQkZQTjeoGIiIyOxn\nBm+//TanTp1idHSUe+65h23btjEyMkJbWxvRaJRly5axa9cuKioqADh27BgnTpzA7/ezfft2Vq1a\nBUBvby/t7e1YlkVjYyNbt26d8ntqZnDn0cxAZO4c
mxkMDg7y5ptv8s///M/s37+fvr4+Lly4QEdH\nBytXrqS1tZWGhgY6OjqAsRf8kydPcuDAAXbv3k0kEqFQgyKRCDt27KC1tZUrV65w4cKF2UQSEZE5\nmFUxCAQCAAwPD2NZFul0msWLF3P27FnWr18PQHNzM11dXQB0dXXR1NREeXk5S5cupba2lp6eHuLx\nOKlUivr6egDWrVvHmTNn7HherjKlj2hKTs0M7GNCRlBON5TP5kGBQIBnnnmGnTt3smDBAjZt2kRD\nQwOJRIJgMAhATU1N8Zc4Ho/T0NBQfHw4HCYWi1FeXk4oFCquD4VCxGKxuTwfERGZhVkdGSSTSY4e\nPcprr71GJBLh0qVLnDt37oZtfD6fLQEnGl+JOzs7Pbn8yCOPeCrPVMuFdV7JU2o5lU5TU1NTXB5/\nlOCFfKbtz4lZ3c4z1fIjjzziqTxTLY/nhTw3+/c5nVkNkM+fP88vfvELnnvuOQB+/vOfE41GOXv2\nLC+99BLBYJB4PM7LL7/MoUOHeOuttwDYvHkzAN///vd58skn+cpXvsLLL7/Ma6+9Vgx/8eJFvvWt\nb5X8vhog33k0QBaZO8cGyKtWreLy5csMDg4yOjpKd3c3q1evZu3atZw6dQqAd955hwceeACAtWvX\n8u6775LJZIhGo1y9epX6+nqCwSCLFi2ip6eHfD7P6dOnefDBB2cTyVNupRq7yZScmhnYx4SMoJxu\nmNXMoLKyki1btnDw4EEsy2L16tXce++91NfX09bWxp49e4qnlgLU1dWxYcMG9u7di9/vp6WlpdhG\namlpob29nXQ6TWNjI2vWrLHv2cltoaZyodsRRG57ujeReNp7nw6wbEmA2iULi8ugNpHIrdC9iURE\nZEZUDBxgSh/RlJzplDc/v2AiE/anCRlBOd2gYiAiIpoZiLdpZiAyd5oZiIjIjKgYOMCUPqIpOTUz\nsI8JGUE53aBiIJ7Vl0yTNaeLKWI0zQzEs977dAArm+PPgxWaGYjMgWYGIiIyIyoGDjClj2hKTs0M\n7GNCRlBON6gYiIiIZgbiXZoZiNhDMwMREZkRFQMHmNJHNCWnZgb2MSEjKKcbVAxEREQzA/EuzQxE\n7KGZgYiIzIiKgQNM6SOaklMzA/uYkBGU0w0qBiIiopmBeE9fcuxIIDpoaWYgYoOZzAzK5ymLyIxF\nBy23I4jccdQmcoApfURTcmpmYB8TMoJyukHFQERENDMQ7ynMBQDNDERsoOsMRERkRlQMHGBKH9GU\nnJoZ2MeEjKCcblAxEBERzQzEezQzELGXZgYiIjIjKgYOMKWPaEpOzQzsY0JGUE43qBiIiIhmBuI9\nmhmI2Ev3JpLbRuHmdSLiDLWJHGBKH9GUnMkRi95EyvM3sDNhf5qQEZTTDSoG4nnxkSxW1phupoiR\nZj0zSKVSHD16lE8++YTR0VFaWlqoq6ujra2NaDTKsmXL2LVrFxUVFQAcO3aMEydO4Pf72b59O6tW\nrQKgt7eX9vZ2LMuisbGRrVu3Tvk9NTO4M0ycGYz9P09VwF9cr5mByMw5ep3B0aNH+au/+iv+7d/+\njdbWVv7sz/6Mjo4OVq5cSWtrKw0NDXR0dABjL/gnT57kwIED7N69m0gkQqEGRSIRduzYQWtrK1eu\nXOHChQuzjSS3ucXjioGI2GtWxWB4eJjf/e53PProowD4/X4qKys5e/Ys69evB6C5uZmuri4Aurq6\naGpqory8nKVLl1JbW0tPTw/xeJxUKkV9fT0A69at48yZM3Y8L1eZ0kc0JWcuN/bGoWqht4uBCfvT\nhIygnG6Y1dlE0WiU6upqIpEIH330EQ0NDWzfvp1EIkEwGASgpqaGRCIBQDwep6Ghofj4cDhMLBaj\nvLycUChUXB8KhYjFYnN5PiIiMguzOjLIZrNcvnyZhx56iP3795PJZPjVr351wzY+n8+WgCZ65JFH\n3I4wI6bkLCsz
49+SCfvThIygnG6YVTEIh8NUVVWxdu1aAoEATU1NXLhwgWAwyPXr14Gxo4Gamhpg\n7B1/f39/8fH9/f2Ew+FJRwL9/f03HCmUMv6wrLOzU8u38XIikSi2iGDsthSFo82pHv/JJ594Jr+W\nteyl5ZuZ9dlE3/3ud9m2bRsrVqzgRz/6EXfddRd9fX1UVVWxefNm3nrrLYaGhnjqqafo7e3l8OHD\n7N+/n1gsxiuvvMLrr7+Oz+fjxRdfZPv27dTX13PgwAE2bdrEmjVrSn5PU84m6uzsNOIdg1dzTjyb\nKJfLk8nDivAiPhuwWBzwU//lShcTlubV/TmeCRlBOe3m6BXIO3fuJBKJkEwmWb58OU899RT5fJ62\ntjb27NlTPLUUoK6ujg0bNrB37178fj8tLS3FNlJLSwvt7e2k02kaGxunLAQiBV4fJIuYSPcmEs95\n79MBFgf8DFnZG64zKBwZLFsSKN6rSERuTp9nIMbSu3+R+aVi4IBbGdq4yZSc44fIXmbC/jQhIyin\nG1QMRERExcAJJpxdAObk1HUG9jEhIyinG1QMRERExcAJpvQRTcmpmYF9TMgIyukGFQMREdF1BuI9\n7306wLIlAT4bsG64zqB2SYDY8OgNn4ksIjen6wzkthIbHtUnnok4RMXAAab0Eb2cc/zVx5oZ2MeE\njKCcblAxEE/SUYDI/NLMQDznvU8HsLI5rGyegH/sGoPCnwv3KNLMQGTmNDOQO05fMk1fMu12DBHj\nqBg4wJQ+oik5b2VmEB20iA5aDqaZmgn704SMoJxuUDEQT+lLpouDYxGZPyoGDjDlfiVezBkdtCYN\njnVvIvuYkBGU0w0qBiIiomLgBFP6iKbknDgzGLKyDKQzLqWZmgn704SMoJxuUDEQ48SGRxmysm7H\nELmtqBg4wJQ+oik5NTOwjwkZQTndoGIgIiIqBk4wpY9oSk7dm8g+JmQE5XSDioGIiKgYOMGUPqIp\nOTUzsI8JGUE53aBiICIiKgZOMKWPaEpOzQzsY0JGUE43qBiIiIiKgRNM6SOaklMzA/uYkBGU0w0q\nBiIiomLgBFP6iKbk1MzAPiZkBOV0g4qBiIioGDjBlD6iKTk1M7CPCRlBOd2gYiAiIioGTjClj2hK\nTs0M7GNCRlBON6gYiIiIioETTOkjmpJTMwP7mJARlNMN5XN5cC6XY9++fYRCIfbt28fIyAhtbW1E\no1GWLVvGrl27qKioAODYsWOcOHECv9/P9u3bWbVqFQC9vb20t7djWRaNjY1s3bp17s9KRERuyZyO\nDI4dO0ZdXR0+39g7t46ODlauXElraysNDQ10dHQAYy/4J0+e5MCBA+zevZtIJEI+P9YHjkQi7Nix\ng9bWVq5cucKFCxfm+JTcZ0of0ZScmhnYx4SMoJxumHUx6O/vp7u7m0cffbT4wn727FnWr18PQHNz\nM11dXQB0dXXR1NREeXk5S5cupba2lp6eHuLxOKlUivr6egDWrVvHmTNn5vqc5A7Vl0yT/fzfYl8y\nTV8y7XIiEXPMuhi88cYbfPOb36Ss7Iu/IpFIEAwGAaipqSGRSAAQj8cJh8PF7cLhMLFYjHg8TigU\nKq4PhULEYrHZRvIMU/qIpuSc6cwgOmiR/fwoIjpoER20nIw1iQn704SMoJxumFUxOHfuHNXV1Xz1\nq18tHhVMVGgdiYiI982qGHzwwQecO3eOnTt3cvjwYX7zm9/Q1tZGTU0N169fB8aOBmpqaoCxd/z9\n/f3Fx/f39xMOhycdCfT3999wpFDK+B5dZ2enJ5cL67ySZ6rlI0eOeCpPZ2dn8WgSIJPJkMvlizOD\nXC5PJpMpfr370u/pvvT74nIikbhhvpBIJO74/Tlx+ciRI57KM9XyxN8lt/NMtWzK/pwJX36qt/Yz\ndPHiRX7605+yb98+fvKTn1BVVcXmzZt56623GBoa4qmnnqK3t5fDhw+zf/9+Yr
EYr7zyCq+//jo+\nn48XX3yR7du3U19fz4EDB9i0aRNr1qwp+b2OHz/O/fffP5e486Kzs9OIw0cv5nzv0wEGrSwBvw8r\nmyfg940VgTw3rPvzYAWfDYy1gVb/6ZLiY61sji8tWsCQlb3ha/PBi/tzIhMygnLa7fz582zcuHHa\nbeZ0amlBoSW0ZcsW2tra2LNnT/HUUoC6ujo2bNjA3r178fv9tLS0FB/T0tJCe3s76XSaxsbGKQuB\nSUz4xwHm5Cwr80F25u9Zqhb6i8VgPpmwP03ICMrphjkfGcwnU44MZPZKHRkAxT/P5Mig1NdE7mQz\nOTLQFcgOuJU+nZtMyanrDOxjQkZQTjeoGIiIiIqBE0zpI5qSU/cmso8JGUE53aBiICIiKgZOMKWP\naErOUjODISuLlc25kGZqJuxPEzKCcrpBxUCMFBsexbqF001FZHoqBg4wpY9oSs5bnRm4ddRgwv40\nISMopxtUDOS2o6MGkVunYuAAU/qIpuTUdQb2MSEjKKcbVAxERETFwAmm9BFNyXmzmUFfMs1AOjPt\nNvPBhP1pQkZQTjfYcqM6ETdFBy18voDbMUSMpiMDB5jSRzQlp2YG9jEhIyinG1QMxGj+Mjx38ZmI\nidQmcoApfURTck73eQaJ1Px/bsFUTNifJmQE5XSDjgxERETFwAmm9BFNyamZgX1MyAjK6QYVAxER\nUTFwgil9RFNy6vMM7GNCRlBON6gYiIiIioETTOkjmpJTMwP7mJARlNMNKgYiIqJi4ART+oim5Jzr\nzKAvmaYvmbYpzdRM2J8mZATldIOKgdz2ooMW0UHL7RginqZi4ABT+oim5NTMwD4mZATldIOKgXhG\nXzJt632GFgf8tv1dIrc7Xz6fN+NtF3D8+HHuv/9+t2OIQ977dIBBa+xeQwG/DyubJ+AfmxcU/jxx\nXcGK8CI+G7CKxcTK5lkRXkQ+D72JFIsWlBGuDPAn1Qvn+VmJuO/8+fNs3Lhx2m10ZCC3teighZXN\nk0hlNTcQmYaKgQNM6SOaknMmM4MhK+v6raxN2J8mZATldIOKgXiK3ze700hjw6M3tI1E5NaoGDjA\nlHOPvZjTX+Jf5HTXGcy2eDjBi/tzIhMygnK6QcVAjFaqeEy37XxcfCZiIhUDB5jSRzQlp13XGTg9\nRDZhf5qQEZTTDSoGIiKiYuAEU/qIpuTU5xnYx4SMoJxuUDGQ256XhswiXlU+mwddu3aNSCRCIpGg\nurqa5uZmmpubGRkZoa2tjWg0yrJly9i1axcVFRUAHDt2jBMnTuD3+9m+fTurVq0CoLe3l/b2dizL\norGxka1bt9r37FzS2dlpxDsGU3LOdWbgL4Ns9ovlwhDZ7quRTdifJmQE5XTDrI4MysvLefrpp/n3\nf/93nn/+ef7rv/6L3t5eOjo6WLlyJa2trTQ0NNDR0QGMveCfPHmSAwcOsHv3biKRCIW7YEQiEXbs\n2EFraytXrlzhwoUL9j07MYbd9yWaju5iKjLZrIpBMBjkrrvuAqC6upoVK1YQi8U4e/Ys69evB6C5\nuZmuri4Aurq6aGpqory8nKVLl1JbW0tPTw/xeJxUKkV9fT0A69at48yZMzY8LXeZ8k7BSzkLt40o\nZbYzg/FXJc9Hq8hL+3MqJmQE5XTDnGcGV69epbe3l7vvvptEIkEwGASgpqaGRCIBQDweJxwOFx8T\nDoeJxWLE43FCoVBxfSgUIhaLzTWSCHDjVcm3cj2CyJ1oTr8iqVSKQ4cO8fTTTxdnAwU+h96JjT+v\nt7Oz05PLhXVeyTPV8pEjRzyVJ5PJ3DAfKCwX1uVyeTKZzE23Lyi1feENihP5vbY/Sy0fOXLEU3mm\nWp74u+R2nqmWTdmfMzHrW1hnMhn+9V//lTVr1vD4448D8Nxzz/HSSy8RDAaJx+O8/PLLHDp0iLfe\neguAzZs3A/D973+fJ598kq985Su8/PLLvP
baa8XwFy9e5Fvf+lbJ72nKLaw7O80YKnkpZ+H21eNv\nU134fy6XJ5O/+W2tZ3LL65oKPyOjOb60aAH1X6609Tl4aX9OxYSMoJx2c+wW1vl8nh/+8IfU1dUV\nCwHA2rVrOXXqFADvvPMODzzwQHH9u+++SyaTIRqNcvXqVerr6wkGgyxatIienh7y+TynT5/mwQcf\nnE0kTzHhHweYk9PO6wwSqSxWNk/VQvs/+MaE/WlCRlBON8zq1NIPPviA06dPs3z5cl544QUAvvGN\nb7Blyxba2trYs2dP8dRSgLq6OjZs2MDevXvx+/20tLQU20gtLS20t7eTTqdpbGxkzZo1Nj01kakN\nWVn6kml92I3I5/RJZw4w5dDRSznnq01UEPD7CPjLWP2nS2x7Dl7an1MxISMop930SWci0/CXwUA6\nc/MNRe4AKgYOMOGdApiT06l7EyVSWYas7M03nCET9qcJGUE53aBiIK6bz6uPdZ8ikdJUDBxwK+f2\nuskrOae7+hjs+zwDcPbiM6/sz+mYkBGU0w0qBiIiomLgBFP6iKbk1OcZ2MeEjKCcblAxEBERFQMn\nmNJHNCWnnTMDJ5mwP03ICMrpBhUDcdV8nkkkIlNTMXCAKX1EL+S82ZlEoJmBnUzICMrpBhUDERFR\nMXCCKX1EU3JqZmAfEzKCcrpBxUA8QVcGi7hLxcABpvQRvZRzuiuDnZwZDFlZ+ocsW/4uL+3PqZiQ\nEZTTDSoGckeLDY8yakgbSsRJKgYOMKWPaEpOzQzsY0JGUE43qBiIiIiKgRNM6SOaklPXGdjHhIyg\nnG5QMRDX6UwiEfepGDjAlD6iV3Le7DMG5mNm0JdM05dMz+nv8Mr+nI4JGUE53VDudgARtw1ZWWLD\nowT8ZfxJ9UK344i4wpfP5804VQM4fvw4999/v9sxxCZ9yTS9iVRx2crmCfh9k/5f6mtObF9T4Sdc\nGZi2IPQl01Qt9LNkod5HiTnOnz/Pxo0bp91GbSJxzUxuUjefBtM5ooPTX4AWHbQYsrLzlEhk/qgY\nOMCUPqJbOfuSaS5fGyY7w4PS+brOwF829t9Us4O+ZHrazCb83E3ICMrpBh3ryryLDloMWtlii8ZL\nEqksFeXZYkEY3zKKDlpkDbkATuRW6cjAAaace+xUTjvOzBlvvq8z8PmgN5G6actoIhN+7iZkBOV0\ng4qB2C46aE35QnqzVosXxIZHyebGWkYD6YzbcUTmhYqBA0zpI9qdszALsLI5Fgf8N6z7fXyEy9eG\n+b9k+pZbLW7cm8hfNtYyKgyLZ/LxnCb83E3ICMrpBs0MxDaFWQCMtVouXxsmnsqQzuQmncppiiEr\ny+VrwyTSmWL+IWtspqBrEuR2omLgAFP6iE7mjA2P2vbiX1bmA5dOQS31PGLDowz6s5OKgQk/dxMy\ngnK6QW0isYUJswARmZrRxcDus1bsYkofcaY5x+/nifu8L5mmf8hy9LRLfZ6BfUzICMrpBqPbRIUz\nVkr1bkudJy43N3G/FW4ZEfj8bnK9iRSLFpQxbGWpDPiJDlosWxJwLa+I2MPoYjCd8YVivguDKX3E\nUjknnhJauGVEwP/Fn61slgRZqj4/Y2giu29J7ebMYCr+Mugfsggv/qIQmvBzNyEjKKcbbttiMN50\nRxAw+fYDOpqYXBRuxc1uSX07SKSyLFowdoaRjkzlduCJX9uLFy+yd+9e9uzZw//8z//M+/cvXCQ1\n3cVSE5WaVxTOqe++9HsnYtqiL5kuXkg1Vb9z8YR3/OPf6Rf+PH7dkJW96Tn4c+HVmUFseJT+YYv+\nIYu+ZLr4c+9Lpvm/ZJpEKuO5mZYpPW7lnH+uHxnkcjmOHDnC9773PUKhEP/0T//EfffdR11dndvR\nbjDxl7pUG6pwnn0266cvmS721ad75wjcsF1fMl08J3/YyrJ0SYDBdJaqhX4G09kbvlZQ5oOKBWMv\n4KnRLL
l86XXlfh/RQYtQ5QKiAxZfXnEvv4+PkMnmKff7yGTzjOby+HxgZXMsWVjOQDqDvwwWlpcV\n/5zNfnFTNyubt/U0UtMUjhASqQxUVPFx/zDXhkeBsauXM7lccf9P9W9BxAtcLwYffvghtbW1LF26\nFICmpibOnj3ruWIw3RHDxK/V1NQUC0NViReAiUPZ8dsVBrKFxy9eODak9fkCk75WEPD7CPjHlq1s\nrvjCvGhBlpHR3Ofrv7iH/xcv3mUMFGcCX1wUVvj6wLgLrcb/uSCRmp+bzXlxZjBe7PMXfyvnJzuh\nMCZSYz8DK5snXLmAYStLmQ+qK8oJLw5M2U4qNcif7s3FTJnS41bO+ed6MYjFYoTD4eJyKBTiww8/\ndDGR88YPZZ2USI0ViDvxHbsXjS+oOeD6SIZ4KkNFuY/U6NjPavwR3JKF5cXi0T8ydiX3+IJSsUBH\nGmIf14vB7SiRSFBTU+N2jJvK5fLzfkfQ2fDqzGCiTCZDwL9gRtuOb60lUrmSR3CljsbGr1uyMF8s\nDIXWYOH/UHrd4PAwVZWVxRZiQWo0WywuhVbl+DOlZsquwXlnZ+ekd91eHMqXymkq1z/28tKlS/z3\nf/833/3udwF488038fl8bN68edK2586d4/r16/MdUUTEaMFgkMbGxmm3cf3IYMWKFVy9epVoNEoo\nFOKXv/wl//iP/1hy25s9GRERmR3Xjwxg7NTSH//4x2SzWTZu3Mhjjz3mdiQRkTuKJ4qBiIi4yxMX\nnYmIiLtUDERExP0B8kxdvHiRN954ozhX2LRpk9uRJmlvb6e7u5vq6mpeffVVt+NM6dq1a0QiERKJ\nBNXV1TQ3N9Pc3Ox2rBtYlsVLL73E6OgogUCAhx9+mCeeeMLtWFPK5XLs27ePUCjEvn373I5T0s6d\nO1m0aBFlZWX4/X7279/vdqSSUqkUR48e5ZNPPmF0dJRnn32Wu+++2+1YN/j00085dOhQcfmzzz7j\n7/7u7zw573z77bc5deoUo6Oj3HPPPWzbtq30hnkDZLPZ/Le//e38Z599lh8dHc3v2bMn/4c//MHt\nWJNcvHgx/9FHH+Wff/55t6NMKx6P5z/++ON8Pp/PJxKJ/DPPPOPJ/ZlKpfL5fD5vWVb++eefz/f1\n9bmcaGo/+9nP8ocPH84fOHDA7ShTamlpyQ8MDLgd46ba2tryx48fz+fz+Xwmk8kPDQ25nGh62Ww2\n//d///f5P/7xj25HmWRgYCDf0tKSHxkZyWez2fwPfvCDfHd3d8ltjWgTjb9lRXl5efGWFV5zzz33\nsHjxYrenaMLJAAADrUlEQVRj3FQwGOSuu+4CoLq6mhUrVhCPx90NVcLChWMXF6VSKbLZLOXl3jyQ\n7e/vp7u7m0cffZS8x8/H8Hq+4eFhfve73/Hoo48C4Pf7qaysdDnV9N5//32WLVvGl7/8ZbejTBII\njF04ODw8jGVZpNNpqqqqSm7rzd+uCe7EW1bMl6tXr9Lb20tDQ4PbUSbJ5XLs3buXP/zhD2zbts2T\nv2wAb7zxBt/85jcZGRlxO8q0fD4f//Iv/4LP5+PrX/86X/va19yONEk0GqW6uppIJMJHH31EQ0MD\nO3bsKL6oedG7777r2auQA4EAzzzzDDt37mTBggVs2rSJ+vr6ktsacWQgzkilUhw6dIinn36aiooK\nt+NMUlZWxsGDB3n99df53//9Xz7++GO3I01y7tw5qqur+epXv+r5d92vvPIKBw8e5Dvf+Q5vvvkm\nv/3tb92ONEk2m+Xy5cs89NBD7N+/n0wmw69+9Su3Y00pk8lw7tw5Hn74YbejlJRMJjl69CivvfYa\nkUiES5cucf78+ZLbGlEMQqEQ/f39xeX+/n5CoZCLicyXyWR49dVX+du//VseeOABt+NMa+nSpfzN\n3/wNFy9edDvKJB988AHnzp1j586dHD58mN/85jf8x3/8h9uxSvrSl74E
QF1dHQ8++KAnj67D4TBV\nVVWsXbuWQCBAU1MT3d3dbseaUnd3N3/5l39JdXW121FK+vDDD2loaKC2tpYlS5bw8MMPT/l7ZEQx\nGH/Likwmwy9/+UvWrl3rdixj5fN5fvjDH1JXV8fjjz/udpySkskkQ0NDAAwMDHDhwgWWL1/ucqrJ\nvvGNb3DkyBEikQjPPfcc9957L9/+9rfdjjVJOp0utrGSySTd3d2e3J/BYJDa2lp6enrI5XKcP3+e\nv/7rv3Y71pTeffddmpqa3I4xpVWrVnH58mUGBwcZHR2lu7ub1atXl9zWiJmB3+/n2WefpbW1tXhq\nqdc+7wDg0KFD/Pa3v2VgYIBnn32WJ598kg0bNrgda5IPPviA06dPs3z5cl544QVg7EVtzZo1Lif7\nwvXr14lEIuRyOYLBIE888QT33Xef27Fuymfz5z/bJZFIcPDgQQCWLFnC448/PuWLgtt27txJJBIh\nmUyyfPlynnrqKbcjlZRKpXj//ff5h3/4B7ejTKmyspItW7Zw8OBBLMti9erV3HvvvSW31e0oRETE\njDaRiIg4S8VARERUDERERMVARERQMRAREVQMREQEFQMREUHFQEREgP8HXM8GaS2rZLkAAAAASUVO\nRK5CYII=\n", | |

| "text": [ | |

| "<matplotlib.figure.Figure at 0x10da5df10>" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 13 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "We can see that although there are date/time fields in the dataset, they are stored as plain strings (`object` dtype) rather than in a specialized format such as `datetime`." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments.st_time.dtype" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 15, | |

| "text": [ | |

| "dtype('O')" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 15 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Our first order of business will be to convert these data to `datetime`. The `strptime` method of Python's `datetime` class parses a string representation of a date and/or time according to an explicit format specification." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "from datetime import datetime\n", | |

| "datetime.strptime(segments.st_time.iloc[0], '%m/%d/%y %H:%M')" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 16, | |

| "text": [ | |

| "datetime.datetime(2009, 2, 10, 16, 3)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 16 | |

| }, | |
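As a standalone sketch (it uses a literal copy of the first `st_time` value rather than the `segments` table), the format codes break down as `%m` month, `%d` day, `%y` two-digit year and `%H:%M` 24-hour time. A string that does not fit the format raises `ValueError` rather than being guessed at.

```python
from datetime import datetime

# A literal copy of the first st_time value shown above
raw = '2/10/09 16:03'

# %m = month, %d = day, %y = two-digit year, %H:%M = 24-hour time
stamp = datetime.strptime(raw, '%m/%d/%y %H:%M')
print(stamp)  # 2009-02-10 16:03:00

# A string that does not match the format raises ValueError
try:
    datetime.strptime('2009-02-10 16:03', '%m/%d/%y %H:%M')
except ValueError as err:
    print('could not parse:', err)
```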

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "The `dateutil` package includes a parser that attempts to detect the format of the date strings, and convert them automatically." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "from dateutil.parser import parse" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 17 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "parse(segments.st_time.iloc[0])" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 18, | |

| "text": [ | |

| "datetime.datetime(2009, 2, 10, 16, 3)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 18 | |

| }, | |
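Because `parse` guesses the layout, ambiguous numeric dates deserve care: by default it reads the first field as the month, and its `dayfirst` option flips that. A small sketch with literal strings (not taken from the dataset file) that all describe the same moment:

```python
from datetime import datetime

from dateutil.parser import parse

expected = datetime(2009, 2, 10, 16, 3)

# Ambiguous numeric dates are read month-first by default
us_style = parse('2/10/09 16:03')

# dayfirst=True reads the first field as the day instead
eu_style = parse('10/2/09 16:03', dayfirst=True)

# ISO 8601 strings need no hints at all
iso_style = parse('2009-02-10T16:03')

print(us_style == expected, eu_style == expected, iso_style == expected)
# True True True
```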

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "We can convert all the dates in a particular column by using the `apply` method." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments.st_time.apply(lambda d: datetime.strptime(d, '%m/%d/%y %H:%M'))" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 19, | |

| "text": [ | |

| "0 2009-02-10 16:03:00\n", | |

| "1 2009-04-06 14:31:00\n", | |

| "2 2009-04-06 14:36:00\n", | |

| "3 2009-04-10 17:58:00\n", | |

| "4 2009-04-10 17:59:00\n", | |

| "5 2010-03-20 16:06:00\n", | |

| "6 2010-03-20 18:05:00\n", | |

| "7 2011-05-04 11:28:00\n", | |

| "8 2010-06-05 11:23:00\n", | |

| "9 2010-06-08 11:03:00\n", | |

| "...\n", | |

| "262515 2010-05-31 14:27:00\n", | |

| "262516 2010-06-05 05:25:00\n", | |

| "262517 2010-06-27 02:35:00\n", | |

| "262518 2010-07-01 03:49:00\n", | |

| "262519 2010-07-02 03:30:00\n", | |

| "262520 2010-06-13 10:32:00\n", | |

| "262521 2010-06-15 12:49:00\n", | |

| "262522 2010-06-15 21:32:00\n", | |

| "262523 2010-06-17 19:16:00\n", | |

| "262524 2010-06-18 02:52:00\n", | |

| "262525 2010-06-18 10:19:00\n", | |

| "Name: st_time, Length: 262526, dtype: datetime64[ns]" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 19 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "As a convenience, Pandas has a `to_datetime` function that will parse and convert an entire Series of formatted strings into `datetime` objects." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.to_datetime(segments.st_time)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 20, | |

| "text": [ | |

| "0 2009-02-10 16:03:00\n", | |

| "1 2009-04-06 14:31:00\n", | |

| "2 2009-04-06 14:36:00\n", | |

| "3 2009-04-10 17:58:00\n", | |

| "4 2009-04-10 17:59:00\n", | |

| "5 2010-03-20 16:06:00\n", | |

| "6 2010-03-20 18:05:00\n", | |

| "7 2011-05-04 11:28:00\n", | |

| "8 2010-06-05 11:23:00\n", | |

| "9 2010-06-08 11:03:00\n", | |

| "...\n", | |

| "262515 2010-05-31 14:27:00\n", | |

| "262516 2010-06-05 05:25:00\n", | |

| "262517 2010-06-27 02:35:00\n", | |

| "262518 2010-07-01 03:49:00\n", | |

| "262519 2010-07-02 03:30:00\n", | |

| "262520 2010-06-13 10:32:00\n", | |

| "262521 2010-06-15 12:49:00\n", | |

| "262522 2010-06-15 21:32:00\n", | |

| "262523 2010-06-17 19:16:00\n", | |

| "262524 2010-06-18 02:52:00\n", | |

| "262525 2010-06-18 10:19:00\n", | |

| "Name: st_time, Length: 262526, dtype: datetime64[ns]" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 20 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Pandas also has a custom NA value for missing datetime objects, `NaT`." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.to_datetime([None])" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 21, | |

| "text": [ | |

| "<class 'pandas.tseries.index.DatetimeIndex'>\n", | |

| "[NaT]\n", | |

| "Length: 1, Freq: None, Timezone: None" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 21 | |

| }, | |
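`NaT` also appears when parsing fails and pandas is asked to coerce rather than raise. A minimal sketch with made-up strings, using the `errors='coerce'` option of `pd.to_datetime` in current pandas (the 2013-era release used by this notebook may behave differently):

```python
import pandas as pd

# Made-up input: one valid timestamp, one garbage string, one missing value
raw = pd.Series(['2/10/09 16:03', 'not a date', None])

# errors='coerce' turns anything unparseable (and missing values) into NaT
parsed = pd.to_datetime(raw, format='%m/%d/%y %H:%M', errors='coerce')

print(parsed)
print(parsed.isna().sum())  # 2
```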

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Also, if `to_datetime()` has trouble inferring a particular date/time format, you can pass an explicit `strptime`-style format string using the `format=` argument." | |

| ] | |

| }, | |
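A sketch, assuming a reasonably recent pandas, that passes the same `strptime` codes used earlier to `format=`. It checks that the vectorized result agrees with the element-wise `apply` approach and shows how an explicit format pins down a day-first string. The first three sample strings are copied from the output above; the day-first one is made up.

```python
from datetime import datetime

import pandas as pd

fmt = '%m/%d/%y %H:%M'
raw = pd.Series(['2/10/09 16:03', '4/6/09 14:31', '3/20/10 18:05'])

# Vectorized parsing with an explicit strptime-style format
fast = pd.to_datetime(raw, format=fmt)

# Element-by-element parsing, as in the apply() example above
slow = raw.apply(lambda d: datetime.strptime(d, fmt))

# Both approaches produce the same timestamps
print(all(a == b for a, b in zip(fast, slow)))  # True

# An explicit format also settles a day-first string unambiguously
day_first = pd.to_datetime(['10/02/2009 16:03'], format='%d/%m/%Y %H:%M')
print(day_first[0])  # 2009-02-10 16:03:00
```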

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Merging and joining DataFrame objects" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Now that we have the vessel transit information as we need it, we may want a little more information regarding the vessels themselves. In the `data/AIS` folder there is a second table that contains information about each of the ships that traveled the segments in the `segments` table." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "vessels = pd.read_csv(\"data/AIS/vessel_information.csv\", index_col='mmsi')\n", | |

| "vessels.head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 22, | |

| "text": [ | |

| " num_names names sov \\\n", | |

| "mmsi \n", | |

| "1 8 Bil Holman Dredge/Dredge Capt Frank/Emo/Offsho... Y \n", | |

| "9 3 000000009/Raven/Shearwater N \n", | |

| "21 1 Us Gov Vessel Y \n", | |

| "74 2 Mcfaul/Sarah Bell N \n", | |

| "103 3 Ron G/Us Navy Warship 103/Us Warship 103 Y \n", | |

| "\n", | |

| " flag flag_type num_loas loa \\\n", | |

| "mmsi \n", | |

| "1 Unknown Unknown 7 42.0/48.0/57.0/90.0/138.0/154.0/156.0 \n", | |

| "9 Unknown Unknown 2 50.0/62.0 \n", | |

| "21 Unknown Unknown 1 208.0 \n", | |

| "74 Unknown Unknown 1 155.0 \n", | |

| "103 Unknown Unknown 2 26.0/155.0 \n", | |

| "\n", | |

| " max_loa num_types type \n", | |

| "mmsi \n", | |

| "1 156 4 Dredging/MilOps/Reserved/Towing \n", | |

| "9 62 2 Pleasure/Tug \n", | |

| "21 208 1 Unknown \n", | |

| "74 155 1 Unknown \n", | |

| "103 155 2 Tanker/Unknown " | |

| ] | |

| } | |

| ], | |

| "prompt_number": 22 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "[v for v in vessels.type.unique() if '/' not in v]" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 23, | |

| "text": [ | |

| "['Unknown',\n", | |

| " 'Other',\n", | |

| " 'Tug',\n", | |

| " 'Towing',\n", | |

| " 'Pleasure',\n", | |

| " 'Cargo',\n", | |

| " 'WIG',\n", | |

| " 'Fishing',\n", | |

| " 'BigTow',\n", | |

| " 'MilOps',\n", | |

| " 'Tanker',\n", | |

| " 'Passenger',\n", | |

| " 'SAR',\n", | |

| " 'Sailing',\n", | |

| " 'Reserved',\n", | |

| " 'Law',\n", | |

| " 'Dredging',\n", | |

| " 'AntiPol',\n", | |

| " 'Pilot',\n", | |

| " 'HSC',\n", | |

| " 'Diving',\n", | |

| " 'Resol-18',\n", | |

| " 'Tender',\n", | |

| " 'Spare',\n", | |

| " 'Medical']" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 23 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "vessels.type.value_counts()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 28, | |

| "text": [ | |

| "Cargo 5622\n", | |

| "Tanker 2440\n", | |

| "Pleasure 601\n", | |

| "Tug 221\n", | |

| "Sailing 205\n", | |

| "Fishing 200\n", | |

| "Other 178\n", | |

| "Passenger 150\n", | |

| "Towing 117\n", | |

| "Unknown 106\n", | |

| "...\n", | |

| "BigTow/Tanker/Towing/Tug 1\n", | |

| "Fishing/SAR/Unknown 1\n", | |

| "BigTow/Reserved/Towing/Tug/WIG 1\n", | |

| "Reserved/Tanker/Towing/Tug 1\n", | |

| "Cargo/Reserved/Unknown 1\n", | |

| "Reserved/Towing/Tug 1\n", | |

| "BigTow/Unknown 1\n", | |

| "Fishing/Law 1\n", | |

| "BigTow/Towing/WIG 1\n", | |

| "Towing/Unknown/WIG 1\n", | |

| "AntiPol/Fishing/Pleasure 1\n", | |

| "Length: 206, dtype: int64" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 28 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "The challenge, however, is that several ships have travelled multiple segments, so there is not a one-to-one relationship between the rows of the two tables. The table of vessel information has a *one-to-many* relationship with the segments.\n", | |

| "\n", | |

| "In Pandas, we can combine tables according to the value of one or more *keys* that are used to identify rows, much like an index. Using a trivial example:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "df1 = pd.DataFrame(dict(id=range(4), age=np.random.randint(18, 31, size=4)))\n", | |

| "df2 = pd.DataFrame(dict(id=list(range(3))*2, score=np.random.random(size=6)))\n", | |

| "\n", | |

| "df1, df2" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 30, | |

| "text": [ | |

| "( age id\n", | |

| "0 27 0\n", | |

| "1 21 1\n", | |

| "2 30 2\n", | |

| "3 29 3,\n", | |

| " id score\n", | |

| "0 0 0.471604\n", | |

| "1 1 0.910118\n", | |

| "2 2 0.163896\n", | |

| "3 0 0.811360\n", | |

| "4 1 0.799790\n", | |

| "5 2 0.951837)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 30 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.merge(df1, df2)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 31, | |

| "text": [ | |

| " age id score\n", | |

| "0 27 0 0.471604\n", | |

| "1 27 0 0.811360\n", | |

| "2 21 1 0.910118\n", | |

| "3 21 1 0.799790\n", | |

| "4 30 2 0.163896\n", | |

| "5 30 2 0.951837" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 31 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Notice that without any information about which column to use as a key, Pandas did the right thing and used the `id` column in both tables. Unless specified otherwise, `merge` will use any common column names as keys for merging the tables.\n", | |

| "\n", | |

| "Notice also that `id=3` from `df1` was omitted from the merged table. This is because, by default, `merge` performs an **inner join** on the tables, meaning that the merged table represents an intersection of the two tables." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.merge(df1, df2, how='outer')" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 32, | |

| "text": [ | |

| " age id score\n", | |

| "0 27 0 0.471604\n", | |

| "1 27 0 0.811360\n", | |

| "2 21 1 0.910118\n", | |

| "3 21 1 0.799790\n", | |

| "4 30 2 0.163896\n", | |

| "5 30 2 0.951837\n", | |

| "6 29 3 NaN" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 32 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "The **outer join** above yields the union of the two tables, so all rows are represented, with missing values inserted as appropriate. One can also perform **left** and **right** joins to include all rows of the left or right table (*i.e.* the first or second argument to `merge`, respectively), but not necessarily those of the other." | |

| ] | |

| }, | |
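To make the left/right distinction concrete, here is a small sketch with fixed, made-up values standing in for the random `df1` and `df2` (the names `left` and `right` are illustrative):

```python
import pandas as pd

# Deterministic stand-ins for df1 and df2 (values are made up)
left = pd.DataFrame({'id': [0, 1, 2, 3], 'age': [27, 21, 30, 29]})
right = pd.DataFrame({'id': [0, 1, 2, 0, 1, 2],
                      'score': [0.47, 0.91, 0.16, 0.81, 0.80, 0.95]})

# Left join: every row of the first argument is kept;
# id=3 has no match in `right`, so its score is NaN
left_join = pd.merge(left, right, on='id', how='left')

# Right join: every row of the second argument is kept;
# id=3 never appears in `right`, so it is dropped
right_join = pd.merge(left, right, on='id', how='right')

print(left_join)
print(right_join)
```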

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Looking at the two datasets that we wish to merge:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments.head(1)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 33, | |

| "text": [ | |

| " mmsi name transit segment seg_length avg_sog min_sog max_sog \\\n", | |

| "0 1 Us Govt Ves 1 1 5.1 13.2 9.2 14.5 \n", | |

| "\n", | |

| " pdgt10 st_time end_time \n", | |

| "0 96.5 2/10/09 16:03 2/10/09 16:27 " | |

| ] | |

| } | |

| ], | |

| "prompt_number": 33 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "vessels.head(1)" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 34, | |

| "text": [ | |

| " num_names names sov \\\n", | |

| "mmsi \n", | |

| "1 8 Bil Holman Dredge/Dredge Capt Frank/Emo/Offsho... Y \n", | |

| "\n", | |

| " flag flag_type num_loas loa \\\n", | |

| "mmsi \n", | |

| "1 Unknown Unknown 7 42.0/48.0/57.0/90.0/138.0/154.0/156.0 \n", | |

| "\n", | |

| " max_loa num_types type \n", | |

| "mmsi \n", | |

| "1 156 4 Dredging/MilOps/Reserved/Towing " | |

| ] | |

| } | |

| ], | |

| "prompt_number": 34 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "we see that there is an `mmsi` value (a vessel identifier) in each table, but it is used as the index of the `vessels` table. In this case, we have to specify that the join should use the index of this table (`left_index=True`) and the `mmsi` column of the other (`right_on='mmsi'`)." | |

| ] | |

| }, | |
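The same index-to-column match can be seen on a pair of tiny hypothetical tables (`vessels_toy` and `segments_toy`, with made-up values). Note that `mmsi` 42, which has no vessel record, disappears under the default inner join:

```python
import pandas as pd

# Hypothetical miniature tables (made-up values)
vessels_toy = pd.DataFrame({'type': ['Cargo', 'Tanker']},
                           index=pd.Index([1, 9], name='mmsi'))
segments_toy = pd.DataFrame({'mmsi': [1, 1, 9, 42],
                             'seg_length': [5.1, 13.5, 4.3, 7.0]})

# Match the index of the left table against the mmsi column of the right table
merged = pd.merge(vessels_toy, segments_toy, left_index=True, right_on='mmsi')
print(merged)
```

Vessel 1 appears twice because it has two segments, which is the one-to-many relationship described earlier.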

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments_merged = pd.merge(vessels, segments, left_index=True, right_on='mmsi')" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 35 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments_merged.head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 36, | |

| "text": [ | |

| "<class 'pandas.core.frame.DataFrame'>\n", | |

| "Int64Index: 5 entries, 0 to 4\n", | |

| "Data columns (total 21 columns):\n", | |

| "num_names 5 non-null values\n", | |

| "names 5 non-null values\n", | |

| "sov 5 non-null values\n", | |

| "flag 5 non-null values\n", | |

| "flag_type 5 non-null values\n", | |

| "num_loas 5 non-null values\n", | |

| "loa 5 non-null values\n", | |

| "max_loa 5 non-null values\n", | |

| "num_types 5 non-null values\n", | |

| "type 5 non-null values\n", | |

| "mmsi 5 non-null values\n", | |

| "name 5 non-null values\n", | |

| "transit 5 non-null values\n", | |

| "segment 5 non-null values\n", | |

| "seg_length 5 non-null values\n", | |

| "avg_sog 5 non-null values\n", | |

| "min_sog 5 non-null values\n", | |

| "max_sog 5 non-null values\n", | |

| "pdgt10 5 non-null values\n", | |

| "st_time 5 non-null values\n", | |

| "end_time 5 non-null values\n", | |

| "dtypes: float64(6), int64(6), object(9)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 36 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "In this case, the default inner join is suitable; we are not interested in observations from either table that do not have corresponding entries in the other. \n", | |

| "\n", | |

| "Notice that the `mmsi` field that was the index of the `vessels` table is no longer an index on the merged table." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here, we used the `merge` function to perform the merge; we could also have used the `merge` method for either of the tables:" | |

| ] | |

| }, | |
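Calling the method on the other table also works, but the argument roles swap (`left_on`/`right_index` instead of `left_index`/`right_on`), and the column order of the result follows whichever table comes first. A sketch on hypothetical toy tables with made-up values, comparing the two results after putting them in the same order:

```python
import pandas as pd

# Hypothetical miniature tables (made-up values)
vessels_toy = pd.DataFrame({'type': ['Cargo', 'Tanker']},
                           index=pd.Index([1, 9], name='mmsi'))
segments_toy = pd.DataFrame({'mmsi': [1, 1, 9, 42],
                             'seg_length': [5.1, 13.5, 4.3, 7.0]})

# Method on the vessels table: its index matches the mmsi column of segments
a = vessels_toy.merge(segments_toy, left_index=True, right_on='mmsi')

# Method on the segments table: the argument roles swap
b = segments_toy.merge(vessels_toy, left_on='mmsi', right_index=True)

# Put both results in the same column and row order before comparing
cols = ['mmsi', 'seg_length', 'type']
a_sorted = a[cols].reset_index(drop=True).sort_values(cols).reset_index(drop=True)
b_sorted = b[cols].reset_index(drop=True).sort_values(cols).reset_index(drop=True)
print(a_sorted.equals(b_sorted))  # True
```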

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "vessels.merge(segments, left_index=True, right_on='mmsi').head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 37, | |

| "text": [ | |

| "<class 'pandas.core.frame.DataFrame'>\n", | |

| "Int64Index: 5 entries, 0 to 4\n", | |

| "Data columns (total 21 columns):\n", | |

| "num_names 5 non-null values\n", | |

| "names 5 non-null values\n", | |

| "sov 5 non-null values\n", | |

| "flag 5 non-null values\n", | |

| "flag_type 5 non-null values\n", | |

| "num_loas 5 non-null values\n", | |

| "loa 5 non-null values\n", | |

| "max_loa 5 non-null values\n", | |

| "num_types 5 non-null values\n", | |

| "type 5 non-null values\n", | |

| "mmsi 5 non-null values\n", | |

| "name 5 non-null values\n", | |

| "transit 5 non-null values\n", | |

| "segment 5 non-null values\n", | |

| "seg_length 5 non-null values\n", | |

| "avg_sog 5 non-null values\n", | |

| "min_sog 5 non-null values\n", | |

| "max_sog 5 non-null values\n", | |

| "pdgt10 5 non-null values\n", | |

| "st_time 5 non-null values\n", | |

| "end_time 5 non-null values\n", | |

| "dtypes: float64(6), int64(6), object(9)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 37 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Occasionally, there will be fields with the same name in both tables that we do not wish to use to join the tables; they may contain different information, despite having the same name. In this case, Pandas will by default append suffixes `_x` and `_y` to the columns to uniquely identify them." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "segments['type'] = 'foo'\n", | |

| "pd.merge(vessels, segments, left_index=True, right_on='mmsi').head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 38, | |

| "text": [ | |

| "<class 'pandas.core.frame.DataFrame'>\n", | |

| "Int64Index: 5 entries, 0 to 4\n", | |

| "Data columns (total 22 columns):\n", | |

| "num_names 5 non-null values\n", | |

| "names 5 non-null values\n", | |

| "sov 5 non-null values\n", | |

| "flag 5 non-null values\n", | |

| "flag_type 5 non-null values\n", | |

| "num_loas 5 non-null values\n", | |

| "loa 5 non-null values\n", | |

| "max_loa 5 non-null values\n", | |

| "num_types 5 non-null values\n", | |

| "type_x 5 non-null values\n", | |

| "mmsi 5 non-null values\n", | |

| "name 5 non-null values\n", | |

| "transit 5 non-null values\n", | |

| "segment 5 non-null values\n", | |

| "seg_length 5 non-null values\n", | |

| "avg_sog 5 non-null values\n", | |

| "min_sog 5 non-null values\n", | |

| "max_sog 5 non-null values\n", | |

| "pdgt10 5 non-null values\n", | |

| "st_time 5 non-null values\n", | |

| "end_time 5 non-null values\n", | |

| "type_y 5 non-null values\n", | |

| "dtypes: float64(6), int64(6), object(10)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 38 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "This behavior can be overridden by specifying a `suffixes` argument, containing the two suffixes to be used for the overlapping columns of the left and right tables, respectively." | |

| ] | |

| }, | |
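For example, here is a sketch with hypothetical toy tables that both carry a `type` column. The suffixes `'_vessel'` and `'_segment'` are illustrative choices, not names pandas requires:

```python
import pandas as pd

# Hypothetical tables that both have a column called 'type' (made-up values)
vessels_toy = pd.DataFrame({'type': ['Cargo', 'Tanker']},
                           index=pd.Index([1, 9], name='mmsi'))
segments_toy = pd.DataFrame({'mmsi': [1, 9], 'type': ['foo', 'foo']})

# Custom suffixes replace the default _x / _y on the clashing column
merged = pd.merge(vessels_toy, segments_toy, left_index=True,
                  right_on='mmsi', suffixes=('_vessel', '_segment'))
print(merged.columns.tolist())
```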

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "## Concatenation\n", | |

| "\n", | |

| "A common data manipulation is appending rows or columns to a dataset that already conform to the dimensions of the existing rows or columns, respectively. In NumPy, this is done either with `concatenate` or the convenience indexing objects `r_` and `c_`:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "np.concatenate([np.random.random(5), np.random.random(5)])" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 39, | |

| "text": [ | |

| "array([ 0.87689804, 0.87175082, 0.79683534, 0.48575766, 0.44686505,\n", | |

| " 0.96962006, 0.6165222 , 0.02947637, 0.034778 , 0.16926134])" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 39 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "np.r_[np.random.random(5), np.random.random(5)]" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 40, | |

| "text": [ | |

| "array([ 0.51606204, 0.16778644, 0.11720138, 0.47316661, 0.04843167,\n", | |

| " 0.83395133, 0.15165154, 0.38555231, 0.91867151, 0.7072754 ])" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 40 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "np.c_[np.random.random(5), np.random.random(5)]" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 41, | |

| "text": [ | |

| "array([[ 0.02698923, 0.34078316],\n", | |

| " [ 0.42019534, 0.22171457],\n", | |

| " [ 0.8503663 , 0.78958016],\n", | |

| " [ 0.21265774, 0.27176873],\n", | |

| " [ 0.65934945, 0.53319755]])" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 41 | |

| }, | |
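With 2-D arrays, the `axis` argument of `concatenate` chooses between appending rows and appending columns, and `r_`/`c_` line up with those two choices. A quick sketch with arbitrary integer arrays:

```python
import numpy as np

# Two small 2-D arrays with matching shapes (values are arbitrary)
a = np.arange(6).reshape(2, 3)
b = np.arange(6, 12).reshape(2, 3)

# Stack rows (axis=0) or columns (axis=1) with concatenate
by_rows = np.concatenate([a, b], axis=0)  # shape (4, 3)
by_cols = np.concatenate([a, b], axis=1)  # shape (2, 6)

# r_ joins along the first axis; for 2-D inputs c_ joins along the last axis
print(np.array_equal(np.r_[a, b], by_rows))  # True
print(np.array_equal(np.c_[a, b], by_cols))  # True
```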

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "This operation is also called *binding* or *stacking*.\n", | |

| "\n", | |

| "With Pandas' indexed data structures, there are additional considerations, as the overlap in index values between two data structures affects how they are concatenated.\n", | |

| "\n", | |

| "Let's import two microbiome datasets, each consisting of counts of microorganisms from a particular patient. We will use the first column of each dataset as the index." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1 = pd.read_excel('data/microbiome/MID1.xls', 'Sheet 1', index_col=0, header=None)\n", | |

| "mb2 = pd.read_excel('data/microbiome/MID2.xls', 'Sheet 1', index_col=0, header=None)\n", | |

| "mb1.shape, mb2.shape" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 42, | |

| "text": [ | |

| "((272, 1), (288, 1))" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 42 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 43, | |

| "text": [ | |

| " 1\n", | |

| "0 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 7\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Pyrodictiaceae Pyrolobus 2\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Sulfolobales Sulfolobaceae Stygiolobus 3\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Thermoproteales Thermofilaceae Thermofilum 3\n", | |

| "Archaea \"Euryarchaeota\" \"Methanomicrobia\" Methanocellales Methanocellaceae Methanocella 7" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 43 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Let's give the index and columns meaningful labels:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.columns = mb2.columns = ['Count']" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 44 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.index.name = mb2.index.name = 'Taxon'" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [], | |

| "prompt_number": 45 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 46, | |

| "text": [ | |

| " Count\n", | |

| "Taxon \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 7\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Pyrodictiaceae Pyrolobus 2\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Sulfolobales Sulfolobaceae Stygiolobus 3\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Thermoproteales Thermofilaceae Thermofilum 3\n", | |

| "Archaea \"Euryarchaeota\" \"Methanomicrobia\" Methanocellales Methanocellaceae Methanocella 7" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 46 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

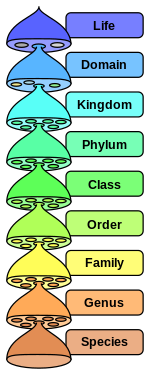

| "source": [ | |

| "The index of these data is the unique biological classification of each organism, beginning with *domain*, *phylum*, *class*, and for some organisms, going all the way down to the genus level.\n", | |

| "\n", | |

| "" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.index[:3]" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 47, | |

| "text": [ | |

| "Index([u'Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera', u'Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Pyrodictiaceae Pyrolobus', u'Archaea \"Crenarchaeota\" Thermoprotei Sulfolobales Sulfolobaceae Stygiolobus'], dtype=object)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 47 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.index.is_unique" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 48, | |

| "text": [ | |

| "True" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 48 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "If we concatenate along `axis=0` (the default), we will obtain another DataFrame with the rows concatenated:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], axis=0).shape" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 49, | |

| "text": [ | |

| "(560, 1)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 49 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "However, the index is no longer unique, due to overlap between the two DataFrames." | |

| ] | |

| }, | |
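If duplicate index labels are undesirable, `pd.concat` can either refuse to build them (`verify_integrity=True`) or record each row's origin in an extra outer index level (`keys=`). The sketch below uses hypothetical toy frames `mb1_toy` and `mb2_toy`. Their taxon labels are abbreviated to the genus, and their counts are borrowed from the output above.

```python
import pandas as pd

# Toy stand-ins for mb1 and mb2 (genus-only labels, small subset of rows)
mb1_toy = pd.DataFrame({'Count': [7, 2]},
                       index=pd.Index(['Ignisphaera', 'Pyrolobus'], name='Taxon'))
mb2_toy = pd.DataFrame({'Count': [23, 14]},
                       index=pd.Index(['Ignisphaera', 'Caldisphaera'], name='Taxon'))

# verify_integrity=True refuses to build an index with duplicate labels
overlap_detected = False
try:
    pd.concat([mb1_toy, mb2_toy], axis=0, verify_integrity=True)
except ValueError as err:
    overlap_detected = True
    print('overlap detected:', err)

# keys= adds an outer index level recording which frame each row came from
stacked = pd.concat([mb1_toy, mb2_toy], axis=0, keys=['MID1', 'MID2'])
print(stacked)
print(stacked.index.is_unique)  # True
```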

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], axis=0).index.is_unique" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 50, | |

| "text": [ | |

| "False" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 50 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Concatenating along `axis=1` joins the tables column-wise, aligning rows on the indices of the two DataFrames; taxa absent from one table receive `NaN` in that table's column." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], axis=1).shape" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 51, | |

| "text": [ | |

| "(438, 2)" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 51 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], axis=1).head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 52, | |

| "text": [ | |

| " Count \\\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Acidilobales Acidilobaceae Acidilobus NaN \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Acidilobales Caldisphaeraceae Caldisphaera NaN \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 7 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Sulfophobococcus NaN \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Thermosphaera NaN \n", | |

| "\n", | |

| " Count \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Acidilobales Acidilobaceae Acidilobus 2 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Acidilobales Caldisphaeraceae Caldisphaera 14 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 23 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Sulfophobococcus 1 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Thermosphaera 2 " | |

| ] | |

| } | |

| ], | |

| "prompt_number": 52 | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], axis=1).values[:5]" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 53, | |

| "text": [ | |

| "array([[ nan, 2.],\n", | |

| " [ nan, 14.],\n", | |

| " [ 7., 23.],\n", | |

| " [ nan, 1.],\n", | |

| " [ nan, 2.]])" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 53 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "If we are only interested in taxa that are included in both DataFrames, we can pass the `join='inner'` argument." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], axis=1, join='inner').head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 54, | |

| "text": [ | |

| " Count \\\n", | |

| "Taxon \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 7 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Pyrodictiaceae Pyrolobus 2 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Sulfolobales Sulfolobaceae Stygiolobus 3 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Thermoproteales Thermofilaceae Thermofilum 3 \n", | |

| "Archaea \"Euryarchaeota\" \"Methanomicrobia\" Methanocellales Methanocellaceae Methanocella 7 \n", | |

| "\n", | |

| " Count \n", | |

| "Taxon \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 23 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Pyrodictiaceae Pyrolobus 2 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Sulfolobales Sulfolobaceae Stygiolobus 10 \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Thermoproteales Thermofilaceae Thermofilum 9 \n", | |

| "Archaea \"Euryarchaeota\" \"Methanomicrobia\" Methanocellales Methanocellaceae Methanocella 9 " | |

| ] | |

| } | |

| ], | |

| "prompt_number": 54 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "To use the second table to fill in values (and rows) missing from the first table, we can use `combine_first`." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "mb1.combine_first(mb2).head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "metadata": {}, | |

| "output_type": "pyout", | |

| "prompt_number": 55, | |

| "text": [ | |

| " Count\n", | |

| "Taxon \n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Acidilobales Acidilobaceae Acidilobus 2\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Acidilobales Caldisphaeraceae Caldisphaera 14\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Ignisphaera 7\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Sulfophobococcus 1\n", | |

| "Archaea \"Crenarchaeota\" Thermoprotei Desulfurococcales Desulfurococcaceae Thermosphaera 2" | |

| ] | |

| } | |

| ], | |

| "prompt_number": 55 | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "We can also pass `keys` to create a hierarchical index whose outer level identifies the table each row came from." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "collapsed": false, | |

| "input": [ | |

| "pd.concat([mb1, mb2], keys=['patient1', 'patient2']).head()" | |

| ], | |

| "language": "python", | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |