-

-

Save justmarkham/15e98a78d2bf541d168006d89920c63f to your computer and use it in GitHub Desktop.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| { | |

| "cells": [ | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "# Office Hours session 2" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Starter code (copy from here: http://bit.ly/complex-pipeline)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 1, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "import pandas as pd\n", | |

| "from sklearn.impute import SimpleImputer\n", | |

| "from sklearn.preprocessing import OneHotEncoder\n", | |

| "from sklearn.feature_extraction.text import CountVectorizer\n", | |

| "from sklearn.linear_model import LogisticRegression\n", | |

| "from sklearn.compose import make_column_transformer\n", | |

| "from sklearn.pipeline import make_pipeline" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 2, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "cols = ['Parch', 'Fare', 'Embarked', 'Sex', 'Name', 'Age']" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 3, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "df = pd.read_csv('http://bit.ly/kaggletrain')\n", | |

| "X = df[cols]\n", | |

| "y = df['Survived']" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 4, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "df_new = pd.read_csv('http://bit.ly/kaggletest')\n", | |

| "X_new = df_new[cols]" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 5, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "imp_constant = SimpleImputer(strategy='constant', fill_value='missing')\n", | |

| "ohe = OneHotEncoder()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 6, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "imp_ohe = make_pipeline(imp_constant, ohe)\n", | |

| "vect = CountVectorizer()\n", | |

| "imp = SimpleImputer()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 7, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 8, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "logreg = LogisticRegression(solver='liblinear', random_state=1)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 9, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 1, 0, 1,\n", | |

| " 1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1,\n", | |

| " 1, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1,\n", | |

| " 1, 0, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1, 1,\n", | |

| " 1, 1, 1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0,\n", | |

| " 0, 1, 1, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0,\n", | |

| " 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0,\n", | |

| " 1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1,\n", | |

| " 1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1,\n", | |

| " 0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0,\n", | |

| " 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1,\n", | |

| " 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1,\n", | |

| " 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 0,\n", | |

| " 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 1,\n", | |

| " 0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0,\n", | |

| " 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0,\n", | |

| " 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0,\n", | |

| " 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1,\n", | |

| " 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1])" | |

| ] | |

| }, | |

| "execution_count": 9, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "pipe = make_pipeline(ct, logreg)\n", | |

| "pipe.fit(X, y)\n", | |

| "pipe.predict(X_new)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### K: Why did you create the `imp_ohe` pipeline? Why didn't you instead add `imp_constant` to the ColumnTransformer?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a reminder of what imp_ohe contains:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 10, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "imp_ohe = make_pipeline(imp_constant, ohe)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's how I used it in the ColumnTransformer:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 11, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Many people suggested that I use something like this instead (which will not work):" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 12, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct_suggestion = make_column_transformer(\n", | |

| " (imp_constant, ['Embarked', 'Sex']),\n", | |

| " (ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "I'll create the 10 row dataset to help me explain why:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 13, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/html": [ | |

| "<div>\n", | |

| "<style scoped>\n", | |

| " .dataframe tbody tr th:only-of-type {\n", | |

| " vertical-align: middle;\n", | |

| " }\n", | |

| "\n", | |

| " .dataframe tbody tr th {\n", | |

| " vertical-align: top;\n", | |

| " }\n", | |

| "\n", | |

| " .dataframe thead th {\n", | |

| " text-align: right;\n", | |

| " }\n", | |

| "</style>\n", | |

| "<table border=\"1\" class=\"dataframe\">\n", | |

| " <thead>\n", | |

| " <tr style=\"text-align: right;\">\n", | |

| " <th></th>\n", | |

| " <th>PassengerId</th>\n", | |

| " <th>Survived</th>\n", | |

| " <th>Pclass</th>\n", | |

| " <th>Name</th>\n", | |

| " <th>Sex</th>\n", | |

| " <th>Age</th>\n", | |

| " <th>SibSp</th>\n", | |

| " <th>Parch</th>\n", | |

| " <th>Ticket</th>\n", | |

| " <th>Fare</th>\n", | |

| " <th>Cabin</th>\n", | |

| " <th>Embarked</th>\n", | |

| " </tr>\n", | |

| " </thead>\n", | |

| " <tbody>\n", | |

| " <tr>\n", | |

| " <th>0</th>\n", | |

| " <td>1</td>\n", | |

| " <td>0</td>\n", | |

| " <td>3</td>\n", | |

| " <td>Braund, Mr. Owen Harris</td>\n", | |

| " <td>male</td>\n", | |

| " <td>22.0</td>\n", | |

| " <td>1</td>\n", | |

| " <td>0</td>\n", | |

| " <td>A/5 21171</td>\n", | |

| " <td>7.2500</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>1</th>\n", | |

| " <td>2</td>\n", | |

| " <td>1</td>\n", | |

| " <td>1</td>\n", | |

| " <td>Cumings, Mrs. John Bradley (Florence Briggs Th...</td>\n", | |

| " <td>female</td>\n", | |

| " <td>38.0</td>\n", | |

| " <td>1</td>\n", | |

| " <td>0</td>\n", | |

| " <td>PC 17599</td>\n", | |

| " <td>71.2833</td>\n", | |

| " <td>C85</td>\n", | |

| " <td>C</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>2</th>\n", | |

| " <td>3</td>\n", | |

| " <td>1</td>\n", | |

| " <td>3</td>\n", | |

| " <td>Heikkinen, Miss. Laina</td>\n", | |

| " <td>female</td>\n", | |

| " <td>26.0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>STON/O2. 3101282</td>\n", | |

| " <td>7.9250</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>3</th>\n", | |

| " <td>4</td>\n", | |

| " <td>1</td>\n", | |

| " <td>1</td>\n", | |

| " <td>Futrelle, Mrs. Jacques Heath (Lily May Peel)</td>\n", | |

| " <td>female</td>\n", | |

| " <td>35.0</td>\n", | |

| " <td>1</td>\n", | |

| " <td>0</td>\n", | |

| " <td>113803</td>\n", | |

| " <td>53.1000</td>\n", | |

| " <td>C123</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>4</th>\n", | |

| " <td>5</td>\n", | |

| " <td>0</td>\n", | |

| " <td>3</td>\n", | |

| " <td>Allen, Mr. William Henry</td>\n", | |

| " <td>male</td>\n", | |

| " <td>35.0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>373450</td>\n", | |

| " <td>8.0500</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>5</th>\n", | |

| " <td>6</td>\n", | |

| " <td>0</td>\n", | |

| " <td>3</td>\n", | |

| " <td>Moran, Mr. James</td>\n", | |

| " <td>male</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>330877</td>\n", | |

| " <td>8.4583</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>Q</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>6</th>\n", | |

| " <td>7</td>\n", | |

| " <td>0</td>\n", | |

| " <td>1</td>\n", | |

| " <td>McCarthy, Mr. Timothy J</td>\n", | |

| " <td>male</td>\n", | |

| " <td>54.0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>17463</td>\n", | |

| " <td>51.8625</td>\n", | |

| " <td>E46</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>7</th>\n", | |

| " <td>8</td>\n", | |

| " <td>0</td>\n", | |

| " <td>3</td>\n", | |

| " <td>Palsson, Master. Gosta Leonard</td>\n", | |

| " <td>male</td>\n", | |

| " <td>2.0</td>\n", | |

| " <td>3</td>\n", | |

| " <td>1</td>\n", | |

| " <td>349909</td>\n", | |

| " <td>21.0750</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>8</th>\n", | |

| " <td>9</td>\n", | |

| " <td>1</td>\n", | |

| " <td>3</td>\n", | |

| " <td>Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg)</td>\n", | |

| " <td>female</td>\n", | |

| " <td>27.0</td>\n", | |

| " <td>0</td>\n", | |

| " <td>2</td>\n", | |

| " <td>347742</td>\n", | |

| " <td>11.1333</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>S</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>9</th>\n", | |

| " <td>10</td>\n", | |

| " <td>1</td>\n", | |

| " <td>2</td>\n", | |

| " <td>Nasser, Mrs. Nicholas (Adele Achem)</td>\n", | |

| " <td>female</td>\n", | |

| " <td>14.0</td>\n", | |

| " <td>1</td>\n", | |

| " <td>0</td>\n", | |

| " <td>237736</td>\n", | |

| " <td>30.0708</td>\n", | |

| " <td>NaN</td>\n", | |

| " <td>C</td>\n", | |

| " </tr>\n", | |

| " </tbody>\n", | |

| "</table>\n", | |

| "</div>" | |

| ], | |

| "text/plain": [ | |

| " PassengerId Survived Pclass \\\n", | |

| "0 1 0 3 \n", | |

| "1 2 1 1 \n", | |

| "2 3 1 3 \n", | |

| "3 4 1 1 \n", | |

| "4 5 0 3 \n", | |

| "5 6 0 3 \n", | |

| "6 7 0 1 \n", | |

| "7 8 0 3 \n", | |

| "8 9 1 3 \n", | |

| "9 10 1 2 \n", | |

| "\n", | |

| " Name Sex Age SibSp \\\n", | |

| "0 Braund, Mr. Owen Harris male 22.0 1 \n", | |

| "1 Cumings, Mrs. John Bradley (Florence Briggs Th... female 38.0 1 \n", | |

| "2 Heikkinen, Miss. Laina female 26.0 0 \n", | |

| "3 Futrelle, Mrs. Jacques Heath (Lily May Peel) female 35.0 1 \n", | |

| "4 Allen, Mr. William Henry male 35.0 0 \n", | |

| "5 Moran, Mr. James male NaN 0 \n", | |

| "6 McCarthy, Mr. Timothy J male 54.0 0 \n", | |

| "7 Palsson, Master. Gosta Leonard male 2.0 3 \n", | |

| "8 Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg) female 27.0 0 \n", | |

| "9 Nasser, Mrs. Nicholas (Adele Achem) female 14.0 1 \n", | |

| "\n", | |

| " Parch Ticket Fare Cabin Embarked \n", | |

| "0 0 A/5 21171 7.2500 NaN S \n", | |

| "1 0 PC 17599 71.2833 C85 C \n", | |

| "2 0 STON/O2. 3101282 7.9250 NaN S \n", | |

| "3 0 113803 53.1000 C123 S \n", | |

| "4 0 373450 8.0500 NaN S \n", | |

| "5 0 330877 8.4583 NaN Q \n", | |

| "6 0 17463 51.8625 E46 S \n", | |

| "7 1 349909 21.0750 NaN S \n", | |

| "8 2 347742 11.1333 NaN S \n", | |

| "9 0 237736 30.0708 NaN C " | |

| ] | |

| }, | |

| "execution_count": 13, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "df_tiny = df.head(10).copy()\n", | |

| "df_tiny" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 14, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/html": [ | |

| "<div>\n", | |

| "<style scoped>\n", | |

| " .dataframe tbody tr th:only-of-type {\n", | |

| " vertical-align: middle;\n", | |

| " }\n", | |

| "\n", | |

| " .dataframe tbody tr th {\n", | |

| " vertical-align: top;\n", | |

| " }\n", | |

| "\n", | |

| " .dataframe thead th {\n", | |

| " text-align: right;\n", | |

| " }\n", | |

| "</style>\n", | |

| "<table border=\"1\" class=\"dataframe\">\n", | |

| " <thead>\n", | |

| " <tr style=\"text-align: right;\">\n", | |

| " <th></th>\n", | |

| " <th>Parch</th>\n", | |

| " <th>Fare</th>\n", | |

| " <th>Embarked</th>\n", | |

| " <th>Sex</th>\n", | |

| " <th>Name</th>\n", | |

| " <th>Age</th>\n", | |

| " </tr>\n", | |

| " </thead>\n", | |

| " <tbody>\n", | |

| " <tr>\n", | |

| " <th>0</th>\n", | |

| " <td>0</td>\n", | |

| " <td>7.2500</td>\n", | |

| " <td>S</td>\n", | |

| " <td>male</td>\n", | |

| " <td>Braund, Mr. Owen Harris</td>\n", | |

| " <td>22.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>1</th>\n", | |

| " <td>0</td>\n", | |

| " <td>71.2833</td>\n", | |

| " <td>C</td>\n", | |

| " <td>female</td>\n", | |

| " <td>Cumings, Mrs. John Bradley (Florence Briggs Th...</td>\n", | |

| " <td>38.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>2</th>\n", | |

| " <td>0</td>\n", | |

| " <td>7.9250</td>\n", | |

| " <td>S</td>\n", | |

| " <td>female</td>\n", | |

| " <td>Heikkinen, Miss. Laina</td>\n", | |

| " <td>26.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>3</th>\n", | |

| " <td>0</td>\n", | |

| " <td>53.1000</td>\n", | |

| " <td>S</td>\n", | |

| " <td>female</td>\n", | |

| " <td>Futrelle, Mrs. Jacques Heath (Lily May Peel)</td>\n", | |

| " <td>35.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>4</th>\n", | |

| " <td>0</td>\n", | |

| " <td>8.0500</td>\n", | |

| " <td>S</td>\n", | |

| " <td>male</td>\n", | |

| " <td>Allen, Mr. William Henry</td>\n", | |

| " <td>35.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>5</th>\n", | |

| " <td>0</td>\n", | |

| " <td>8.4583</td>\n", | |

| " <td>Q</td>\n", | |

| " <td>male</td>\n", | |

| " <td>Moran, Mr. James</td>\n", | |

| " <td>NaN</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>6</th>\n", | |

| " <td>0</td>\n", | |

| " <td>51.8625</td>\n", | |

| " <td>S</td>\n", | |

| " <td>male</td>\n", | |

| " <td>McCarthy, Mr. Timothy J</td>\n", | |

| " <td>54.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>7</th>\n", | |

| " <td>1</td>\n", | |

| " <td>21.0750</td>\n", | |

| " <td>S</td>\n", | |

| " <td>male</td>\n", | |

| " <td>Palsson, Master. Gosta Leonard</td>\n", | |

| " <td>2.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>8</th>\n", | |

| " <td>2</td>\n", | |

| " <td>11.1333</td>\n", | |

| " <td>S</td>\n", | |

| " <td>female</td>\n", | |

| " <td>Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg)</td>\n", | |

| " <td>27.0</td>\n", | |

| " </tr>\n", | |

| " <tr>\n", | |

| " <th>9</th>\n", | |

| " <td>0</td>\n", | |

| " <td>30.0708</td>\n", | |

| " <td>C</td>\n", | |

| " <td>female</td>\n", | |

| " <td>Nasser, Mrs. Nicholas (Adele Achem)</td>\n", | |

| " <td>14.0</td>\n", | |

| " </tr>\n", | |

| " </tbody>\n", | |

| "</table>\n", | |

| "</div>" | |

| ], | |

| "text/plain": [ | |

| " Parch Fare Embarked Sex \\\n", | |

| "0 0 7.2500 S male \n", | |

| "1 0 71.2833 C female \n", | |

| "2 0 7.9250 S female \n", | |

| "3 0 53.1000 S female \n", | |

| "4 0 8.0500 S male \n", | |

| "5 0 8.4583 Q male \n", | |

| "6 0 51.8625 S male \n", | |

| "7 1 21.0750 S male \n", | |

| "8 2 11.1333 S female \n", | |

| "9 0 30.0708 C female \n", | |

| "\n", | |

| " Name Age \n", | |

| "0 Braund, Mr. Owen Harris 22.0 \n", | |

| "1 Cumings, Mrs. John Bradley (Florence Briggs Th... 38.0 \n", | |

| "2 Heikkinen, Miss. Laina 26.0 \n", | |

| "3 Futrelle, Mrs. Jacques Heath (Lily May Peel) 35.0 \n", | |

| "4 Allen, Mr. William Henry 35.0 \n", | |

| "5 Moran, Mr. James NaN \n", | |

| "6 McCarthy, Mr. Timothy J 54.0 \n", | |

| "7 Palsson, Master. Gosta Leonard 2.0 \n", | |

| "8 Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg) 27.0 \n", | |

| "9 Nasser, Mrs. Nicholas (Adele Achem) 14.0 " | |

| ] | |

| }, | |

| "execution_count": 14, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "X_tiny = df_tiny[cols]\n", | |

| "X_tiny" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a smaller ColumnTransformer that uses imp_ohe, but only on \"Embarked\":\n", | |

| "\n", | |

| "- There are no missing values, so the first step of the imp_ohe Pipeline passes it along to the second step\n", | |

| "- The second step of the imp_ohe Pipeline does the one-hot encoding and outputs three columns\n", | |

| "- This is exactly what we want" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 15, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[0., 0., 1.],\n", | |

| " [1., 0., 0.],\n", | |

| " [0., 0., 1.],\n", | |

| " [0., 0., 1.],\n", | |

| " [0., 0., 1.],\n", | |

| " [0., 1., 0.],\n", | |

| " [0., 0., 1.],\n", | |

| " [0., 0., 1.],\n", | |

| " [0., 0., 1.],\n", | |

| " [1., 0., 0.]])" | |

| ] | |

| }, | |

| "execution_count": 15, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked']),\n", | |

| " remainder='drop').fit_transform(X_tiny)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Try splitting imp_ohe into two separate transformers:\n", | |

| "\n", | |

| "- imp_constant does the imputation, and ohe does the one-hot encoding\n", | |

| "- The results get stacked side-by-side, which is not what we want" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 16, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([['S', 0.0, 0.0, 1.0],\n", | |

| " ['C', 1.0, 0.0, 0.0],\n", | |

| " ['S', 0.0, 0.0, 1.0],\n", | |

| " ['S', 0.0, 0.0, 1.0],\n", | |

| " ['S', 0.0, 0.0, 1.0],\n", | |

| " ['Q', 0.0, 1.0, 0.0],\n", | |

| " ['S', 0.0, 0.0, 1.0],\n", | |

| " ['S', 0.0, 0.0, 1.0],\n", | |

| " ['S', 0.0, 0.0, 1.0],\n", | |

| " ['C', 1.0, 0.0, 0.0]], dtype=object)" | |

| ] | |

| }, | |

| "execution_count": 16, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "make_column_transformer(\n", | |

| " (imp_constant, ['Embarked']),\n", | |

| " (ohe, ['Embarked']),\n", | |

| " remainder='drop').fit_transform(X_tiny)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Try reversing the order of the transformers:\n", | |

| "\n", | |

| "- All this does is change the order of the output columns, which is still not what we want" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 17, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[0.0, 0.0, 1.0, 'S'],\n", | |

| " [1.0, 0.0, 0.0, 'C'],\n", | |

| " [0.0, 0.0, 1.0, 'S'],\n", | |

| " [0.0, 0.0, 1.0, 'S'],\n", | |

| " [0.0, 0.0, 1.0, 'S'],\n", | |

| " [0.0, 1.0, 0.0, 'Q'],\n", | |

| " [0.0, 0.0, 1.0, 'S'],\n", | |

| " [0.0, 0.0, 1.0, 'S'],\n", | |

| " [0.0, 0.0, 1.0, 'S'],\n", | |

| " [1.0, 0.0, 0.0, 'C']], dtype=object)" | |

| ] | |

| }, | |

| "execution_count": 17, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "make_column_transformer(\n", | |

| " (ohe, ['Embarked']),\n", | |

| " (imp_constant, ['Embarked']),\n", | |

| " remainder='drop').fit_transform(X_tiny)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Key ideas:\n", | |

| "\n", | |

| "- Pipeline operates sequentially\n", | |

| " - It has steps: the output of step 1 is the input to step 2\n", | |

| " - This applies to our larger Pipeline (pipe) and our smaller Pipeline (imp_ohe)\n", | |

| "- ColumnTransformer operates in parallel\n", | |

| " - It does not have steps: data does not flow from one transformer to the next\n", | |

| " - It splits up the columns, transforms them independently, and combines the results side-by-side" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

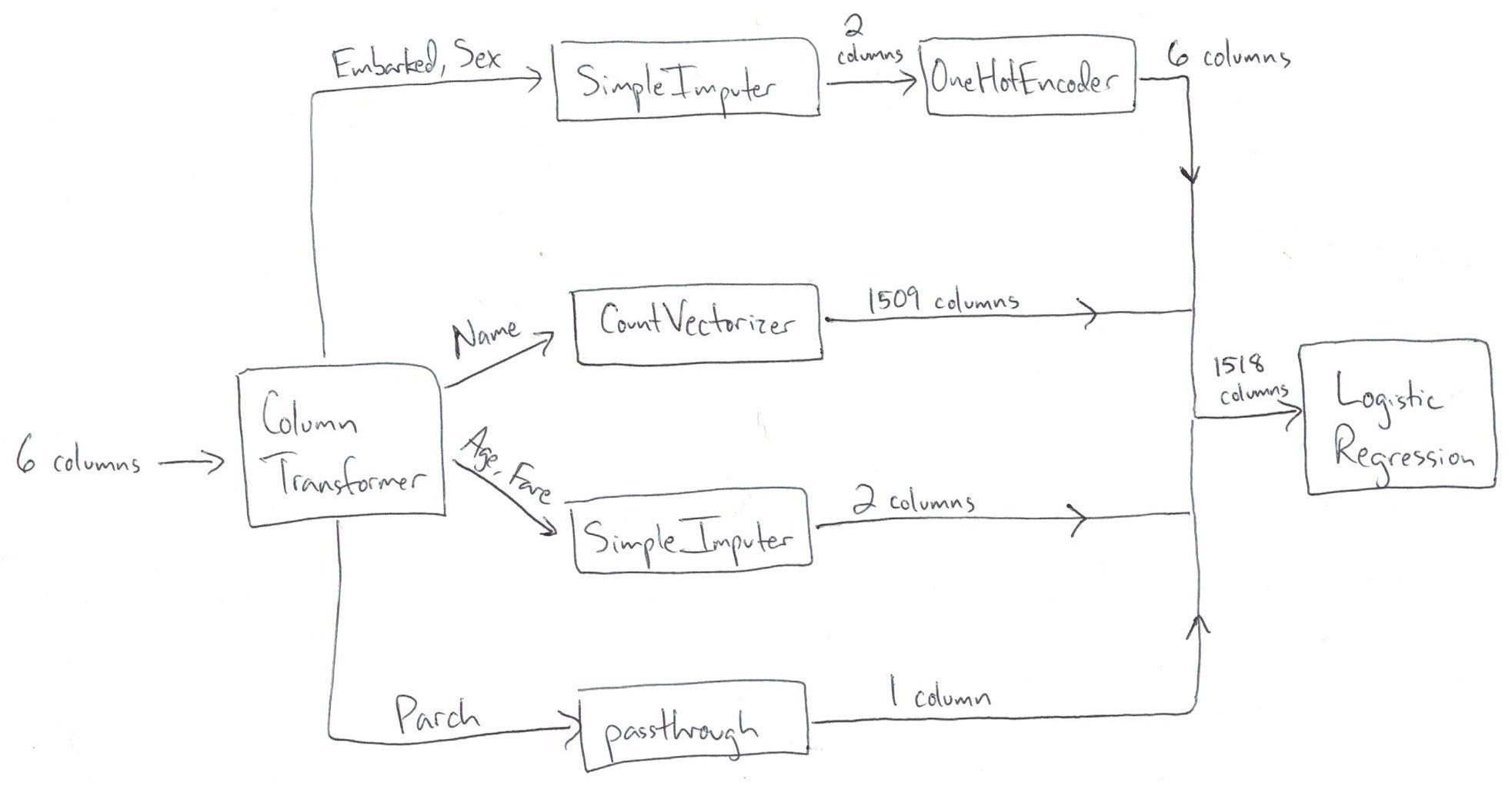

| "Take one more look at the correct ColumnTransformer (which matches the diagram above):" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 18, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 19, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "<891x1518 sparse matrix of type '<class 'numpy.float64'>'\n", | |

| "\twith 7328 stored elements in Compressed Sparse Row format>" | |

| ] | |

| }, | |

| "execution_count": 19, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "ct.fit_transform(X)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Compare that to the ColumnTransformer many people were suggesting:\n", | |

| "\n", | |

| "- The first transformer (imp_constant) operates completely independently of the second transformer (ohe)\n", | |

| "- imp_constant doesn't pass transformed data down to the second transformer" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 20, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct_suggestion = make_column_transformer(\n", | |

| " (imp_constant, ['Embarked', 'Sex']),\n", | |

| " (ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "It will error during fit_transform because the ohe transformer doesn't know how to handle missing values:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 21, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "# ct_suggestion.fit_transform(X)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Justin: What's the cost versus the benefit of adding so many columns for Name?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Cross-validate our pipeline:\n", | |

| "\n", | |

| "- Use IPython's \"time\" magic function to time how long the operation takes\n", | |

| "- This accuracy score is our baseline score\n", | |

| "- 1509 of the 1518 features were created by the \"Name\" column" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 22, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "CPU times: user 112 ms, sys: 2.32 ms, total: 114 ms\n", | |

| "Wall time: 114 ms\n" | |

| ] | |

| }, | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.8114619295712762" | |

| ] | |

| }, | |

| "execution_count": 22, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "from sklearn.model_selection import cross_val_score\n", | |

| "%time cross_val_score(pipe, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Remove \"Name\" from the ColumnTransformer:\n", | |

| "\n", | |

| "- Using this notation is an easy way to drop some columns (\"Name\") and passthrough other columns (\"Parch\")\n", | |

| "- This results in only 9 features" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 23, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct_no_name = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " ('drop', 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Cross-validate the pipeline that doesn't include \"Name\":" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 24, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "CPU times: user 65.8 ms, sys: 1.23 ms, total: 67 ms\n", | |

| "Wall time: 68.1 ms\n" | |

| ] | |

| }, | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.7833908731404181" | |

| ] | |

| }, | |

| "execution_count": 24, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "no_name = make_pipeline(ct_no_name, logreg)\n", | |

| "%time cross_val_score(no_name, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Create a pipeline that only includes \"Name\" and cross-validate it:\n", | |

| "\n", | |

| "- Since we're only using one column, there's no need for a ColumnTransformer\n", | |

| "- Pass the \"Name\" Series (rather than the entire X) to cross_val_score\n", | |

| "- This results in 1509 features" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 25, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "name": "stdout", | |

| "output_type": "stream", | |

| "text": [ | |

| "CPU times: user 44 ms, sys: 1.4 ms, total: 45.4 ms\n", | |

| "Wall time: 45.6 ms\n" | |

| ] | |

| }, | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.7945954428472788" | |

| ] | |

| }, | |

| "execution_count": 25, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "only_name = make_pipeline(vect, logreg)\n", | |

| "%time cross_val_score(only_name, X['Name'], y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "What were the results?\n", | |

| "\n", | |

| "- The best results came from using all of our features\n", | |

| "- only_name had a slightly higher accuracy than no_name\n", | |

| "- only_name ran slightly faster than no_name (probably because there's overhead from the ColumnTransformer)\n", | |

| "\n", | |

| "What are the benefits of including \"Name\"?\n", | |

| "\n", | |

| "- Better accuracy, which tells us that the \"Name\" column contains more signal than noise (with respect to the target)\n", | |

| "\n", | |

| "What are the costs of including \"Name\"?\n", | |

| "\n", | |

| "- Less interpretability, since it's impractical to examine the coefficients of 1518 features\n", | |

| "\n", | |

| "Other thoughts:\n", | |

| "\n", | |

| "- Including \"Name\" in this model is not resulting in overfitting, since it's increasing the cross-validated accuracy\n", | |

| " - As demonstrated in this example, having more features (1518) than observations (891) does not automatically result in overfitting\n", | |

| "- It's possible that including \"Name\" would make some models worse, but you shouldn't assume that without trying it" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Anton: What's the target accuracy that you are trying to reach?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "- For most real problems, it's impossible to know how accurate your model could be if you did enough tuning and tried enough models\n", | |

| "- It's also impossible to know how accurate your model could be if you got more observations or more features\n", | |

| "- Thus in most cases, you don't have a target accuracy, instead you just stop once you run out of time or money or ideas\n", | |

| "- The main exception is if you are working on a well-studied research problem, because in that case there may be a state-of-the-art benchmark that everyone is trying to surpass" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Motasem: When using cross_val_score, is the imputation value calculated separately for each fold?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a reminder of what our ColumnTransformer and Pipeline look like:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 26, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 27, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "pipe = make_pipeline(ct, logreg)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a reminder of how we cross-validate the Pipeline:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 28, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.8114619295712762" | |

| ] | |

| }, | |

| "execution_count": 28, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "cross_val_score(pipe, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "What happens \"under the hood\" when we run cross_val_score?\n", | |

| "\n", | |

| "- Step 1: cross_val_score splits the data, such that 80% is set aside for training and the remaining 20% is set aside for testing\n", | |

| "- Step 2: pipe.fit is run on the training portion, which means that the transformations are performed on the training portion and the transformed data is used to fit the model\n", | |

| "- Step 3: pipe.predict is run on the testing portion, which means that the transformations are applied to the testing portion and the transformed data is passed to the model for predictions\n", | |

| "- Step 4: The accuracy of the predictions is calculated\n", | |

| "- Steps 1 through 4 are repeated 4 more times, and each time a different 20% is set aside as the testing portion\n", | |

| "\n", | |

| "The key point is that cross_val_score does the transformations (steps 2 and 3) after splitting the data (step 1):\n", | |

| "\n", | |

| "- Thus, the imputation values for \"Age\" and \"Fare\" are calculated 5 different times\n", | |

| " - Each time, these values are calculated using the training portion, and applied to both the training and testing portions\n", | |

| " - This is done specifically to avoid data leakage, which is when you inadvertently include knowledge from the testing data when training a model" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Anusha: Why would missing value imputation in pandas lead to data leakage?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "- Your model evaluation procedure (cross-validation in this case) is supposed to simulate the future, so that you can accurately estimate right now how well your model will perform on new data\n", | |

| "- If you impute missing values on your whole dataset in pandas and then pass your dataset to scikit-learn, your model evaluation procedure will no longer be an accurate simulation of reality\n", | |

| " - This is because the imputation values are based on your entire dataset, rather than just the training portion of your dataset\n", | |

| " - Keep in mind that the \"training portion\" will change 5 times during 5-fold cross-validation, thus it's impractical to avoid data leakage if you use pandas for imputation" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Hause: Regarding cross_val_score, what algorithm does scikit-learn use to split the data into different folds? Can you examine the data used in each fold?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "When using cross_val_score, I've been passing an integer that specifies the number of folds:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 29, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([0.79888268, 0.8258427 , 0.80337079, 0.78651685, 0.84269663])" | |

| ] | |

| }, | |

| "execution_count": 29, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "cross_val_score(pipe, X, y, cv=5, scoring='accuracy')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's what happens \"under the hood\" when you specify 5 folds for a classification problem:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 30, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([0.79888268, 0.8258427 , 0.80337079, 0.78651685, 0.84269663])" | |

| ] | |

| }, | |

| "execution_count": 30, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "from sklearn.model_selection import StratifiedKFold\n", | |

| "kf = StratifiedKFold(5)\n", | |

| "cross_val_score(pipe, X, y, cv=kf, scoring='accuracy')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "StratifiedKFold is a cross-validation splitter, meaning that its role is to split datasets:\n", | |

| "\n", | |

| "- You pass the splitter to cross_val_score instead of an integer\n", | |

| "- \"Stratified\" means that it uses stratified sampling to ensure that the class frequencies are approximately equal in each split\n", | |

| " - Example: 38% of the y values are 1 (which means survived), so every time it splits, it ensures that about 38% of the training portion is survived passengers and about 38% of the testing portion is survived passengers\n", | |

| "\n", | |

| "You can examine the data used in each fold. Here are the training and testing indices used by the first split:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 31, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "(array([168, 169, 170, 171, 173, 174, 175, 176, 177, 178, 179, 180, 181,\n", | |

| " 182, 185, 188, 189, 191, 196, 197, 199, 200, 201, 202, 203, 204,\n", | |

| " 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217,\n", | |

| " 218, 219, 220, 221, 222, 223, 224, 225, 226, 227, 228, 229, 230,\n", | |

| " 231, 232, 233, 234, 235, 236, 237, 238, 239, 240, 241, 242, 243,\n", | |

| " 244, 245, 246, 247, 248, 249, 250, 251, 252, 253, 254, 255, 256,\n", | |

| " 257, 258, 259, 260, 261, 262, 263, 264, 265, 266, 267, 268, 269,\n", | |

| " 270, 271, 272, 273, 274, 275, 276, 277, 278, 279, 280, 281, 282,\n", | |

| " 283, 284, 285, 286, 287, 288, 289, 290, 291, 292, 293, 294, 295,\n", | |

| " 296, 297, 298, 299, 300, 301, 302, 303, 304, 305, 306, 307, 308,\n", | |

| " 309, 310, 311, 312, 313, 314, 315, 316, 317, 318, 319, 320, 321,\n", | |

| " 322, 323, 324, 325, 326, 327, 328, 329, 330, 331, 332, 333, 334,\n", | |

| " 335, 336, 337, 338, 339, 340, 341, 342, 343, 344, 345, 346, 347,\n", | |

| " 348, 349, 350, 351, 352, 353, 354, 355, 356, 357, 358, 359, 360,\n", | |

| " 361, 362, 363, 364, 365, 366, 367, 368, 369, 370, 371, 372, 373,\n", | |

| " 374, 375, 376, 377, 378, 379, 380, 381, 382, 383, 384, 385, 386,\n", | |

| " 387, 388, 389, 390, 391, 392, 393, 394, 395, 396, 397, 398, 399,\n", | |

| " 400, 401, 402, 403, 404, 405, 406, 407, 408, 409, 410, 411, 412,\n", | |

| " 413, 414, 415, 416, 417, 418, 419, 420, 421, 422, 423, 424, 425,\n", | |

| " 426, 427, 428, 429, 430, 431, 432, 433, 434, 435, 436, 437, 438,\n", | |

| " 439, 440, 441, 442, 443, 444, 445, 446, 447, 448, 449, 450, 451,\n", | |

| " 452, 453, 454, 455, 456, 457, 458, 459, 460, 461, 462, 463, 464,\n", | |

| " 465, 466, 467, 468, 469, 470, 471, 472, 473, 474, 475, 476, 477,\n", | |

| " 478, 479, 480, 481, 482, 483, 484, 485, 486, 487, 488, 489, 490,\n", | |

| " 491, 492, 493, 494, 495, 496, 497, 498, 499, 500, 501, 502, 503,\n", | |

| " 504, 505, 506, 507, 508, 509, 510, 511, 512, 513, 514, 515, 516,\n", | |

| " 517, 518, 519, 520, 521, 522, 523, 524, 525, 526, 527, 528, 529,\n", | |

| " 530, 531, 532, 533, 534, 535, 536, 537, 538, 539, 540, 541, 542,\n", | |

| " 543, 544, 545, 546, 547, 548, 549, 550, 551, 552, 553, 554, 555,\n", | |

| " 556, 557, 558, 559, 560, 561, 562, 563, 564, 565, 566, 567, 568,\n", | |

| " 569, 570, 571, 572, 573, 574, 575, 576, 577, 578, 579, 580, 581,\n", | |

| " 582, 583, 584, 585, 586, 587, 588, 589, 590, 591, 592, 593, 594,\n", | |

| " 595, 596, 597, 598, 599, 600, 601, 602, 603, 604, 605, 606, 607,\n", | |

| " 608, 609, 610, 611, 612, 613, 614, 615, 616, 617, 618, 619, 620,\n", | |

| " 621, 622, 623, 624, 625, 626, 627, 628, 629, 630, 631, 632, 633,\n", | |

| " 634, 635, 636, 637, 638, 639, 640, 641, 642, 643, 644, 645, 646,\n", | |

| " 647, 648, 649, 650, 651, 652, 653, 654, 655, 656, 657, 658, 659,\n", | |

| " 660, 661, 662, 663, 664, 665, 666, 667, 668, 669, 670, 671, 672,\n", | |

| " 673, 674, 675, 676, 677, 678, 679, 680, 681, 682, 683, 684, 685,\n", | |

| " 686, 687, 688, 689, 690, 691, 692, 693, 694, 695, 696, 697, 698,\n", | |

| " 699, 700, 701, 702, 703, 704, 705, 706, 707, 708, 709, 710, 711,\n", | |

| " 712, 713, 714, 715, 716, 717, 718, 719, 720, 721, 722, 723, 724,\n", | |

| " 725, 726, 727, 728, 729, 730, 731, 732, 733, 734, 735, 736, 737,\n", | |

| " 738, 739, 740, 741, 742, 743, 744, 745, 746, 747, 748, 749, 750,\n", | |

| " 751, 752, 753, 754, 755, 756, 757, 758, 759, 760, 761, 762, 763,\n", | |

| " 764, 765, 766, 767, 768, 769, 770, 771, 772, 773, 774, 775, 776,\n", | |

| " 777, 778, 779, 780, 781, 782, 783, 784, 785, 786, 787, 788, 789,\n", | |

| " 790, 791, 792, 793, 794, 795, 796, 797, 798, 799, 800, 801, 802,\n", | |

| " 803, 804, 805, 806, 807, 808, 809, 810, 811, 812, 813, 814, 815,\n", | |

| " 816, 817, 818, 819, 820, 821, 822, 823, 824, 825, 826, 827, 828,\n", | |

| " 829, 830, 831, 832, 833, 834, 835, 836, 837, 838, 839, 840, 841,\n", | |

| " 842, 843, 844, 845, 846, 847, 848, 849, 850, 851, 852, 853, 854,\n", | |

| " 855, 856, 857, 858, 859, 860, 861, 862, 863, 864, 865, 866, 867,\n", | |

| " 868, 869, 870, 871, 872, 873, 874, 875, 876, 877, 878, 879, 880,\n", | |

| " 881, 882, 883, 884, 885, 886, 887, 888, 889, 890]),\n", | |

| " array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12,\n", | |

| " 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25,\n", | |

| " 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38,\n", | |

| " 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51,\n", | |

| " 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64,\n", | |

| " 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77,\n", | |

| " 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90,\n", | |

| " 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103,\n", | |

| " 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116,\n", | |

| " 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129,\n", | |

| " 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142,\n", | |

| " 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155,\n", | |

| " 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 172,\n", | |

| " 183, 184, 186, 187, 190, 192, 193, 194, 195, 198]))" | |

| ] | |

| }, | |

| "execution_count": 31, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "list(kf.split(X, y))[0]" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "By default, StratifiedKFold does not shuffle the rows:\n", | |

| "\n", | |

| "- There is no randomness in this process, thus you will always get the same results every time\n", | |

| "- This is why cross_val_score and GridSearchCV don't have a random_state parameter\n", | |

| "\n", | |

| "If the order of your rows is not arbitrary, then you can (and should) shuffle the rows by modifying the splitter:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 32, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "kf = StratifiedKFold(5, shuffle=True, random_state=0)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Leona: Would you recommend using a validation set when tuning the model's hyperparameters?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "When we use grid search, we're choosing hyperparameters that maximize the cross-validation score on this dataset:\n", | |

| "\n", | |

| "- Thus, we're using the same data to do two separate jobs: tune the model hyperparameters and estimate its future performance\n", | |

| "- This biases the model to this dataset and can result in overly optimistic scores\n", | |

| "\n", | |

| "If you want a more realistic performance estimate and you have enough data to spare:\n", | |

| "\n", | |

| "- Split the training data into two sets: the first set is passed to grid search, and second set is used to evaluate the best model chosen by grid search\n", | |

| "- The score on the second set is a more realistic estimate of model performance because the model wasn't tuned to that set\n", | |

| "\n", | |

| "If you want a more realistic performance estimate and you don't have enough data to spare:\n", | |

| "\n", | |

| "- Use nested cross-validation, which has an outer loop and an inner loop:\n", | |

| " - Inner loop does the hyperparameter tuning using grid search\n", | |

| " - Outer loop estimates model performance using cross-validation\n", | |

| "\n", | |

| "Bottom line:\n", | |

| "\n", | |

| "- If you want the most realistic estimate of a model's performance, then you have to add additional complexity to your process\n", | |

| "- If you only care about choosing the best hyperparameters, then you don't need to add this complexity\n", | |

| "\n", | |

| "Recommended resources:\n", | |

| "\n", | |

| "- [Nested cross-validation description](https://sebastianraschka.com/blog/2018/model-evaluation-selection-part4.html#nested-cross-validation)\n", | |

| "- [Nested cross-validation code](https://github.com/rasbt/model-eval-article-supplementary/blob/master/code/nested_cv_code.ipynb)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### JV: What is the difference between FeatureUnion and ColumnTransformer?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "As a reminder, you can add a \"missing indicator\" to SimpleImputer (new in version 0.21):" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 33, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[22. , 0. ],\n", | |

| " [38. , 0. ],\n", | |

| " [26. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [28.11111111, 1. ],\n", | |

| " [54. , 0. ],\n", | |

| " [ 2. , 0. ],\n", | |

| " [27. , 0. ],\n", | |

| " [14. , 0. ]])" | |

| ] | |

| }, | |

| "execution_count": 33, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "SimpleImputer(add_indicator=True).fit_transform(X_tiny[['Age']])" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "How could we create the same output without using the \"add_indicator\" parameter?\n", | |

| "\n", | |

| "We can create the left column using the SimpleImputer:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 34, | |

| "metadata": { | |

| "scrolled": false | |

| }, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[22. ],\n", | |

| " [38. ],\n", | |

| " [26. ],\n", | |

| " [35. ],\n", | |

| " [35. ],\n", | |

| " [28.11111111],\n", | |

| " [54. ],\n", | |

| " [ 2. ],\n", | |

| " [27. ],\n", | |

| " [14. ]])" | |

| ] | |

| }, | |

| "execution_count": 34, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "imp.fit_transform(X_tiny[['Age']])" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "We can create the right column using the MissingIndicator class:\n", | |

| "\n", | |

| "- It outputs False and True instead of 0 and 1, but otherwise the results are the same\n", | |

| "- This is available in version 0.20" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 35, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[False],\n", | |

| " [False],\n", | |

| " [False],\n", | |

| " [False],\n", | |

| " [False],\n", | |

| " [ True],\n", | |

| " [False],\n", | |

| " [False],\n", | |

| " [False],\n", | |

| " [False]])" | |

| ] | |

| }, | |

| "execution_count": 35, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "from sklearn.impute import MissingIndicator\n", | |

| "indicator = MissingIndicator()\n", | |

| "indicator.fit_transform(X_tiny[['Age']])" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "We can use FeatureUnion to stack these two columns side-by-side:\n", | |

| "\n", | |

| "- Use make_union to create a FeatureUnion of SimpleImputer and MissingIndicator\n", | |

| "- False and True are converted to 0 and 1 when put in a numeric array" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 36, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[22. , 0. ],\n", | |

| " [38. , 0. ],\n", | |

| " [26. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [28.11111111, 1. ],\n", | |

| " [54. , 0. ],\n", | |

| " [ 2. , 0. ],\n", | |

| " [27. , 0. ],\n", | |

| " [14. , 0. ]])" | |

| ] | |

| }, | |

| "execution_count": 36, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "from sklearn.pipeline import make_union\n", | |

| "imp_indicator = make_union(imp, indicator)\n", | |

| "imp_indicator.fit_transform(X_tiny[['Age']])" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Comparing FeatureUnion and ColumnTransformer:\n", | |

| "\n", | |

| "- FeatureUnion applies multiple transformations to a single input column and stacks the results side-by-side\n", | |

| "- ColumnTransformer applies a different transformation to each input column and stacks the results side-by-side\n", | |

| "\n", | |

| "Thus we could include our FeatureUnion in a ColumnTransformer:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 37, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[22. , 0. ],\n", | |

| " [38. , 0. ],\n", | |

| " [26. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [28.11111111, 1. ],\n", | |

| " [54. , 0. ],\n", | |

| " [ 2. , 0. ],\n", | |

| " [27. , 0. ],\n", | |

| " [14. , 0. ]])" | |

| ] | |

| }, | |

| "execution_count": 37, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "make_column_transformer(\n", | |

| " (imp_indicator, ['Age']),\n", | |

| " remainder='drop').fit_transform(X_tiny)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Or we could achieve the same results without the FeatureUnion, by passing the \"Age\" column to the ColumnTransformer twice:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 38, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[22. , 0. ],\n", | |

| " [38. , 0. ],\n", | |

| " [26. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [35. , 0. ],\n", | |

| " [28.11111111, 1. ],\n", | |

| " [54. , 0. ],\n", | |

| " [ 2. , 0. ],\n", | |

| " [27. , 0. ],\n", | |

| " [14. , 0. ]])" | |

| ] | |

| }, | |

| "execution_count": 38, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "make_column_transformer(\n", | |

| " (imp, ['Age']),\n", | |

| " (indicator, ['Age']),\n", | |

| " remainder='drop').fit_transform(X_tiny)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Conclusion:\n", | |

| "\n", | |

| "- In both FeatureUnion and ColumnTransformer, data does not flow from one transformer to the next\n", | |

| " - Instead, the transformers are applied independently (in parallel)\n", | |

| " - This is different from a Pipeline, in which data does flow from one step to the next\n", | |

| "- FeatureUnion was a precursor to ColumnTransformer\n", | |

| " - FeatureUnion is far less useful now that ColumnTransformer exists" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Gloria: Can you talk about the other two imputers in scikit-learn?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "IterativeImputer (new in version 0.21) is experimental, meaning the API and predictions may change:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 39, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "from sklearn.experimental import enable_iterative_imputer\n", | |

| "from sklearn.impute import IterativeImputer" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Pass it \"Parch\" (no missing values), \"Fare\" (no missing values), and \"Age\" (1 missing value):" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 40, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[ 0. , 7.25 , 22. ],\n", | |

| " [ 0. , 71.2833 , 38. ],\n", | |

| " [ 0. , 7.925 , 26. ],\n", | |

| " [ 0. , 53.1 , 35. ],\n", | |

| " [ 0. , 8.05 , 35. ],\n", | |

| " [ 0. , 8.4583 , 24.23702669],\n", | |

| " [ 0. , 51.8625 , 54. ],\n", | |

| " [ 1. , 21.075 , 2. ],\n", | |

| " [ 2. , 11.1333 , 27. ],\n", | |

| " [ 0. , 30.0708 , 14. ]])" | |

| ] | |

| }, | |

| "execution_count": 40, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "imp_iterative = IterativeImputer()\n", | |

| "imp_iterative.fit_transform(X_tiny[['Parch', 'Fare', 'Age']])" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "How it works:\n", | |

| "\n", | |

| "- For the 9 rows in which \"Age\" was not missing:\n", | |

| " - scikit-learn trains a regression model in which \"Parch\" and \"Fare\" are the features and \"Age\" is the target\n", | |

| "- For the 1 row in which \"Age\" was missing:\n", | |

| " - scikit-learn passes the \"Parch\" and \"Fare\" values to the trained model\n", | |

| " - The model makes a prediction for the \"Age\" value, and that value is used for imputation\n", | |

| "- In summary: IterativeImputer turned this into a regression problem with 2 features, 9 observations in the training set, and 1 observation in the testing set\n", | |

| "\n", | |

| "Notes:\n", | |

| "\n", | |

| "- Unlike SimpleImputer, you have to pass it multiple numeric columns, otherwise it can't do the regression problem\n", | |

| " - Thus you're deciding what features to use in this regression problem\n", | |

| "- You can pass it multiple features with missing values\n", | |

| " - Meaning: \"Parch\", \"Fare\", and \"Age\" can all have missing values\n", | |

| " - IterativeImputer will just do 3 different regression problems, and each column will have a turn being the target\n", | |

| "- You can choose which regression model IterativeImputer uses\n", | |

| "\n", | |

| "KNNImputer (new in version 0.22) is another option:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 41, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "array([[ 0. , 7.25 , 22. ],\n", | |

| " [ 0. , 71.2833, 38. ],\n", | |

| " [ 0. , 7.925 , 26. ],\n", | |

| " [ 0. , 53.1 , 35. ],\n", | |

| " [ 0. , 8.05 , 35. ],\n", | |

| " [ 0. , 8.4583, 30.5 ],\n", | |

| " [ 0. , 51.8625, 54. ],\n", | |

| " [ 1. , 21.075 , 2. ],\n", | |

| " [ 2. , 11.1333, 27. ],\n", | |

| " [ 0. , 30.0708, 14. ]])" | |

| ] | |

| }, | |

| "execution_count": 41, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "from sklearn.impute import KNNImputer\n", | |

| "imp_knn = KNNImputer(n_neighbors=2)\n", | |

| "imp_knn.fit_transform(X_tiny[['Parch', 'Fare', 'Age']])" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "How it works:\n", | |

| "\n", | |

| "- For the 1 row in which \"Age\" was missing:\n", | |

| " - Because I set n_neighbors=2, scikit-learn finds the 2 \"nearest\" rows to this row, which is measured by how close the \"Parch\" and \"Fare\" values are to this row\n", | |

| " - scikit-learn averages the \"Age\" values for those 2 rows (26 and 35), and that value (30.5) is used as the imputation value\n", | |

| "\n", | |

| "The intuition behind both of these imputers is that it can be useful to take other features into account when deciding what value to impute:\n", | |

| "\n", | |

| "- Example: Maybe a high \"Parch\" and a low \"Fare\" is common for kids\n", | |

| "- Thus if \"Age\" is missing for a row which has a high \"Parch\" and a low \"Fare\", then you should impute a low \"Age\" rather than then mean of \"Age\", which is what SimpleImputer would do" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Elin: How would you add feature selection to our Pipeline?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "It can be useful to add feature selection to your workflow:\n", | |

| "\n", | |

| "- Model accuracy can be improved by removing irrelevant features (ones that are adding \"noise\", not \"signal\")\n", | |

| "- We can automate this process by including it in our Pipeline\n", | |

| "- I will demonstrate two of the many ways you can do this in scikit-learn: SelectPercentile and SelectFromModel\n", | |

| "\n", | |

| "Cross-validate our pipeline (without any hyperparameter tuning) to generate a \"baseline\" accuracy:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 42, | |

| "metadata": { | |

| "scrolled": true | |

| }, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.8114619295712762" | |

| ] | |

| }, | |

| "execution_count": 42, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "cross_val_score(pipe, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "SelectPercentile selects features based on statistical tests:\n", | |

| "\n", | |

| "- You specify a statistical test\n", | |

| "- It scores all features using that test\n", | |

| "- It keeps a percentage (that you specify) of the best scoring features\n", | |

| "\n", | |

| "Create a feature selection object:\n", | |

| "\n", | |

| "- Pass it the statistical test\n", | |

| " - We're using chi2, but other tests are available\n", | |

| "- Pass it the percentile\n", | |

| " - We're arbitrarily using 50 to keep 50% of the features (10 would keep 10% percent of the features)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 43, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "from sklearn.feature_selection import SelectPercentile, chi2\n", | |

| "selection = SelectPercentile(chi2, percentile=50)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Add feature selection to the Pipeline after the ColumnTransformer but before the model:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 44, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "pipe_selection = make_pipeline(ct, selection, logreg)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Cross-validate the updated Pipeline, and the score has improved:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 45, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.8193019898311469" | |

| ] | |

| }, | |

| "execution_count": 45, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "cross_val_score(pipe_selection, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "SelectFromModel scores features using a model:\n", | |

| "\n", | |

| "- You specify a model\n", | |

| " - Options include logistic regression, linear SVC, or any tree-based model (including ensembles)\n", | |

| "- It uses the coef_ or feature_importances_ attribute of that model as the score\n", | |

| "\n", | |

| "We'll try using logistic regression for feature selection:\n", | |

| "\n", | |

| "- Create a new instance to use for feature selection\n", | |

| "- This is completely separate from the logistic regression model we are using to make predictions" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 46, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "logreg_selection = LogisticRegression(solver='liblinear', penalty='l1', random_state=1)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Create a feature selection object:\n", | |

| "\n", | |

| "- Pass it the model we're using for selection\n", | |

| "- Pass it the threshold\n", | |

| " - This can be the mean or median of the scores, though you can optionally include a scaling factor (such as 1.5 times mean)\n", | |

| " - Higher threshold means fewer features will be kept" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 47, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "from sklearn.feature_selection import SelectFromModel\n", | |

| "selection = SelectFromModel(logreg_selection, threshold='mean')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Update the Pipeline to use the new feature selection object:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 48, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "pipe_selection = make_pipeline(ct, selection, logreg)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Cross-validate the updated Pipeline, and the score has improved again:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 49, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.8260121775155358" | |

| ] | |

| }, | |

| "execution_count": 49, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "cross_val_score(pipe_selection, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Both of these approaches should be further tuned:\n", | |

| "\n", | |

| "- With SelectPercentile you should tune the percentile, and with SelectFromModel you should tune the threshold\n", | |

| "- You should also tune the transformer and model hyperparameters at the same time (using GridSearchCV), because you don't know which combination will produce the best results" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "### Khaled: How would you add feature standardization to our Pipeline?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Some (but not all) Machine Learning models benefit from feature standardization, and this is often done with StandardScaler:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 50, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "from sklearn.preprocessing import StandardScaler\n", | |

| "scaler = StandardScaler()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a reminder of our existing ColumnTransformer:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 51, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "If we wanted to scale \"Age\" and \"Fare\", we could make a Pipeline of imputation and scaling:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 52, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "imp_scaler = make_pipeline(imp, scaler)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Then replace imp with imp_scaler in our ColumnTransformer:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 53, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "ct_scaler = make_column_transformer(\n", | |

| " (imp_ohe, ['Embarked', 'Sex']),\n", | |

| " (vect, 'Name'),\n", | |

| " (imp_scaler, ['Age', 'Fare']),\n", | |

| " remainder='passthrough')" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Update the Pipeline:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 54, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "pipe_scaler = make_pipeline(ct_scaler, logreg)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Cross-validated accuracy has decreased slightly:\n", | |

| "\n", | |

| "- Don't assume that scaling is always needed\n", | |

| "- Our particular logisitic regression solver (liblinear) is robust to unscaled data" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 55, | |

| "metadata": {}, | |

| "outputs": [ | |

| { | |

| "data": { | |

| "text/plain": [ | |

| "0.8092210156299039" | |

| ] | |

| }, | |

| "execution_count": 55, | |

| "metadata": {}, | |

| "output_type": "execute_result" | |

| } | |

| ], | |

| "source": [ | |

| "cross_val_score(pipe_scaler, X, y, cv=5, scoring='accuracy').mean()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "An alternative way to include scaling is to scale all columns:\n", | |

| "\n", | |

| "- StandardScaler will destroy the sparsity in a sparse matrix, so use MaxAbsScaler instead" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 56, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "from sklearn.preprocessing import MaxAbsScaler\n", | |

| "scaler = MaxAbsScaler()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Update the Pipeline:\n", | |

| "\n", | |

| "- Use the original ColumnTransformer (does not include scaling)\n", | |

| "- MaxAbsScaler is the second step" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 57, | |

| "metadata": {}, | |

| "outputs": [], | |

| "source": [ | |

| "pipe_scaler = make_pipeline(ct, scaler, logreg)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |