Created December 4, 2019 17:44

mikewlange/3f7690a2cb2b33927f1cf600a4dd081a

/CreditWorkup/Data/start-here-a-gentle-introduction (1).ipynb


| { | |

| "cells": [ | |

| { | |

| "metadata": { | |

| "_cell_guid": "551ce207-0976-48c3-9242-fac3e6bdf527", | |

| "_uuid": "66406036d8dd7a0071295d1aee64f13bffc44e3a" | |

| }, | |

| "cell_type": "markdown", | |

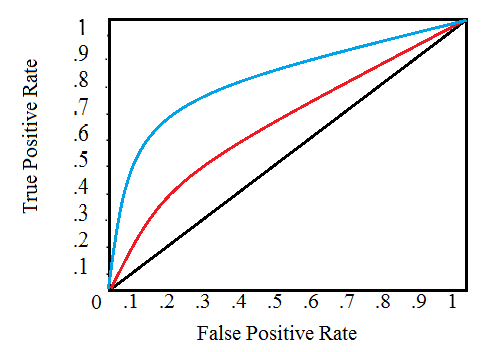

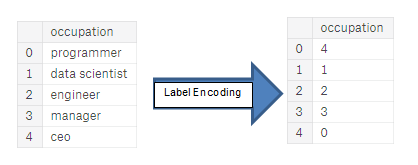

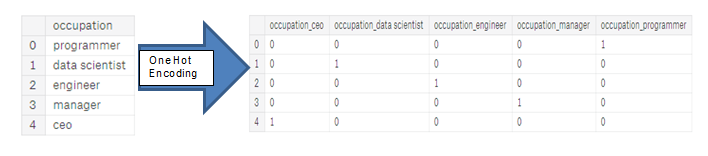

| "source": "# Introduction: Home Credit Default Risk Competition\n\nThis notebook is intended for those who are new to machine learning competitions or want a gentle introduction to the problem. I purposely avoid jumping into complicated models or joining together lots of data in order to show the basics of how to get started in machine learning! Any comments or suggestions are much appreciated.\n\nIn this notebook, we will take an initial look at the Home Credit default risk machine learning competition currently hosted on Kaggle. The objective of this competition is to use historical loan application data to predict whether or not an applicant will be able to repay a loan. This is a standard supervised classification task:\n\n* __Supervised__: The labels are included in the training data and the goal is to train a model to learn to predict the labels from the features\n* __Classification__: The label is a binary variable, 0 (will repay loan on time) or 1 (will have difficulty repaying loan)\n\n\n# Data\n\nThe data is provided by [Home Credit](http://www.homecredit.net/about-us.aspx), a service dedicated to providing lines of credit (loans) to the unbanked population. Predicting whether or not a client will repay a loan or have difficulty is a critical business need, and Home Credit is hosting this competition on Kaggle to see what sort of models the machine learning community can develop to help them in this task. \n\nThere are 7 different sources of data:\n\n* application_train/application_test: the main training and testing data with information about each loan application at Home Credit. Every loan has its own row and is identified by the feature `SK_ID_CURR`. The training application data comes with the `TARGET` column, indicating 0: the loan was repaid or 1: the loan was not repaid. \n* bureau: data concerning clients' previous credits from other financial institutions. 
Each previous credit has its own row in bureau, but one loan in the application data can have multiple previous credits.\n* bureau_balance: monthly data about the previous credits in bureau. Each row is one month of a previous credit, and a single previous credit can have multiple rows, one for each month of the credit length. \n* previous_application: previous Home Credit loan applications from clients who have loans in the application data. Each current loan in the application data can have multiple previous loans. Each previous application has one row and is identified by the feature `SK_ID_PREV`. \n* POS_CASH_BALANCE: monthly data about previous point of sale or cash loans clients have had with Home Credit. Each row is one month of a previous point of sale or cash loan, and a single previous loan can have many rows.\n* credit_card_balance: monthly data about previous credit cards clients have had with Home Credit. Each row is one month of a credit card balance, and a single credit card can have many rows.\n* installments_payment: payment history for previous loans at Home Credit. There is one row for every made payment and one row for every missed payment. \n\nThis diagram shows how all of the data is related:\n\n\n\nMoreover, we are provided with the definitions of all the columns (in `HomeCredit_columns_description.csv`) and an example of the expected submission file. \n\nIn this notebook, we will stick to using only the main application training and testing data. Although we will need to use all the data if we want to have any hope of seriously competing, for now we will stick to one file, which should be more manageable. This will let us establish a baseline that we can then improve upon. With these projects, it's best to build up an understanding of the problem a little at a time rather than diving all the way in and getting completely lost! 
\n\n## Metric: ROC AUC\n\nOnce we have a grasp of the data (reading through the [column descriptions](https://www.kaggle.com/c/home-credit-default-risk/data) helps immensely), we need to understand the metric by which our submission is judged. In this case, it is a common classification metric known as the [Receiver Operating Characteristic Area Under the Curve (ROC AUC, also sometimes called AUROC)](https://stats.stackexchange.com/questions/132777/what-does-auc-stand-for-and-what-is-it).\n\nThe ROC AUC may sound intimidating, but it is relatively straightforward once you can get your head around the two individual concepts. The [Receiver Operating Characteristic (ROC) curve](https://en.wikipedia.org/wiki/Receiver_operating_characteristic) graphs the true positive rate versus the false positive rate:\n\n\n\nA single line on the graph indicates the curve for a single model, and movement along a line indicates changing the threshold used for classifying a positive instance. The threshold starts at 0 in the upper right and goes to 1 in the lower left. A curve that is above and to the left of another curve indicates a better model. For example, the blue model is better than the red model, which is better than the black diagonal line which indicates a naive random guessing model. \n\nThe [Area Under the Curve (AUC)](http://gim.unmc.edu/dxtests/roc3.htm) explains itself by its name! It is simply the area under the ROC curve. (This is the integral of the curve.) This metric is between 0 and 1 with a better model scoring higher. A model that simply guesses at random will have an ROC AUC of 0.5.\n\nWhen we measure a classifier according to the ROC AUC, we do not generate 0 or 1 predictions, but rather a probability between 0 and 1. This may be confusing because we usually like to think in terms of accuracy, but when we get into problems with imbalanced classes (we will see this is the case), accuracy is not the best metric. 
For example, if I wanted to build a model that could detect terrorists with 99.9999% accuracy, I would simply make a model that predicted every single person was not a terrorist. Clearly, this would not be effective (the recall would be zero), which is why we use more advanced metrics such as ROC AUC or the [F1 score](https://en.wikipedia.org/wiki/F1_score) to more accurately reflect the performance of a classifier. A model with a high ROC AUC will often also have high accuracy, but the [ROC AUC is a better representation of model performance.](https://datascience.stackexchange.com/questions/806/advantages-of-auc-vs-standard-accuracy)\n\nNow that we know the background of the data we are using and the metric to maximize, let's get into exploring the data. In this notebook, as mentioned previously, we will stick to the main data source and simple models which we can build upon in future work. \n\n__Follow-up Notebooks__\n\nFor those looking to keep working on this problem, I have a series of follow-up notebooks:\n\n* [Manual Feature Engineering Part One](https://www.kaggle.com/willkoehrsen/introduction-to-manual-feature-engineering)\n* [Manual Feature Engineering Part Two](https://www.kaggle.com/willkoehrsen/introduction-to-manual-feature-engineering-p2)\n* [Introduction to Automated Feature Engineering](https://www.kaggle.com/willkoehrsen/automated-feature-engineering-basics)\n* [Advanced Automated Feature Engineering](https://www.kaggle.com/willkoehrsen/tuning-automated-feature-engineering-exploratory)\n* [Feature Selection](https://www.kaggle.com/willkoehrsen/introduction-to-feature-selection)\n* [Intro to Model Tuning: Grid and Random Search](https://www.kaggle.com/willkoehrsen/intro-to-model-tuning-grid-and-random-search)\n* [Automated Model Tuning](https://www.kaggle.com/willkoehrsen/automated-model-tuning)\n* [Model Tuning Results](https://www.kaggle.com/willkoehrsen/model-tuning-results-random-vs-bayesian-opt/notebook)\n\n\nI'll add more notebooks as I finish them! 
Thanks for all the comments! " | |

| }, | |
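To make the ROC AUC discussion concrete, here is a minimal pure-Python sketch on made-up labels and scores (nothing here comes from the Home Credit data). It sweeps the threshold from strict to loose to trace the ROC curve, integrates the curve with the trapezoidal rule, and compares the result with plain accuracy on an imbalanced toy set. The helper names `roc_points`, `roc_auc` and `accuracy` are illustrative only; in practice scikit-learn's `sklearn.metrics.roc_auc_score` does this job.

```python
def roc_points(labels, scores):
    """(FPR, TPR) pairs from the strictest threshold (nothing predicted positive)
    to the loosest (everything predicted positive)."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for t in thresholds:
        # A score at or above the threshold counts as a positive prediction
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def roc_auc(labels, scores):
    """Area under the ROC curve using the trapezoidal rule."""
    pts = roc_points(labels, scores)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


def accuracy(labels, predictions):
    """Fraction of predictions that match the labels."""
    return sum(y == p for y, p in zip(labels, predictions)) / len(labels)


# Toy imbalanced data: 8 repaid loans (0) and 2 defaults (1)
labels = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
model_scores = [0.1, 0.2, 0.15, 0.3, 0.05, 0.4, 0.25, 0.6, 0.7, 0.35]
constant_scores = [0.0] * len(labels)  # a "nobody defaults" model

for name, scores in [("constant", constant_scores), ("model", model_scores)]:
    hard = [int(s >= 0.5) for s in scores]  # 0/1 predictions at a 0.5 cutoff
    print(name, "accuracy:", accuracy(labels, hard), "ROC AUC:", roc_auc(labels, scores))
# constant accuracy: 0.8 ROC AUC: 0.5
# model accuracy: 0.8 ROC AUC: 0.875
```

Both models reach the same 0.8 accuracy, but only the second one tends to rank defaults above repaid loans, and the ROC AUC picks that up. A constant score always lands on the diagonal and scores 0.5, the same as random guessing.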

| { | |

| "metadata": { | |

| "_cell_guid": "d632b08c-d252-4238-b496-e2c6edebec4b", | |

| "_uuid": "eb13bf76d4e1e60d0703856ec391cdc2c5bdf1fb" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Imports\n\nWe are using a typical data science stack: `numpy`, `pandas`, `sklearn`, `matplotlib`. " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "b1076dfc-b9ad-4769-8c92-a6c4dae69d19", | |

| "_uuid": "8f2839f25d086af736a60e9eeb907d3b93b6e0e5", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# numpy and pandas for data manipulation\nimport numpy as np\nimport pandas as pd \n\n# sklearn preprocessing for dealing with categorical variables\nfrom sklearn.preprocessing import LabelEncoder\n\n# File system management\nimport os\n\n# Suppress warnings \nimport warnings\nwarnings.filterwarnings('ignore')\n\n# matplotlib and seaborn for plotting\nimport matplotlib.pyplot as plt\nimport seaborn as sns", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "a5e67831-4751-4f11-8e07-527e3e092671", | |

| "_uuid": "ded520f73b9e94ed47ac2e994a5fb1bcb9093d0f" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Read in Data \n\nFirst, we can list all the available data files. There are a total of 9 files: 1 main file for training (with target), 1 main file for testing (without the target), 1 example submission file, and 6 other files containing additional information about each loan. " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "2cdca894-e637-43a9-8f80-5791c2bb9041", | |

| "_uuid": "c54e1559611512ebd447ac24f2226c2fffd61dcd", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# List files available\nprint(os.listdir(\"../input/\"))", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "79c7e3d0-c299-4dcb-8224-4455121ee9b0", | |

| "_uuid": "d629ff2d2480ee46fbb7e2d37f6b5fab8052498a", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Training data\napp_train = pd.read_csv('../input/application_train.csv')\nprint('Training data shape: ', app_train.shape)\napp_train.head()", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "4695541966d3d29e8a7a8975b072d01caff1631d" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The training data has 307511 observations (each one a separate loan) and 122 features (variables) including the `TARGET` (the label we want to predict)." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "d077aee0-5271-440e-bc07-6087eab40b74", | |

| "_uuid": "cbd1c4111df6f07bc0d479b51f50895e728b717a", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Testing data features\napp_test = pd.read_csv('../input/application_test.csv')\nprint('Testing data shape: ', app_test.shape)\napp_test.head()", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "e351f02c8a5886756507a2d4f1ddba4791220f12" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The test set is considerably smaller and lacks a `TARGET` column. " | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "0b1a02afd367d1c4ee3a3a936382ca42fb921b9d" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "# Exploratory Data Analysis\n\nExploratory Data Analysis (EDA) is an open-ended process where we calculate statistics and make figures to find trends, anomalies, patterns, or relationships within the data. The goal of EDA is to learn what our data can tell us. It generally starts out with a high-level overview, then narrows in on specific areas as we find intriguing parts of the data. The findings may be interesting in their own right, or they can be used to inform our modeling choices, such as by helping us decide which features to use." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "23b20e53-3484-4c4b-bec9-2d8ac2ac918d", | |

| "_uuid": "7c006a09627df1333c557dc11a09f372bde34dda" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Examine the Distribution of the Target Column\n\nThe target is what we are asked to predict: either a 0, meaning the loan was repaid on time, or a 1, indicating the client had payment difficulties. We can first examine the number of loans falling into each category." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "5fb6ab16-1b38-4ecf-8123-e48c7c061773", | |

| "_uuid": "2163ca09678b53dbe88388ccbc7d0e0f7d6c6230", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_train['TARGET'].value_counts()", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "0e93c1e2-f6b8-4a0b-82b6-7dad8df56048", | |

| "_uuid": "1b2611fb3cf392023c3f40fd2f7b96f56f5dee7d", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_train['TARGET'].astype(int).plot.hist();", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "48f008ff-d81e-46b2-80a3-e58f2a6627ca", | |

| "_uuid": "119106000875202a0030109f14b73245fc4285e1" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "From this information, we see this is an [_imbalanced class problem_](http://www.chioka.in/class-imbalance-problem/). There are far more loans that were repaid on time than loans that were not repaid. Once we get into more sophisticated machine learning models, we can [weight the classes](http://xgboost.readthedocs.io/en/latest/parameter.html) by their representation in the data to reflect this imbalance. " | |

| }, | |
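As a rough sketch of what weighting the classes means, the pure-Python snippet below derives two common weightings from label counts: the negative-to-positive ratio, which the XGBoost parameter documentation suggests as a typical value for `scale_pos_weight`, and the `n_samples / (n_classes * count)` formula behind scikit-learn's `class_weight='balanced'`. The label counts are made up for illustration, and the function names are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def negative_positive_ratio(labels):
    """Number of 0 labels divided by number of 1 labels."""
    counts = Counter(labels)
    return counts[0] / counts[1]


# Hypothetical labels: 90 loans repaid on time (0), 10 with payment difficulties (1)
labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
print(weights[1])                       # 5.0
print(negative_positive_ratio(labels))  # 9.0
```

With the balanced weights, each class contributes the same total weight (90 * 100/180 = 10 * 5 = 50), so the rare class is no longer swamped by the common one during training.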

| { | |

| "metadata": { | |

| "_cell_guid": "507ec6b1-99d0-4324-a3ed-bdea2f916227", | |

| "_uuid": "58851dfef481f32b3026e89b086534ea3683440d" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Examine Missing Values\n\nNext we can look at the number and percentage of missing values in each column. " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "fc4c675f-e4a1-4e4f-9ece-3c59e5c8f7fd", | |

| "_uuid": "7a2f5c72c45fa04d9fa95e8051ae595be806e9a2", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Function to calculate missing values by column\ndef missing_values_table(df):\n    # Total missing values\n    mis_val = df.isnull().sum()\n    \n    # Percentage of missing values\n    mis_val_percent = 100 * mis_val / len(df)\n    \n    # Make a table with the results\n    mis_val_table = pd.concat([mis_val, mis_val_percent], axis=1)\n    \n    # Rename the columns\n    mis_val_table_ren_columns = mis_val_table.rename(\n        columns = {0 : 'Missing Values', 1 : '% of Total Values'})\n    \n    # Sort the table by percentage of missing descending\n    mis_val_table_ren_columns = mis_val_table_ren_columns[\n        mis_val_table_ren_columns.iloc[:,1] != 0].sort_values(\n        '% of Total Values', ascending=False).round(1)\n    \n    # Print some summary information\n    print (\"Your selected dataframe has \" + str(df.shape[1]) + \" columns.\\n\"\n        \"There are \" + str(mis_val_table_ren_columns.shape[0]) +\n        \" columns that have missing values.\")\n    \n    # Return the dataframe with missing information\n    return mis_val_table_ren_columns", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "786881f0-235e-441c-8319-f715a3b7d920", | |

| "_uuid": "98b0a82a3009b8f6d0bc718a2e1eaba779b4ace9", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Missing values statistics\nmissing_values = missing_values_table(app_train)\nmissing_values.head(20)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "df3c6a1d-b3ff-4565-bb32-5cba43c52729", | |

| "_uuid": "0b1be19103910ce83ebf54eeed99e42829643578" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "When it comes time to build our machine learning models, we will have to fill in these missing values (known as imputation). In later work, we will use models such as XGBoost that can [handle missing values with no need for imputation](https://stats.stackexchange.com/questions/235489/xgboost-can-handle-missing-data-in-the-forecasting-phase). Another option would be to drop columns with a high percentage of missing values, although it is impossible to know ahead of time if these columns will be helpful to our model. Therefore, we will keep all of the columns for now." | |

| }, | |
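Both options mentioned above (dropping mostly-empty columns and imputing the rest) can be sketched in a few lines of pandas. This is a hedged illustration on a tiny made-up frame, not the notebook's pipeline: the helper name `drop_and_impute` and the 60% cutoff are arbitrary assumptions. The detail worth copying is that the columns to drop and the medians used for filling are computed from the training data only and then applied unchanged to the test data.

```python
import numpy as np
import pandas as pd


def drop_and_impute(train, test, max_missing_pct=60.0):
    """Drop columns missing in more than `max_missing_pct` percent of training rows,
    then fill the remaining gaps in both frames with the training medians."""
    missing_pct = 100 * train.isnull().sum() / len(train)
    to_drop = missing_pct[missing_pct > max_missing_pct].index
    train, test = train.drop(columns=to_drop), test.drop(columns=to_drop)
    medians = train.median()  # learned from the training data only
    return train.fillna(medians), test.fillna(medians), list(to_drop)


train = pd.DataFrame({
    "A": [1.0, np.nan, np.nan, np.nan],  # 75% missing -> dropped
    "B": [1.0, 2.0, np.nan, 4.0],        # 25% missing -> imputed
    "C": [1.0, 2.0, 3.0, 4.0],           # complete
})
test = pd.DataFrame({"A": [np.nan, 1.0], "B": [np.nan, 3.0], "C": [5.0, 6.0]})

train_filled, test_filled, dropped = drop_and_impute(train, test)
print(dropped)                    # ['A']
print(test_filled["B"].tolist())  # [2.0, 3.0]
```

Computing the medians on the combined training and test data would leak test-set information into preprocessing; keeping them train-only mirrors how the model will later see unseen applications.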

| { | |

| "metadata": { | |

| "_uuid": "0672e40c3ab75a7901c0de35d248b322a227dc7f" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Column Types\n\nLet's look at the number of columns of each data type. `int64` and `float64` are numeric variables ([which can be either discrete or continuous](https://stats.stackexchange.com/questions/206/what-is-the-difference-between-discrete-data-and-continuous-data)). `object` columns contain strings and are [categorical features](http://support.minitab.com/en-us/minitab-express/1/help-and-how-to/modeling-statistics/regression/supporting-topics/basics/what-are-categorical-discrete-and-continuous-variables/). " | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "a03caadd76fa32f4b193e52467d4f39f2145d7b6", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Number of each type of column\napp_train.dtypes.value_counts()", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "5859303c9acc63f7ff7acce063a9cd022a6d38cd" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Let's now look at the number of unique entries in each of the `object` (categorical) columns." | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "2d021eda10939a19b141292d34491b357acd201a", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Number of unique classes in each object column\napp_train.select_dtypes('object').apply(pd.Series.nunique, axis = 0)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "10ceaf3ba31e26c822b242b1278d93ebfbefcc0a" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Most of the categorical variables have a relatively small number of unique entries. We will need to find a way to deal with these categorical variables! " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "86d1b309-5524-4298-b873-2c1c09eddec6", | |

| "_uuid": "1b49e667293daabffd8a4b2b6d02cf44bf6a3ba8" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Encoding Categorical Variables\n\nBefore we go any further, we need to deal with pesky categorical variables. Most machine learning models unfortunately cannot deal with categorical variables directly (except for some models such as [LightGBM](http://lightgbm.readthedocs.io/en/latest/Features.html)). Therefore, we have to find a way to encode (represent) these variables as numbers before handing them off to the model. There are two main ways to carry out this process:\n\n* Label encoding: assign each unique category in a categorical variable an integer. No new columns are created. An example is shown below.\n\n\n\n* One-hot encoding: create a new column for each unique category in a categorical variable. Each observation receives a 1 in the column for its corresponding category and a 0 in all other new columns. \n\n\n\nThe problem with label encoding is that it gives the categories an arbitrary ordering. The value assigned to each of the categories is random and does not reflect any inherent aspect of the category. In the example above, programmer receives a 4 and data scientist a 1, but if we did the same process again, the labels could be reversed or completely different. The actual assignment of the integers is arbitrary. Therefore, when we perform label encoding, the model might use the relative value of the feature (for example programmer = 4 and data scientist = 1) to assign weights, which is not what we want. If we only have two unique values for a categorical variable (such as Male/Female), then label encoding is fine, but for more than 2 unique categories, one-hot encoding is the safe option.\n\nThere is some debate about the relative merits of these approaches, and some models can deal with label encoded categorical variables with no issues. [Here is a good Data Science Stack Exchange discussion](https://datascience.stackexchange.com/questions/9443/when-to-use-one-hot-encoding-vs-labelencoder-vs-dictvectorizor). 
I think (and this is just a personal opinion) that for categorical variables with many classes, one-hot encoding is the safest approach because it does not impose arbitrary values on categories. The only downside to one-hot encoding is that the number of features (dimensions of the data) can explode when categorical variables have many categories. To deal with this, we can perform one-hot encoding followed by [PCA](http://www.cs.otago.ac.nz/cosc453/student_tutorials/principal_components.pdf) or other [dimensionality reduction methods](https://www.analyticsvidhya.com/blog/2015/07/dimension-reduction-methods/) to reduce the number of dimensions (while still trying to preserve information). \n\nIn this notebook, we will use Label Encoding for any categorical variables with only 2 categories and One-Hot Encoding for any categorical variables with more than 2 categories. This process may need to change as we get further into the project, but for now, we will see where this gets us. (We will also not use any dimensionality reduction in this notebook, but will explore it in future iterations.)" | |

| }, | |
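The difference between the two encodings is easy to see on a toy occupation column. The pure-Python sketch below is illustrative only: `label_encode` mimics the sorted-category ordering that scikit-learn's `LabelEncoder` uses, and `one_hot_encode` builds one 0/1 indicator list per category, roughly what `pd.get_dummies` produces. Both function names are hypothetical.

```python
def label_encode(values):
    """Map each distinct category to an integer, in sorted category order."""
    mapping = {cat: code for code, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


def one_hot_encode(values):
    """Return one indicator list (1 = row has this category) per category."""
    return {cat: [int(v == cat) for v in values] for cat in sorted(set(values))}


occupations = ["programmer", "data scientist", "engineer", "programmer"]

codes, mapping = label_encode(occupations)
print(codes)    # [2, 0, 1, 2]
print(mapping)  # {'data scientist': 0, 'engineer': 1, 'programmer': 2}

for category, column in one_hot_encode(occupations).items():
    print(category, column)
# data scientist [0, 1, 0, 0]
# engineer [0, 0, 1, 0]
# programmer [1, 0, 0, 1]
```

The integer codes place "engineer" numerically between "data scientist" and "programmer", an ordering with no real meaning that a model could still pick up on; the indicator columns avoid this at the cost of one new column per category.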

| { | |

| "metadata": { | |

| "_cell_guid": "95627792-157e-457a-88a8-3b3875c7e1d5", | |

| "_uuid": "46f5bf9a6de52e270aa911ffd895e704da5426ec" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "### Label Encoding and One-Hot Encoding\n\nLet's implement the policy described above: for any categorical variable (`dtype == object`) with 2 unique categories, we will use label encoding, and for any categorical variable with more than 2 unique categories, we will use one-hot encoding. \n\nFor label encoding, we use the Scikit-Learn `LabelEncoder` and for one-hot encoding, the pandas `get_dummies(df)` function." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "70641d4d-1075-4837-8972-e58d70d8f242", | |

| "_uuid": "ddfaae5c3dcc7ec6bb47a2dffc10d364e8d25355", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Create a label encoder object\nle = LabelEncoder()\nle_count = 0\n\n# Iterate through the columns\nfor col in app_train:\n    if app_train[col].dtype == 'object':\n        # If 2 or fewer unique categories\n        if len(list(app_train[col].unique())) <= 2:\n            # Train on the training data\n            le.fit(app_train[col])\n            # Transform both training and testing data\n            app_train[col] = le.transform(app_train[col])\n            app_test[col] = le.transform(app_test[col])\n\n            # Keep track of how many columns were label encoded\n            le_count += 1\n\nprint('%d columns were label encoded.' % le_count)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "0851773b-39fd-4cf0-9a66-e30adeef3e57", | |

| "_uuid": "6796c6dc793a08e162b6e20c6f185ef37bdf51f3", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# one-hot encoding of categorical variables\napp_train = pd.get_dummies(app_train)\napp_test = pd.get_dummies(app_test)\n\nprint('Training Features shape: ', app_train.shape)\nprint('Testing Features shape: ', app_test.shape)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "61d910b5-84f5-4655-bd8a-d29672c13741", | |

| "_uuid": "1b2c4198638ec8e5155097d112249de8754eb5c0" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "### Aligning Training and Testing Data\n\nThe training and testing data need to have the same features (columns). One-hot encoding has created more columns in the training data because there were some categorical variables with categories not represented in the testing data. To remove the columns in the training data that are not in the testing data, we need to `align` the dataframes. First we extract the target column from the training data (because this is not in the testing data but we need to keep this information). When we do the align, we must make sure to set `axis = 1` to align the dataframes based on the columns and not on the rows!" | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "d99ca215-e893-490c-a6a4-83f3e8a067b3", | |

| "_uuid": "e0d12a13cb95521c19b10d8829e8abe2b1118396", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "train_labels = app_train['TARGET']\n\n# Align the training and testing data, keep only columns present in both dataframes\napp_train, app_test = app_train.align(app_test, join = 'inner', axis = 1)\n\n# Add the target back in\napp_train['TARGET'] = train_labels\n\nprint('Training Features shape: ', app_train.shape)\nprint('Testing Features shape: ', app_test.shape)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |
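A tiny made-up example shows why the alignment step is needed. Here the training frame has a category (`green`) that never appears in the test frame, so `pd.get_dummies` creates that column only on the training side; `DataFrame.align` with `join='inner'` and `axis=1` then keeps just the shared columns. The column names and values are hypothetical.

```python
import pandas as pd

train = pd.DataFrame({"TARGET": [0, 1, 0], "COLOR": ["red", "blue", "green"]})
test = pd.DataFrame({"COLOR": ["red", "blue"]})

train_enc = pd.get_dummies(train)  # TARGET, COLOR_blue, COLOR_green, COLOR_red
test_enc = pd.get_dummies(test)    # COLOR_blue, COLOR_red

# Set the target aside, keep only columns present in both frames, then restore it
labels = train_enc["TARGET"]
train_aligned, test_aligned = train_enc.align(test_enc, join="inner", axis=1)
train_aligned["TARGET"] = labels

print(sorted(train_aligned.columns))  # ['COLOR_blue', 'COLOR_red', 'TARGET']
print(sorted(test_aligned.columns))   # ['COLOR_blue', 'COLOR_red']
```

Setting `TARGET` aside first matters: it exists only in the training frame, so the inner alignment drops it along with `COLOR_green`.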

| { | |

| "metadata": { | |

| "_uuid": "802ffdbae02e43a9ca4e256ffcd6bd40ae15f3e9" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The training and testing datasets now have the same features which is required for machine learning. The number of features has grown significantly due to one-hot encoding. At some point we probably will want to try [dimensionality reduction (removing features that are not relevant)](https://en.wikipedia.org/wiki/Dimensionality_reduction) to reduce the size of the datasets." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "13918211-0e6b-4d72-955b-f997db19eea2", | |

| "_uuid": "4d7c8dd1d5bb5a0ef84cb78e6bff927249e62145" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Back to Exploratory Data Analysis\n\n### Anomalies\n\nOne problem we always want to be on the lookout for when doing EDA is anomalies within the data. These may be due to mis-typed numbers, errors in measuring equipment, or they could be valid but extreme measurements. One way to spot anomalies quantitatively is by looking at the statistics of a column using the `describe` method. The numbers in the `DAYS_BIRTH` column are negative because they are recorded relative to the current loan application. To see these stats in years, we can multiply by -1 and divide by the number of days in a year:\n\n" | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "a60be93c2d7d63855e6d65c1109f408ad85da134", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "(app_train['DAYS_BIRTH'] / -365).describe()", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "acb37a3e3f2e0b2fd581259788b9255398314157" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Those ages look reasonable. There are no outliers for the age on either the high or low end. How about the days of employment? " | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "600c59dd5d970d3ccfea3a6af0036d85958adc91", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_train['DAYS_EMPLOYED'].describe()", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "1cdd9dafce28e497e08062cd3b189ac353c04cd9" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "That doesn't look right! The maximum value (besides being positive) is about 1000 years! " | |

| }, | |
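The "about 1000 years" figure is simple arithmetic using the same day-to-year conversion as the `DAYS_BIRTH` cell (365 days per year, ignoring leap years). The sketch below is illustrative: `days_to_years` is a hypothetical helper, `-12000` is a made-up birth offset, and 365243 is the anomalous `DAYS_EMPLOYED` value that the following cells filter on.

```python
DAYS_PER_YEAR = 365  # same approximation as the notebook's DAYS_BIRTH / -365


def days_to_years(days):
    """Convert a day offset relative to the application (negative = in the past) to years ago."""
    return days / -DAYS_PER_YEAR


print(round(days_to_years(-12000), 1))   # 32.9, a plausible age
print(round(365243 / DAYS_PER_YEAR, 1))  # 1000.7, the suspicious DAYS_EMPLOYED maximum
```

Under the same sign convention, `days_to_years(365243)` is negative, which is why a positive `DAYS_EMPLOYED` value is already a red flag before its size is even considered.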

| { | |

| "metadata": { | |

| "_uuid": "2878bfb3a2be4554f33e03e1a04d4c1978b52a08", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_train['DAYS_EMPLOYED'].plot.hist(title = 'Days Employment Histogram');\nplt.xlabel('Days Employment');", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "d28ca1e799c0a6113cc5e920297e1dc93d380af4" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Just out of curiosity, let's subset the anomalous clients and see if they tend to have higher or lower rates of default than the rest of the clients." | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "67ea87d9ef6974b1780a7db1eefd13f90f81b5be", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "anom = app_train[app_train['DAYS_EMPLOYED'] == 365243]\nnon_anom = app_train[app_train['DAYS_EMPLOYED'] != 365243]\nprint('The non-anomalies default on %0.2f%% of loans' % (100 * non_anom['TARGET'].mean()))\nprint('The anomalies default on %0.2f%% of loans' % (100 * anom['TARGET'].mean()))\nprint('There are %d anomalous days of employment' % len(anom))", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "1edfcf786aadb004f083e9896989a29e43bf80da" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Well, that is extremely interesting! It turns out that the anomalies have a lower rate of default. \n\nHandling the anomalies depends on the exact situation, with no set rules. One of the safest approaches is just to set the anomalies to a missing value and then have them filled in (using imputation) before machine learning. In this case, since all the anomalies have the exact same value, we want to fill them in with the same value in case all of these loans share something in common. The anomalous values seem to have some importance, so we want to tell the machine learning model if we did in fact fill in these values. As a solution, we will fill in the anomalous values with not a number (`np.nan`) and then create a new boolean column indicating whether or not the value was anomalous.\n\n" | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "e23ec3cb89428f3dd994b572f718cc729740cfab", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Create an anomalous flag column\napp_train['DAYS_EMPLOYED_ANOM'] = app_train[\"DAYS_EMPLOYED\"] == 365243\n\n# Replace the anomalous values with nan\n# (assign the result back: inplace=True on a selected column relies on chained assignment,\n# which does not update the dataframe under pandas Copy-on-Write)\napp_train['DAYS_EMPLOYED'] = app_train['DAYS_EMPLOYED'].replace({365243: np.nan})\n\napp_train['DAYS_EMPLOYED'].plot.hist(title = 'Days Employment Histogram');\nplt.xlabel('Days Employment');", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "839595437c0721e2480f6b4ee58f3060b222f166" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The distribution looks to be much more in line with what we would expect, and we also have created a new column to tell the model that these values were originally anomalous (because we will have to fill in the nans with some value, probably the median of the column). The other columns with `DAYS` in the dataframe look to be about what we expect with no obvious outliers. \n\nAs an extremely important note, anything we do to the training data we also have to do to the testing data. Let's make sure to create the new column and fill in the existing column with `np.nan` in the testing data." | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "a0d7c77b2adecaa878f39cf86ffddcfbbe51a190", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_test['DAYS_EMPLOYED_ANOM'] = app_test[\"DAYS_EMPLOYED\"] == 365243\napp_test[\"DAYS_EMPLOYED\"] = app_test[\"DAYS_EMPLOYED\"].replace({365243: np.nan})\n\nprint('There are %d anomalies in the test data out of %d entries' % (app_test[\"DAYS_EMPLOYED_ANOM\"].sum(), len(app_test)))", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |
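The flag-then-replace steps above, together with the median fill the notebook plans to do later, can be sketched in plain Python on a few hypothetical `DAYS_EMPLOYED` values. This is a minimal illustration under stated assumptions, not the notebook's pandas code: the toy numbers and the helper names `flag_and_replace` and `median_fill` are made up. It shows two points from the text: the flag must be computed before the sentinel value is overwritten, and the fill value should come from the training data and be reused unchanged for the testing data.

```python
import math
import statistics

ANOMALY = 365243  # the sentinel value found in DAYS_EMPLOYED

def flag_and_replace(values, anomaly=ANOMALY):
    """Return (cleaned values, anomaly flags); the flags are computed before replacing."""
    flags = [v == anomaly for v in values]
    cleaned = [math.nan if v == anomaly else v for v in values]
    return cleaned, flags

def median_fill(values, fill):
    """Replace NaN entries with a precomputed fill value."""
    return [fill if math.isnan(v) else v for v in values]

# Hypothetical toy data (days employed are negative in the raw data)
train_raw = [-1000, 365243, -2500, -400, 365243]
test_raw = [365243, -3000]

train_clean, train_flags = flag_and_replace(train_raw)
test_clean, test_flags = flag_and_replace(test_raw)

# The median is computed on the training data only and reused for the testing data
train_median = statistics.median(v for v in train_clean if not math.isnan(v))
train_filled = median_fill(train_clean, train_median)
test_filled = median_fill(test_clean, train_median)

print(train_flags)   # [False, True, False, False, True]
print(train_filled)  # [-1000, -1000, -2500, -400, -1000]
print(test_filled)   # [-1000, -3000]
```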

| { | |

| "metadata": { | |

| "_uuid": "fd656b392faad3b34ecfa448b55ad03e75449e0a" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "### Correlations\n\nNow that we have dealt with the categorical variables and the outliers, let's continue with the EDA. One way to try to understand the data is by looking for correlations between the features and the target. We can calculate the Pearson correlation coefficient between every variable and the target using the `.corr` dataframe method.\n\nThe correlation coefficient is not the best method to represent the \"relevance\" of a feature (it only measures the strength of a linear relationship), but it does give us an idea of possible relationships within the data. Some [general interpretations of the absolute value of the correlation coefficient](http://www.statstutor.ac.uk/resources/uploaded/pearsons.pdf) are:\n\n\n* .00-.19 “very weak”\n* .20-.39 “weak”\n* .40-.59 “moderate”\n* .60-.79 “strong”\n* .80-1.0 “very strong”\n" | |

| }, | |
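For intuition, here is a from-scratch sketch of the Pearson coefficient that `.corr` computes by default, plus a helper that maps its absolute value onto the interpretation bands above. The feature and target values are hypothetical, and `pearson` and `strength` are illustrative names, not pandas functions.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def strength(r):
    """Label |r| using the interpretation bands listed above."""
    r = abs(r)
    if r < 0.20:
        return "very weak"
    if r < 0.40:
        return "weak"
    if r < 0.60:
        return "moderate"
    if r < 0.80:
        return "strong"
    return "very strong"

# Hypothetical toy feature and binary target
feature = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
target = [0, 0, 0, 1, 0, 1]
r = pearson(feature, target)
print(round(r, 3), strength(r))  # prints: 0.621 strong
```

With a 0/1 target, this Pearson coefficient is also known as the point-biserial correlation.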

| { | |

| "metadata": { | |

| "_cell_guid": "02acdb8d-d95f-41b9-8ad1-e2b6cb26f398", | |

| "_uuid": "d39d15d64db1f2c9015c6f542911ef9a9cac119e", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Find correlations with the target and sort\ncorrelations = app_train.corr()['TARGET'].sort_values()\n\n# Display correlations\nprint('Most Positive Correlations:\\n', correlations.tail(15))\nprint('\\nMost Negative Correlations:\\n', correlations.head(15))", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "8cfa409c-ec74-4fa4-8093-e7d00596c9c5", | |

| "_uuid": "67e1f0f22ec8e26c38827c24ca1e9409d73c9c64" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Let's take a look at some of the more significant correlations: `DAYS_BIRTH` has the most positive correlation (except for `TARGET`, because the correlation of a variable with itself is always 1!). Looking at the documentation, `DAYS_BIRTH` is the age of the client in days at the time of the loan, recorded as a negative number (for whatever reason!). The correlation is positive, but the value of this feature is actually negative, meaning that as the client gets older, they are less likely to default on their loan (i.e. the target == 0). That's a little confusing, so we will take the absolute value of the feature, and then the correlation will be negative." | |

| }, | |
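Because every `DAYS_BIRTH` value is negative, taking the absolute value is the same as multiplying the column by -1, and that flips the sign of the Pearson correlation without changing its magnitude. Here is a quick plain-Python check on hypothetical values (the `pearson` helper is illustrative, not a library function):

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical ages stored as negative days (like DAYS_BIRTH) and a 0/1 target
days_birth = [-8000, -10000, -12000, -15000, -20000, -23000]
target = [1, 1, 0, 1, 0, 0]

r_raw = pearson(days_birth, target)                    # positive: younger = less negative
r_abs = pearson([abs(d) for d in days_birth], target)  # same magnitude, opposite sign
print(r_raw, r_abs)
```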

| { | |

| "metadata": { | |

| "_cell_guid": "0f7b1cfb-9e5c-4720-9618-ad326940f3f3", | |

| "_uuid": "c1b831b6d1c3221efb123fbc1a4882aa1f598ec0" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "### Effect of Age on Repayment" | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "b0ab583c-dfbb-4ff7-80e5-d747fc408499", | |

| "_uuid": "f705c7aa49486ec3bf119c4edc4e4af58861b88d", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

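| "source": "# Take the absolute value of the days since birth (in both the training and testing data)\napp_train['DAYS_BIRTH'] = abs(app_train['DAYS_BIRTH'])\napp_test['DAYS_BIRTH'] = abs(app_test['DAYS_BIRTH'])\n\n# Find the correlation of the positive days since birth and target\napp_train['DAYS_BIRTH'].corr(app_train['TARGET'])", | |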

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "3fde277c-ebf1-4eaf-a353-c18fc4b518a6", | |

| "_uuid": "2b95e2c33bdd50682e7105d0f27b9cc3ad5b482d" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "After taking the absolute value, the correlation is negative: as clients get older, they tend to repay their loans on time more often. \n\nLet's take a closer look at this variable. First, we can make a histogram of the age. We will put the x axis in years to make the plot a little more understandable." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "35e36393-e388-488e-ba7a-7473169d3e6f", | |

| "_uuid": "739226c4594130d6aabeb25ffb8742c37657d7a4", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Set the style of plots\nplt.style.use('fivethirtyeight')\n\n# Plot the distribution of ages in years\nplt.hist(app_train['DAYS_BIRTH'] / 365, edgecolor = 'k', bins = 25)\nplt.title('Age of Client'); plt.xlabel('Age (years)'); plt.ylabel('Count');", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "02f5d3c5-e527-430b-a38d-531aeb8f3dd1", | |

| "_uuid": "340680b4a4ecf310a6369808157b17cac7c13461" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "By itself, the distribution of age does not tell us much, other than that there are no outliers, since all the ages are reasonable. To visualize the effect of the age on the target, we will next make a [kernel density estimation plot](https://en.wikipedia.org/wiki/Kernel_density_estimation) (KDE) colored by the value of the target. A [kernel density estimate plot shows the distribution of a single variable](https://chemicalstatistician.wordpress.com/2013/06/09/exploratory-data-analysis-kernel-density-estimation-in-r-on-ozone-pollution-data-in-new-york-and-ozonopolis/) and can be thought of as a smoothed histogram (it is created by computing a kernel, usually a Gaussian, at each data point and then averaging all the individual kernels to develop a single smooth curve). We will use the seaborn `kdeplot` for this graph." | |

| }, | |
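The "average of kernels" description can be made concrete with a minimal Gaussian KDE in plain Python. This is a sketch, not seaborn's implementation: seaborn picks the bandwidth automatically, whereas here `bandwidth` is a hand-picked assumption, and the ages are hypothetical. Because each kernel integrates to 1 and the kernels are averaged, the estimate should also integrate to roughly 1, which the Riemann sum checks.

```python
import math

def gaussian_kde(data, bandwidth):
    """Return a function estimating the density of `data` with Gaussian kernels."""
    n = len(data)
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        # Average of one Gaussian bump centred on each data point
        return norm * sum(math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in data)

    return density

# Hypothetical ages in years
ages = [23, 25, 31, 34, 35, 41, 48, 52, 60]
kde = gaussian_kde(ages, bandwidth=4.0)

# A Riemann sum over the range 10 to 80 years should be close to 1 (it is a density)
step = 0.1
area = sum(kde(10 + i * step) for i in range(700)) * step
print(round(area, 3))
```

A larger bandwidth gives a smoother curve that hides detail; a smaller one follows individual points more closely.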

| { | |

| "metadata": { | |

| "_cell_guid": "3982a18f-2731-4bb2-80c9-831b2377421f", | |

| "_uuid": "2e045e65f048789b577477356df4337c9e5e2087", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "plt.figure(figsize = (10, 8))\n\n# KDE plot of loans that were repaid on time\nsns.kdeplot(app_train.loc[app_train['TARGET'] == 0, 'DAYS_BIRTH'] / 365, label = 'target == 0')\n\n# KDE plot of loans which were not repaid on time\nsns.kdeplot(app_train.loc[app_train['TARGET'] == 1, 'DAYS_BIRTH'] / 365, label = 'target == 1')\n\n# Labeling of plot (plt.legend displays the label of each curve)\nplt.xlabel('Age (years)'); plt.ylabel('Density'); plt.title('Distribution of Ages');\nplt.legend();", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "9749e164-efea-47d2-ab60-5a8b89ff0570", | |

| "_uuid": "57757e02285b8067b61e3f586174ad64bec78ac1" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The target == 1 curve skews towards the younger end of the range. Although this is not a strong correlation (-0.07 correlation coefficient), this variable is likely going to be useful in a machine learning model because it does appear to be related to the target. Let's look at this relationship in another way: average failure to repay loans by age bracket. \n\nTo make this graph, first we `cut` the ages into bins of 5 years each. Then, for each bin, we calculate the average value of the target, which tells us the proportion of loans that were not repaid in each age category." | |

| }, | |
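The `cut`-then-average step can be sketched without pandas. This sketch assumes `pd.cut`'s default right-closed bins, `(lo, hi]`, with edges 20, 25, ..., 70 as produced by `np.linspace(20, 70, num = 11)`; ages outside `(20, 70]` get no bin. The ages and targets are hypothetical, and `bin_label` and `failure_rate_by_bin` are illustrative names. The mean of a 0/1 target within a bin is exactly the failure-to-repay rate for that bin.

```python
import bisect

# Bin edges 20, 25, ..., 70 (the same edges as np.linspace(20, 70, num = 11))
edges = [20 + 5 * i for i in range(11)]

def bin_label(age):
    """Return the right-closed interval (lo, hi] containing age, or None if outside."""
    i = bisect.bisect_left(edges, age)
    if i == 0 or i == len(edges):
        return None  # outside (20, 70]
    return (edges[i - 1], edges[i])

def failure_rate_by_bin(ages, targets):
    """Average of the 0/1 target within each age bin."""
    totals, counts = {}, {}
    for age, t in zip(ages, targets):
        label = bin_label(age)
        if label is None:
            continue
        totals[label] = totals.get(label, 0) + t
        counts[label] = counts.get(label, 0) + 1
    return {label: totals[label] / counts[label] for label in sorted(counts)}

# Hypothetical ages (years) and targets (1 = did not repay)
ages = [21.5, 24.0, 25.0, 26.3, 44.9, 47.0, 68.2]
targets = [1, 0, 1, 0, 0, 0, 0]
rates = failure_rate_by_bin(ages, targets)
print(rates)  # the (20, 25] bin has a rate of 2/3; the other bins have 0.0
```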

| { | |

| "metadata": { | |

| "_cell_guid": "4296e926-7245-40df-bb0a-f6e59d8e566a", | |

| "_uuid": "6c50572f095bff250bfed1993e2c53118277b5dd", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Age information into a separate dataframe (copy to avoid modifying a view of app_train)\nage_data = app_train[['TARGET', 'DAYS_BIRTH']].copy()\nage_data['YEARS_BIRTH'] = age_data['DAYS_BIRTH'] / 365\n\n# Bin the age data into 10 bins of 5 years each between 20 and 70\nage_data['YEARS_BINNED'] = pd.cut(age_data['YEARS_BIRTH'], bins = np.linspace(20, 70, num = 11))\nage_data.head(10)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "18873d6b-3877-4c77-830e-0f3e10e5e7fb", | |

| "_uuid": "7082483e5fd9114856926de28968e5ae0b478b36", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Group by the bin and calculate averages\nage_groups = age_data.groupby('YEARS_BINNED').mean()\nage_groups", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "004d1021-d73f-4356-9ef8-0464c95d1708", | |

| "_uuid": "823b5032f472b05ce079ae5a7680389f31ddd8b7", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "plt.figure(figsize = (8, 8))\n\n# Graph the age bins and the average of the target as a bar plot\nplt.bar(age_groups.index.astype(str), 100 * age_groups['TARGET'])\n\n# Plot labeling\nplt.xticks(rotation = 75); plt.xlabel('Age Group (years)'); plt.ylabel('Failure to Repay (%)')\nplt.title('Failure to Repay by Age Group');", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "2dad060f-bcab-4fe3-aa19-29fbf3e6fdab", | |

| "_uuid": "eb2bd6392ed6d6f7e002bc8dbea6aab0f30487d9" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "There is a clear trend: younger applicants are more likely to not repay the loan! The rate of failure to repay is above 10% for the youngest three age groups and below 5% for the oldest age group.\n\nThis is information that could be directly used by the bank: because younger clients are less likely to repay the loan, maybe they should be provided with more guidance or financial planning tips. This does not mean the bank should discriminate against younger clients, but it would be smart to take precautionary measures to help younger clients pay on time." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "4749204f-ec63-4eeb-8d25-9c80967348f1", | |

| "_uuid": "43a3bb87bdaa65509e9dc887492239ae06cd1c77" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "### External Sources\n\nThe 3 variables with the strongest negative correlations with the target are `EXT_SOURCE_1`, `EXT_SOURCE_2`, and `EXT_SOURCE_3`.\nAccording to the documentation, these features represent a \"normalized score from external data source\". I'm not sure exactly what this means, but it may be a cumulative sort of credit rating made using numerous sources of data. \n\nLet's take a look at these variables.\n\nFirst, we can show the correlations of the `EXT_SOURCE` features with the target and with each other." | |

| }, | |
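One detail is worth knowing before reading the correlation table: the `EXT_SOURCE` columns contain missing values, and pandas `DataFrame.corr` computes each pair's coefficient using only the rows where both values are present. Here is a minimal plain-Python sketch of that pairwise-complete behavior, on hypothetical data with made-up helper names (`pearson_pairwise`, `corr_matrix`):

```python
import math

def pearson_pairwise(x, y):
    """Pearson r using only positions where both values are present (not NaN)."""
    pairs = [(a, b) for a, b in zip(x, y) if not (math.isnan(a) or math.isnan(b))]
    n = len(pairs)
    mx = sum(a for a, _ in pairs) / n
    my = sum(b for _, b in pairs) / n
    cov = sum((a - mx) * (b - my) for a, b in pairs)
    var_x = sum((a - mx) ** 2 for a, _ in pairs)
    var_y = sum((b - my) ** 2 for _, b in pairs)
    return cov / math.sqrt(var_x * var_y)

def corr_matrix(columns):
    """Correlation for every ordered pair of column names."""
    names = list(columns)
    return {(a, b): pearson_pairwise(columns[a], columns[b]) for a in names for b in names}

nan = math.nan
# Hypothetical data: EXT_SOURCE_1 is missing for two of the five applicants
data = {
    "TARGET": [0.0, 1.0, 0.0, 1.0, 0.0],
    "EXT_SOURCE_1": [0.7, nan, 0.6, 0.2, nan],
    "EXT_SOURCE_3": [0.8, 0.3, 0.5, 0.4, 0.6],
}
m = corr_matrix(data)
print(round(m[("TARGET", "EXT_SOURCE_1")], 3))  # uses only the 3 complete rows
```

The matrix is symmetric with ones on the diagonal, which is why the heatmap below is mirrored across its diagonal.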

| { | |

| "metadata": { | |

| "_cell_guid": "e2ab3b7f-3a53-4495-a1de-31ad287f032a", | |

| "_uuid": "6197819149feaff75176e64e54c65ea6be3864fe", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Extract the EXT_SOURCE variables and show correlations\next_data = app_train[['TARGET', 'EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH']]\next_data_corrs = ext_data.corr()\next_data_corrs", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "0479863d-cfa9-47ab-83e6-7d7877e3e939", | |

| "_uuid": "20b21a6b4e15a726c29596abeb01346dc416729c", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "plt.figure(figsize = (8, 6))\n\n# Heatmap of correlations\nsns.heatmap(ext_data_corrs, cmap = plt.cm.RdYlBu_r, vmin = -0.25, annot = True, vmax = 0.6)\nplt.title('Correlation Heatmap');", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "78bd5acc-003d-4795-a57a-a6c4fc9c8c5f", | |

| "_uuid": "6a592aa7c01858b268489ccb8fd00690cd26cd58" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "All three `EXT_SOURCE` features have negative correlations with the target, indicating that as the value of the `EXT_SOURCE` increases, the client is more likely to repay the loan. We can also see that `DAYS_BIRTH` is positively correlated with `EXT_SOURCE_1`, indicating that maybe one of the factors in this score is the client age.\n\nNext we can look at the distribution of each of these features colored by the value of the target. This will let us visualize the effect of each variable on the target." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "5e2b6507-96d1-4f96-964f-d8241e321f09", | |

| "_uuid": "49afab6b3790abcc2dea04c483f462f39e536503", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "plt.figure(figsize = (10, 12))\n\n# iterate through the sources\nfor i, source in enumerate(['EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3']):\n \n # create a new subplot for each source\n plt.subplot(3, 1, i + 1)\n # plot repaid loans\n sns.kdeplot(app_train.loc[app_train['TARGET'] == 0, source], label = 'target == 0')\n # plot loans that were not repaid\n sns.kdeplot(app_train.loc[app_train['TARGET'] == 1, source], label = 'target == 1')\n \n # Label the plots and show the legend\n plt.title('Distribution of %s by Target Value' % source)\n plt.xlabel('%s' % source); plt.ylabel('Density'); plt.legend();\n \nplt.tight_layout(h_pad = 2.5)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "0ee531e8-f131-4ae3-b542-d4bf550d9bd5", | |

| "_uuid": "71ce5855665256dacfd7c52bceb11c68f5c58759" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "`EXT_SOURCE_3` displays the greatest difference between the values of the target. We can clearly see that this feature has some relationship to the likelihood of an applicant repaying a loan. The relationship is not very strong (in fact, the correlations are all [considered very weak](http://www.statstutor.ac.uk/resources/uploaded/pearsons.pdf)), but these variables should still be useful for a machine learning model to predict whether or not an applicant will repay a loan on time." | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "53f486249f8afec0496d3de25120e57d956c2eb7" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Pairs Plot\n\nAs a final exploratory plot, we can make a pairs plot of the `EXT_SOURCE` variables and the `DAYS_BIRTH` variable. The [Pairs Plot](https://towardsdatascience.com/visualizing-data-with-pair-plots-in-python-f228cf529166) is a great exploration tool because it lets us see relationships between multiple pairs of variables as well as distributions of single variables. Here we are using the seaborn visualization library and the PairGrid function to create a Pairs Plot with scatterplots on the upper triangle, kernel density estimate plots on the diagonal, and 2D kernel density plots on the lower triangle.\n\nIf you don't understand this code, that's all right! Plotting in Python can be overly complex, and for anything beyond the simplest graphs, I usually find an existing implementation and adapt the code (don't repeat yourself)! " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "7b185a4e-ac04-4ff2-b5cb-46eacf6a70b6", | |

| "_uuid": "9400f9d2810f4331005c9b91e040818279d1eaf8", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Copy the data for plotting\nplot_data = ext_data.drop(columns = ['DAYS_BIRTH']).copy()\n\n# Add in the age of the client in years\nplot_data['YEARS_BIRTH'] = age_data['YEARS_BIRTH']\n\n# Drop na values and keep only rows with index labels up to 100000 (.loc slices by label)\nplot_data = plot_data.dropna().loc[:100000, :]\n\n# Function to calculate correlation coefficient between two columns\n# (defined here, but not mapped onto the grid below)\ndef corr_func(x, y, **kwargs):\n r = np.corrcoef(x, y)[0][1]\n ax = plt.gca()\n ax.annotate(\"r = {:.2f}\".format(r),\n xy=(.2, .8), xycoords=ax.transAxes,\n size = 20)\n\n# Create the pairgrid object (height was called size before seaborn 0.9)\ngrid = sns.PairGrid(data = plot_data, height = 3, diag_sharey=False,\n hue = 'TARGET', \n vars = [x for x in list(plot_data.columns) if x != 'TARGET'])\n\n# Upper is a scatter plot\ngrid.map_upper(plt.scatter, alpha = 0.2)\n\n# Diagonal is a kernel density estimate\ngrid.map_diag(sns.kdeplot)\n\n# Bottom is a 2D density plot\ngrid.map_lower(sns.kdeplot, cmap = plt.cm.OrRd_r);\n\nplt.suptitle('Ext Source and Age Features Pairs Plot', size = 32, y = 1.05);", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "839f51f5-02f4-472d-9de4-aa2f760c171c", | |

| "_uuid": "88f9f486c74856bd87ff7699998088d9ee7fd926" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "In this plot, the red indicates loans that were not repaid and the blue are loans that were repaid. We can see the different relationships within the data. There does appear to be a moderate positive linear relationship between `EXT_SOURCE_1` and `DAYS_BIRTH` (or equivalently `YEARS_BIRTH`), indicating that this feature may take into account the age of the client. " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "bd49d18b-e35f-4122-a005-dd06d8f2f7ca", | |

| "_uuid": "d5506d0483af10dbf71e8ed11c99b2d5253680fb" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "# Feature Engineering\n\nKaggle competitions are won by feature engineering: the winners are those who can create the most useful features out of the data. (This is true for the most part, as the winning models, at least for structured data, all tend to be variants on [gradient boosting](http://blog.kaggle.com/2017/01/23/a-kaggle-master-explains-gradient-boosting/).) This represents one of the patterns in machine learning: feature engineering has a greater return on investment than model building and hyperparameter tuning. [This is a great article on the subject](https://www.featurelabs.com/blog/secret-to-data-science-success/). As Andrew Ng is fond of saying: \"applied machine learning is basically feature engineering.\" \n\nWhile choosing the right model and optimal settings is important, the model can only learn from the data it is given. Making sure this data is as relevant to the task as possible is the job of the data scientist (and maybe some [automated tools](https://docs.featuretools.com/getting_started/install.html) to help us out).\n\nFeature engineering refers to a general process and can involve both feature construction (adding new features from the existing data) and feature selection (choosing only the most important features, or other methods of dimensionality reduction). There are many techniques we can use to both create features and select features.\n\nWe will do a lot of feature engineering when we start using the other data sources, but in this notebook we will try only two simple feature construction methods: \n\n* Polynomial features\n* Domain knowledge features\n" | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "464705a1-7ecf-47ba-a1fe-9f870102eb85", | |

| "_uuid": "70322dd11709dcaaf879a56103fde8fc787b7d4c" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Polynomial Features\n\nOne simple feature construction method is called [polynomial features](http://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.PolynomialFeatures.html). In this method, we make features that are powers of existing features as well as interaction terms between existing features. For example, we can create variables `EXT_SOURCE_1^2` and `EXT_SOURCE_2^2` and also variables such as `EXT_SOURCE_1` x `EXT_SOURCE_2`, `EXT_SOURCE_1` x `EXT_SOURCE_2^2`, `EXT_SOURCE_1^2` x `EXT_SOURCE_2^2`, and so on. These features that are a combination of multiple individual variables are called [interaction terms](https://en.wikipedia.org/wiki/Interaction_(statistics)) because they capture the interactions between variables. In other words, while two variables by themselves may not have a strong influence on the target, combining them together into a single interaction variable might show a relationship with the target. [Interaction terms are commonly used in statistical models](https://www.theanalysisfactor.com/interpreting-interactions-in-regression/) to capture the effects of multiple variables, but I do not see them used as often in machine learning. Nonetheless, we can try out a few to see if they might help our model to predict whether or not a client will repay a loan. \n\nJake VanderPlas writes about [polynomial features in his excellent book, the Python Data Science Handbook](https://jakevdp.github.io/PythonDataScienceHandbook/05.04-feature-engineering.html), for those who want more information.\n\nIn the following code, we create polynomial features using the `EXT_SOURCE` variables and the `DAYS_BIRTH` variable. [Scikit-Learn has a useful class called `PolynomialFeatures`](http://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.PolynomialFeatures.html) that creates the polynomials and the interaction terms up to a specified degree. We can use a degree of 3 to see the results. When creating polynomial features, we want to avoid using too high of a degree, both because the number of features grows very quickly with the degree and because we can run into [problems with overfitting](http://scikit-learn.org/stable/auto_examples/model_selection/plot_underfitting_overfitting.html#sphx-glr-auto-examples-model-selection-plot-underfitting-overfitting-py). " | |

| }, | |
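To see where the feature count comes from, the sketch below enumerates every monomial of total degree at most 3 over the four input columns, using `itertools.combinations_with_replacement`. It yields the same set of terms that `PolynomialFeatures(degree = 3)` produces (including the constant `1` column) and uses a similar `name^power` naming style, but it is an illustrative re-implementation, not scikit-learn's code, and the helper name `polynomial_terms` is made up. The count matches the binomial coefficient C(n + d, d) for n inputs and degree d, which grows quickly as the degree increases.

```python
from itertools import combinations_with_replacement
from math import comb

def polynomial_terms(names, degree):
    """List the monomials of total degree <= `degree`, named like 'a b^2'."""
    terms = ["1"]  # the constant (bias) term
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(names, d):
            # Collapse repeated factors into powers, keeping the input order
            parts = []
            for name in names:
                power = combo.count(name)
                if power == 1:
                    parts.append(name)
                elif power > 1:
                    parts.append(f"{name}^{power}")
            terms.append(" ".join(parts))
    return terms

features = ["EXT_SOURCE_1", "EXT_SOURCE_2", "EXT_SOURCE_3", "DAYS_BIRTH"]
terms = polynomial_terms(features, degree=3)
print(len(terms), comb(len(features) + 3, 3))  # 35 35
print(terms[:8])
```

Going from degree 3 to degree 5 with the same four inputs would already give C(9, 5) = 126 columns.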

| { | |

| "metadata": { | |

| "_cell_guid": "e5b0efd9-67ac-4aa0-91e9-2141a87a6a8a", | |

| "_uuid": "a63d53dcac14c4ac2e31ea9c5e16b5d161c2415b", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Make a new dataframe for polynomial features\npoly_features = app_train[['EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH', 'TARGET']]\npoly_features_test = app_test[['EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH']]\n\n# imputer for handling missing values (SimpleImputer replaced the old Imputer class, which was removed in scikit-learn 0.22)\nfrom sklearn.impute import SimpleImputer\nimputer = SimpleImputer(strategy = 'median')\n\npoly_target = poly_features['TARGET']\n\npoly_features = poly_features.drop(columns = ['TARGET'])\n\n# Need to impute missing values (the medians are learned from the training data only)\npoly_features = imputer.fit_transform(poly_features)\npoly_features_test = imputer.transform(poly_features_test)\n\nfrom sklearn.preprocessing import PolynomialFeatures\n \n# Create the polynomial object with specified degree\npoly_transformer = PolynomialFeatures(degree = 3)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "2be7c1ab-d1e5-40f2-b8e7-e2b2ce1e2f9a", | |

| "_uuid": "72c5ecaae9c6ff038d16cbd9208f1abb69912631", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Train the polynomial features\npoly_transformer.fit(poly_features)\n\n# Transform the features\npoly_features = poly_transformer.transform(poly_features)\npoly_features_test = poly_transformer.transform(poly_features_test)\nprint('Polynomial Features shape: ', poly_features.shape)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "a7833b1e-714c-4988-8cbf-757d01290d8f", | |

| "_uuid": "4d837e47bada5411ffce06266605f043c6ffe19e" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "This creates a considerable number of new features. To get the names, we have to use the `PolynomialFeatures` `get_feature_names_out` method (called `get_feature_names` in scikit-learn versions before 1.0)." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "7465d1e6-d360-4029-afa7-67cb34f60249", | |

| "_uuid": "121f98d2ec9c81c5dabb911dc68562d0b2b6d737", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "poly_transformer.get_feature_names_out(input_features = ['EXT_SOURCE_1', 'EXT_SOURCE_2', 'EXT_SOURCE_3', 'DAYS_BIRTH'])[:15]", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "7eaeb645-bb25-4ac5-884f-d1231ab6d88f", | |

| "_uuid": "4e68b80b2738ef46863b53b7b781f299d602d316" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "There are 35 features in total: the constant (bias) term, the individual features raised to powers up to degree 3, and the interaction terms. Now, we can see whether any of these new features are correlated with the target." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "95725a63-f8f2-4680-8f7a-4252f04e7f7f", | |

| "_uuid": "e712923de757457bb87a35ecaccd27007b351e6c", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Create a dataframe of the features \npoly_features = pd.DataFrame(poly_features, \n columns = poly_transformer.get_feature_names_out(['EXT_SOURCE_1', 'EXT_SOURCE_2', \n 'EXT_SOURCE_3', 'DAYS_BIRTH']))\n\n# Add in the target\npoly_features['TARGET'] = poly_target\n\n# Find the correlations with the target\npoly_corrs = poly_features.corr()['TARGET'].sort_values()\n\n# Display most negative and most positive\nprint(poly_corrs.head(10))\nprint(poly_corrs.tail(5))", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "971de432-c65e-4c9a-a3c0-c923ed27ddcb", | |

| "_uuid": "082ac97a068afed6758ed191acf5ab485e39230c" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Several of the new variables have a greater (in terms of absolute magnitude) correlation with the target than the original features. When we build machine learning models, we can try with and without these features to determine if they actually help the model learn. \n\nWe will add these features to a copy of the training and testing data and then evaluate models with and without the features. Many times in machine learning, the only way to know if an approach will work is to try it out! " | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "ed758ed436a86f92a8ee574999aa91089242ca7a", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Put test features into dataframe\npoly_features_test = pd.DataFrame(poly_features_test, \n columns = poly_transformer.get_feature_names_out(['EXT_SOURCE_1', 'EXT_SOURCE_2', \n 'EXT_SOURCE_3', 'DAYS_BIRTH']))\n\n# Merge polynomial features into training dataframe\npoly_features['SK_ID_CURR'] = app_train['SK_ID_CURR']\napp_train_poly = app_train.merge(poly_features, on = 'SK_ID_CURR', how = 'left')\n\n# Merge polynomial features into testing dataframe\npoly_features_test['SK_ID_CURR'] = app_test['SK_ID_CURR']\napp_test_poly = app_test.merge(poly_features_test, on = 'SK_ID_CURR', how = 'left')\n\n# Align the dataframes\napp_train_poly, app_test_poly = app_train_poly.align(app_test_poly, join = 'inner', axis = 1)\n\n# Print out the new shapes\nprint('Training data with polynomial features shape: ', app_train_poly.shape)\nprint('Testing data with polynomial features shape: ', app_test_poly.shape)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "9b27fad1522263c32b57a8127c84ad0e08ff9d8f" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Domain Knowledge Features\n\nMaybe it's not entirely correct to call this \"domain knowledge\" because I'm not a credit expert, but perhaps we could call this \"attempts at applying limited financial knowledge\". In this frame of mind, we can make a few features that attempt to capture what we think may be important for telling whether a client will default on a loan. Here I'm going to use four features that were inspired by [this script](https://www.kaggle.com/jsaguiar/updated-0-792-lb-lightgbm-with-simple-features) by Aguiar:\n\n* `CREDIT_INCOME_PERCENT`: the ratio of the credit amount to a client's income\n* `ANNUITY_INCOME_PERCENT`: the ratio of the loan annuity to a client's income\n* `CREDIT_TERM`: the ratio of the loan annuity to the credit amount; since the annuity is the monthly amount due, this is the inverse of the length of the payment in months\n* `DAYS_EMPLOYED_PERCENT`: the ratio of the days employed to the client's age in days\n\nAgain, thanks to Aguiar and [his great script](https://www.kaggle.com/jsaguiar/updated-0-792-lb-lightgbm-with-simple-features) for exploring these features.\n\n" | |

| }, | |
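The four ratios can be computed for a single hypothetical applicant to get a feel for their scale. The `Application` dataclass, its field names, and the numbers are made up for illustration. `IMPLIED_TERM_MONTHS` is not one of the notebook's features; it is just the reciprocal of `CREDIT_TERM`, included to show how that ratio relates to the number of monthly payments (assuming, as the text does, that the annuity is paid monthly).

```python
from dataclasses import dataclass

@dataclass
class Application:
    """Hypothetical single loan application (field names are illustrative)."""
    amt_credit: float        # AMT_CREDIT
    amt_income_total: float  # AMT_INCOME_TOTAL
    amt_annuity: float       # AMT_ANNUITY
    days_employed: float     # DAYS_EMPLOYED (negative in the raw data)
    days_birth: float        # DAYS_BIRTH after taking the absolute value (positive)

def domain_features(app):
    """The four ratios from the list above, plus a hypothetical implied term."""
    credit_term = app.amt_annuity / app.amt_credit
    return {
        "CREDIT_INCOME_PERCENT": app.amt_credit / app.amt_income_total,
        "ANNUITY_INCOME_PERCENT": app.amt_annuity / app.amt_income_total,
        "CREDIT_TERM": credit_term,
        "DAYS_EMPLOYED_PERCENT": app.days_employed / app.days_birth,
        # Hypothetical extra: reciprocal of CREDIT_TERM = number of monthly payments
        "IMPLIED_TERM_MONTHS": 1 / credit_term,
    }

applicant = Application(amt_credit=500000, amt_income_total=200000,
                        amt_annuity=25000, days_employed=-3650, days_birth=14600)
for name, value in domain_features(applicant).items():
    print(f"{name}: {value:.3f}")
```

Here a 500,000 loan repaid at 25,000 per month gives `CREDIT_TERM` = 0.05, i.e. about 20 monthly payments.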

| { | |

| "metadata": { | |

| "_uuid": "c8d4b165b45da6c3120911de18e9348d8726c70c", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_train_domain = app_train.copy()\napp_test_domain = app_test.copy()\n\napp_train_domain['CREDIT_INCOME_PERCENT'] = app_train_domain['AMT_CREDIT'] / app_train_domain['AMT_INCOME_TOTAL']\napp_train_domain['ANNUITY_INCOME_PERCENT'] = app_train_domain['AMT_ANNUITY'] / app_train_domain['AMT_INCOME_TOTAL']\napp_train_domain['CREDIT_TERM'] = app_train_domain['AMT_ANNUITY'] / app_train_domain['AMT_CREDIT']\napp_train_domain['DAYS_EMPLOYED_PERCENT'] = app_train_domain['DAYS_EMPLOYED'] / app_train_domain['DAYS_BIRTH']", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "d017103871bd4935a8c29599d6be33e0e74b2f83", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "app_test_domain['CREDIT_INCOME_PERCENT'] = app_test_domain['AMT_CREDIT'] / app_test_domain['AMT_INCOME_TOTAL']\napp_test_domain['ANNUITY_INCOME_PERCENT'] = app_test_domain['AMT_ANNUITY'] / app_test_domain['AMT_INCOME_TOTAL']\napp_test_domain['CREDIT_TERM'] = app_test_domain['AMT_ANNUITY'] / app_test_domain['AMT_CREDIT']\napp_test_domain['DAYS_EMPLOYED_PERCENT'] = app_test_domain['DAYS_EMPLOYED'] / app_test_domain['DAYS_BIRTH']", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "7e917d654c05bd0ca3251d4f51c8176d82fe613f" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "#### Visualize New Variables\n\nWe should explore these __domain knowledge__ variables visually in a graph. For all of these, we will make the same KDE plot colored by the value of the `TARGET`." | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "e9c10d7f55b4c636335f815762b93598fe4acb0a", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "plt.figure(figsize = (12, 20))\n# iterate through the new features\nfor i, feature in enumerate(['CREDIT_INCOME_PERCENT', 'ANNUITY_INCOME_PERCENT', 'CREDIT_TERM', 'DAYS_EMPLOYED_PERCENT']):\n \n # create a new subplot for each feature\n plt.subplot(4, 1, i + 1)\n # plot repaid loans\n sns.kdeplot(app_train_domain.loc[app_train_domain['TARGET'] == 0, feature], label = 'target == 0')\n # plot loans that were not repaid\n sns.kdeplot(app_train_domain.loc[app_train_domain['TARGET'] == 1, feature], label = 'target == 1')\n \n # Label the plots and show the legend\n plt.title('Distribution of %s by Target Value' % feature)\n plt.xlabel('%s' % feature); plt.ylabel('Density'); plt.legend();\n \nplt.tight_layout(h_pad = 2.5)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "e27d400f3ec5447cfe5e908952351f271d521784" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "It's hard to say ahead of time if these new features will be useful. The only way to tell for sure is to try them out! " | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "ebb64e63-6222-4509-a43c-302c6435ce09", | |

| "_uuid": "8bf057e523b2d99833f6dc9d95fe6141fb4e325a" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "# Baseline\n\nFor a naive baseline, we could guess the same value for all examples on the testing set. We are asked to predict the probability of not repaying the loan, so if we are entirely unsure, we would guess 0.5 for all observations on the test set. This will get us a Receiver Operating Characteristic Area Under the Curve (ROC AUC) of 0.5 in the competition (a constant prediction scores 0.5, just like [random guessing on a classification task](https://stats.stackexchange.com/questions/266387/can-auc-roc-be-between-0-0-5)).\n\nSince we already know what score we are going to get, we don't really need to make a naive baseline guess. Let's use a slightly more sophisticated model for our actual baseline: Logistic Regression.\n\n## Logistic Regression Implementation\n\nHere I will focus on implementing the model rather than explaining the details, but for those who want to learn more about the theory of machine learning algorithms, I recommend both [An Introduction to Statistical Learning](http://www-bcf.usc.edu/~gareth/ISL/) and [Hands-On Machine Learning with Scikit-Learn and TensorFlow](http://shop.oreilly.com/product/0636920052289.do). Both of these books present the theory and also the code needed to make the models (in R and Python respectively). They both teach with the mindset that the best way to learn is by doing, and they are very effective! \n\nTo get a baseline, we will use all of the features after encoding the categorical variables. We will preprocess the data by filling in the missing values (imputation) and normalizing the range of the features (feature scaling). The following code performs both of these preprocessing steps." | |

| }, | |
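To see why a constant guess scores exactly 0.5, here is a minimal sketch on a handful of made-up labels (the array `y_true` is hypothetical, not competition data), using Scikit-Learn's `roc_auc_score`:

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Hypothetical labels: 1 = loan not repaid, 0 = loan repaid
y_true = np.array([0, 0, 1, 0, 1, 0, 0, 1])

# Naive baseline: the same probability (0.5) for every applicant
naive_pred = np.full(len(y_true), 0.5)

# Every positive/negative pair is tied, and ties count as half a correct
# ordering, so the area under the ROC curve is exactly 0.5
auc = roc_auc_score(y_true, naive_pred)
print(auc)
```

Any constant value, not only 0.5, gives the same score, because ROC AUC depends only on how the predictions rank the examples.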

| { | |

| "metadata": { | |

| "_cell_guid": "60ef8744-ca3a-4810-8439-2835fbfc1833", | |

| "_uuid": "784ae2f91cf7792702595a9973ba773b2acdec00", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# SimpleImputer replaces the Imputer class that was removed from sklearn.preprocessing\nfrom sklearn.impute import SimpleImputer\nfrom sklearn.preprocessing import MinMaxScaler\n\n# Drop the target from the training data\nif 'TARGET' in app_train:\n    train = app_train.drop(columns = ['TARGET'])\nelse:\n    train = app_train.copy()\n    \n# Feature names\nfeatures = list(train.columns)\n\n# Copy of the testing data\ntest = app_test.copy()\n\n# Median imputation of missing values\nimputer = SimpleImputer(strategy = 'median')\n\n# Scale each feature to 0-1\nscaler = MinMaxScaler(feature_range = (0, 1))\n\n# Fit on the training data only\nimputer.fit(train)\n\n# Transform both training and testing data with the training medians\ntrain = imputer.transform(train)\ntest = imputer.transform(test)\n\n# Repeat with the scaler\nscaler.fit(train)\ntrain = scaler.transform(train)\ntest = scaler.transform(test)\n\nprint('Training data shape: ', train.shape)\nprint('Testing data shape: ', test.shape)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |
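The preprocessing cell fits the imputer and the scaler on the training data only and then applies the same learned statistics to the test data. A minimal sketch on two tiny made-up matrices (`train_toy` and `test_toy` are hypothetical) shows what gets learned, and one consequence: a test value outside the training range is scaled outside 0-1. It uses `SimpleImputer` from `sklearn.impute`, the current replacement for `Imputer`.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import MinMaxScaler

# Hypothetical training and testing matrices; np.nan marks a missing entry
train_toy = np.array([[1.0, 10.0],
                      [2.0, np.nan],
                      [3.0, 30.0]])
test_toy = np.array([[np.nan, 40.0]])

# Learn the per-column medians from the training data only
imputer = SimpleImputer(strategy='median')
train_imp = imputer.fit_transform(train_toy)
test_imp = imputer.transform(test_toy)      # the missing test value becomes the training median

# Learn the per-column min and max from the training data only
scaler = MinMaxScaler(feature_range=(0, 1))
train_scaled = scaler.fit_transform(train_imp)
test_scaled = scaler.transform(test_imp)

print(imputer.statistics_)   # training medians: 2.0 and 20.0
print(test_scaled)           # 40.0 exceeds the training max of 30.0, so it maps to 1.5
```

Fitting on the training set alone keeps information from the test set out of the preprocessing, at the cost of occasional out-of-range scaled values like the 1.5 above.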

| { | |

| "metadata": { | |

| "_cell_guid": "1bcfab25-cc1c-4553-9473-96fcfeb2a61a", | |

| "_uuid": "364f0835a46f7a7bb7be487b54d92f5ff50ed341" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "We will use [`LogisticRegression` from Scikit-Learn](http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) for our first model. The only change we will make from the default model settings is to lower the [regularization parameter](http://scikit-learn.org/stable/modules/linear_model.html#logistic-regression), C, which controls the amount of regularization. In Scikit-Learn, C is the inverse of the regularization strength, so a lower value means stronger regularization and should decrease overfitting. This should get us slightly better results than the default `LogisticRegression`, but it will still set a low bar for any future models.\n\nHere we use the familiar Scikit-Learn modeling syntax: we first create the model, then we train the model using `.fit`, and then we make predictions on the testing data using `.predict_proba` (remember that we want probabilities and not a 0 or 1)." | |

| }, | |
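Because C is the inverse of the regularization strength in Scikit-Learn, shrinking it pulls the coefficients toward zero. A rough sketch on synthetic data from `make_classification` (not the competition data) compares the size of the fitted coefficient vector for a few values of C:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary classification data (a stand-in for the real features)
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

norms = {}
for C in [1.0, 0.01, 0.0001]:
    model = LogisticRegression(C=C, max_iter=1000)
    model.fit(X, y)
    # L2 norm of the learned weights: smaller means a more constrained model
    norms[C] = np.linalg.norm(model.coef_)
    print(f"C = {C:<8} ||coef|| = {norms[C]:.4f}")
```

With C = 0.0001 the weights are squeezed close to zero, which is why this baseline is deliberately conservative.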

| { | |

| "metadata": { | |

| "_cell_guid": "6462ff85-e3b6-4a5f-b95c-9416841413b1", | |

| "_uuid": "9e8aba9401e8367f9902d710ba49e820294870e1", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "from sklearn.linear_model import LogisticRegression\n\n# Make the model with the specified regularization parameter\nlog_reg = LogisticRegression(C = 0.0001)\n\n# Train on the training data\nlog_reg.fit(train, train_labels)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "fe98191a-da57-4d50-8d56-7d8077fc6c26", | |

| "_uuid": "0ad71fb750fac4af2845f30b0af73f5817e46101" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "Now that the model has been trained, we can use it to make predictions. We want to predict the probability of not repaying a loan, so we use the model's `predict_proba` method. This returns an m x 2 array, where m is the number of observations. The columns follow the order of `log_reg.classes_`: the first column is the probability of the target being 0 and the second column is the probability of the target being 1 (so for a single row, the two columns sum to 1). We want the probability that the loan is not repaid, so we will select the second column.\n\nThe following code makes the predictions and selects the correct column." | |

| }, | |
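A small sketch on synthetic data (hypothetical, from `make_classification`) confirms the layout of `predict_proba` and looks up the positive-class column from `classes_` instead of hard-coding it:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the training data
X, y = make_classification(n_samples=200, n_features=5, random_state=1)
model = LogisticRegression(max_iter=1000).fit(X, y)

proba = model.predict_proba(X[:3])
print(model.classes_)      # column order: [0 1]
print(proba.shape)         # (3, 2)
print(proba.sum(axis=1))   # each row sums to 1

# Select the probability of class 1 by looking up its column index
positive_col = list(model.classes_).index(1)
p_default = proba[:, positive_col]
```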

| { | |

| "metadata": { | |

| "_cell_guid": "80c77c89-3fa9-4311-b441-412a4fbb1480", | |

| "_uuid": "2138782ddbfc9a803dc99a938460fc27d15972a9", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Make predictions\n# Make sure to select the second column only\nlog_reg_pred = log_reg.predict_proba(test)[:, 1]", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "3a2612e95b13a94a13f679c1754b6c4fb28c332d" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The predictions must be in the format shown in the `sample_submission.csv` file, which has only two columns: `SK_ID_CURR` and `TARGET`. We will create a dataframe called `submit` in this format from the test set IDs and the predictions. " | |

| }, | |
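As an alternative to copying a column out of `app_test`, the submission can be built directly with the `pd.DataFrame` constructor and sanity-checked before saving. This sketch uses hypothetical IDs and probabilities (`test_ids` and `pred_toy` are placeholders, not real competition values):

```python
import numpy as np
import pandas as pd

# Hypothetical test-set IDs and predicted probabilities of not repaying
test_ids = np.array([1, 2, 3])
pred_toy = np.array([0.07, 0.21, 0.03])

# Build the two-column submission frame in the required column order
submit_toy = pd.DataFrame({'SK_ID_CURR': test_ids, 'TARGET': pred_toy})

# Sanity checks before writing to disk
assert list(submit_toy.columns) == ['SK_ID_CURR', 'TARGET']
assert submit_toy['TARGET'].between(0, 1).all()
assert not submit_toy.isnull().values.any()

# With no path, to_csv returns the CSV text, which is handy for a quick look
csv_text = submit_toy.to_csv(index=False)
print(csv_text)
```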

| { | |

| "metadata": { | |

| "_uuid": "09a3d281e4c7ee6820f402e32f31775851113089", | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

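| "source": "# Submission dataframe\n# .copy() makes an independent frame, so adding a column does not trigger a SettingWithCopyWarning\nsubmit = app_test[['SK_ID_CURR']].copy()\nsubmit['TARGET'] = log_reg_pred\n\nsubmit.head()", | |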

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "2a1bf4f54df8b37a71a7732e61a7bfebafd8be11" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The predictions represent a probability between 0 and 1 that the loan will not be repaid. If we were using these predictions to classify applicants, we could set a probability threshold for determining that a loan is risky. " | |

| }, | |
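As a minimal sketch, assuming a hypothetical cutoff of 0.1 (the notebook does not choose one) and made-up probabilities, turning the predictions into risky/not-risky labels is a single comparison:

```python
import numpy as np

# Hypothetical predicted probabilities that each loan will not be repaid
pred = np.array([0.03, 0.12, 0.45, 0.08, 0.61])

# Hypothetical cutoff; in practice it would come from the relative cost of
# approving a loan that defaults versus rejecting one that would be repaid
threshold = 0.1

# 1 = flagged as risky, 0 = not flagged
risky = (pred >= threshold).astype(int)
print(risky)   # [0 1 1 0 1]
```

The threshold does not affect the competition score, since ROC AUC is computed from the probabilities themselves.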

| { | |

| "metadata": { | |

| "_cell_guid": "77204f15-c3b9-4c67-8d93-173fa3afceaa", | |

| "_uuid": "fcaf338e52d8f42f119b31d437b516e336e787ec", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Save the submission to a csv file\nsubmit.to_csv('log_reg_baseline.csv', index = False)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "11e18bd5c4e75b931f90a22bc6ff84441a13570c" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "The submission has now been saved to the virtual environment in which our notebook is running. To access the submission, at the end of the notebook, we will hit the blue Commit & Run button at the upper right of the kernel. This runs the entire notebook and then lets us download any files that are created during the run. \n\nOnce we run the notebook, the files created are available in the Versions tab under the Output sub-tab. From here, the submission files can be submitted to the competition or downloaded. Since there are several models in this notebook, there will be multiple output files. \n\n__The logistic regression baseline should score around 0.671 when submitted.__" | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "462ea34f-3f66-490a-a61f-24a991271f69", | |

| "_uuid": "92687ac866441f6ee2919aa5e5c935490c172afc" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "## Improved Model: Random Forest\n\nTo try to beat the poor performance of our baseline, we can change the algorithm. Let's try a Random Forest on the same training data to see how that affects performance. The Random Forest is generally a much more powerful model than logistic regression, especially when we use hundreds of trees. We will use 100 trees in the random forest." | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "6643479e-7980-431c-a6a2-9087acdb0f42", | |

| "_uuid": "cf05e2318904b8f3575ae233c185cd995fd07643", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "from sklearn.ensemble import RandomForestClassifier\n\n# Make the random forest classifier\nrandom_forest = RandomForestClassifier(n_estimators = 100, random_state = 50, verbose = 1, n_jobs = -1)", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_cell_guid": "020f0856-8f24-4b22-bca5-aac7f137f032", | |

| "_uuid": "52258a9b89b3069bc1d82829107e8e7c1ef05fd6", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

| "source": "# Train on the training data\nrandom_forest.fit(train, train_labels)\n\n# Extract feature importances\nfeature_importance_values = random_forest.feature_importances_\nfeature_importances = pd.DataFrame({'feature': features, 'importance': feature_importance_values})\n\n# Make predictions on the test data\npredictions = random_forest.predict_proba(test)[:, 1]", | |

| "execution_count": null, | |

| "outputs": [] | |

| }, | |
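The training cell stores the forest's importances in a `feature_importances` dataframe. A sketch on synthetic data (the `feature_i` names are hypothetical) shows how to sort that kind of table to see which features the forest relied on most:

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data: only 2 of the 6 features carry signal
X, y = make_classification(n_samples=300, n_features=6, n_informative=2,
                           n_redundant=0, random_state=0)
names = [f'feature_{i}' for i in range(X.shape[1])]

rf = RandomForestClassifier(n_estimators=100, random_state=50).fit(X, y)

# Same layout as the notebook's feature_importances dataframe
feature_importances = pd.DataFrame({'feature': names,
                                    'importance': rf.feature_importances_})

# Sort so the most important features come first
top = (feature_importances
       .sort_values('importance', ascending=False)
       .reset_index(drop=True))
print(top.head())
```

Scikit-Learn's impurity-based importances are normalized to sum to 1 and can be biased toward features with many distinct values, so they are a rough guide rather than a definitive ranking.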

| { | |

| "metadata": { | |

| "_cell_guid": "25145966-669e-426d-89a3-98e30b861057", | |

| "_uuid": "1da4b02502388d2b8a2bc5376027c5bef50272f3", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

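| "source": "# Make a submission dataframe\n# .copy() makes an independent frame, so adding a column does not trigger a SettingWithCopyWarning\nsubmit = app_test[['SK_ID_CURR']].copy()\nsubmit['TARGET'] = predictions\n\n# Save the submission dataframe\nsubmit.to_csv('random_forest_baseline.csv', index = False)", | |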

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "cf6f600ed10c511dd26d4bd5efa7997ab8d6916a" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "These predictions will also be available when we run the entire notebook. \n\n__This model should score around 0.678 when submitted.__" | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "43d979aed7cdfd6d7bd6a995b5756a384bd2b7dc" | |

| }, | |

| "cell_type": "markdown", | |

| "source": "### Make Predictions using Engineered Features\n\nThe only way to see if the polynomial features and domain knowledge features improved the model is to train and test a model on these features! We can then compare the submission performance to that of the model without these features to gauge the effect of our feature engineering." | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "d9d49008fb73b8d15c797850c64d5e6f81375163", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |

| "trusted": true | |

| }, | |

| "cell_type": "code", | |

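| "source": "# SimpleImputer replaces the Imputer class that was removed from sklearn.preprocessing\nfrom sklearn.impute import SimpleImputer\nfrom sklearn.preprocessing import MinMaxScaler\nfrom sklearn.ensemble import RandomForestClassifier\n\npoly_features_names = list(app_train_poly.columns)\n\n# Impute the polynomial features (medians learned from the training set only)\nimputer = SimpleImputer(strategy = 'median')\n\npoly_features = imputer.fit_transform(app_train_poly)\npoly_features_test = imputer.transform(app_test_poly)\n\n# Scale the polynomial features to 0-1 using the training range\nscaler = MinMaxScaler(feature_range = (0, 1))\n\npoly_features = scaler.fit_transform(poly_features)\npoly_features_test = scaler.transform(poly_features_test)\n\nrandom_forest_poly = RandomForestClassifier(n_estimators = 100, random_state = 50, verbose = 1, n_jobs = -1)", | |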

| "execution_count": null, | |

| "outputs": [] | |

| }, | |

| { | |

| "metadata": { | |

| "_uuid": "a7d3f3b6cdf8231832c56224c8a694056e456593", | |

| "collapsed": true, | |

| "jupyter": { | |

| "outputs_hidden": true | |

| }, | |