Last active

March 30, 2017 21:06

-

-

Save pkipsy/77b222a9863a7f9369149f35d222160a to your computer and use it in GitHub Desktop.

Estimating Sequence Probabilities with Scrapy and USENET

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| { | |

| "cells": [ | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "# Estimating Mondegreen Probabilities with Scrapy & USENET\n", | |

| "## June 2014\n", | |

| "\n", | |

| "### The Case of Lady Mondegreen\n", | |

| "**Purple Haze**, recorded in 1967, is testament to Jimi Hendrix's singular genius. The rock anthem, which is often cited as one of the greatest guitar songs of all time, has been covered by artists ranging from Ozzy Osbourne to the Kronos Quartet, and parodied by the likes of Frank Zappa and Cheech and Chong. Among linguists, it is also famous for another reason: its frequently misunderstood lyrics. The way Hendrix penned it, the opening verse reads like this:\n", | |

| "\n", | |

| ">Purple haze all in my brain \n", | |

| "Lately things just don't seem the same \n", | |

| "Actin' funny, but I don't know why \n", | |

| "'Scuse me while I kiss the sky. \n", | |

| "\n", | |

| "But many listeners could swear that what Jimi was really singing was, *'scuse me while I kiss this guy*. The mishearing is a classic case of a **mondegreen**: the mistaking of a word or phrase for a similar sounding expression.\n", | |

| "\n", | |

| "In his widely read work, **The Language Instinct**, Harvard psychologist Steven Pinker introduces the term to make a contentious theoretical point:\n", | |

| "\n", | |

| ">Another demonstration that speech perception is not [driven by expectation] comes from an illusion that the columnist Jon Carroll has called the mondegreen, after his mis-hearing of the folk balland \"The Bonnie Earl O'Moray\":\n", | |

| ">>Oh, ye hielands and ye lowlands, \n", | |

| "Oh, where ha ye been? \n", | |

| "They have slain the Earl of Moray, \n", | |

| "And laid him on the green. \n", | |

| "\n", | |

| ">He had always thought that the lines were \"They have slain the Earl of Moray, And Lady Mondegreen.\"\n", | |

| "\n", | |

| "In this instance, Pinker is using mondegreens to make a claim that **expectation** - i.e., what we expect to hear based on prior context or commonness of expression - does not play a key role in how we process spoken language. He trots out a number of examples, then muses:\n", | |

| "\n", | |

| ">The interesting thing about mondegreens is that the mis-hearings are generally less plausible than the intended lyrics. In no way do they bear out any sane listener's general expectations of what a speaker is likely to say or mean. [...] Apparently, listeners lock in to some set of words that fit the sound and that hang together more or less as English words and phrases, but plausibility and general expectations are not running the show.\n", | |

| "\n", | |

| "How ever did he come to that conclusion, I wondered? Today, nearly two decades after **The Language Instinct** was published, there are few cognitive linguists who would claim that expectation is not a matter of *central* importance to how we comprehend spoken language. So what led Pinker to this conclusion?\n", | |

| "\n", | |

| "Perhaps it has something to do with his stock of examples. A simple story about how our expectations give rise to mishearings will certainly be complicated by deliberately amusing misunderstandings. Such ‘intended accidents’ are often included in collections of mondegreens precisely because of their entertainment value, but would probably be better classified as a form of punning. Several of the examples Pinker cites fall into this category, like the substitution of \"lead us not into Penn Station\" for \"lead us not into temptation\" in the Lord's Prayer.\n", | |

| "\n", | |

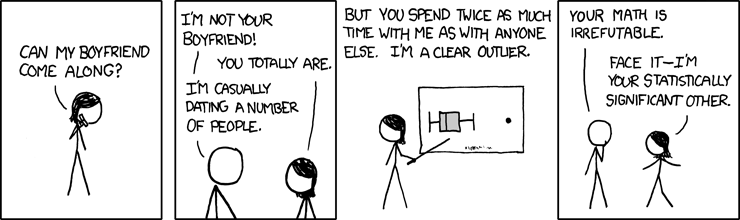

| "The problem with using examples of this type, is that clever word play achieves its effects by *subverting* - rather than conforming to - our expectations. Consider the phrase **statistically significant other**, which serves as the punchline to an XKCD comic. Both ‘statistically significant’ and ‘significant other’ are common expressions, but are used in such different contexts – statistics and dating, respectively – that we hardly expect to see them together. The phrase fuses these collocations into a linguistic chimera, that's part romcom, part stats class, and funny not in spite of, but *because of* the mismatch. Many deliberate mishearings work in a similar manner, by introducing a mischievious sound-a-like that transforms the meaning into something subversive, humorous, or naughty. Nevertheless, there is still reason to believe that for genuine mishearings – of the truly accidental variety – listeners’ prior expectations might indeed play a role, priming an incorrect read on what was intended.\n", | |

| "\n", | |

| "\n", | |

| "\n", | |

| "To pursue this question further, we can investigate **misheard song lyrics**, a rich source of mondegreens. A key question is whether listeners tend to mishear words or phrases that are actually more probable in context than the original. To give a simple example, my name, 'Melody' – which is comparatively rare – is often misheard as ‘Melanie’, the far more common moniker. This problem plagues idiom interpetation as well: ‘beyond reproach’ is misheard as ‘beyond approach’, ‘herding cats’ as ‘herding cattle’, ‘catalytic converter’ as ‘Cadillac converter’, and so on. Will the same be true of lyrics?\n", | |

| "\n", | |

| "### Examining Mondegreens at Scale\n", | |

| "\n", | |

| "In order to estimate the likelihood of a particular sequence of words - such as a turn of phrase, or a misheard verse - a common method is to employ a stochastic model that estimates the probability of the overall string on the basis of the observed probabilities of its subcomponents. The aim of such a **language model** is to supply a reliable approximation of the likelihood of a given linguistic sequence. Here, our interest is in to compare the likelihood of sequences of interest: specifically, song lyrics as intended vs. as misunderstood. \n", | |

| "\n", | |

| "To obtain materials, we can look to [kissthisguy.com](http://www.kissthisguy.com/), an online repository of misheard song lyrics that supplies original song lyrics alongside their misinterpretations. We can first extract the contents of the database using [Scrapy](https://scrapy.org/), an open source web scraper for Python. Setting up a spider with Scrapy is as simple as supplying the desired start urls, a set of extraction rules, and the appropriate XPath Selectors (Scrapy’s version of regular expressions). Once the spider has finished its crawl, mined data is returned in a JSON format, as part of an unordered list of originals and modegreens. \n", | |

| "\n", | |

| "\t['Come in here, Dear boy, have a cigar']\n", | |

| "\t['Come and hit a deer, boy - have a cigar']\n", | |

| "So how do we build this thing? Scrapy asks us to modify code in three simple scripts: settings.py, pipelines.py, items.py. Here's what ours will look like:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": null, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "#settings.py\n", | |

| "BOT_NAME = 'kissthisguy'\n", | |

| "\n", | |

| "SPIDER_MODULES = ['kissthisguy.spiders']\n", | |

| "NEWSPIDER_MODULE = 'kissthisguy.spiders'\n", | |

| "\n", | |

| "#pipelines.py\n", | |

| "class KissthisguyPipeline(object):\n", | |

| " def process_item(self, item, spider):\n", | |

| " return item\n", | |

| " \n", | |

| "#items.py\n", | |

| "from scrapy.item import Item, Field\n", | |

| "\n", | |

| "class KissItem(Item):\n", | |

| " title = Field()\n", | |

| " link = Field()\n", | |

| " original = Field()\n", | |

| " mondegreen = Field()\n", | |

| " visit_id = Field()\n", | |

| " visit_status = Field()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Now we write our spider. We need to tell it the domain it will be crawling and the URLs to start from (we'll work with the URLs for each letter). We also need to supply some simple rules for how to traverse those URLS, and a list of item information we want it to extract." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": null, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "from scrapy.contrib.spiders import CrawlSpider, Rule\n", | |

| "from scrapy.selector import HtmlXPathSelector\n", | |

| "from scrapy.contrib.linkextractors.sgml import SgmlLinkExtractor\n", | |

| "\n", | |

| "from kissthisguy.items import KissItem\n", | |

| "\n", | |

| "class KissSpider(CrawlSpider):\n", | |

| " name = \"kissthisguy\"\n", | |

| " allowed_domains = [\"kissthisguy.com\"]\n", | |

| " start_urls = ['http://www.kissthisguy.com/A-songs.htm', 'http://www.kissthisguy.com/B-songs.htm', 'http://www.kissthisguy.com/C-songs.htm', 'http://www.kissthisguy.com/D-songs.htm', 'http://www.kissthisguy.com/E-songs.htm', 'http://www.kissthisguy.com/F-songs.htm', 'http://www.kissthisguy.com/G-songs.htm', 'http://www.kissthisguy.com/H-songs.htm', 'http://www.kissthisguy.com/I-songs.htm', 'http://www.kissthisguy.com/J-songs.htm', 'http://www.kissthisguy.com/K-songs.htm', 'http://www.kissthisguy.com/L-songs.htm', 'http://www.kissthisguy.com/M-songs.htm', 'http://www.kissthisguy.com/N-songs.htm', 'http://www.kissthisguy.com/O-songs.htm', 'http://www.kissthisguy.com/P-songs.htm', 'http://www.kissthisguy.com/Q-songs.htm', 'http://www.kissthisguy.com/R-songs.htm', 'http://www.kissthisguy.com/S-songs.htm', 'http://www.kissthisguy.com/T-songs.htm', 'http://www.kissthisguy.com/U-songs.htm', 'http://www.kissthisguy.com/V-songs.htm', 'http://www.kissthisguy.com/W-songs.htm', 'http://www.kissthisguy.com/X-songs.htm', 'http://www.kissthisguy.com/Y-songs.htm', 'http://www.kissthisguy.com/Z-songs.htm']\n", | |

| " \n", | |

| " rules = (\n", | |

| " Rule(SgmlLinkExtractor(allow=(r'\\d+song.*'))),\n", | |

| " Rule(SgmlLinkExtractor(allow=(r'\\d+misheard.htm')), callback='parse_kiss')\n", | |

| " )\n", | |

| " \n", | |

| " def parse_kiss(self, response):\n", | |

| " import urlparse\n", | |

| " hxs = HtmlXPathSelector(response)\n", | |

| " items = []\n", | |

| " item = KissItem()\n", | |

| " #\n", | |

| " title = hxs.select('//meta[@name=\"description\"]').re(r'\\\"Misheard\\ \\(wrong\\)\\ +.+\\\"')\n", | |

| " item['title'] = title\n", | |

| " #\n", | |

| " linknum = hxs.select('//input[@name=\"SubmissionID\"]').re(r'\\d+')\n", | |

| " item['link'] = linknum\n", | |

| " #\n", | |

| " original = hxs.select('//div[@class=\"data\"]/table/tr/td[@style=\"font-size: 15px;\"]/text()').extract()\n", | |

| " item['original'] = original\n", | |

| " #\n", | |

| " #monde = hxs.select('//div[@class=\"data\"]/h1/text()').re(r'\\r\\n\\s*(.*)')\n", | |

| " monde = hxs.select('//div[@class=\"data\"]/h1/text()').extract()\n", | |

| " mondegreen = []\n", | |

| " for i in range(1,len(monde)):\n", | |

| " if i == 1:\n", | |

| " mondegreen.append(monde[1][4:])\n", | |

| " else:\n", | |

| " mondegreen.append(monde[i])\n", | |

| " item['mondegreen'] = mondegreen\n", | |

| " #\n", | |

| " items.append(item)\n", | |

| " return items\n", | |

| "\n", | |

| "spider = KissSpider()" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Once we have all our scripts in place, here's what the run command looks like:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": null, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "#start project\n", | |

| "scrapy startproject kissthisguy\n", | |

| "\n", | |

| "#run command\n", | |

| "scrapy crawl kissthisguy -s JOBDIR=crawls/kissthisguy-1 -o scraped_kiss.json -t json" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "It is really that easy! \n", | |

| "\n", | |

| "Once we're finished scraping, let's take a look at what we've scraped. We can start by importing the .json file into Python." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": null, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "import json\n", | |

| "with open('scraped_kiss_new.json') as f:\n", | |

| " d = json.load(f)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Here's a quick peak at the structure of the file:\n", | |

| "```python\n", | |

| ">>> d[0][u'original']\n", | |

| "[u'Come in here, Dear boy, have a cigar']\n", | |

| "\n", | |

| ">>> d[0][u'mondegreen']\n", | |

| "[u'Come and hit a deer, boy - have a cigar']\n", | |

| "```\n", | |

| "How big is it?\n", | |

| "```python\n", | |

| ">>> len(d)\n", | |

| "47992\n", | |

| "```" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Given the resulting data – some *48,000 pairs* – we can now write a simple script to select suitable content for analysis. The script's job? To isolate the specific parts of the verse that were misheard, and from there, to identify mondegreens that are **the same length** as the phrase fround in the original lyrics, so that we can directly compare them. We should also remove single word entries, since those aren't of interest to us.\n", | |

| "\n", | |

| "In the interest of time, we'll gloss over the code for this - it's not very complicated. The important thing to know is that when we run such this script, we find that only about **41%** of the original yield meet these criteria. So we need to create a separate directory of **same-length** modegreens.\n", | |

| "\n", | |

| "```\n", | |

| "{'link': ['2046'], 'original': ['We are weak,', 'But he is strong'], 'mondegreen': ['They are wheat,', 'But He is straw.'], 'title': ['\"Hymns Lyrics from Jesus Loves Me\"']}\n", | |

| "\n", | |

| "{'link': ['31118'], 'original': ['Smoke on the water,', 'Fire in the sky.'], 'mondegreen': ['Butt like a moron,', 'tire in the night.'], 'title': ['\"Deep Purple Lyrics from Smoke On The Water\"']}\n", | |

| "```\n", | |

| "Now that we have these to hand, we want to calculate the transitional probabilities of the original and misheard phrases, using a first-order **Markov model** of each string. An n-order Markov model assumes that the likelihood of any given word in a sequence can be estimated from the prior n-words, rather than the entire preceding chain. For example, in a first-order Markov model, the probability of the sequence is approximated as the product of the probabilities of each w<sub>n</sub> given w<sub>n-1</sub>; in a second-order model, as the product of the probabilities of each w<sub>n</sub> given the sequence w<sub>n-1</sub>+w<sub>n-2</sub>; and so on.\n", | |

| "\n", | |

| "$$P(S)=\\prod _{i=1}^{N}P(w_i|w_1...w_{i-1}) \\approx \\prod _{i=1}^{N}P(w_{i-n+1}|w_1...w_{i-1}) \\equiv P_n(S)$$\n", | |

| "\n", | |

| "To facilitate these calculations, we should first strip the phrases of punctuation and transform them to lowercase. We can then decompose them into a tupples consisting of: w<sub>n</sub>+w<sub>n+1</sub>, w<sub>n</sub>\n", | |

| "\n", | |

| "To obtain more accurate frequency counts, we may then wish to replace unigrams with their **lemmas**, using the [WordNet Lemmatizer](http://www.nltk.org/_modules/nltk/stem/wordnet.html), available through NLTK.\n", | |

| "\n", | |

| "Here's a code snippet to get you started:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": null, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "import string\n", | |

| "from nltk.stem.wordnet import WordNetLemmatizer\n", | |

| "lmtzr = WordNetLemmatizer()\n", | |

| "punct = set(['!', '#', '\"', '%', '$', \"'\", '&', ')', '(', '+', '*', ',', '/', '.', ';', ':', '=', '<', '?', '>', '@', '[', ']', '\\\\', '_', '^', '`', '{', '}', '|', '~', '\\t', '\\n', '\\r'])\n", | |

| "hyphen = ['-']" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "Now we need to get **frequency information** for the unigrams and bigrams in our tupples, to estimate the transitional probabilities between words. One method I tried was to query **Google's API**, to get search result information. However, Google imposes a 10k query-limit, which won't work for us here. Instead, we can rely on the [USENET corpus](http://www.psych.ualberta.ca/~westburylab/downloads/usenetcorpus.download.html) to supply our unigram and bigram counts. In the following example, the first-order Markov probability of each string is computed from p(w<sub>1</sub>), p(w<sub>1</sub> + w<sub>2</sub> | w<sub>1</sub>), and p(w<sub>2</sub> + w<sub>3</sub> | w<sub>2</sub>) of the words in the original and mondegreen.\n", | |

| "```\n", | |

| "{'original': [('your protein', 'your'), ('protein pills', 'protein')], 'mondegreen': [('your fourteen', 'your'), ('fourteen pills', 'fourteen')]}\n", | |

| "\n", | |

| "{'original': [0.00020144205127973474, 1.7245630148938372e-05, 0], 'mondegreen': [0.00020144205127973474, 0, 0]}\n", | |

| "{'callback': 36, 'original': 0.0, 'mondegreen': 0.0}\n", | |

| "```\n", | |

| "Once we have all the data in place, we can run a statistical test to determine whether our originals or our mondegreens are higher probability. In the simplest case:" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": null, | |

| "metadata": { | |

| "collapsed": true | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "from scipy import stats\n", | |

| "paired_sample = stats.ttest_rel(original, mondegreen)\n", | |

| "print \"The t-statistic is %.3f and the p-value is %.6f.\" % paired_sample" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": {}, | |

| "source": [ | |

| "However, that would be ignoring a significant problem we encountered in collecting this data in the first place. From our already culled list, the entries that contain at least one frequency count of 0 is estimated at a whopping 77.8% (60% of original lyrics and 71% of mondegreens), making clear the problem of **data sparsity**. This dramatically reduces the set of reliable data. It also means that we may be biasing our sample, as we are throwing out more data in which the mondegreen produces a 0-count.\n", | |

| "\n", | |

| "## Smoothing Approaches to Data Sparsity\n", | |

| "\n", | |

| "The problem of [data sparsity](http://www.vinartus.net/spa/95c.pdf) guarantees that even when we keep the length of the sequence being estimated small, many *legal* combinations of words will never be observed, even when the corpus from which we draw our estimates is quite large. To combat this problem, various techniques have been proposed that redistribute probability mass over events in an attempt to better approximate the expected frequencies. Four major approaches in this domain include discounting, back-off, linear interpolation, and similarity-based smoothing.\n", | |

| "\n", | |

| "In additive smoothing, a simple form of **discounting**, each bigram frequency count is incremented by a small positive value, such as 1 (or 𝛿, where 0 < 𝛿 < 1). While this eliminates the zero-count problem, it has the undesirable side effect of incrementing both legitimate and illegitimate events similarly, and of flattening the distribution by reallocating a great deal of the probability mass to unseen events. It is thought to be one the worst performing methods. More sophisticated reallocation schemes, known as **backoff methods**, transfer value to unseen events in a number of more principled (and more successful) ways. \n", | |

| "\n", | |

| "Another set of techniques, like Jelinek-Mercer, address data sparsity by **interpolating** between unigram and bigram counts for both observed and unobserved events. Expected frequencies are estimated as a linear combination of both the observed conditional probability of an event, p(w<sub>n</sub>|w<sub>n-1</sub>) and its marginal probability, p(w<sub>n</sub>), with a free parameter λ to weight their respective contributions over instances. Higher-order variations of the technique take a recursive formulation.\n", | |

| "\n", | |

| "$$P_{JM}(w_i|w_{i-1}) \\equiv \\lambda P_{ML}(w_i|w_{i-1}) + (1 - \\lambda) P_{ML}(w_i)$$\n", | |

| "\n", | |

| "Witten-Bell is a special case of this method in which λ is fixed in such a way that smoothing tapers off as more data is observed: i.e., the maximum likelihood estimate of the bigram frequency is weighted more highly.\n", | |

| "\n", | |

| "While all of these methods differ in the details, they are essentially similar in that they use information from a lower-order distribution to improve estimates of a higher-order one. In recent years, **similarity** and **clustering-based approaches** have been [proposed](http://www.sfs.uni-tuebingen.de/~mramscar/papers/Draft_Yarlett_Similarity.pdf) to further refine these models. These methods are founded on the insight that in estimating the likelihood of rare or unseen sequences, directly observed estimates for distributionally similar words can be used to fill the gap. For example, the unobserved *p*(badger|X) can be estimated from the raw relative frequencies of *p*(cat|X) and *p*(skunk|X). Distributional similarity, a measure of the extent to which a pair of words occurs in similar linguistic contexts, is well-captured by vector space models (VSMs), such as [LSA](http://www.stat.cmu.edu/~cshalizi/350/2008/readings/Landauer-Dumais.pdf), and can be used to identify the nearest neighbors of a target word. The distribution of words following these neighbors can then be weighted and summed, supplying an alternative likelihood estimate.\n", | |

| "\n", | |

| "## Future Project Ideas\n", | |

| "\n", | |

| "This simple exercise makes clear the severity of the data sparsity problem, exposing some of the limitations of working with an unsmoothed n-gram model. However, it suggests a number of future project ideas.\n", | |

| "\n", | |

| "* Compare the distribution of frequency estimates produced by various discounting and backoff models, working with tools from the [Cambridge Statistical Language Modeling Toolkit](http://www.speech.cs.cmu.edu/SLM/toolkit.html). Do they all reach the same conclusion?\n", | |

| "\n", | |

| "Here, we defined ‘context’ as the several-word window in which the mondegreen appears. However, the notion of context need not be so narrowly constrained, and could readily be extended to include the broader discourse context - i.e., the previous lines or stanzas in the song. In the sentence processing literature, there is a good deal of evidence to suggest that online comprehension is a flexible inferential process, that involves the integration of prior knowledge and semantic context with incoming information at [multiple levels](https://www.ncbi.nlm.nih.gov/pubmed/19746299) of analysis. Accordingly, we might want to:\n", | |

| "\n", | |

| "* Write a script to scrape entire song lyrics, to inspect the full context preceding the mondegreen. Use similarity metrics, like [LSA cosine](http://scikit-learn.org/stable/modules/decomposition.html#truncated-singular-value-decomposition-and-latent-semantic-analysis), to examine the extent to which the mishearing was predicted by the preceding context. Compare the contribution of local and global factors. \n", | |

| "\n", | |

| "Or, we might ask:\n", | |

| "\n", | |

| "* Do mishearings occur when what is being sung is unlikely given the preceding context? After extracting the full set of song lyrics, test whether the probability of lines in which mondegreens occur are equiprobable to other lines in the song. \n", | |

| "\n", | |

| "Finally, we might want to conduct a more fine-grained analysis of the errors that occur:\n", | |

| "\n", | |

| "* In the online [Eggcorn Database](http://eggcorns.lascribe.net/), errors are categorized in various ways, including in terms of sound changes, such as the cot/caught merger and final d/t-deletion. The case for expectation might be stronger if these types of mistakes could be discriminated from other kinds of errors. " | |

| ] | |

| } | |

| ], | |

| "metadata": { | |

| "kernelspec": { | |

| "display_name": "Python [default]", | |

| "language": "python", | |

| "name": "python2" | |

| }, | |

| "language_info": { | |

| "codemirror_mode": { | |

| "name": "ipython", | |

| "version": 2 | |

| }, | |

| "file_extension": ".py", | |

| "mimetype": "text/x-python", | |

| "name": "python", | |

| "nbconvert_exporter": "python", | |

| "pygments_lexer": "ipython2", | |

| "version": "2.7.12" | |

| } | |

| }, | |

| "nbformat": 4, | |

| "nbformat_minor": 1 | |

| } |

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment