Last active

June 20, 2024 14:32


pe-openai-lecture.ipynb


| { | |

| "cells": [ | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "view-in-github", | |

| "colab_type": "text" | |

| }, | |

| "source": [ | |

| "<a href=\"https://colab.research.google.com/gist/wesslen/00fad183a037559059464a09f32b1e0a/pe-openai-lecture.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "3HIuaawzR8RR" | |

| }, | |

| "source": [ | |

| "# Getting Started with Prompt Engineering\n", | |

| "\n", | |

| "This notebook contains examples and exercises for learning about prompt engineering. It is adapted, with modifications, from a notebook by Elvis Saravia (DAIR.AI).\n", | |

| "\n", | |

| "We will be using the [OpenAI APIs](https://platform.openai.com/) for all examples. Unless stated otherwise, the examples use `temperature=0.7` and `top_p=1`, the defaults set in `set_open_params` below." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "jego_SZUR8RR" | |

| }, | |

| "source": [ | |

| "---" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "F0do4hkhR8RS" | |

| }, | |

| "source": [ | |

| "## 1. Prompt Engineering Basics: OpenAI API and configurations\n" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "uOMd4TbUR8RS" | |

| }, | |

| "source": [ | |

| "Below we are loading the necessary libraries, utilities, and configurations." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 1, | |

| "metadata": { | |

| "id": "R1KWAb3_R8RS" | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "%%capture\n", | |

| "# update or install the necessary libraries\n", | |

| "!pip install --upgrade openai==1.35.1\n", | |

| "!pip install --upgrade langchain==0.2.5\n", | |

| "!pip install --upgrade langchain-openai==0.1.8\n", | |

| "!pip install langchain-community==0.2.5" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 4, | |

| "metadata": { | |

| "id": "yANHPO1MR8RS" | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "import openai\n", | |

| "import os\n", | |

| "import IPython\n", | |

| "from langchain_openai import OpenAI  # maintained replacement for the deprecated langchain.llms.OpenAI\n", | |

| "from google.colab import userdata" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "0YhuFzr-R8RS" | |

| }, | |

| "source": [ | |

| "Load the API key. Since this notebook runs in Colab, it uses `userdata.get()`: add your key as a Colab secret named `OPENAI_API_KEY` and enable notebook access for it.\n", | |

| "\n", | |

| "\n", | |

| "\n", | |

| "Alternatively, outside Colab you can put `OPENAI_API_KEY` in a `.env` file and load it with `python-dotenv`." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 5, | |

| "metadata": { | |

| "id": "7Qs7ie0kR8RS" | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "# API configuration\n", | |

| "openai.api_key = userdata.get(\"OPENAI_API_KEY\")\n", | |

| "\n", | |

| "# for LangChain\n", | |

| "os.environ[\"OPENAI_API_KEY\"] = userdata.get(\"OPENAI_API_KEY\")" | |

| ] | |

| }, | |
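The setup notes above describe two ways to load the key: Colab secrets and a `.env` file read by `python-dotenv`. The sketch below tries Colab secrets first, then a local `.env` file, then the plain environment. `load_openai_key` is a hypothetical helper, not part of the original notebook. The `.env` step only runs if `python-dotenv` happens to be installed.

```python
import os


def load_openai_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the OpenAI API key from Colab secrets, a .env file, or the environment."""
    # 1) Colab secrets: `google.colab` only exists inside Colab, so any failure
    #    (not in Colab, secret missing, notebook access disabled) falls through.
    try:
        from google.colab import userdata
        key = userdata.get(var_name)
        if key:
            return key
    except Exception:
        pass

    # 2) Optional ./.env file via python-dotenv; load_dotenv() does not override
    #    variables that are already set in the environment.
    try:
        from dotenv import load_dotenv
        load_dotenv(dotenv_path=".env")
    except ImportError:
        pass

    # 3) Plain environment variable.
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"{var_name} not found in Colab secrets, .env, or the environment")
    return key
```

With this helper, `openai.api_key = load_openai_key()` would replace the `userdata.get` call. The same value can still be copied into `os.environ` for LangChain.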

| { | |

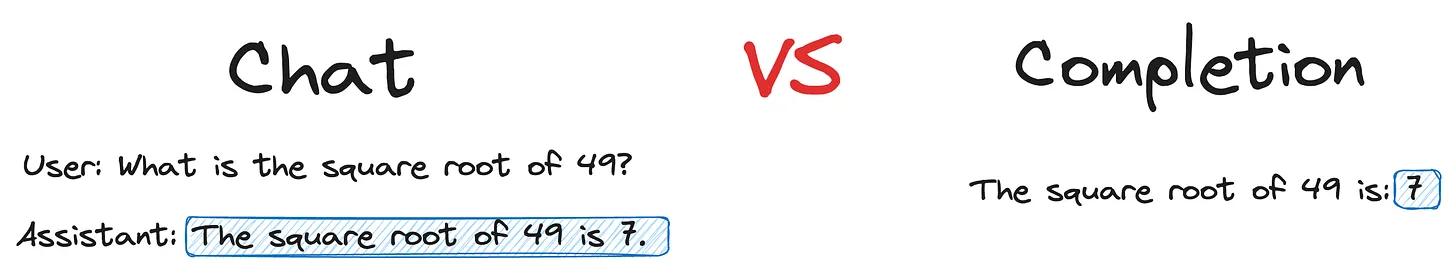

| "cell_type": "markdown", | |

| "source": [ | |

| "### Types of Endpoints\n", | |

| "\n", | |

| "OpenAI's API, like many other LLM APIs, typically offers two types of endpoints: completion and chat.\n", | |

| "\n", | |

| "\n", | |

| "\n", | |

| "Source: [Generally Intelligent SubStack](https://generallyintelligent.substack.com/p/chat-vs-completion-endpoints)\n", | |

| "\n", | |

| "The completions endpoint came first. However, OpenAI has [announced](https://community.openai.com/t/completion-models-are-now-considered-legacy/656302) that completion models are now considered legacy.\n", | |

| "\n", | |

| "The `/completions` endpoint returns the completion for a single prompt and takes a single string as input, whereas `/chat/completions` returns a response to a dialog and requires its input as a list of messages representing the conversation history. Instead of taking a `prompt`, chat models take a list of `messages` as input and return a model-generated message as output.\n", | |

| "\n", | |

| "\n", | |

| "\n", | |

| "| MODEL FAMILIES | EXAMPLES | API ENDPOINT |\n", | |

| "|------------------------------|--------------------------------------------------|--------------------------------------------|\n", | |

| "| Newer models (2023–) | gpt-4, gpt-4-turbo-preview, gpt-3.5-turbo | https://api.openai.com/v1/chat/completions |\n", | |

| "| Updated legacy models (2023) | gpt-3.5-turbo-instruct, babbage-002, davinci-002 | https://api.openai.com/v1/completions |\n", | |

| "\n", | |

| "\n", | |

| "Although the chat format is designed to make multi-turn conversations easy, it’s just as useful for single-turn tasks without any conversation.\n", | |

| "\n", | |

| "We're going to use the chat endpoint, `openai.chat.completions`.\n", | |

| "\n", | |

| "For more details, check out [OpenAI's docs](https://platform.openai.com/docs/guides/text-generation/chat-completions-vs-completions)." | |

| ], | |

| "metadata": { | |

| "id": "nAbquZOc9hjP" | |

| } | |

| }, | |
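To make the difference between the two endpoints concrete, the sketch below shows both request shapes side by side. It also includes a hypothetical helper, `prompt_to_messages`, that wraps a completion-style prompt in the chat `messages` format. The dictionaries are simplified request bodies that contain only `model` and the input field, and no API call is made.

```python
from typing import Dict, List, Optional


def prompt_to_messages(prompt: str, system: Optional[str] = None) -> List[Dict[str, str]]:
    """Wrap a single completion-style prompt in the chat `messages` format."""
    messages: List[Dict[str, str]] = []
    if system is not None:
        # Optional system message that sets the assistant's overall behavior.
        messages.append({"role": "system", "content": system})
    # The prompt itself becomes a single user turn.
    messages.append({"role": "user", "content": prompt})
    return messages


# Completion-style body (legacy /v1/completions): one string under "prompt".
completion_body = {"model": "gpt-3.5-turbo-instruct", "prompt": "Say hello."}

# Chat-style body (/v1/chat/completions): a list of role/content messages.
chat_body = {
    "model": "gpt-3.5-turbo",
    "messages": prompt_to_messages("Say hello.", system="You are a helpful assistant."),
}
```

A single-turn task needs only one user message. Multi-turn dialogs, like the World Series example that follows, simply append more role/content pairs.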

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "response = openai.chat.completions.create(\n", | |

| " model=\"gpt-3.5-turbo\",\n", | |

| " messages=[\n", | |

| " {\"role\": \"system\", \"content\": \"You are a helpful assistant.\"},\n", | |

| " {\"role\": \"user\", \"content\": \"Who won the world series in 2020?\"},\n", | |

| " {\"role\": \"assistant\", \"content\": \"The Los Angeles Dodgers won the World Series in 2020.\"},\n", | |

| " {\"role\": \"user\", \"content\": \"Where was it played?\"}\n", | |

| " ]\n", | |

| ")\n", | |

| "\n", | |

| "response" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/" | |

| }, | |

| "id": "fKY-ePiJC3y6", | |

| "outputId": "4f1c3305-602c-4028-d984-8b593dc9df4a" | |

| }, | |

| "execution_count": 6, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "ChatCompletion(id='chatcmpl-9cCtiK7KDXCClcKdIXuEoINrjNwEq', choices=[Choice(finish_reason='stop', index=0, logprobs=None, message=ChatCompletionMessage(content='The 2020 World Series between the Los Angeles Dodgers and the Tampa Bay Rays was played at Globe Life Field in Arlington, Texas.', role='assistant', function_call=None, tool_calls=None))], created=1718893070, model='gpt-3.5-turbo-0125', object='chat.completion', service_tier=None, system_fingerprint=None, usage=CompletionUsage(completion_tokens=27, prompt_tokens=53, total_tokens=80))" | |

| ] | |

| }, | |

| "metadata": {}, | |

| "execution_count": 6 | |

| } | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "Typically, what we're most interested in is the `choices[0].message.content`:" | |

| ], | |

| "metadata": { | |

| "id": "jcUqxHrqDVeW" | |

| } | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "response.choices[0].message.content" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 53 | |

| }, | |

| "id": "TrAlcD1KEJ0X", | |

| "outputId": "ceb8c611-87da-4ec0-cf1f-0bbb15a8045b" | |

| }, | |

| "execution_count": 7, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "'The 2020 World Series between the Los Angeles Dodgers and the Tampa Bay Rays was played at Globe Life Field in Arlington, Texas.'" | |

| ], | |

| "application/vnd.google.colaboratory.intrinsic+json": { | |

| "type": "string" | |

| } | |

| }, | |

| "metadata": {}, | |

| "execution_count": 7 | |

| } | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "To make the parameters easier to modify, we can wrap the call in a function that takes the parameters and messages as arguments." | |

| ], | |

| "metadata": { | |

| "id": "_JHq92uCDF38" | |

| } | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 8, | |

| "metadata": { | |

| "id": "S9HGq2JQR8RS" | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "def get_completion(params, messages):\n", | |

| " \"\"\"Get a chat completion from the OpenAI API.\"\"\"\n", | |

| "\n", | |

| " response = openai.chat.completions.create(\n", | |

| " model = params['model'],\n", | |

| " messages = messages,\n", | |

| " temperature = params['temperature'],\n", | |

| " max_tokens = params['max_tokens'],\n", | |

| " top_p = params['top_p'],\n", | |

| " frequency_penalty = params['frequency_penalty'],\n", | |

| " presence_penalty = params['presence_penalty'],\n", | |

| " )\n", | |

| " return response" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "There are a variety of parameters common to most LLM APIs. Because LLMs predict a probability distribution over their vocabulary for the next token (essentially auto-complete), they need a [sampling method](https://huyenchip.com/2024/01/16/sampling.html) to choose which token comes next.\n", | |

| "\n", | |

| "| Parameter | Description |\n", | |

| "|--------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------|\n", | |

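| "| `model` | Specifies the model to be used for generating responses. Different models may have different capabilities, size, and performance characteristics. |\n", | |

| "| `max_tokens` | The maximum number of tokens the model may generate in the response. Generation stops early (with `finish_reason=\"length\"`) once this limit is reached. |\n", | |

| "| `frequency_penalty` | Penalizes tokens in proportion to how often they have already appeared, reducing verbatim repetition. Ranges from -2.0 to 2.0; 0 disables it. |\n", | |

| "| `presence_penalty` | Penalizes any token that has appeared at least once, nudging the model toward new topics. Ranges from -2.0 to 2.0; 0 disables it. |\n", | |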

| "\n", | |

| "Here's [an outline](https://www.promptingguide.ai/introduction/settings) of different common LLM parameters.\n" | |

| ], | |

| "metadata": { | |

| "id": "rqY79t7q8SFQ" | |

| } | |

| }, | |
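`get_completion` passes `frequency_penalty` and `presence_penalty` through to the API. Both adjust the logits of tokens that have already been generated. OpenAI's API documentation has described the adjustment as `mu[j] -= c[j] * frequency_penalty + (c[j] > 0) * presence_penalty`, where `c[j]` counts the prior occurrences of token `j`. The sketch below applies that rule to a toy dictionary of logits. `apply_penalties` and the example tokens are illustrative and are not the server's actual implementation.

```python
from collections import Counter
from typing import Dict, List


def apply_penalties(
    logits: Dict[str, float],
    generated: List[str],
    frequency_penalty: float = 0.0,
    presence_penalty: float = 0.0,
) -> Dict[str, float]:
    """Return logits adjusted for tokens that already appear in `generated`."""
    counts = Counter(generated)  # c[j]: how many times token j was generated so far
    adjusted = {}
    for token, logit in logits.items():
        c = counts[token]  # Counter returns 0 for unseen tokens
        # Frequency penalty scales with the count; presence penalty is a flat
        # one-time deduction for any token that has appeared at least once.
        adjusted[token] = logit - c * frequency_penalty - (1.0 if c > 0 else 0.0) * presence_penalty
    return adjusted


toy_logits = {"dog": 2.0, "cat": 1.5, "beagle": 1.0}
print(apply_penalties(toy_logits, ["dog", "dog", "beagle"], frequency_penalty=0.5, presence_penalty=0.3))
```

Here "dog" (generated twice) loses the most, "beagle" (once) loses less, and the unseen "cat" is unchanged.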

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "!openai --help" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/" | |

| }, | |

| "id": "ZZ24btuoHvu9", | |

| "outputId": "a8ec7065-36ed-413b-b14a-15af2ef6d114" | |

| }, | |

| "execution_count": 9, | |

| "outputs": [ | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "usage: openai [-h] [-v] [-b API_BASE] [-k API_KEY] [-p PROXY [PROXY ...]] [-o ORGANIZATION]\n", | |

| " [-t {openai,azure}] [--api-version API_VERSION] [--azure-endpoint AZURE_ENDPOINT]\n", | |

| " [--azure-ad-token AZURE_AD_TOKEN] [-V]\n", | |

| " {api,tools,migrate,grit} ...\n", | |

| "\n", | |

| "positional arguments:\n", | |

| " {api,tools,migrate,grit}\n", | |

| " api Direct API calls\n", | |

| " tools Client side tools for convenience\n", | |

| "\n", | |

| "options:\n", | |

| " -h, --help show this help message and exit\n", | |

| " -v, --verbose Set verbosity.\n", | |

| " -b API_BASE, --api-base API_BASE\n", | |

| " What API base url to use.\n", | |

| " -k API_KEY, --api-key API_KEY\n", | |

| " What API key to use.\n", | |

| " -p PROXY [PROXY ...], --proxy PROXY [PROXY ...]\n", | |

| " What proxy to use.\n", | |

| " -o ORGANIZATION, --organization ORGANIZATION\n", | |

| " Which organization to run as (will use your default organization if not\n", | |

| " specified)\n", | |

| " -t {openai,azure}, --api-type {openai,azure}\n", | |

| " The backend API to call, must be `openai` or `azure`\n", | |

| " --api-version API_VERSION\n", | |

| " The Azure API version, e.g. 'https://learn.microsoft.com/en-us/azure/ai-\n", | |

| " services/openai/reference#rest-api-versioning'\n", | |

| " --azure-endpoint AZURE_ENDPOINT\n", | |

| " The Azure endpoint, e.g. 'https://endpoint.openai.azure.com'\n", | |

| " --azure-ad-token AZURE_AD_TOKEN\n", | |

| " A token from Azure Active Directory, https://www.microsoft.com/en-\n", | |

| " us/security/business/identity-access/microsoft-entra-id\n", | |

| " -V, --version show program's version number and exit\n" | |

| ] | |

| } | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "def set_open_params(\n", | |

| " model=\"gpt-3.5-turbo\",\n", | |

| " temperature=0.7,\n", | |

| " max_tokens=256,\n", | |

| " top_p=1,\n", | |

| " frequency_penalty=0,\n", | |

| " presence_penalty=0,\n", | |

| "):\n", | |

| " \"\"\"Collect the OpenAI request parameters into a dict.\"\"\"\n", | |

| "\n", | |

| " openai_params = {}\n", | |

| "\n", | |

| " openai_params['model'] = model\n", | |

| " openai_params['temperature'] = temperature\n", | |

| " openai_params['max_tokens'] = max_tokens\n", | |

| " openai_params['top_p'] = top_p\n", | |

| " openai_params['frequency_penalty'] = frequency_penalty\n", | |

| " openai_params['presence_penalty'] = presence_penalty\n", | |

| " return openai_params" | |

| ], | |

| "metadata": { | |

| "id": "6EWfKXQfBgm8" | |

| }, | |

| "execution_count": 10, | |

| "outputs": [] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 11, | |

| "metadata": { | |

| "id": "8Ljy2AtbR8RT" | |

| }, | |

| "outputs": [], | |

| "source": [ | |

| "# basic example\n", | |

| "\n", | |

| "params = set_open_params(temperature = 0.7)\n", | |

| "\n", | |

| "prompt = \"Write a haiku about a beagle.\"\n", | |

| "\n", | |

| "messages = [\n", | |

| " {\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": prompt\n", | |

| " }\n", | |

| "]\n", | |

| "\n", | |

| "response = get_completion(params, messages)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 12, | |

| "metadata": { | |

| "id": "cijXEzzVR8RT", | |

| "outputId": "7e1b8589-eab2-49d2-b030-cd41c0a5a063", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 46 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Ears flopping in wind\nSniffing out scents in the air\nBeagle on the hunt" | |

| }, | |

| "metadata": {} | |

| } | |

| ], | |

| "source": [ | |

| "IPython.display.display(IPython.display.Markdown(response.choices[0].message.content))" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "### Temperature" | |

| ], | |

| "metadata": { | |

| "id": "nwmhE4TvI__c" | |

| } | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "36jqmKk7R8RT" | |

| }, | |

| "source": [ | |

| "\n", | |

| "| Parameter | Description |\n", | |

| "|--------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------|\n", | |

| "| `temperature` | Controls randomness in the response generation. A higher temperature results in more random responses, while a lower temperature produces more deterministic responses. |\n", | |

| "\n", | |

| "Try varying the temperature between 0 and 1.\n", | |

| "\n", | |

| "> In terms of application, you might want to use a lower temperature value for tasks like fact-based QA to encourage more factual and concise responses. For poem generation or other creative tasks, it might be beneficial to increase the temperature value." | |

| ] | |

| }, | |
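Conceptually, temperature divides the model's logits before the softmax that turns them into next-token probabilities. Values below 1 sharpen the distribution and values above 1 flatten it. The sketch below illustrates this on made-up logits. `softmax_with_temperature` is a hypothetical helper. Treating `temperature=0` as greedy (argmax) decoding is a common convention, not a description of OpenAI's server code.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw logits into next-token probabilities at the given temperature."""
    if temperature <= 0:
        # Convention: temperature 0 means greedy decoding (all mass on the argmax).
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0, 0.2, 0.7, 1.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(toy_logits, t)])
```

As the temperature rises, probability mass moves from the top token toward the alternatives. This is why the haiku below vary more at higher temperatures.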

| { | |

| "cell_type": "code", | |

| "execution_count": 13, | |

| "metadata": { | |

| "id": "2OxiV-uQR8RT", | |

| "outputId": "887bae48-9581-49d7-bd87-eaf3aac95eb9", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 902 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "Response with temperature=0:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Curious beagle nose\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Sniffing out adventures near\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Tail wags in delight" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with temperature=0.2:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Curious beagle nose\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Sniffing out adventures near\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Tail wags in delight" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with temperature=0.4:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Curious beagle nose\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Sniffing out every scent trail\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Tail wags in delight" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with temperature=0.6:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Playful beagle pup\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Sniffing out scents with delight\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Tail wagging happily" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with temperature=0.8:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Curious beagle nose\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Sniffing, following scents keen\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Tail wagging, ears flop" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with temperature=1:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Ears flop in the breeze\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Soft brown eyes full of mischief\n" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Beagle's loyal heart" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n" | |

| ] | |

| } | |

| ], | |

| "source": [ | |

| "import re\n", | |

| "\n", | |

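| "# Heuristic: haiku lines usually start with a capital letter, so split there to\n", | |

| "# display each line separately (a capitalized word mid-line will also split).\n", | |

| "def split_on_capital(text):\n", | |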

| " return re.findall(r'[A-Z][^A-Z]*', text)\n", | |

| "\n", | |

| "# Experiment with different temperature values\n", | |

| "for temp in [0, 0.2, 0.4, 0.6, 0.8, 1]:\n", | |

| " params = set_open_params(temperature=temp)\n", | |

| " response = get_completion(params, messages)\n", | |

| " lines = split_on_capital(response.choices[0].message.content.strip())\n", | |

| " print(f\"Response with temperature={temp}:\\n\")\n", | |

| " for line in lines:\n", | |

| " IPython.display.display(IPython.display.Markdown(line))\n", | |

| " print(\"-------------------\\n\")" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "[View how I used ChatGPT to iterate on this code](https://chat.openai.com/share/6211107a-d868-491b-8a74-ea4debb7a760)" | |

| ], | |

| "metadata": { | |

| "id": "46-IqrqgNFdy" | |

| } | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "> ### 🗒 Info: Is it possible to make LLMs deterministic and reproducible?\n", | |

| "\n", | |

| "> Mostly. Passing the same `seed` with otherwise identical parameters (for example, `temperature=0`) makes outputs largely reproducible, but OpenAI describes this as best effort: backend changes, tracked by the `system_fingerprint` response field, can still change results. Also, as the [OpenAI Cookbook](https://cookbook.openai.com/examples/reproducible_outputs_with_the_seed_parameter) puts it, \"while the seed ensures consistency, it does not guarantee the quality of the output.\"" | |

| ], | |

| "metadata": { | |

| "id": "TkSDDqo9iQl2" | |

| } | |

| }, | |
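The sketch below requests a best-effort reproducible completion with the `seed` parameter of `chat.completions.create`. `reproducible_completion` is a hypothetical helper. It returns `system_fingerprint` alongside the text because a changed fingerprint signals a backend change, which can alter outputs even with the same seed. The fingerprint can also be `None` for some models, as in the `ChatCompletion` output earlier in this notebook.

```python
def reproducible_completion(client, messages, model="gpt-3.5-turbo", seed=1234):
    """Request a best-effort reproducible chat completion.

    `client` is anything exposing `chat.completions.create`, e.g. the `openai`
    module configured earlier. Returns (text, system_fingerprint).
    """
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        temperature=0,  # remove sampling randomness as far as possible
        seed=seed,      # same seed + same parameters -> mostly the same output
    )
    return response.choices[0].message.content, response.system_fingerprint
```

In this notebook you could call `reproducible_completion(openai, messages)` twice and compare the two texts and fingerprints. The module-level `openai.chat.completions.create` used above accepts these same arguments.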

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "### Top P\n", | |

| "\n", | |

| "Used in nucleus sampling, it defines the probability mass to consider for token generation. A smaller `top_p` leads to more focused sampling.\n", | |

| "\n", | |

| "* `top_p` sorts tokens by probability, computes the cumulative probability, and cuts off as soon as that cumulative probability reaches `top_p`. **For example, a `top_p` of 0.3 means that only the tokens comprising the top 30% probability mass are considered.**\n", | |

| "\n", | |

| "* `top_p` shrinks or grows the \"pool\" of candidate tokens: values near 1 give a big pool, values near 0 a small one. Within that pool, each token is sampled according to its (renormalized) probability.\n", | |

| "\n", | |

| "The example below also renders the message with a `jinja2` template (optional, but a common pattern)." | |

| ], | |

| "metadata": { | |

| "id": "PMEu7ah-R_pP" | |

| } | |

| }, | |
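The nucleus cutoff described above can be sketched on a toy next-token distribution. `top_p_filter` and the probabilities are made up for illustration. The API applies this filtering internally, so you never call anything like it yourself.

```python
from typing import Dict


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the smallest set of most-likely tokens whose cumulative probability
    reaches top_p, then renormalize so the kept probabilities sum to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:  # stop once the nucleus covers top_p of the mass
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


toy_probs = {"dog": 0.5, "puppy": 0.25, "hound": 0.15, "cat": 0.1}
print(top_p_filter(toy_probs, 0.3))  # only "dog"
print(top_p_filter(toy_probs, 0.7))  # "dog" and "puppy"
print(top_p_filter(toy_probs, 1.0))  # all four tokens
```

The next token is then sampled from the filtered, renormalized distribution, so a small `top_p` leaves the model fewer (and more likely) choices.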

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "%%capture\n", | |

| "!pip install textstat" | |

| ], | |

| "metadata": { | |

| "id": "dG82BktkYQfu" | |

| }, | |

| "execution_count": 14, | |

| "outputs": [] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "import re\n", | |

| "import json\n", | |

| "from textstat import flesch_kincaid_grade\n", | |

| "from jinja2 import Template\n", | |

| "\n", | |

| "# Define the prompt using a Jinja template; `tojson` escapes quotes and newlines so the result is valid JSON\n", | |

| "template = Template(\"\"\"\n", | |

| "{\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": {{ prompt | tojson }}\n", | |

| "}\n", | |

| "\"\"\")\n", | |

| "prompt = \"Generate a unique and creative story idea involving time travel.\"\n", | |

| "\n", | |

| "# Function to count unique words\n", | |

| "def count_unique_words(text):\n", | |

| " words = re.findall(r'\\b\\w+\\b', text.lower())\n", | |

| " return len(set(words))\n", | |

| "\n", | |

| "# Generate the message using the template\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "for top_p in [0.1, 0.5, 0.95]:\n", | |

| " params = set_open_params(top_p=top_p)\n", | |

| " response = get_completion(params, messages)\n", | |

| " text = response.choices[0].message.content\n", | |

| " unique_word_count = count_unique_words(text)\n", | |

| " fk_score = flesch_kincaid_grade(text)\n", | |

| " print(f\"Response with top_p={top_p}:\\n\")\n", | |

| " print(f\"Unique word count: {unique_word_count}\")\n", | |

| " print(f\"Flesch-Kincaid Grade Level: {fk_score}\")\n", | |

| " IPython.display.display(IPython.display.Markdown(text))\n", | |

| " print(\"-------------------\\n\")\n" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 1000 | |

| }, | |

| "id": "x-S0B2IHR0Th", | |

| "outputId": "817a436e-fc58-4d9f-c948-8e53ed847a7f" | |

| }, | |

| "execution_count": 15, | |

| "outputs": [ | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "Response with top_p=0.1:\n", | |

| "\n", | |

| "Unique word count: 128\n", | |

| "Flesch-Kincaid Grade Level: 10.3\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "In the year 3025, time travel has become a common form of entertainment for the wealthy elite. The Time Travel Corporation offers exclusive trips to different eras in history, allowing clients to witness major events firsthand.\n\nOne day, a young woman named Eliza wins a contest to travel back to the year 1920 and attend a lavish party hosted by the infamous gangster Al Capone. Excited for the adventure, Eliza steps into the time machine and is transported back in time.\n\nHowever, when she arrives in 1920, Eliza quickly realizes that something has gone wrong. Instead of being a mere observer, she finds herself in the middle of a dangerous plot to assassinate Al Capone. Unsure of who to trust, Eliza must navigate the treacherous world of 1920s Chicago while trying to prevent a major historical event from being altered.\n\nAs Eliza races against the clock to stop the assassination, she discovers that the Time Travel Corporation has been manipulating events throughout history for their own gain. With the help of a charming bootlegger and a group of rebels fighting against the corporation, Eliza must find a way to set things right and protect the timeline before it's too late.\n\nIn a thrilling race against time, Eliza must confront" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with top_p=0.5:\n", | |

| "\n", | |

| "Unique word count: 121\n", | |

| "Flesch-Kincaid Grade Level: 8.4\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "In the year 3021, time travel has become a common practice for those who can afford it. The Time Travel Agency offers trips to various points in history, allowing people to witness major events firsthand. \n\nOne day, a young woman named Amelia decides to take a trip back to the year 2021, a time period known for its technological advancements and global crises. However, during her trip, something goes wrong with the time machine and she becomes stuck in the past. \n\nAmelia must navigate the unfamiliar world of 2021, trying to blend in while also trying to find a way back to her own time. Along the way, she meets a group of rebels who are fighting against the oppressive government that controls the time travel technology. \n\nAs Amelia gets to know the rebels and their cause, she begins to question her own beliefs and values. She must decide whether to help the rebels in their fight or try to find a way back to her own time, knowing that her actions could have a major impact on the future. \n\nAs Amelia struggles to find her place in this new world, she discovers that the key to unlocking the secrets of time travel may lie in the past. With the help of the rebels, she embarks on a dangerous journey through time," | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with top_p=0.95:\n", | |

| "\n", | |

| "Unique word count: 129\n", | |

| "Flesch-Kincaid Grade Level: 9.4\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "In the year 2050, a group of scientists develops a groundbreaking technology that allows for time travel. However, instead of using it for personal gain or historical exploration, they decide to use it to prevent disasters from happening in the future.\n\nThe team of scientists, led by Dr. Emily Williams, embarks on a mission to travel back in time to key moments in history where catastrophic events occurred. They work tirelessly to change the course of history and prevent these disasters from ever happening.\n\nHowever, they soon realize that changing the past has unforeseen consequences on the present and future. As they continue to alter key events, they start to notice changes in their own lives and the world around them.\n\nDr. Williams begins to question the ethics of their mission and wonders if they are playing with forces beyond their control. As they delve deeper into the complexities of time travel, they must confront their own moral dilemmas and decide if the sacrifices they are making are worth it in the end.\n\nAs they navigate through different timelines and face unexpected challenges, the team must band together and make difficult decisions that will ultimately shape the future of humanity. Will they be able to save the world from impending disasters, or will their actions create even more chaos and destruction? Only time will tell." | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n" | |

| ] | |

| } | |

| ] | |

| }, | |
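Two of the stories above stop mid-sentence ("Eliza must confront", "a dangerous journey through time,"). The most likely cause is the `max_tokens=256` default from `set_open_params` cutting them off. The API signals this with `finish_reason == "length"` instead of `"stop"`. A small, hypothetical helper can flag it:

```python
def check_truncation(response) -> bool:
    """Print a warning for, and return True if, any choice hit the max_tokens limit."""
    truncated = False
    for choice in response.choices:
        # "stop" = natural ending; "length" = generation was cut off by max_tokens.
        if choice.finish_reason == "length":
            print(f"Choice {choice.index} was truncated; consider raising max_tokens.")
            truncated = True
    return truncated
```

For example, calling `check_truncation(response)` inside the `top_p` loop above would print a warning for each truncated story. Passing a larger `max_tokens` to `set_open_params(...)` is the usual fix.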

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "> **If you are looking for exact and factual answers, keep this low. If you are looking for more diverse responses, increase it.** With `top_p`, only the tokens comprising the top `top_p` probability mass are considered, so a low value restricts the model to its most confident tokens, while a high value lets it consider less likely tokens as well, leading to more diverse outputs. **The general recommendation is to alter temperature or `top_p`, but not both.**" | |

| ], | |

| "metadata": { | |

| "id": "NBhg8_glafVu" | |

| } | |

| }, | |
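Here is a minimal sketch of nucleus (top-p) filtering over a toy next-token distribution, to make the Top P description concrete. The vocabulary and probabilities are made up for illustration. This is not how the OpenAI API implements sampling internally. It only shows which tokens survive the cutoff for a given `top_p`. The helper names `nucleus_candidates` and `sample_top_p` are hypothetical.

```python
import random

def nucleus_candidates(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches
    top_p, then renormalize the survivors so they sum to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}

def sample_top_p(probs: dict[str, float], top_p: float, rng=random) -> str:
    """Draw one token from the top-p candidate set, weighted by probability."""
    candidates = nucleus_candidates(probs, top_p)
    return rng.choices(list(candidates), weights=list(candidates.values()), k=1)[0]

# Hypothetical next-token probabilities after the text "Today is"
toy_probs = {"Monday": 0.45, "Tuesday": 0.30, "sunny": 0.15, "purple": 0.10}

for top_p in [0.5, 0.8, 0.95]:
    print(f"top_p={top_p}: {list(nucleus_candidates(toy_probs, top_p))}")
```

With these toy numbers, `top_p=0.5` keeps only "Monday" and "Tuesday". `top_p=0.95` keeps all four tokens, so the unlikely "purple" can occasionally be sampled.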

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "prompt = \"What day is it?\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "for top_p in [0.5, 0.8, 0.95]:\n", | |

| " params = set_open_params(top_p=top_p)\n", | |

| " response = get_completion(params, messages)\n", | |

| " print(f\"Response with top_p={top_p}:\\n\")\n", | |

| " IPython.display.display(IPython.display.Markdown(response.choices[0].message.content))\n", | |

| " print(\"-------------------\\n\")" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 319 | |

| }, | |

| "id": "zZ-en7l3TRLN", | |

| "outputId": "b6917d56-cc6e-4e19-dd00-7c4a5ad8e436" | |

| }, | |

| "execution_count": 16, | |

| "outputs": [ | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "Response with top_p=0.5:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "I am an AI and do not have the ability to know the current day. Please check your calendar or device for the current date." | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with top_p=0.8:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Today is Tuesday." | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with top_p=0.95:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "I am an AI and do not have the capability to know the current day. Please check your calendar or device for the most up-to-date information." | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n" | |

| ] | |

| } | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "### Max Tokens\n", | |

| "\n", | |

| "Defines the maximum length of the generated response, measured in tokens (words or pieces of words). It helps control the verbosity of the response. If the limit is reached, the output is cut off, possibly mid-sentence.\n", | |

| "\n", | |

| "* **Prompt**: \"Explain how photosynthesis works in plants.\"\n", | |

| "* **Task**: Run the API three times with `max_tokens` set to 50, 100, and 200, respectively." | |

| ], | |

| "metadata": { | |

| "id": "0p8tzYliJCtK" | |

| } | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "# Experiment with different max_tokens values\n", | |

| "prompt = \"Explain how photosynthesis works in plants.\"\n", | |

| "\n", | |

| "messages = [\n", | |

| " {\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": prompt\n", | |

| " }\n", | |

| "]\n", | |

| "\n", | |

| "\n", | |

| "for max_tokens in [50, 100, 200]:\n", | |

| " params = set_open_params(max_tokens=max_tokens)\n", | |

| " response = get_completion(params, messages)\n", | |

| " print(f\"Response with max_tokens={max_tokens}:\\n\")\n", | |

| " IPython.display.display(IPython.display.Markdown(response.choices[0].message.content))\n", | |

| " print(\"-------------------\\n\")" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 628 | |

| }, | |

| "id": "16W-v3mrI-eT", | |

| "outputId": "c9598af3-4b71-4819-fe37-755d3da3f87f" | |

| }, | |

| "execution_count": 17, | |

| "outputs": [ | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "Response with max_tokens=50:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Photosynthesis is the process by which plants, algae, and some bacteria convert sunlight, carbon dioxide, and water into glucose (sugar) and oxygen gas. This process occurs in the chloroplasts of plant cells.\n\nDuring photosynthesis, light energy" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with max_tokens=100:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Photosynthesis is the process by which plants, algae, and some bacteria convert light energy into chemical energy stored in glucose. This process occurs in the chloroplasts of plant cells.\n\nDuring photosynthesis, plants take in carbon dioxide from the air and water from the soil. They absorb sunlight through their chlorophyll pigments, which are located in chloroplasts. The sunlight provides the energy needed to convert carbon dioxide and water into glucose and oxygen.\n\nThe overall chemical equation for photosynthesis is:\n\n6" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n", | |

| "Response with max_tokens=200:\n", | |

| "\n" | |

| ] | |

| }, | |

| { | |

| "output_type": "display_data", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Photosynthesis is the process by which plants, algae, and some bacteria convert sunlight, carbon dioxide, and water into glucose (sugar) and oxygen. This process occurs in the chloroplasts of plant cells and is essential for the survival of plants and other organisms that rely on them for food.\n\nThe process of photosynthesis can be broken down into two main stages: the light-dependent reactions and the light-independent reactions (also known as the Calvin cycle).\n\nDuring the light-dependent reactions, chlorophyll (a green pigment in chloroplasts) absorbs sunlight and uses it to convert water into oxygen and high-energy molecules called ATP and NADPH. These molecules are then used in the second stage of photosynthesis.\n\nIn the light-independent reactions (Calvin cycle), ATP and NADPH are used to convert carbon dioxide into glucose. This process involves a series of chemical reactions that ultimately produce glucose, which can be used as an energy source by the plant or stored for later use.\n\nOverall, photos" | |

| }, | |

| "metadata": {} | |

| }, | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "-------------------\n", | |

| "\n" | |

| ] | |

| } | |

| ] | |

| }, | |
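All three responses above stop mid-sentence because generation hit the `max_tokens` limit. In the Chat Completions API, each choice carries a `finish_reason`. That value is `"length"` when the token limit cut the output off and `"stop"` when the model finished naturally. The sketch below flags truncated responses. It uses `SimpleNamespace` objects as stand-ins for real API responses, an assumption made so the example runs offline. `describe_completion` and `fake_response` are hypothetical helpers.

```python
from types import SimpleNamespace

def describe_completion(response) -> str:
    """Return the first choice's text, flagging it if max_tokens cut it off."""
    choice = response.choices[0]
    text = choice.message.content
    if choice.finish_reason == "length":
        return text + " [truncated: hit max_tokens]"
    return text

def fake_response(content: str, finish_reason: str):
    """Mimic the attribute layout response.choices[0].message.content / .finish_reason."""
    message = SimpleNamespace(content=content)
    choice = SimpleNamespace(message=message, finish_reason=finish_reason)
    return SimpleNamespace(choices=[choice])

cut_off = fake_response("During photosynthesis, light energy", "length")
complete = fake_response("Photosynthesis converts light into chemical energy.", "stop")
print(describe_completion(cut_off))
print(describe_completion(complete))
```

In the notebook, the same check would apply to a real reply, e.g. `describe_completion(get_completion(params, messages))`.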

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "GiT0Cw4ZR8RT" | |

| }, | |

| "source": [ | |

| "### 1.1 Text Summarization" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 26, | |

| "metadata": { | |

| "id": "XP7CSLfTR8RT", | |

| "outputId": "33bdc81f-6364-410c-b436-40f47998f091", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 64 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Antibiotics are medications that kill or inhibit the growth of bacteria, helping the body's immune system to combat infections, but are not effective against viral infections and should be used judiciously to avoid antibiotic resistance." | |

| }, | |

| "metadata": {}, | |

| "execution_count": 26 | |

| } | |

| ], | |

| "source": [ | |

| "params = set_open_params(temperature=0.7)\n", | |

| "\n", | |

| "prompt = \"\"\"Explain the below in one sentence: Antibiotics are a type of medication \\\n", | |

| " used to treat bacterial infections. They work by either killing the bacteria \\\n", | |

| " or preventing them from reproducing, allowing the body's immune system to \\\n", | |

| " fight off the infection. Antibiotics are usually taken orally in the form \\\n", | |

| " of pills, capsules, or liquid solutions, or sometimes administered intravenously. \\\n", | |

| " They are not effective against viral infections, and using them inappropriately \\\n", | |

| " can lead to antibiotic resistance.\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |
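The cells in this section build `messages` by rendering `template` and parsing the result with `json.loads`. The `template` object is defined earlier in the notebook and is not shown here. Suppose it renders something like `{"role": "user", "content": "{{ prompt }}"}`, which is an assumption. In that case, a prompt containing a double quote or a raw newline would produce invalid JSON. The cells in Section 2 avoid this by building the message dictionary directly. The sketch below reproduces the failure, using the standard library's `string.Template` as a stand-in for the notebook's template engine, and then shows the direct-construction alternative. `render_naive` and `build_message` are hypothetical names.

```python
import json
from string import Template

# Stand-in for the notebook's template (assumption: the real one may differ)
naive_template = Template('{"role": "user", "content": "$prompt"}')

def render_naive(prompt: str) -> dict:
    """Substitute the prompt verbatim, then parse; breaks on quotes and newlines."""
    return json.loads(naive_template.substitute(prompt=prompt))

def build_message(prompt: str) -> dict:
    """Build the message dict directly, so no manual escaping is needed."""
    return {"role": "user", "content": prompt}

prompt = 'Classify the text.\nText: She said "wow"'

try:
    render_naive(prompt)
except json.JSONDecodeError as err:
    print("naive rendering failed:", err.msg)

messages = [build_message(prompt)]
print(json.dumps(messages))  # json.dumps escapes quotes and newlines correctly
```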

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "KzOToTAxR8RT" | |

| }, | |

| "source": [ | |

| "> **Exercise**: Instruct the model to explain the paragraph in one sentence as if to a five-year-old (\"Explain like I'm 5\"). Do you see any differences?" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "pExMtUo-R8RT" | |

| }, | |

| "source": [ | |

| "### 1.2 Question Answering" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 31, | |

| "metadata": { | |

| "id": "X8lYXWErR8RT", | |

| "outputId": "8ab5d724-316c-4640-9b45-080d9d2035e4", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 46 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Mice" | |

| }, | |

| "metadata": {}, | |

| "execution_count": 31 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"Answer the question based on the context below. \\\n", | |

| " Keep the answer short and concise. \\\n", | |

| " Respond 'Unsure about answer' if not sure about the answer. \\\n", | |

| " Context: Teplizumab traces its roots to a New Jersey drug company called Ortho Pharmaceutical. \\\n", | |

| " There, scientists generated an early version of the antibody, dubbed OKT3. \\\n", | |

| " Originally sourced from mice, the molecule was able to bind to the surface of T cells \\\n", | |

| " and limit their cell-killing potential. In 1986, it was approved to help prevent organ \\\n", | |

| " rejection after kidney transplants, making it the first therapeutic antibody allowed \\\n", | |

| " for human use. \\\n", | |

| " Question: What was OKT3 originally sourced from? \\\n", | |

| " Answer:\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)\n" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "BLg9Z2i4R8RT" | |

| }, | |

| "source": [ | |

| "Context obtained from here: https://www.nature.com/articles/d41586-023-00400-x" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "jrtEMAPsR8RU" | |

| }, | |

| "source": [ | |

| "> **Exercise**: Edit the prompt so that the model responds that it isn't sure about the answer." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "Dso3RN7sR8RU" | |

| }, | |

| "source": [ | |

| "### 1.3 Text Classification" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 35, | |

| "metadata": { | |

| "id": "wjw0n9rFR8RU", | |

| "outputId": "a6059594-d589-4c07-c9cf-a61ca8de2b5d", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 46 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Positive" | |

| }, | |

| "metadata": {}, | |

| "execution_count": 35 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"Classify the text into neutral, negative or positive. \\\n", | |

| " \\\n", | |

| " Text: I think the food was amazing! \\\n", | |

| " \\\n", | |

| " Sentiment:\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |
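The prompt asks for one of three labels, but the model answered `Positive` with a capital letter. Replies may also sometimes include punctuation or extra words. When the label feeds downstream code, it helps to map the raw reply onto the allowed set. `normalize_label` below is a hypothetical helper and a minimal sketch: it lowercases the reply, strips surrounding punctuation and an optional `Sentiment:` prefix, and returns `None` for anything outside the label set.

```python
import string

ALLOWED_LABELS = {"neutral", "negative", "positive"}

def normalize_label(reply: str, allowed=ALLOWED_LABELS):
    """Map a raw model reply to one allowed label, or None if it doesn't match."""
    cleaned = reply.strip().lower().strip(string.punctuation + string.whitespace)
    if cleaned.startswith("sentiment:"):
        cleaned = cleaned[len("sentiment:"):].strip()
    return cleaned if cleaned in allowed else None

for reply in ["Positive", " Negative. ", "Sentiment: neutral", "It is mostly positive"]:
    print(repr(reply), "->", normalize_label(reply))
```

Returning `None` rather than guessing makes malformed replies easy to count and re-prompt.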

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "2Ti6BNfnR8RU" | |

| }, | |

| "source": [ | |

| "> **Exercise**: Modify the prompt to instruct the model to provide an explanation for the selected answer." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "apKYpWJMR8RU" | |

| }, | |

| "source": [ | |

| "### 1.4 Role Playing" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 36, | |

| "metadata": { | |

| "id": "5c-L0twDR8RU", | |

| "outputId": "73a24c81-b2ab-4737-99f9-3a93d4ee2bde", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 81 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Black holes are created when a massive star collapses under its own gravity after it runs out of fuel and can no longer support itself against gravity. This collapse causes the star's core to implode, creating a singularity, a point of infinite density. The gravitational pull of this singularity is so strong that not even light can escape from it, creating what is known as a black hole." | |

| }, | |

| "metadata": {}, | |

| "execution_count": 36 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"The following is a conversation with an AI research assistant. \\\n", | |

| "The assistant's tone is technical and scientific. \\\n", | |

| " \\\n", | |

| "Human: Hello, who are you? \\\n", | |

| "AI: Greetings! I am an AI research assistant. How can I help you today? \\\n", | |

| "Human: Can you tell me about the creation of black holes? \\\n", | |

| "AI:\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "7GTLlgAaR8RU" | |

| }, | |

| "source": [ | |

| "> **Exercise**: Modify the prompt to instruct the model to keep AI responses concise and short." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "aH2Xt1dTR8RU" | |

| }, | |

| "source": [ | |

| "### 1.5 Code Generation" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 37, | |

| "metadata": { | |

| "id": "cQpcLZ3cR8RU", | |

| "outputId": "da15ec92-21f0-438e-8e01-21d18fc8887d", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 522 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Here is a basic example of a Python API for a virtual plant care assistant:\n\n```python\nfrom flask import Flask, request, jsonify\n\napp = Flask(__name__)\n\nplants = []\n\n@app.route('/plants', methods=['GET'])\ndef get_plants():\n return jsonify({'plants': plants})\n\n@app.route('/plants', methods=['POST'])\ndef add_plant():\n data = request.get_json()\n name = data.get('name')\n type = data.get('type')\n watering_schedule = data.get('watering_schedule')\n \n if not name or not type or not watering_schedule:\n return jsonify({'error': 'Missing data'}), 400\n \n plant = {\n 'name': name,\n 'type': type,\n 'watering_schedule': watering_schedule\n }\n \n plants.append(plant)\n \n return jsonify({'message': 'Plant added successfully'})\n\n@app.route('/water_plant', methods=['POST'])\ndef water_plant():\n data = request.get_json()\n name = data.get('name')\n \n if not name:\n return jsonify({'error': 'Missing data'}), 400\n \n for plant in plants:\n if plant['name'] == name:\n return jsonify({'message': f'Watered" | |

| }, | |

| "metadata": {}, | |

| "execution_count": 37 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"Develop a small Python API that functions as a virtual plant care assistant.\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "kfjREa5MR8RU" | |

| }, | |

| "source": [ | |

| "### 1.6 Reasoning" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 38, | |

| "metadata": { | |

| "id": "dZAwJhQ9R8RU", | |

| "outputId": "4d84970d-4672-4702-d871-2bc269754d1e", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 70 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "Odd numbers: 15, 5, 13, 7, 1\nAdd them: 15 + 5 + 13 + 7 + 1 = 41\n\nSince 41 is an odd number, the sum of the odd numbers in the group is also an odd number." | |

| }, | |

| "metadata": {}, | |

| "execution_count": 38 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1. \\\n", | |

| " \\\n", | |

| "Solve by breaking the problem into steps. \\\n", | |

| " \\\n", | |

| "First, identify the odd numbers, add them, and indicate whether the result is odd or even.\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "EYVo5K_gR8RU" | |

| }, | |

| "source": [ | |

| "> **Exercise**: Improve the prompt to have a better structure and output format." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "### 1.7 Brainstorming" | |

| ], | |

| "metadata": { | |

| "id": "nabOY4InE3Rt" | |

| } | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "prompt = \"\"\"Generate 10 different names for grunge rock bands based on dog names. Keep each band name to only two words.\"\"\"\n", | |

| "\n", | |

| "# Generate the message using the template and convert it to a dictionary\n", | |

| "messages_str = template.render(prompt=prompt)\n", | |

| "messages = [json.loads(messages_str)]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 219 | |

| }, | |

| "id": "KWNpTyvIE1q5", | |

| "outputId": "9b991396-d518-4806-9d7a-f45fbe9a31cf" | |

| }, | |

| "execution_count": 39, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "1. Pug Riot\n2. Husky Haze\n3. Bulldog Rebellion\n4. Rottweiler Rage\n5. Boxer Chaos\n6. Doberman Distortion\n7. Beagle Fury\n8. Pitbull Pandemonium\n9. Dalmatian Anarchy\n10. Great Dane Grit" | |

| }, | |

| "metadata": {}, | |

| "execution_count": 39 | |

| } | |

| ] | |

| }, | |
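The prompt asked for two-word names, but one reply ("Great Dane Grit") has three words. Models don't always honor length or format constraints. A quick programmatic check catches this. The sketch below parses the numbered list from the output above (copied verbatim) and reports names that break the two-word rule. `parse_numbered_list` and `violations` are hypothetical helpers.

```python
import re

reply = """1. Pug Riot
2. Husky Haze
3. Bulldog Rebellion
4. Rottweiler Rage
5. Boxer Chaos
6. Doberman Distortion
7. Beagle Fury
8. Pitbull Pandemonium
9. Dalmatian Anarchy
10. Great Dane Grit"""

def parse_numbered_list(text: str) -> list[str]:
    """Extract the items from lines shaped like '3. Item text'."""
    pattern = r"^[ \t]*\d+\.[ \t]*(.+)$"
    return [m.group(1).strip() for m in re.finditer(pattern, text, re.MULTILINE)]

def violations(names: list[str], word_count: int = 2) -> list[str]:
    """Return the names whose word count differs from the requested one."""
    return [name for name in names if len(name.split()) != word_count]

names = parse_numbered_list(reply)
print(len(names), violations(names))
```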

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "### 1.8 JSON generation\n", | |

| "\n", | |

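| "Another feature of the OpenAI API is [JSON mode](https://platform.openai.com/docs/guides/text-generation/json-mode), which constrains the model to return a valid JSON object when `response_format={ \"type\": \"json_object\" }` is set.\n", | |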

| "\n" | |

| ], | |

| "metadata": { | |

| "id": "GOLmKaFaGoO8" | |

| } | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "response = openai.chat.completions.create(\n", | |

| " model=\"gpt-3.5-turbo\",\n", | |

| " response_format={ \"type\": \"json_object\" },\n", | |

| " messages=[\n", | |

| " {\"role\": \"system\", \"content\": \"You are a helpful assistant designed to output JSON.\"},\n", | |

| " {\"role\": \"user\", \"content\": \"Generate 5 doctor's notes as a 'text' field that prescribes drugs, dosages, form, duration, and frequency. Then in JSON have an 'entities' nested dictionary with each of the entities\"}\n", | |

| " ]\n", | |

| ")\n", | |

| "print(response.choices[0].message.content)" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/" | |

| }, | |

| "id": "DQe3ytShIdaH", | |

| "outputId": "5f32d025-fb95-4118-9b40-075bafffcec4" | |

| }, | |

| "execution_count": 40, | |

| "outputs": [ | |

| { | |

| "output_type": "stream", | |

| "name": "stdout", | |

| "text": [ | |

| "{\n", | |

| " \"doctor_notes\": [\n", | |

| " {\n", | |

| " \"text\": \"Prescription: Advil 200mg tablets, take 1 tablet by mouth every 4-6 hours as needed for pain. Duration: 7 days.\",\n", | |

| " \"entities\": {\n", | |

| " \"drug\": \"Advil\",\n", | |

| " \"dosage\": \"200mg\",\n", | |

| " \"form\": \"tablets\",\n", | |

| " \"route\": \"by mouth\",\n", | |

| " \"frequency\": \"every 4-6 hours\",\n", | |

| " \"duration\": \"7 days\"\n", | |

| " }\n", | |

| " },\n", | |

| " {\n", | |

| " \"text\": \"Prescription: Amoxicillin 500mg capsules, take 1 capsule by mouth twice daily with food. Duration: 10 days.\",\n", | |

| " \"entities\": {\n", | |

| " \"drug\": \"Amoxicillin\",\n", | |

| " \"dosage\": \"500mg\",\n", | |

| " \"form\": \"capsules\",\n", | |

| " \"route\": \"by mouth\",\n", | |

| " \"frequency\": \"twice daily\",\n", | |

| " \"duration\": \"10 days\"\n", | |

| " }\n", | |

| " },\n", | |

| " {\n", | |

| " \"text\": \"Prescription: Zyrtec 10mg tablets, take 1 tablet by mouth daily at bedtime. Duration: 14 days.\",\n", | |

| " \"entities\": {\n", | |

| " \"drug\": \"Zyrtec\",\n", | |

| " \"dosage\": \"10mg\",\n", | |

| " \"form\": \"tablets\",\n", | |

| " \"route\": \"by mouth\",\n", | |

| " \"frequency\": \"daily at bedtime\",\n", | |

| " \"duration\": \"14 days\"\n", | |

| " }\n", | |

| " },\n", | |

| " {\n", | |

| " \"text\": \"Prescription: Prozac 20mg capsules, take 1 capsule by mouth daily in the morning. Duration: 30 days.\",\n", | |

| " \"entities\": {\n", | |

| " \"drug\": \"Prozac\",\n", | |

| " \"dosage\": \"20mg\",\n", | |

| " \"form\": \"capsules\",\n", | |

| " \"route\": \"by mouth\",\n", | |

| " \"frequency\": \"daily in the morning\",\n", | |

| " \"duration\": \"30 days\"\n", | |

| " }\n", | |

| " },\n", | |

| " {\n", | |

| " \"text\": \"Prescription: Lipitor 40mg tablets, take 1 tablet by mouth daily with water. Duration: 90 days.\",\n", | |

| " \"entities\": {\n", | |

| " \"drug\": \"Lipitor\",\n", | |

| " \"dosage\": \"40mg\",\n", | |

| " \"form\": \"tablets\",\n", | |

| " \"route\": \"by mouth\",\n", | |

| " \"frequency\": \"daily with water\",\n", | |

| " \"duration\": \"90 days\"\n", | |

| " }\n", | |

| " }\n", | |

| " ]\n", | |

| "}\n" | |

| ] | |

| } | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "> ### 🗒 Info\n", | |

| "> - When using JSON mode, always instruct the model to produce JSON via some message in the conversation, for example via your system message. If you don't include an explicit instruction to generate JSON, the model may generate an unending stream of whitespace and the request may run continually until it reaches the token limit. To help ensure you don't forget, the API will throw an error if the string \"JSON\" does not appear somewhere in the context.\n", | |

| "\n", | |

| "> - The JSON in the message the model returns may be partial (i.e., cut off) if `finish_reason` is `length`, which indicates that the generation exceeded `max_tokens` or the conversation exceeded the token limit. To guard against this, check `finish_reason` before parsing the response.\n", | |

| "\n", | |

| "> - JSON mode does not guarantee that the output matches any specific schema, only that it is valid JSON that parses without errors." | |

| ], | |

| "metadata": { | |

| "id": "ytuw_jmKMxnu" | |

| } | |

| }, | |
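Following the advice above, the sketch below checks `finish_reason` before parsing. It then verifies the structure itself, because JSON mode guarantees valid JSON but not a particular schema. The expected keys (`doctor_notes`, `text`, `entities`, and the entity fields) are taken from the sample output above. They are an assumption: the prompt did not pin down a schema, and another run may use different keys. `parse_json_reply` is a hypothetical helper.

```python
import json

REQUIRED_ENTITY_KEYS = {"drug", "dosage", "form", "frequency", "duration"}

def parse_json_reply(content: str, finish_reason: str) -> dict:
    """Parse a JSON-mode reply, rejecting truncated output and unexpected shapes."""
    if finish_reason == "length":
        raise ValueError("output was cut off by the token limit; JSON may be partial")
    data = json.loads(content)  # JSON mode should make this succeed
    notes = data.get("doctor_notes")
    if not isinstance(notes, list):
        raise ValueError("expected a 'doctor_notes' list")
    for i, note in enumerate(notes):
        if not isinstance(note, dict) or "text" not in note:
            raise ValueError(f"note {i} has no 'text' field")
        missing = REQUIRED_ENTITY_KEYS - set(note.get("entities", {}))
        if missing:
            raise ValueError(f"note {i} is missing entities: {sorted(missing)}")
    return data

# One note copied from the output above, wrapped in the same top-level shape
sample = json.dumps({"doctor_notes": [{
    "text": "Prescription: Zyrtec 10mg tablets, take 1 tablet by mouth daily at bedtime. Duration: 14 days.",
    "entities": {"drug": "Zyrtec", "dosage": "10mg", "form": "tablets", "route": "by mouth",
                 "frequency": "daily at bedtime", "duration": "14 days"},
}]})

parsed = parse_json_reply(sample, "stop")
print(parsed["doctor_notes"][0]["entities"]["drug"])
```

With a live response, the call would be `parse_json_reply(response.choices[0].message.content, response.choices[0].finish_reason)`.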

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "zoC_k0P2R8RU" | |

| }, | |

| "source": [ | |

| "## 2. Advanced Prompting Techniques\n", | |

| "\n", | |

| "Objectives:\n", | |

| "\n", | |

| "- Cover more advanced prompting techniques: few-shot, chain-of-thought, and more." | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "qtLFfYkMR8RU" | |

| }, | |

| "source": [ | |

| "### 2.2 Few-shot prompts\n", | |

| "\n", | |

| "This example uses Prodigy's [default v2 prompt](https://prodi.gy/docs/large-language-models#more-config) for named entity recognition." | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "prompt = \"\"\"\n", | |

| "You are an expert Named Entity Recognition (NER) system. Your task is to accept Text as input and extract named entities for the set of predefined entity labels.\n", | |

| "\n", | |

| "From the Text input provided, extract named entities for each label in the following format:\n", | |

| "\n", | |

| "DISH: <comma delimited list of strings>\n", | |

| "INGREDIENT: <comma delimited list of strings>\n", | |

| "EQUIPMENT: <comma delimited list of strings>\n", | |

| "\n", | |

| "Below are definitions of each label to help aid you in what kinds of named entities to extract for each label.\n", | |

| "Assume these definitions are written by an expert and follow them closely.\n", | |

| "\n", | |

| "DISH: Extract the name of a known dish.\n", | |

| "INGREDIENT: Extract the name of a cooking ingredient, including herbs and spices.\n", | |

| "EQUIPMENT: Extract any mention of cooking equipment. e.g. oven, cooking pot, grill\n", | |

| "\n", | |

| "Below are some examples (only use these as a guide):\n", | |

| "\n", | |

| "Text:\n", | |

| "'''\n", | |

| "You can't get a great chocolate flavor with carob.\n", | |

| "'''\n", | |

| "\n", | |

| "INGREDIENT: carob\n", | |

| "\n", | |

| "Text:\n", | |

| "'''\n", | |

| "You can probably sand-blast it if it's an anodized aluminum pan.\n", | |

| "'''\n", | |

| "\n", | |

| "INGREDIENT:\n", | |

| "EQUIPMENT: anodized aluminum pan\n", | |

| "\n", | |

| "\n", | |

| "Here is the text that needs labeling:\n", | |

| "\n", | |

| "Text:\n", | |

| "'''\n", | |

| "In Silicon Valley, a Voice of Caution Guides a High-Flying Uber\n", | |

| "'''\n", | |

| "\"\"\"\n", | |

| "\n", | |

| "messages = [\n", | |

| " {\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": prompt\n", | |

| " }\n", | |

| "]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 46 | |

| }, | |

| "id": "OttnGJApfbKS", | |

| "outputId": "982bf077-3537-491e-8333-aef6cf511e7b" | |

| }, | |

| "execution_count": 41, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "DISH: \nINGREDIENT: \nEQUIPMENT: Silicon Valley, Uber" | |

| }, | |

| "metadata": {}, | |

| "execution_count": 41 | |

| } | |

| ] | |

| }, | |
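The prompt fixes an output format of `LABEL: <comma delimited list>` lines, so the reply can be turned into a dictionary for evaluation. The sketch below is a hypothetical parser, not Prodigy's own. It keeps only the three labels defined in the prompt and drops empty entries. The reply above is itself wrong: "Silicon Valley" and "Uber" are not cooking equipment, and the headline contains no dishes, ingredients, or equipment. A parser only enforces the format, not correctness.

```python
LABELS = ("DISH", "INGREDIENT", "EQUIPMENT")

def parse_ner_reply(reply: str, labels=LABELS) -> dict[str, list[str]]:
    """Turn 'LABEL: a, b' lines into {label: [a, b]}; unknown labels are ignored."""
    entities = {label: [] for label in labels}
    for line in reply.splitlines():
        label, sep, values = line.partition(":")
        label = label.strip().upper()
        if not sep or label not in entities:
            continue
        entities[label] = [v.strip() for v in values.split(",") if v.strip()]
    return entities

# The model's reply above, and the second few-shot example's expected answer
print(parse_ner_reply("DISH: \nINGREDIENT: \nEQUIPMENT: Silicon Valley, Uber"))
print(parse_ner_reply("INGREDIENT:\nEQUIPMENT: anodized aluminum pan"))
```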

| { | |

| "cell_type": "code", | |

| "execution_count": 42, | |

| "metadata": { | |

| "id": "t83KToQBR8RU", | |

| "outputId": "d8cd12c4-69bf-4d1e-e72b-226b8d03629a", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 46 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "The odd numbers in the group are 15, 5, 13, 7, and 1. Their sum is 15 + 5 + 13 + 7 + 1 = 41, which is an odd number." | |

| }, | |

| "metadata": {}, | |

| "execution_count": 42 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.\n", | |

| "A:\"\"\"\n", | |

| "\n", | |

| "messages = [\n", | |

| " {\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": prompt\n", | |

| " }\n", | |

| "]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 43, | |

| "metadata": { | |

| "id": "W4PTuArsR8RU", | |

| "outputId": "82efe1ca-9a66-4f96-9162-00948d7e9e73", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 46 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "The answer is True." | |

| }, | |

| "metadata": {}, | |

| "execution_count": 43 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.\n", | |

| "A: The answer is False.\n", | |

| "\n", | |

| "The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.\n", | |

| "A: The answer is True.\n", | |

| "\n", | |

| "The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.\n", | |

| "A: The answer is True.\n", | |

| "\n", | |

| "The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.\n", | |

| "A: The answer is False.\n", | |

| "\n", | |

| "The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.\n", | |

| "A:\"\"\"\n", | |

| "\n", | |

| "messages = [\n", | |

| " {\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": prompt\n", | |

| " }\n", | |

| "]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |
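It is worth checking the few-shot demonstrations and the target answer with plain arithmetic. The sketch below computes, for each group in the prompt, whether its odd numbers sum to an even number. All four demonstrations are labeled correctly. In the final group, however, the odd numbers are 15, 5, 13, 7, and 1, and they sum to 41, which is odd. The correct answer is therefore False, so the model's "The answer is True." is wrong: the few-shot examples alone did not fix this reasoning task. `odd_sum_is_even` is a hypothetical helper.

```python
def odd_sum_is_even(numbers: list[int]) -> bool:
    """True if the odd numbers in the group add up to an even number."""
    odd_total = sum(n for n in numbers if n % 2 == 1)
    return odd_total % 2 == 0

# (group, label) pairs copied from the few-shot prompt above
demonstrations = [
    ([4, 8, 9, 15, 12, 2, 1], False),
    ([17, 10, 19, 4, 8, 12, 24], True),
    ([16, 11, 14, 4, 8, 13, 24], True),
    ([17, 9, 10, 12, 13, 4, 2], False),
]
for numbers, label in demonstrations:
    assert odd_sum_is_even(numbers) == label  # each demonstration is labeled correctly

query = [15, 32, 5, 13, 82, 7, 1]
print(sum(n for n in query if n % 2 == 1), odd_sum_is_even(query))  # 41 False
```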

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "aAzMuH49R8RU" | |

| }, | |

| "source": [ | |

| "### 2.3 Chain-of-Thought (CoT) Prompting" | |

| ] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "execution_count": 44, | |

| "metadata": { | |

| "id": "g3G4aRcdR8RU", | |

| "outputId": "79c8aa53-ce4c-4e6d-f3bf-c44c05db6187", | |

| "colab": { | |

| "base_uri": "https://localhost:8080/", | |

| "height": 87 | |

| } | |

| }, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "<IPython.core.display.Markdown object>" | |

| ], | |

| "text/markdown": "\n\nInitially, you bought 10 apples. \nAfter giving 2 to the neighbor, you had 10 - 2 = 8 apples remaining. \nAfter giving 2 to the repairman, you had 8 - 2 = 6 apples remaining. \nThen you bought 5 more apples, so you had 6 + 5 = 11 apples. \nAfter eating 1 apple, you remained with 11 - 1 = 10 apples.\n\nSo, you remained with 10 apples in total." | |

| }, | |

| "metadata": {}, | |

| "execution_count": 44 | |

| } | |

| ], | |

| "source": [ | |

| "prompt = \"\"\"I went to the market and bought 10 apples. I gave 2 apples to the neighbor and 2 to the repairman. I then went and bought 5 more apples and ate 1. How many apples did I remain with?\n", | |

| "\n", | |

| "Let's think step by step.\"\"\"\n", | |

| "\n", | |

| "messages = [\n", | |

| " {\n", | |

| " \"role\": \"user\",\n", | |

| " \"content\": prompt\n", | |

| " }\n", | |

| "]\n", | |

| "\n", | |

| "response = get_completion(params, messages)\n", | |

| "IPython.display.Markdown(response.choices[0].message.content)" | |

| ] | |

| }, | |
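The prompt above is zero-shot chain-of-thought: the question is followed by the trigger phrase "Let's think step by step." and no worked examples. A minimal sketch of that pattern follows, along with a replay of the arithmetic that confirms the recorded answer of 10. The helper name `zero_shot_cot` is hypothetical.

```python
def zero_shot_cot(question):
    """Hypothetical helper: append the zero-shot CoT trigger phrase to a question."""
    return f"{question}\n\nLet's think step by step."

# Replay the word problem one step at a time
apples = 10   # bought at the market
apples -= 2   # gave 2 to the neighbor
apples -= 2   # gave 2 to the repairman
apples += 5   # bought 5 more
apples -= 1   # ate 1
print(apples)
```

`zero_shot_cot("I went to the market and bought 10 apples. ...")` produces the same prompt shape used in the cell above.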

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "I4FVm7NsR8RY" | |

| }, | |

| "source": [ | |

| "### 2.4 Self-Consistency\n", | |

| "As an exercise, try the self-consistency examples from the DAIR.AI [guide](https://github.com/dair-ai/Prompt-Engineering-Guide/blob/main/guides/prompts-advanced-usage.md#self-consistency) here.\n", | |

| "\n", | |

| "### 2.5 Generated Knowledge Prompting\n", | |

| "\n", | |

| "As an exercise, try the generated knowledge examples from the DAIR.AI [guide](https://github.com/dair-ai/Prompt-Engineering-Guide/blob/main/guides/prompts-advanced-usage.md#generated-knowledge-prompting) here." | |

| ] | |

| }, | |
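Self-consistency samples several chain-of-thought completions at a nonzero temperature, takes the final answer from each one, and returns the majority answer. Below is a minimal sketch of that voting step, with some assumptions:

* `sample_fn` is a hypothetical callable that maps a prompt to one completion string. In this notebook it could wrap `get_completion(params, messages)` and return `response.choices[0].message.content`, using a temperature above 0 so the samples differ. That wiring is not shown.
* Treating the last integer in a completion as its answer is a simple heuristic chosen for illustration.

```python
import re
from collections import Counter

def extract_final_number(text):
    """Heuristic: treat the last integer in a completion as its final answer."""
    numbers = re.findall(r"\d+", text)
    return int(numbers[-1]) if numbers else None

def self_consistent_answer(sample_fn, prompt, n=5):
    """Sample n reasoning paths and return (majority answer, vote count)."""
    answers = [extract_final_number(sample_fn(prompt)) for _ in range(n)]
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None, 0
    return votes.most_common(1)[0]

# Stand-in sampler that returns canned completions, so the sketch runs offline
canned = iter([
    "10 - 2 - 2 = 6, then 6 + 5 = 11, then 11 - 1 = 10. The answer is 10.",
    "I gave away 4 apples, so 10 - 4 + 5 - 1 = 10.",
    "10 - 2 = 8, 8 + 5 = 13, 13 - 1 = 12. The answer is 12.",
])

def fake_sampler(prompt):
    return next(canned)

answer, count = self_consistent_answer(fake_sampler, "How many apples did I remain with?", n=3)
print(answer, count)
```

Two of the three sampled paths end in 10, so the vote returns `10 2` and overrides the single faulty path.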
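Generated knowledge prompting runs in two steps. First, the model writes background facts relevant to the question. Second, those facts go into another prompt that asks for the answer. Below is a minimal sketch, with some assumptions:

* `complete_fn` maps a prompt string to a completion string, for example a wrapper around `get_completion`.
* `knowledge_prompt`, `answer_prompt`, `generated_knowledge_answer`, and the prompt wording are illustrative, not taken from the guide.
* A stand-in `complete_fn` keeps the sketch runnable offline.

```python
def knowledge_prompt(question):
    """Step 1: ask the model for background facts relevant to the question."""
    return (
        "Generate some factual knowledge that is relevant to the question below.\n\n"
        f"Question: {question}\nKnowledge:"
    )

def answer_prompt(question, knowledge):
    """Step 2: ask the model to answer, using the generated knowledge as context."""
    return (
        f"Knowledge: {knowledge}\n\n"
        "Using the knowledge above, answer the question.\n\n"
        f"Question: {question}\nAnswer:"
    )

def generated_knowledge_answer(complete_fn, question):
    """Run both steps with complete_fn (prompt string -> completion string)."""
    knowledge = complete_fn(knowledge_prompt(question))
    return complete_fn(answer_prompt(question, knowledge))

# Stand-in model that records each prompt it receives
calls = []

def fake_complete(prompt):
    calls.append(prompt)
    if len(calls) == 1:
        return "In golf, the player with the fewest strokes wins."
    return "No."

question = "Part of golf is trying to get a higher point total than others. Yes or No?"
print(generated_knowledge_answer(fake_complete, question))
```

The second recorded prompt contains the generated fact. This is the point of the technique: the answer step is conditioned on knowledge the model wrote in the first step.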

| { | |

| "cell_type": "markdown", | |

| "source": [ | |

| "## 3. LangChain\n", | |

| "\n", | |

| "This section is adapted from [this notebook](https://github.com/dair-ai/Prompt-Engineering-Guide/blob/main/notebooks/pe-chatgpt-langchain.ipynb)." | |

| ], | |

| "metadata": { | |

| "id": "La1xBnKINg3c" | |

| } | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "from langchain_openai import ChatOpenAI\n", | |

| "from langchain.chains import LLMChain\n", | |

| "from langchain_core.prompts import (\n", | |

| " PromptTemplate,\n", | |

| " ChatPromptTemplate,\n", | |

| " SystemMessagePromptTemplate,\n", | |

| " AIMessagePromptTemplate,\n", | |

| " HumanMessagePromptTemplate,\n", | |

| ")\n", | |

| "from langchain_core.messages import (\n", | |

| " AIMessage,\n", | |

| " HumanMessage,\n", | |

| " SystemMessage\n", | |

| ")" | |

| ], | |

| "metadata": { | |

| "id": "2gsAdU8SNfj7" | |

| }, | |

| "execution_count": 45, | |

| "outputs": [] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "# chat model instance; temperature=0 for more deterministic output\n", | |

| "chat = ChatOpenAI(temperature=0)" | |

| ], | |

| "metadata": { | |

| "id": "mERx9bWONk82" | |

| }, | |

| "execution_count": 46, | |

| "outputs": [] | |

| }, | |

| { | |

| "cell_type": "code", | |

| "source": [ | |

| "USER_INPUT = \"I love programming.\"\n", | |

| "FINAL_PROMPT = \"\"\"Classify the text into neutral, negative or positive.\n", | |

| "\n", | |

| "Text: {user_input}\n", | |

| "Sentiment:\"\"\"\n", | |

| "\n", | |

| "chat.invoke([HumanMessage(content=FINAL_PROMPT.format(user_input=USER_INPUT))])" | |

| ], | |

| "metadata": { | |

| "colab": { | |

| "base_uri": "https://localhost:8080/" | |

| }, | |

| "id": "7zMTQOuONsQH", | |

| "outputId": "00f8bb75-b5da-4f36-c3bb-ea602c76e632" | |

| }, | |

| "execution_count": 47, | |

| "outputs": [ | |

| { | |

| "output_type": "execute_result", | |

| "data": { | |

| "text/plain": [ | |

| "AIMessage(content='Positive', response_metadata={'token_usage': {'completion_tokens': 1, 'prompt_tokens': 27, 'total_tokens': 28}, 'model_name': 'gpt-3.5-turbo', 'system_fingerprint': None, 'finish_reason': 'stop', 'logprobs': None}, id='run-7a88107b-5e0b-46f4-ad32-6b77dac00ff5-0', usage_metadata={'input_tokens': 27, 'output_tokens': 1, 'total_tokens': 28})" | |

| ] | |

| }, | |

| "metadata": {}, | |

| "execution_count": 47 | |

| } | |

| ] | |

| }, | |

| { | |

| "cell_type": "markdown", | |

| "metadata": { | |

| "id": "dUIKyClUR8RY" | |

| }, | |

| "source": [ | |

| "---" | |

| ] | |

| } | |

| ], | |

| "metadata": { | |

| "kernelspec": { | |

| "display_name": "promptlecture", | |

| "language": "python", | |

| "name": "python3" | |

| }, | |

| "language_info": { | |

| "codemirror_mode": { | |

| "name": "ipython", | |

| "version": 3 | |

| }, | |

| "file_extension": ".py", | |

| "mimetype": "text/x-python", | |

| "name": "python", | |