|

[ |

|

{ |

|

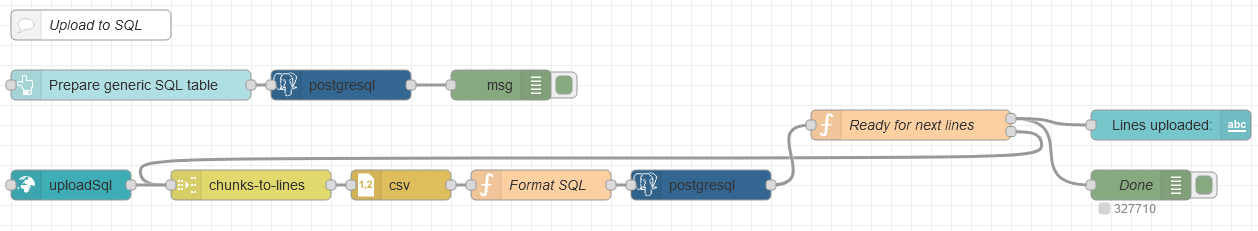

"id": "e6ec0f0a.388198", |

|

"type": "chunks-to-lines", |

|

"z": "d9a661f4.ef966", |

|

"name": "", |

|

"nbLines": "4096", |

|

"linesFormat": "csv", |

|

"decoder": "UTF-8", |

|

"x": 280, |

|

"y": 940, |

|

"wires": [ |

|

[ |

|

"436dce51.065f6" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "a2a16dc6.fbd32", |

|

"type": "function", |

|

"z": "d9a661f4.ef966", |

|

"name": "Ready for next lines", |

|

"func": "return [\n msg.complete ? msg : null,\n { tick: true },\n];\n", |

|

"outputs": 2, |

|

"noerr": 0, |

|

"initialize": "", |

|

"finalize": "", |

|

"libs": [], |

|

"x": 940, |

|

"y": 880, |

|

"wires": [ |

|

[ |

|

"87fbc09b.6deeb", |

|

"35615002.196b78" |

|

], |

|

[ |

|

"e6ec0f0a.388198" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "436dce51.065f6", |

|

"type": "csv", |

|

"z": "d9a661f4.ef966", |

|

"name": "", |

|

"sep": ",", |

|

"hdrin": true, |

|

"hdrout": "none", |

|

"multi": "mult", |

|

"ret": "\\n", |

|

"temp": "", |

|

"skip": "0", |

|

"strings": false, |

|

"include_empty_strings": "", |

|

"include_null_values": "", |

|

"x": 430, |

|

"y": 940, |

|

"wires": [ |

|

[ |

|

"4127dd44.2d49d4" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "4127dd44.2d49d4", |

|

"type": "function", |

|

"z": "d9a661f4.ef966", |

|

"name": "Format SQL", |

|

"func": "if (msg.payload && msg.payload.length > 0) {\n let payload = 'INSERT INTO your_table(time, sensor, data) VALUES ';\n \n for (const line of msg.payload) {\n payload += `('${line.time}', '${line.sensor}', '${line.data}'),`;\n }\n \n msg.payload = payload.slice(0, - 1) + ';';\n}\nreturn msg;\n", |

|

"outputs": 1, |

|

"noerr": 0, |

|

"initialize": "", |

|

"finalize": "", |

|

"libs": [], |

|

"x": 570, |

|

"y": 940, |

|

"wires": [ |

|

[ |

|

"65c0779b0f924c8b" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "d5504079.651a68", |

|

"type": "comment", |

|

"z": "d9a661f4.ef966", |

|

"name": "Upload to SQL", |

|

"info": "", |

|

"x": 120, |

|

"y": 780, |

|

"wires": [] |

|

}, |

|

{ |

|

"id": "715380a8.42ec7", |

|

"type": "debug", |

|

"z": "d9a661f4.ef966", |

|

"name": "", |

|

"active": true, |

|

"tosidebar": true, |

|

"console": false, |

|

"tostatus": false, |

|

"complete": "true", |

|

"targetType": "full", |

|

"statusVal": "", |

|

"statusType": "auto", |

|

"x": 530, |

|

"y": 840, |

|

"wires": [] |

|

}, |

|

{ |

|

"id": "87fbc09b.6deeb", |

|

"type": "debug", |

|

"z": "d9a661f4.ef966", |

|

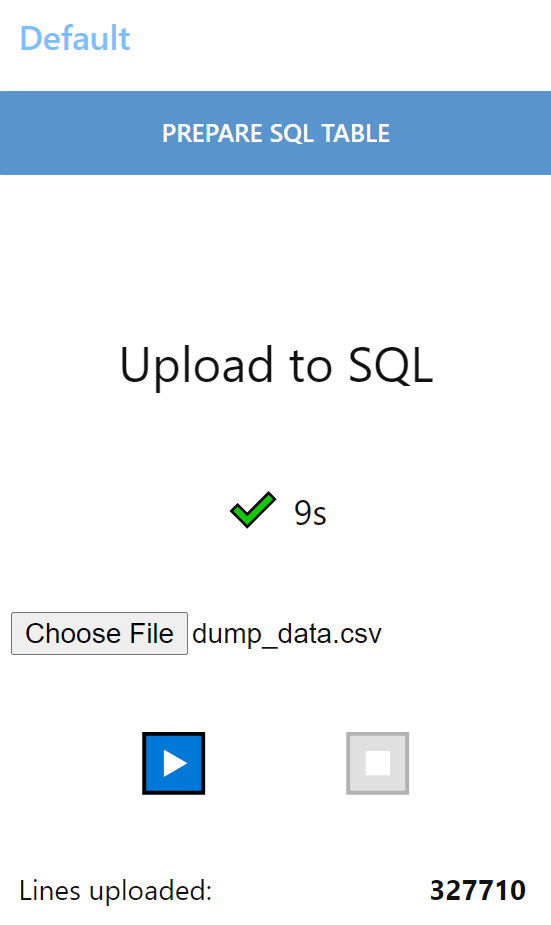

"name": "Done", |

|

"active": true, |

|

"tosidebar": true, |

|

"console": false, |

|

"tostatus": true, |

|

"complete": "true", |

|

"targetType": "full", |

|

"statusVal": "_parts.lines", |

|

"statusType": "msg", |

|

"x": 1170, |

|

"y": 940, |

|

"wires": [] |

|

}, |

|

{ |

|

"id": "4b31cec2.dad2c8", |

|

"type": "ui_upload", |

|

"z": "d9a661f4.ef966", |

|

"group": "593aaad02ba9e607", |

|

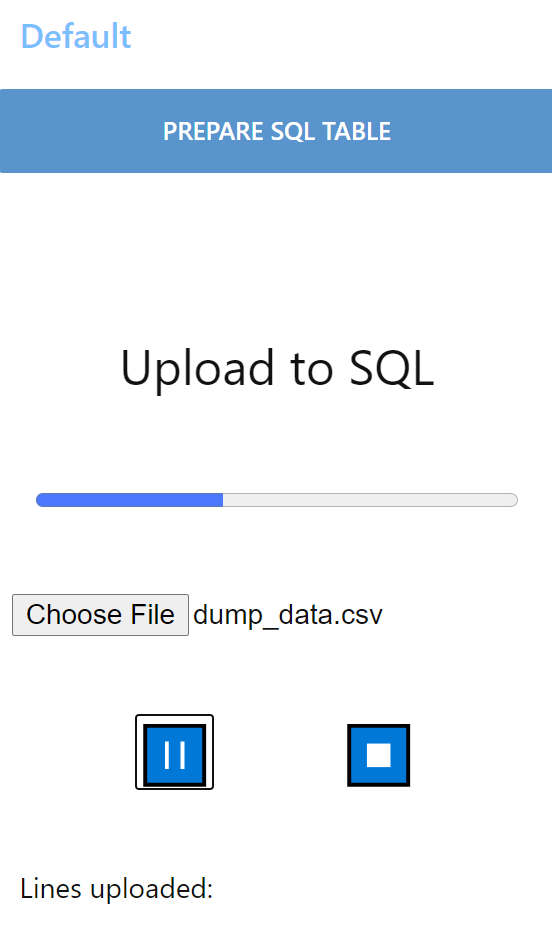

"title": "Generic upload to SQL", |

|

"name": "uploadSql", |

|

"order": 3, |

|

"width": 6, |

|

"height": 6, |

|

"chunk": "256", |

|

"transfer": "binary", |

|

"x": 100, |

|

"y": 940, |

|

"wires": [ |

|

[ |

|

"e6ec0f0a.388198" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "35615002.196b78", |

|

"type": "ui_text", |

|

"z": "d9a661f4.ef966", |

|

"group": "6426525.5bfe1ac", |

|

"order": 3, |

|

"width": 0, |

|

"height": 0, |

|

"name": "", |

|

"label": "Lines uploaded:", |

|

"format": "{{msg._parts.lines}}", |

|

"layout": "row-spread", |

|

"x": 1200, |

|

"y": 880, |

|

"wires": [] |

|

}, |

|

{ |

|

"id": "65d2e9d6.5ca5", |

|

"type": "ui_button", |

|

"z": "d9a661f4.ef966", |

|

"name": "", |

|

"group": "593aaad02ba9e607", |

|

"order": 1, |

|

"width": 0, |

|

"height": 0, |

|

"passthru": false, |

|

"label": "Prepare generic SQL table", |

|

"tooltip": "", |

|

"color": "", |

|

"bgcolor": "", |

|

"icon": "", |

|

"payload": "", |

|

"payloadType": "str", |

|

"topic": "", |

|

"topicType": "str", |

|

"x": 160, |

|

"y": 840, |

|

"wires": [ |

|

[ |

|

"6b76c340a580b0a4" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "6b76c340a580b0a4", |

|

"type": "postgresql", |

|

"z": "d9a661f4.ef966", |

|

"name": "", |

|

"query": "DROP TABLE IF EXISTS your_table;\nCREATE TABLE your_table (\n\tid\t\tSERIAL\tNOT NULL,\n\ttime\tTIMESTAMP WITH TIME ZONE\tNOT NULL,\n\tsensor\tTEXT,\n\tdata\tJSONB\n);\n\nSELECT create_hypertable('your_table', 'time');\nCREATE INDEX IF NOT EXISTS index_sensor ON your_table(\"sensor\");\n", |

|

"postgreSQLConfig": "20ae1e52d1eef983", |

|

"split": false, |

|

"rowsPerMsg": 1, |

|

"outputs": 1, |

|

"x": 370, |

|

"y": 840, |

|

"wires": [ |

|

[ |

|

"715380a8.42ec7" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "65c0779b0f924c8b", |

|

"type": "postgresql", |

|

"z": "d9a661f4.ef966", |

|

"name": "", |

|

"query": "{{{ msg.payload }}}", |

|

"postgreSQLConfig": "20ae1e52d1eef983", |

|

"split": false, |

|

"rowsPerMsg": 1, |

|

"outputs": 1, |

|

"x": 730, |

|

"y": 940, |

|

"wires": [ |

|

[ |

|

"a2a16dc6.fbd32" |

|

] |

|

] |

|

}, |

|

{ |

|

"id": "593aaad02ba9e607", |

|

"type": "ui_group", |

|

"name": "Default", |

|

"tab": "5f17e3a3.4c85ac", |

|

"order": 1, |

|

"disp": true, |

|

"width": "6", |

|

"collapse": false |

|

}, |

|

{ |

|

"id": "6426525.5bfe1ac", |

|

"type": "ui_group", |

|

"name": "Default", |

|

"tab": "cb135bc2.cc3d9", |

|

"order": 1, |

|

"disp": true, |

|

"width": "6", |

|

"collapse": true |

|

}, |

|

{ |

|

"id": "20ae1e52d1eef983", |

|

"type": "postgreSQLConfig", |

|

"name": "admin@timescale:5432/iot", |

|

"host": "timescale", |

|

"hostFieldType": "str", |

|

"port": "5432", |

|

"portFieldType": "num", |

|

"database": "iot", |

|

"databaseFieldType": "str", |

|

"ssl": "false", |

|

"sslFieldType": "bool", |

|

"max": "10", |

|

"maxFieldType": "num", |

|

"min": "1", |

|

"minFieldType": "num", |

|

"idle": "1000", |

|

"idleFieldType": "num", |

|

"connectionTimeout": "10000", |

|

"connectionTimeoutFieldType": "num", |

|

"user": "admin", |

|

"userFieldType": "str", |

|

"password": "RTffY87xVqGA", |

|

"passwordFieldType": "str" |

|

}, |

|

{ |

|

"id": "5f17e3a3.4c85ac", |

|

"type": "ui_tab", |

|

"name": "Generic demo", |

|

"icon": "dashboard", |

|

"disabled": false, |

|

"hidden": false |

|

}, |

|

{ |

|

"id": "cb135bc2.cc3d9", |

|

"type": "ui_tab", |

|

"name": "Anomaly", |

|

"icon": "dashboard", |

|

"disabled": false, |

|

"hidden": false |

|

} |

|

] |