2018.8 @AlloVince

- Scrapy (Python)

- pyspider (Python)

- Nutch (Java)

- colly (Go)

- Asynchronous task scheduling

- Crawl algorithms

- URL deduplication and management

- Scaffolding for data ETL
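
URL deduplication, one of the responsibilities listed above, can be sketched with a canonicalized fingerprint and a seen-set. This is a minimal standard-library illustration, not how any particular framework implements it: `canonicalize`, `fingerprint`, and `SeenUrls` are hypothetical names, and the normalization rules (lowercasing the host, sorting query parameters, dropping the fragment) are assumptions.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonicalize(url: str) -> str:
    """Normalize a URL so trivially different spellings compare equal."""
    parts = urlsplit(url)
    # Sort query parameters so their order does not matter.
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    # Lowercase scheme and host, default the path to "/", drop the fragment.
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", query, "")
    )


def fingerprint(url: str) -> str:
    """Fixed-size key for the seen-set (assumed scheme: SHA-1 of the canonical URL)."""
    return hashlib.sha1(canonicalize(url).encode("utf-8")).hexdigest()


class SeenUrls:
    """In-memory seen-set; a shared store would be needed across machines."""

    def __init__(self) -> None:
        self._seen = set()

    def add_if_new(self, url: str) -> bool:
        """Return True if the URL was not seen before, and remember it."""
        fp = fingerprint(url)
        if fp in self._seen:
            return False
        self._seen.add(fp)
        return True
```

A crawler would call `add_if_new` before scheduling each discovered link and skip the link when it returns `False`.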

An open source and collaborative framework for extracting the data you need from websites.

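    from scrapy.http import Response
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule

    # RawHtmlItem is the project's own Item class; its definition is not shown here.
    class DoubanMovieSpider(CrawlSpider):
        name = 'douban_movie'  # assumed name; Scrapy requires every spider to have one
        start_urls = ['https://movie.douban.com/chart']

        rules = (
            # Listing pages (rankings, celebrities, tags): follow to discover more links.
            Rule(LinkExtractor(allow=r'^https://movie.douban.com/(typerank|celebrity|tag)/.*'), follow=True),
            # Movie detail pages: keep following links and pass each page to handle_item.
            Rule(LinkExtractor(allow=r'^https://movie.douban.com/subject/\d+/$'), follow=True, callback='handle_item'),
        )

        def handle_item(self, response: Response) -> RawHtmlItem:
            return RawHtmlItem(url=response.url, html=response.text)

- Crawling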

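  - Event-driven asynchronous task management

  - Automatic handling of duplicate URLs

  - Depth-first / breadth-first crawl algorithms

  - XPath-based DOM parsing

- Architecture

  - Rich set of middlewares and pipelines

  - Shell console for debug & dev

  - Automatic encoding handling

  - Telnet and signal support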
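
The depth-first / breadth-first choice in the feature list above comes down to how the pending-request queue is drained: FIFO gives breadth-first, LIFO gives depth-first. The sketch below shows this on a toy link graph; `LINKS` and `crawl_order` are hypothetical names for illustration and are not part of Scrapy.

```python
from collections import deque

# Hypothetical site: each page maps to the links found on it.
LINKS = {
    "/": ["/a", "/b"],
    "/a": ["/a1", "/a2"],
    "/b": ["/b1"],
}


def crawl_order(start, breadth_first):
    """Return the order in which pages are visited."""
    frontier = deque([start])
    seen = {start}  # mark on enqueue so no page is scheduled twice
    order = []
    while frontier:
        # FIFO (popleft) is breadth-first; LIFO (pop) is depth-first.
        page = frontier.popleft() if breadth_first else frontier.pop()
        order.append(page)
        for link in LINKS.get(page, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


print(crawl_order("/", breadth_first=True))
print(crawl_order("/", breadth_first=False))
```

The first call visits pages level by level; the second dives into the most recently discovered link first. Scrapy's FAQ describes its default order as depth-first, because pending requests are kept in a LIFO queue.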

An event-driven networking framework.

- event loop

- core: reactor

- event dispatcher: deferred

- communication: TCP/UDP/Process

- IO handler: thread pool

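- Event loop: the program architecture; the concrete way event-driven design is implemented

- reactor: a design pattern that provides a common interface for asynchronous events; underneath, it selects a different asynchronous API for each operating system and uses epoll by default on Linux

- thread pool: handles blocking I/O operations; 10 threads by default, with deferred handling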
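
The reactor idea described above (wait for I/O readiness, then dispatch to a registered callback) can be sketched with the standard library's `selectors` module, whose `DefaultSelector` picks the most efficient mechanism available on the platform, such as epoll on Linux. This is an illustration of the pattern only, not Twisted's implementation; `run_reactor_demo` and `on_readable` are hypothetical names.

```python
import selectors
import socket


def run_reactor_demo():
    """Tiny reactor loop: wait for readiness events and dispatch callbacks."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS, etc.
    writer, reader = socket.socketpair()
    received = []
    running = True

    def on_readable(sock):
        nonlocal running
        received.append(sock.recv(1024))
        sel.unregister(sock)
        running = False  # plays the role of stopping the reactor

    # Register interest in "readable" events and attach the callback as data.
    sel.register(reader, selectors.EVENT_READ, on_readable)
    writer.sendall(b"ping")

    while running:
        # Block until at least one registered socket is ready (or 1 s passes).
        for key, _events in sel.select(timeout=1):
            key.data(key.fileobj)  # dispatch to the registered callback

    writer.close()
    reader.close()
    sel.close()
    return received


print(run_reactor_demo())
```

The loop itself never blocks on a single connection; it only sleeps inside `select`, which is what lets one thread serve many sockets.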

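Blocking version:

    import time

    time.sleep(3)  # blocks the whole program for 3 seconds
    print('Hello world')

Twisted, with the blocking call moved to the reactor's thread pool:

    from twisted.internet import reactor

    def aSillyBlockingMethod(x):
        import time
        time.sleep(3)
        print('Hello world')

    # Run the blocking function in a pool thread so the reactor stays responsive.
    reactor.callInThread(aSillyBlockingMethod, "3 seconds have passed")
    reactor.run()

asyncio with a generator-based coroutine:

    import asyncio

    # Legacy style: asyncio.coroutine was deprecated in Python 3.8 and removed in 3.11.
    @asyncio.coroutine
    def hello():
        r = yield from asyncio.sleep(1)
        print("hello world")

    loop = asyncio.get_event_loop()
    loop.run_until_complete(hello())
    loop.close()

asyncio with async/await:

    import asyncio

    async def hello():
        await asyncio.sleep(1)
        print("Hello world!")

    asyncio.run(hello())  # Python 3.7+

- High level of abstraction; the componentization is very well done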

- Covers about 80% of commonly needed crawler features

- Mature community; easy to extend

- Runs as a single process (one Twisted reactor plus a thread pool), so it only supports single-machine use

- Fetching and ETL are coupled

- Twisted adds complexity

- API/Ajax scraping

- Anti-scraping measures

- Full and incremental crawls
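
For the API/Ajax item above, a common approach is to request the JSON endpoint the page itself calls and parse the response directly instead of rendering HTML. The sketch below assumes a hypothetical payload shape (`items` and `next` keys) and a hypothetical `parse_listing` helper; real endpoints will differ.

```python
import json


def parse_listing(body: str):
    """Extract records and the next-page URL from a JSON listing response."""
    payload = json.loads(body)
    # Keep only the fields the pipeline needs; the field names are assumptions.
    items = [{"id": m["id"], "title": m["title"]} for m in payload.get("items", [])]
    next_page = payload.get("next")  # None when there are no more pages
    return items, next_page


# Hypothetical response body, standing in for what an Ajax endpoint might return.
SAMPLE = (
    '{"items": [{"id": 1, "title": "A", "score": 9.1},'
    ' {"id": 2, "title": "B", "score": 8.7}],'
    ' "next": "/api/movies?page=2"}'
)

items, next_page = parse_listing(SAMPLE)
print(items, next_page)
```

In a spider, the callback would yield the items and schedule a new request for `next_page` until it is `None`.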

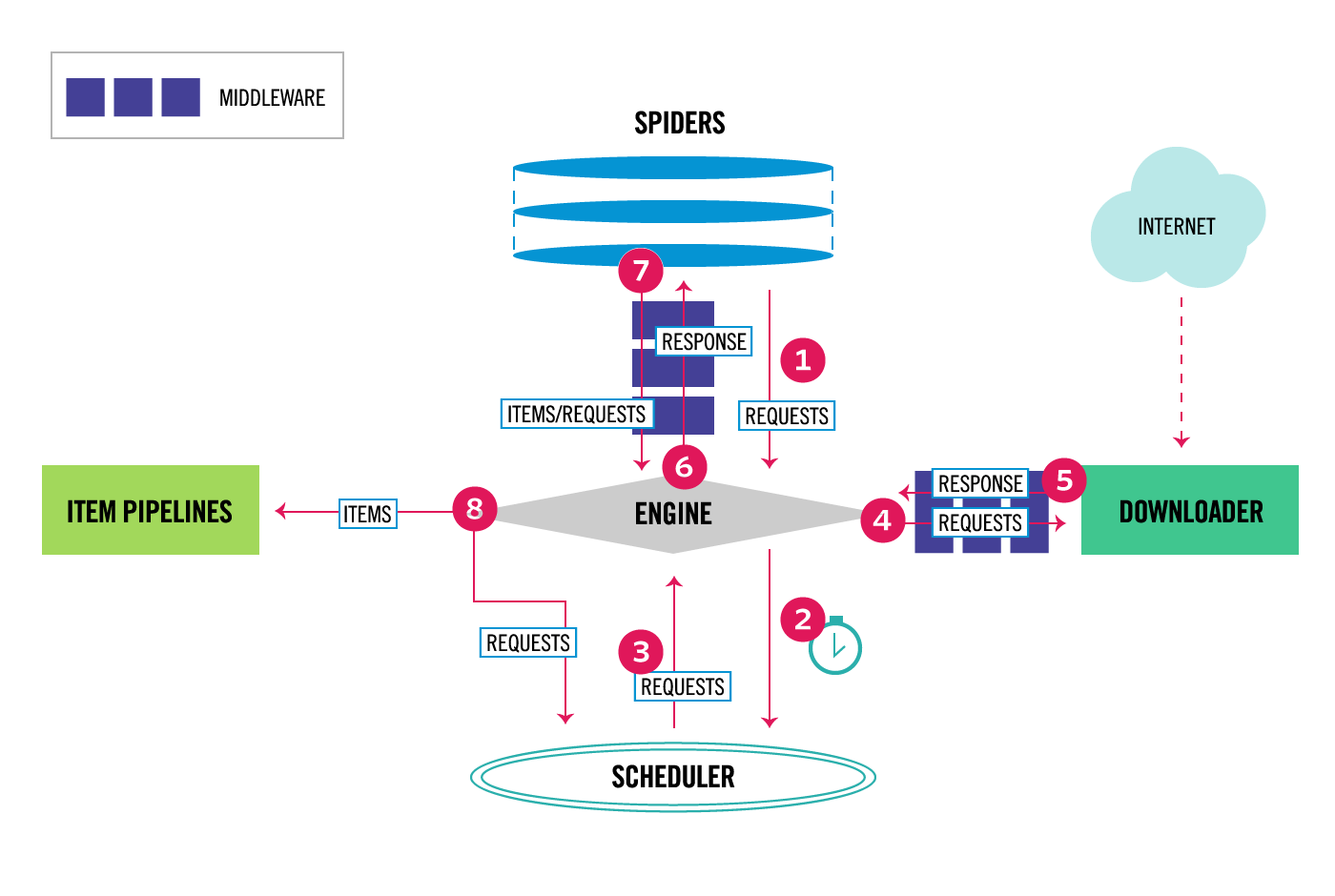

- Scrapy Engine

- Dupefilter <--> Redis Dupefilter

- Scheduler <--> Redis Scheduler

- Downloader

- Spiders <--> RedisCrawlSpider

- Item Pipeline <--> Redis Item Pipeline

- Downloader middlewares

- Spider middlewares
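
The component mapping above corresponds to a handful of settings in a project that uses scrapy-redis. The fragment below is a sketch of a `settings.py` using the class paths documented in the scrapy-redis README; the Redis URL and the pipeline priority are assumptions to adapt.

```python
# settings.py (fragment)

# Scheduler <--> Redis Scheduler: pending requests are queued in Redis.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Dupefilter <--> Redis Dupefilter: request fingerprints are shared via Redis.
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Keep the queue and fingerprints between runs so a crawl can be resumed.
SCHEDULER_PERSIST = True

# Item Pipeline <--> Redis Item Pipeline: scraped items are pushed to Redis.
ITEM_PIPELINES = {
    "scrapy_redis.pipelines.RedisPipeline": 300,  # priority value is an assumption
}

# Assumed local Redis instance; point this at the shared server in practice.
REDIS_URL = "redis://localhost:6379"
```

On the spider side, the class would subclass `scrapy_redis.spiders.RedisCrawlSpider` so that start URLs are read from a Redis key rather than from `start_urls`.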

- Hard to debug

- Hard to retry

- The database becomes the bottleneck

- Distributed crawling: scrapy-redis

- Full and incremental crawls

- Storage on local disk or OSS

- Message-queue notifications

- Docker support
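
One way to read the full versus incremental distinction above: a full crawl stores every fetched page, while an incremental crawl stores a page only when its content has changed since the last visit. The sketch below is one possible approach under that assumption, not the design used in the original project; `IncrementalStore` is a hypothetical name and the in-memory dict stands in for persistent storage.

```python
import hashlib


class IncrementalStore:
    """Remembers a content digest per URL and reports whether a page changed."""

    def __init__(self) -> None:
        self._digests = {}  # url -> sha256 hex digest of the last stored body

    def should_store(self, url: str, html: str) -> bool:
        """Return True for new or changed pages, False for unchanged ones."""
        digest = hashlib.sha256(html.encode("utf-8")).hexdigest()
        if self._digests.get(url) == digest:
            return False  # same content as last time: skip in incremental mode
        self._digests[url] = digest
        return True


store = IncrementalStore()
print(store.should_store("https://example.com/1", "<html>v1</html>"))
print(store.should_store("https://example.com/1", "<html>v1</html>"))
print(store.should_store("https://example.com/1", "<html>v2</html>"))
```

A full crawl would simply bypass the check and write every page.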

- How to deal with anti-scraping measures

  - Rate limiting

  - Proxy pool

  - Tunnels

  - Others?

- Ajax content

- API crawling
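
Two of the anti-scraping tactics listed above, rate limiting and a proxy pool, can be sketched without any framework dependency. `RateLimiter` and `RotatingProxyMiddleware` are hypothetical names, and the proxy addresses in the usage lines are placeholders. The middleware follows the shape of a Scrapy downloader middleware and sets `request.meta["proxy"]`, the key Scrapy's built-in HttpProxyMiddleware reads.

```python
import itertools
import time


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval
        self._clock = clock  # injectable so the limiter can be tested without waiting
        self._sleep = sleep
        self._next_allowed = 0.0

    def wait(self):
        """Sleep if the previous request was too recent, then reserve the next slot."""
        delay = self._next_allowed - self._clock()
        if delay > 0:
            self._sleep(delay)
        self._next_allowed = self._clock() + self._min_interval


class RotatingProxyMiddleware:
    """Assign proxies to outgoing requests in round-robin order."""

    def __init__(self, proxies):
        self._proxies = itertools.cycle(proxies)

    def process_request(self, request, spider):
        request.meta["proxy"] = next(self._proxies)
        return None  # returning None lets the request continue to the downloader


# Usage with placeholder proxy addresses and a stand-in request object.
class FakeRequest:
    def __init__(self):
        self.meta = {}


middleware = RotatingProxyMiddleware(["http://proxy-1:8080", "http://proxy-2:8080"])
request = FakeRequest()
middleware.process_request(request, spider=None)
print(request.meta["proxy"])
```

Within Scrapy itself, throttling is usually configured through settings such as `DOWNLOAD_DELAY` and `AUTOTHROTTLE_ENABLED` rather than a hand-written limiter.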