It's not uncommon to see beautiful visualizations in HPC center galleries, but most of these are either rendered off the HPC system or created with programs that use OpenGL or custom rasterization techniques. Put simply, the next generation of graphics provided by OpenGL's successor, Vulkan, is strangely absent from the supercomputing world. The aim of this survey of XSEDE resources is to determine which systems can support Vulkan workflows and programs. In my capacity as a campus champion, this will help me suggest which centers might support researchers who have Vulkan needs. It will also allow me to compile a supplementary document of my results to share on the Open Science Framework (OSF) for others wondering which supercomputers offer next-generation graphics support.

XSEDE, the eXtreme Science and Engineering Discovery Environment, was the largest NSF-funded cyberinfrastructure support and coordination project, providing access to HPC centers regardless of institutional affiliation. For more information, look up the old project website on the Internet Archive or read more on the NSF site at https://www.nsf.gov/news/news_summ.jsp?cntn_id=121182.

Now that the community has transitioned to ACCESS, a later stage of the survey may add more test programs and benchmarking. This survey is meant only to give a first indication of which systems support Vulkan, so vulkaninfo output is all that is collected.

This document provides background on loading Vulkan on the different GPU models used at several HPC centers around the US:

- Darwin: NVIDIA Tesla V100-SXM2-32GB

- Expanse: Tesla V100-SXM2-32GB

- Longhorn: Tesla V100-SXM2-16GB

- Anvil: NVIDIA A100-SXM4-40GB

- Lonestar6: NVIDIA A100-PCIE-40GB

- Frontera: Quadro RTX 5000

For each of the following HPC centers, I have included a short snippet showing how to request an interactive allocation and load Vulkan. Some sites also have accompanying screenshots. Where screenshots are available, they are provided with that site's commands, which are gathered into a single section for ease of reuse.

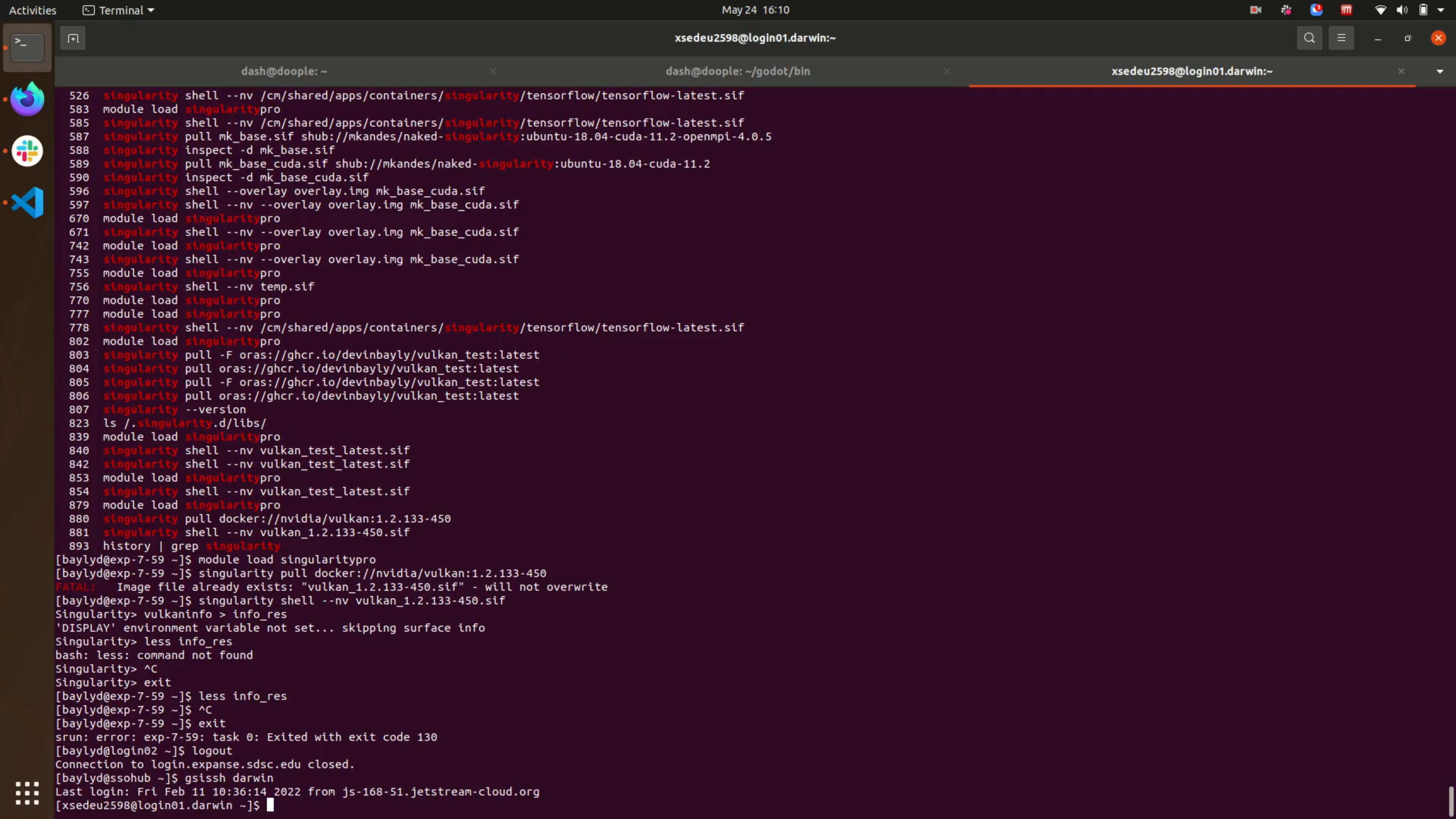

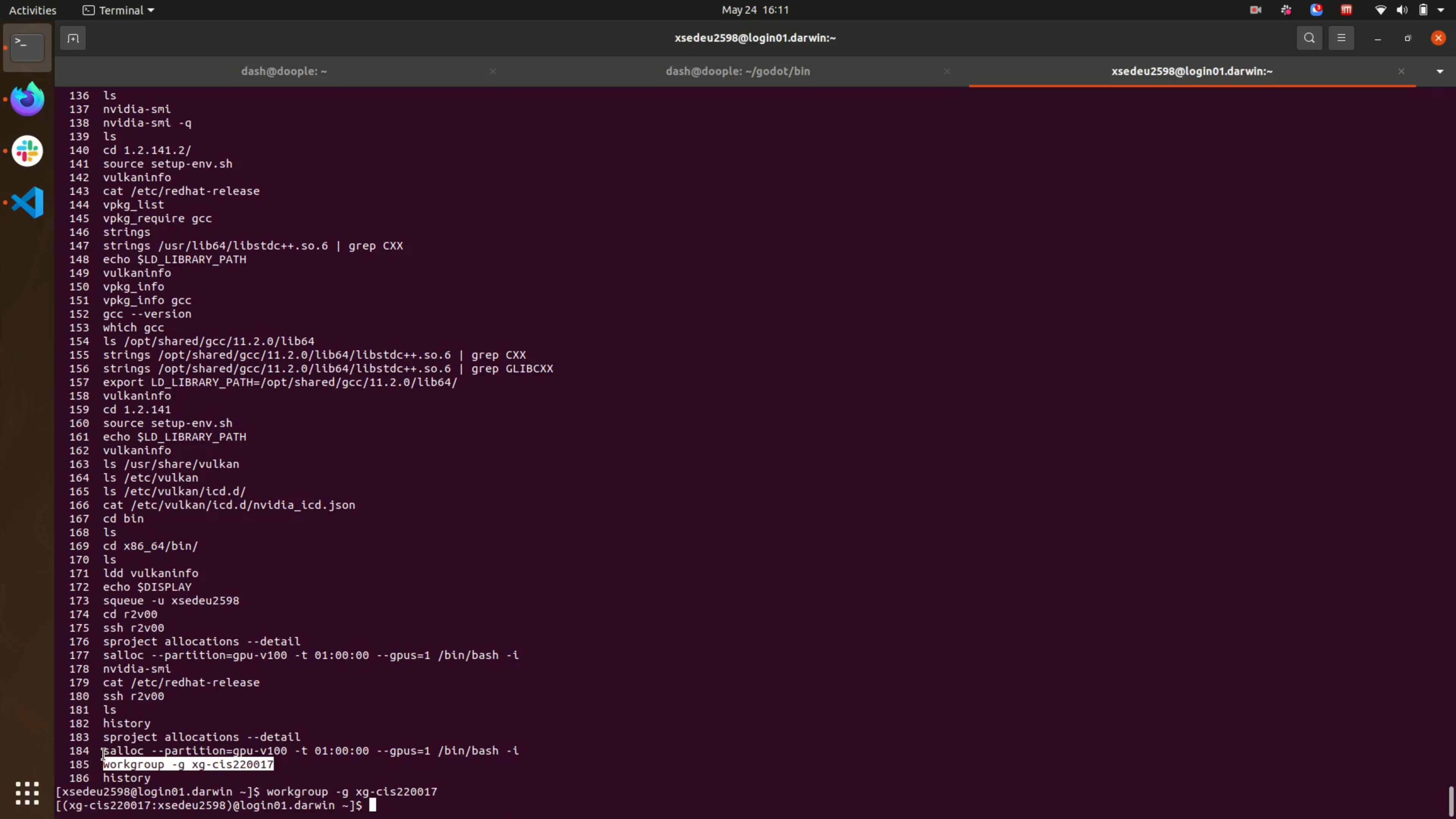

http://docs.hpc.udel.edu/abstract/darwin/darwin

Steps:

# log in through the XSEDE portal

gsissh darwin

#select allocation workgroup

workgroup -g xg-cis220017

# request 1 hour of time for one v100 allocation

salloc --partition=gpu-v100 -t 01:00:00 --gpus=1 /bin/bash -i

# connect to the allocated compute node (r2v00 in this session)

ssh r2v00

#check hardware

nvidia-smi

nvidia-smi -L

# add gcc

vpkg_require gcc

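# make sure the libraries from the loaded gcc module are on the library path (prepended, so existing entries are kept)

export LD_LIBRARY_PATH=/opt/shared/gcc/11.2.0/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}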

# enter the extracted Vulkan SDK directory

cd 1.2.198.1

source setup-env.sh

#check modified env variables

echo $PATH

echo $LD_LIBRARY_PATH

# run vulkaninfo and check for the hardware names listed

vulkaninfo > info_res
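Checking the hardware names by eye works, but the same check can be scripted. The following is a minimal sketch, assuming the report was saved to a file such as info_res (as at the other sites) and that it uses the `deviceName = <name>` lines printed by vulkaninfo 1.2.x. `list_vulkan_devices` is a hypothetical helper name, not part of the SDK.

```shell
# Hypothetical helper: print each distinct Vulkan device name in a saved
# vulkaninfo report. The ( ) body runs in a subshell, keeping variables local.
list_vulkan_devices() (
    report="${1:-info_res}"          # default to the file name used in these notes
    if [ ! -r "$report" ]; then
        echo "cannot read $report" >&2
        exit 1
    fi
    # Keep "deviceName = ..." lines, drop the key and surrounding whitespace,
    # then de-duplicate (the same model appears once per GPU).
    sed -n -E 's/^[[:space:]]*deviceName[[:space:]]*=[[:space:]]*//p' "$report" \
        | sed -E 's/[[:space:]]+$//' \
        | sort -u
)
```

For example, `list_vulkan_devices info_res` on the Darwin node should print the V100 model if the NVIDIA driver is visible to the Vulkan loader; seeing only a CPU implementation such as llvmpipe would suggest the GPU was not picked up.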

https://www.rcac.purdue.edu/anvil#docs

gsissh anvil # log into Anvil at Purdue

myquota # reveal allocation hours and project title

sinteractive -p gpu-debug -A "cis220017-gpu" --gres=gpu:1 -t 00:30:00 # launch interactive allocation for 30 mins

ls /etc/vulkan/icd.d # list the Vulkan ICD manifests installed with the NVIDIA driver

ls /usr/share/vulkan/icd.d

cat /usr/share/vulkan/icd.d/nvidia_icd.x86_64.json # show the driver library and Vulkan API version the manifest declares
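The ICD manifests are small JSON files that tell the Vulkan loader which driver library to load. Below is a sketch for summarizing every manifest in the two directories listed above, assuming each file has `library_path` and `api_version` string fields as NVIDIA's manifest does. It uses sed text matching rather than a real JSON parser, and `icd_field` and `summarize_icds` are hypothetical names.

```shell
# Hypothetical helpers for summarizing Vulkan ICD manifests. Text matching with
# sed, not a JSON parser: assumes "key": "value" pairs with string values.

# Print the first string value of key $1 in manifest file $2.
icd_field() (
    sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$2" | head -n 1
)

# List each *.json manifest with its driver library and API version.
# Defaults to the two loader directories inspected above.
summarize_icds() (
    [ "$#" -gt 0 ] || set -- /etc/vulkan/icd.d /usr/share/vulkan/icd.d
    for dir in "$@"; do
        for manifest in "$dir"/*.json; do
            # An unmatched glob stays literal, so skip anything that is not a file.
            [ -f "$manifest" ] || continue
            printf '%s\tlibrary=%s\tapi=%s\n' "$manifest" \
                "$(icd_field library_path "$manifest")" \
                "$(icd_field api_version "$manifest")"
        done
    done
)
```

Running `summarize_icds` with no arguments on an Anvil GPU node should print one line per manifest found; an empty result means the loader has no driver manifests in those locations.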

wget "https://sdk.lunarg.com/sdk/download/1.2.198.1/linux/vulkansdk-linux-x86_64-1.2.198.1.tar.gz" # download the Vulkan SDK (version 1.2.198.1)

tar xf vulkansdk-linux-x86_64-1.2.198.1.tar.gz # extract the archive

cd 1.2.198.1/

source setup-env.sh # source the setup script

cd ..

vulkaninfo > info_res # redirect the output into a file for inspection
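The download, extract and setup steps repeat at almost every site below, so they can be wrapped in a function parameterized by SDK version. This sketch assumes the LunarG URL pattern used in these notes (other SDK releases may be packaged under different file names) and that `wget` and `tar` are available on the compute node. `vulkan_sdk_url` and `fetch_vulkan_sdk` are hypothetical names.

```shell
# Hypothetical helpers wrapping the SDK download steps used at each site.
# The URL pattern is copied from these notes; other SDK releases may differ.
vulkan_sdk_url() {
    printf 'https://sdk.lunarg.com/sdk/download/%s/linux/vulkansdk-linux-x86_64-%s.tar.gz\n' "$1" "$1"
}

# Download and extract SDK version $1 (default 1.2.198.1) into ./<version>/.
fetch_vulkan_sdk() (
    version="${1:-1.2.198.1}"
    archive="vulkansdk-linux-x86_64-${version}.tar.gz"
    if [ ! -d "$version" ]; then     # skip the download if already extracted
        wget -O "$archive" "$(vulkan_sdk_url "$version")" || exit 1
        tar xf "$archive" || exit 1
    fi
    # setup-env.sh must be sourced by the calling shell, not this subshell.
    echo "Now run: source $PWD/$version/setup-env.sh"
)
```

The function prints the `source` command instead of running it: its body runs in a subshell, and `setup-env.sh` has to be sourced in the interactive shell for its PATH and LD_LIBRARY_PATH changes to persist.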

gsissh expanse #access expanse

srun --partition=gpu-debug --pty --account=uofa118 --ntasks-per-node=10 --nodes=1 --mem=96G --gpus=1 -t 00:30:00 --wait=0 --export=ALL /bin/bash # start a debug session with 1 gpu for 30 mins

nvidia-smi -L # see the info on the card type that we have

module load singularitypro # load Singularity so container images can be pulled and run

singularity pull docker://nvidia/vulkan:1.2.133-450 # pull NVIDIA's Vulkan container (the tag that worked best on SDSC's Expanse)

singularity shell --nv vulkan_1.2.133-450.sif # enter interactive session with the vulkan container

vulkaninfo > info_res # save the vulkaninfo report to info_res

less info_res # check that the local GPU card is listed as a Vulkan device
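The interactive `singularity shell` step can also be run non-interactively with `singularity exec`, which is convenient inside a batch job. This sketch assumes the default `singularity pull` naming of `<image>_<tag>.sif` seen above (and `<image>_latest.sif` for untagged references); only tagged or untagged `docker://` references are handled, not digests. `sif_name` and `container_vulkaninfo` are hypothetical names.

```shell
# Hypothetical helpers for running vulkaninfo in the NVIDIA container without
# an interactive shell.

# Map a docker:// reference to the file name `singularity pull` creates by
# default, e.g. docker://nvidia/vulkan:1.2.133-450 -> vulkan_1.2.133-450.sif
sif_name() (
    ref="${1#docker://}"        # drop the transport prefix
    image="${ref##*/}"          # keep the last path component (name:tag)
    case "$image" in
        *:*) ;;                         # tag given
        *) image="${image}:latest" ;;   # untagged images are pulled as :latest
    esac
    printf '%s.sif\n' "$(printf '%s' "$image" | tr ':' '_')"
)

# Pull the image if needed, then write the vulkaninfo report to $2 (default info_res).
container_vulkaninfo() (
    ref="${1:-docker://nvidia/vulkan:1.2.133-450}"
    sif="$(sif_name "$ref")"
    [ -f "$sif" ] || singularity pull "$ref" || exit 1
    # --nv makes the host NVIDIA driver libraries available inside the container.
    singularity exec --nv "$sif" vulkaninfo > "${2:-info_res}"
)
```

After `module load singularitypro` (or `tacc-singularity` on Frontera), `container_vulkaninfo` would reproduce the pull, shell and vulkaninfo steps in one command.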

https://www.sdsc.edu/support/user_guides/expanse.html

https://portal.tacc.utexas.edu/user-guides

https://frontera-portal.tacc.utexas.edu/user-guide/

ssh baylyd@frontera.tacc.utexas.edu # connect to frontera

idev -p rtx-dev -t 00:30:00 -N 1 # connect to the interactive development partition with GPU cards

module load tacc-singularity # get singularity

nvidia-smi # check the provided cards

singularity pull docker://nvidia/vulkan:1.2.133-450 # retrieve container

singularity shell --nv vulkan_1.2.133-450.sif # start interactive container

vulkaninfo > info_res # save the report to info_res; this uses the Vulkan version shipped in the container

less info_res # check that the correct cards are detected

# alternatively, to use a specific Vulkan SDK version instead of the container's:

wget "https://sdk.lunarg.com/sdk/download/1.2.198.1/linux/vulkansdk-linux-x86_64-1.2.198.1.tar.gz"

tar xf vulkansdk-linux-x86_64-1.2.198.1.tar.gz

cd 1.2.198.1

source setup-env.sh

vulkaninfo > info_res

less info_res # will show the 1.2.198 version
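To confirm which Vulkan version is actually in use (the container's or the SDK's), the report's header line can be checked. A sketch, assuming vulkaninfo 1.2.x prints a `Vulkan Instance Version: X.Y.Z` line near the top of its output; `vulkan_instance_version` and `expect_instance_version` are hypothetical names.

```shell
# Hypothetical helpers: report and check the loader version in a vulkaninfo file.

# Print the version from the "Vulkan Instance Version: X.Y.Z" line.
vulkan_instance_version() (
    sed -n 's/^Vulkan Instance Version:[[:space:]]*//p' "${1:-info_res}" | head -n 1
)

# Succeed only if report $1 shows instance version $2 (e.g. 1.2.198).
expect_instance_version() (
    got="$(vulkan_instance_version "$1")"
    if [ "$got" = "$2" ]; then
        echo "ok: report shows Vulkan instance version $got"
    else
        echo "mismatch: expected $2, found ${got:-no version line}" >&2
        exit 1
    fi
)
```

For example, `expect_instance_version info_res 1.2.198` after sourcing the SDK should succeed, while the same check against the container's report would be expected to fail.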

https://portal.tacc.utexas.edu/user-guides/lonestar6#gpu-nodes

ssh baylyd@ls6.tacc.utexas.edu

idev -p gpu-a100 -t 00:30:00 -N 1

nvidia-smi -L

wget "https://sdk.lunarg.com/sdk/download/1.2.198.1/linux/vulkansdk-linux-x86_64-1.2.198.1.tar.gz"

tar xf vulkansdk-linux-x86_64-1.2.198.1.tar.gz

cd 1.2.198.1

source setup-env.sh

cd ..

vulkaninfo > info_res

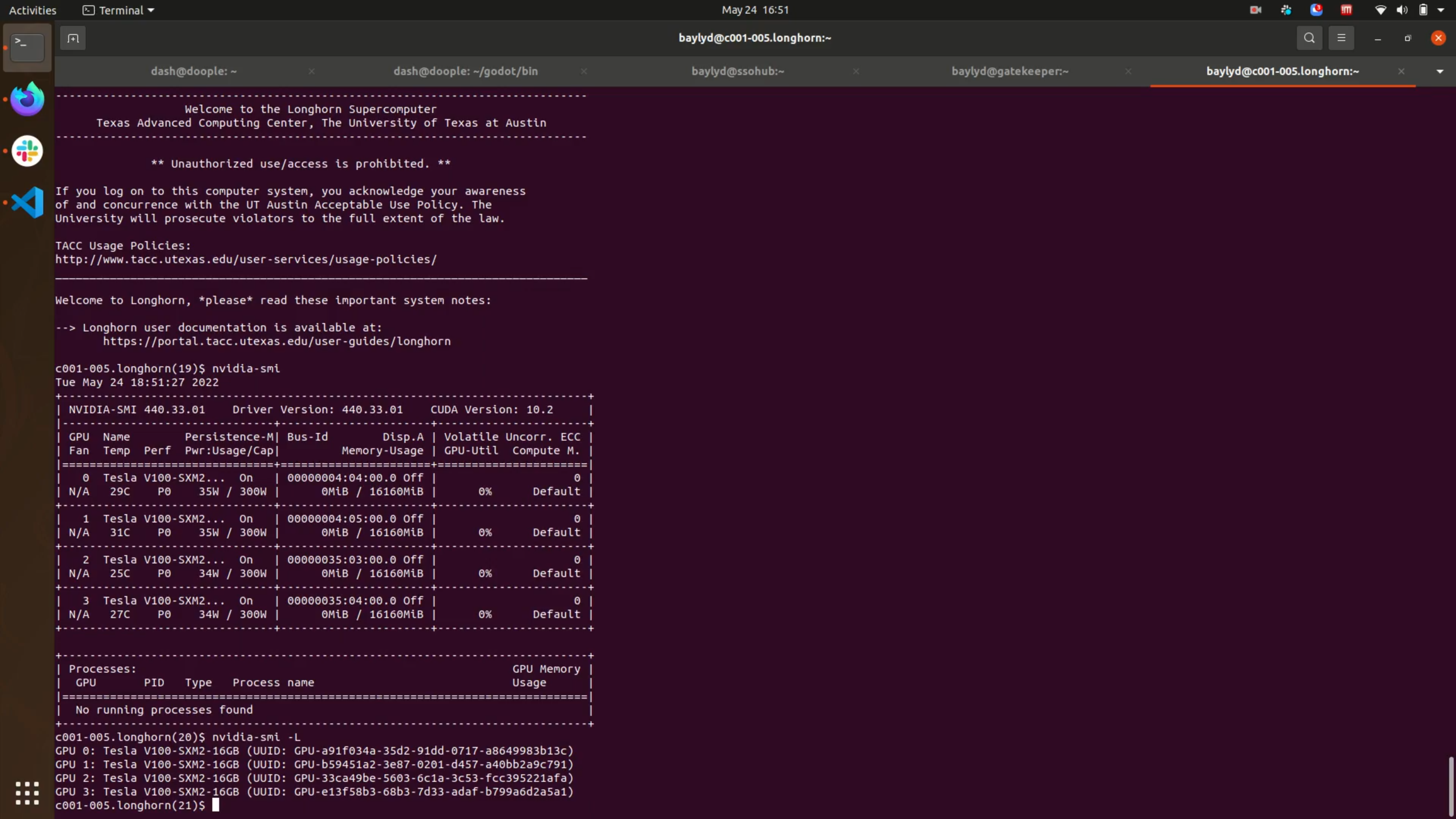
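At Lonestar6, as at the other sites, both `nvidia-smi -L` and vulkaninfo are run, and the two lists can be cross-checked so that a GPU the driver sees but Vulkan does not stands out. A minimal sketch, assuming `nvidia-smi -L` lines of the form `GPU 0: <name> (UUID: ...)`, vulkaninfo `deviceName = <name>` lines, and that both tools report the same model string (both come from the NVIDIA driver, but this is an assumption worth checking on each system). The helper names, and saving the output with `nvidia-smi -L > smi_res`, are hypothetical.

```shell
# Hypothetical helpers: cross-check GPUs seen by the driver against Vulkan.

# Read `nvidia-smi -L` output on stdin; print each distinct GPU model name.
nvidia_gpu_names() (
    sed -n 's/^GPU [0-9][0-9]*: \(.*\) (UUID: .*$/\1/p' | sort -u
)

# $1 = saved `nvidia-smi -L` output, $2 = saved vulkaninfo report.
# Prints models with no exactly matching deviceName; fails if any are missing.
missing_from_vulkan() (
    vk_names="$(sed -n -E 's/^[[:space:]]*deviceName[[:space:]]*=[[:space:]]*//p' "$2" \
        | sed -E 's/[[:space:]]+$//')"
    missing="$(nvidia_gpu_names < "$1" | while IFS= read -r name; do
        printf '%s\n' "$vk_names" | grep -Fxq -- "$name" || printf '%s\n' "$name"
    done)"
    [ -z "$missing" ] && exit 0
    printf '%s\n' "$missing"
    exit 1
)
```

With both files saved, `missing_from_vulkan smi_res info_res` prints nothing and succeeds when every driver-visible model also appears as a Vulkan device.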

https://portal.tacc.utexas.edu/user-guides/longhorn

Sadly, this system runs into problems stemming from its IBM POWER9 processors.

ssh baylyd@longhorn.tacc.utexas.edu

idev -p development -N 1 -t 00:30:00

nvidia-smi -L

wget "https://sdk.lunarg.com/sdk/download/1.2.198.1/linux/vulkansdk-linux-x86_64-1.2.198.1.tar.gz"

tar xf vulkansdk-linux-x86_64-1.2.198.1.tar.gz

cd 1.2.198.1

source setup-env.sh

cd ..

vulkaninfo > info_res

Running vulkaninfo produces the following error:

-bash: /home/04766/baylyd/1.2.198.1/x86_64/bin/vulkaninfo: cannot execute binary file

The LunarG SDK tarball ships x86_64 binaries, which cannot run on Longhorn's POWER9 (ppc64le) nodes.
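The error path shows the binary under the SDK's `x86_64` directory, while POWER9 nodes report `ppc64le` from `uname -m`. A small guard run before sourcing `setup-env.sh` fails early with a clearer message. This is a sketch that only checks the machine name, and `sdk_arch_ok` is a hypothetical helper.

```shell
# Hypothetical guard: succeed only on x86_64, the architecture of the LunarG
# tarball binaries used in these notes. Pass an architecture to test other cases.
sdk_arch_ok() (
    arch="${1:-$(uname -m)}"
    case "$arch" in
        x86_64) exit 0 ;;
        *)
            echo "Vulkan SDK tarball binaries are x86_64; this node reports $arch" >&2
            exit 1
            ;;
    esac
)
```

Used as `sdk_arch_ok && source 1.2.198.1/setup-env.sh`, the guard would stop on Longhorn before the "cannot execute binary file" error appears.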

https://portal.xsede.org/psc-bridges-2

Steps:

gsissh bridges2

interact -p GPU --gres=gpu:v100-16:8

wget "https://sdk.lunarg.com/sdk/download/1.2.198.1/linux/vulkansdk-linux-x86_64-1.2.198.1.tar.gz"

tar xf vulkansdk-linux-x86_64-1.2.198.1.tar.gz

cd 1.2.198.1

source setup-env.sh

vulkaninfo > info_res
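The `interact` request above asks for eight V100 GPUs (`--gres=gpu:v100-16:8`), so the report should list eight GPU devices. A sketch for counting them, assuming vulkaninfo 1.2.x prints one `deviceType = PHYSICAL_DEVICE_TYPE_DISCRETE_GPU` line per discrete GPU; counting only discrete GPUs leaves out CPU implementations such as llvmpipe. `count_discrete_gpus` and `expect_gpu_count` are hypothetical names.

```shell
# Hypothetical helpers: count discrete GPUs in a vulkaninfo report and compare
# against the number requested from the scheduler.
count_discrete_gpus() (
    report="${1:-info_res}"
    [ -r "$report" ] || { echo "cannot read $report" >&2; exit 2; }
    # grep -c prints 0 (and exits 1) when nothing matches; keep the 0.
    grep -Ec '^[[:space:]]*deviceType[[:space:]]*=[[:space:]]*PHYSICAL_DEVICE_TYPE_DISCRETE_GPU' "$report" || true
)

# Succeed only if report $1 lists exactly $2 discrete GPUs.
expect_gpu_count() (
    n="$(count_discrete_gpus "$1")" || exit 2
    if [ "$n" -eq "$2" ]; then
        echo "ok: $n discrete GPUs visible to Vulkan"
    else
        echo "expected $2 discrete GPUs, report lists $n" >&2
        exit 1
    fi
)
```

On the Bridges-2 node, `expect_gpu_count info_res 8` would then confirm that all requested GPUs are exposed through Vulkan.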

https://www.marcc.jhu.edu/getting-started/basic/

https://portal.xsede.org/jhu-rockfish

XSEDE access was not available (Rockfish will be included in the next system review).
