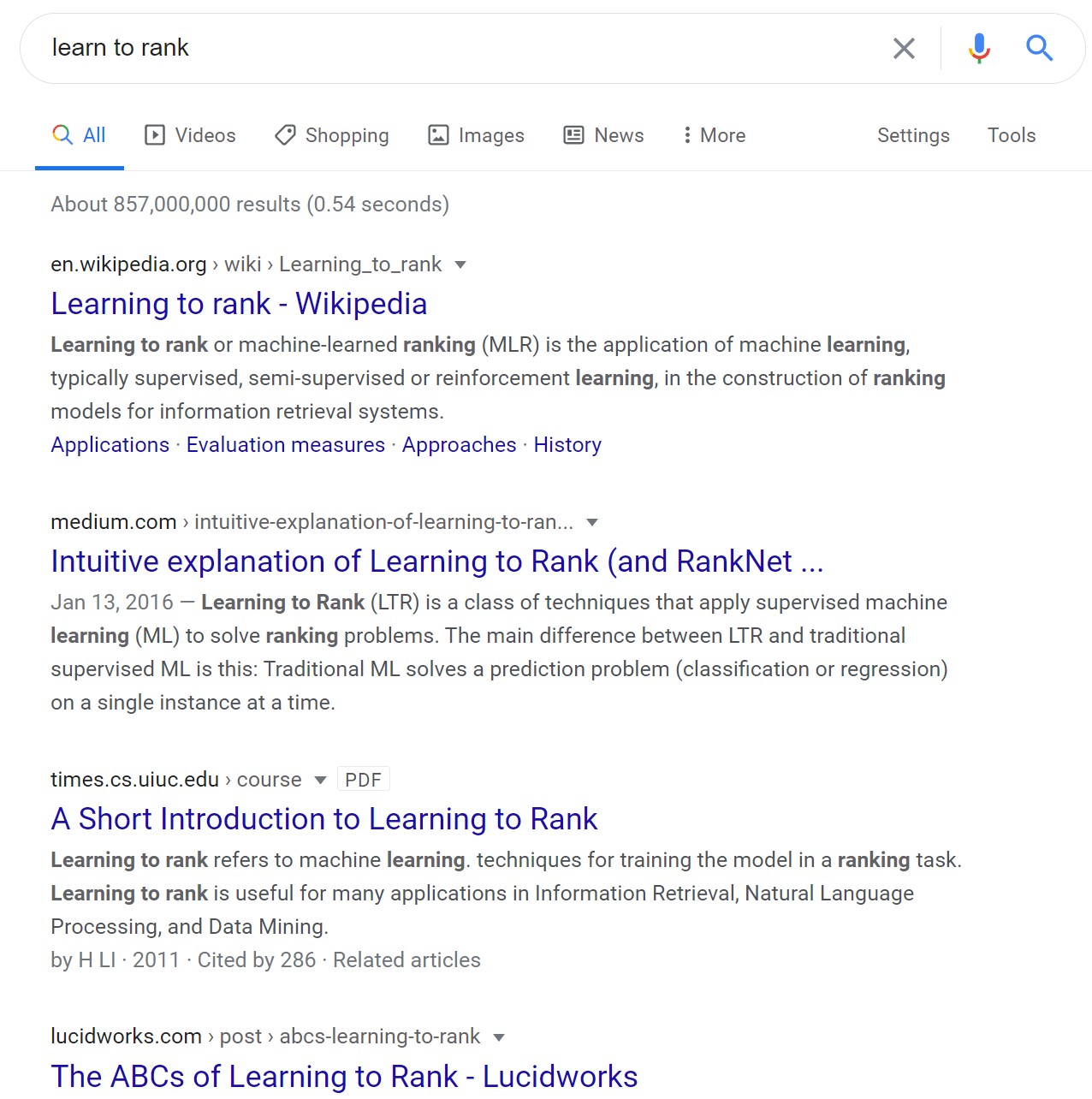

Learning to Rank (LTR) is an important technology in search engine. Its purpose is to sort candidate documents based on the relevance of candidate documents and query sentences, or select top-k documents. For example, in search engines, engineers need to select the most relevant search results based on user questions and display them on the first page. The picture below is the search result of the search engine:

According to the loss function, LTR can be divided into three types:

- Pointwise, This type of algorithm trains the LTR task as a regression task, that is, tries to train a scorer for documents and query sentences, and then sorts them according to the score.

- Pairwise, This type of algorithm considers two candidate documents. The learning goal is to rank highly relevant documents in the front. triplet loss belongs to Pairwise, and its loss function is

- $$ loss = max(0, score_{neg}-score_{pos}+margin)$$ It can be seen that the loss function considers two candidate documents at once.

- Listwise, The loss function of this type of algorithm will consider multiple candidate documents, which is the focus of this article, which will be described in detail below.

This article mainly introduces a new Listwise loss function that I invented during my study and research process, and the effeciency of the loss function. If the reader is not familiar with the LTR task and its algorithm, it is recommended to learn LTR related knowledge first.

This learning goal is to train a scorer, which can measure the relevance between q and d, scorer(q, d) is the relevance score, the larger the score, the more relevant. Under the current mainstream method, the scorer generally uses a deep neural network model .

The loss function is different, the method of constructing the training data will be different:

-Pointwise, The regression data set can be constructed, the relevant record is set to 1, and the irrelevant record is set to 0.

-Pairwise, The data set of triplet type can be constructed, such as (

In the previous summary, we can know that the training set is in the following form ($q,d^+_1,d^+_2..., d^+_n , d^-1, d^-2, ..., d^-{n+m}$), For a q, there are m related documents and n unrelated documents, so we can get a total of m+n scores: $(score_1,score_2,...,score_n,...,score{n+m})$, We want the scorer to score related documents close to positive infinity, and score unrelated documents close to negative infinity.

Do a softmax for m+n points to get

But this optimization goal is unreasonable. Assuming

In the current situation, the loss function can already be implemented by code, but we can also do some simplification work,

Take the logarithm of both sides:

Does this feel more refreshing, and then we convert it into the form of a loss function: $$ loss = -nlog(n) - \sum_{i=1}^{n}{log(p_i)}$$

So our training goal is:

After obtaining the final loss function, we still need to use code to implement it. The implementation code is as follows:

# A simple example for my listwise loss function

# Assuming that n=3, m=4

# In[1]

# scores

scores = torch.tensor([[3,4.3,5.3,0.5,0.25,0.25,1]])

print(scores)

print(scores.shape)

'''

tensor([[0.3000, 0.3000, 0.3000, 0.0250, 0.0250, 0.0250, 0.0250]])

torch.Size([1, 7])

'''

# In[2]

# log softmax

log_prob = torch.nn.functional.log_softmax(scores,dim=1)

print(log_prob)

'''

tensor([[-2.7073, -1.4073, -0.4073, -5.2073, -5.4573, -5.4573, -4.7073]])

'''

# In[3]

# compute loss

n = 3.

mask = torch.tensor([[1,1,1,0,0,0,0]]) # number of 1 is n

loss = -1*n*torch.log(torch.tensor([[n]])) - torch.sum(log_prob*mask,dim=1,keepdim=True)

print(loss)

loss = loss.mean()

print(loss)

'''

tensor([[1.2261]])

tensor(1.2261)

'''This sample code only shows the case where batch_size is 1. When batch_size is greater than 1, each piece of data has different m and n. In order to be sent to the model to calculate the score together, the mask needs to be used flexibly. I actually use two masks in the loss function: masking all candidate documents for each piece of data and related documents for each piece of data.

Since the evaluation data uses internal data, the code and data cannot be made public, so we can only briefly summarize the use effect:

- The effect is better than Pointwise and Pairwise, but the gap is not particularly large

- Compared with Pairwise, the convergence speed is extremely fast, and the best results can be basically achieved in one round of training

The following is personal experience:

- This loss function occupies more video memory, the actual batch_size is batch_size*(m+n), it is recommended to display more than 12G

- The more negative examples, the better the effect and the faster the convergence

- When using pytorch to implement log_softmax, do not implement it yourself, use the log_softmax function in torch directly, which is more efficient.

- There is only one positive example, you can also consider turning to a classification problem, using cross entropy for optimization, the effect is also good

The loss function is relatively simple. You can derive it by yourself with simple mathematical knowledge. It has achieved good results in actual use, and I hope it can help you. If you have a better approach, please let me know.

The article can be reprinted, but please indicate the source: