NOTE: PyTorch3D no longer has to be compiled. I have stripped out the function we use, which makes setup a lot easier and means we no longer need WSL2 with Linux; everything can now run directly on your Windows system.

The comments section is not checked often for issues. Please join the Disco Diffusion Discord for assistance.

You may now use the official Disco Diffusion notebook with this tutorial, as it has been updated to reflect the changes here for better cross-platform support.

This will walk you through getting your environment set up to run most of the GANs, with Anaconda as a virtual Python environment.

System Requirements:

OS: Windows (11/10/8/7), Ubuntu (19, 20)

GPU: Nvidia (AMD hasn't been tested)

VRAM: 12 GB+

- A) CUDA-enabled GPU

- Latest Nvidia Drivers

- CUDA Toolkit for your version

- B) Python (Anaconda)

- https://www.anaconda.com/products/individual#Downloads

- We use Anaconda to make it easier to manage different projects

- Virtual Python environment and versioning

- Anaconda also includes Jupyter Notebook pre-installed

- C) Git

- https://git-scm.com/downloads

- version control manager for code

- we just use it to download repos from GitHub

- Must be on the system PATH. When installing, select the option to add it to the system PATH

- D) FFmpeg

- https://ffmpeg.org/download.html

- Video encoding tool library

- we use this mainly to turn image sequences into videos

- Must be on system PATH

- E) ImageMagick

- https://imagemagick.org/script/download.php

- software suite for displaying, creating, converting, modifying, and editing raster images.

- used in some projects but handy to have already installed

- F) Wget

- used to download models for projects

- Windows users need this version

- https://eternallybored.org/misc/wget/

- download the .exe

- create a new folder to put the .exe in (preferably on the root of your C:/ drive)

- e.g. C:/wget/wget.exe

- open Control Panel and search for environment variables

- select the one with the shield icon, Edit the system environment variables

- click the button at the bottom, Environment Variables...

- under System variables, find and select the path variable

- click the Edit... button and then New

- add the new path to the folder the .exe is in

- e.g. C:/wget

- once entered, click OK on each window until all 3 are closed

- for the new environment variables to be used, you must open a new Command Prompt window

- Linux users can just use the package in their distributions


- G) cURL

- used to download models, some projects use this instead of wget

- Latest versions of Windows have cURL preinstalled

- Older versions that don't include cURL use this one

- Linux users can just use the package in their distributions
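Once everything above is installed, a quick way to confirm that the command-line tools are actually reachable from PATH is a small Python check. This is only a minimal sketch using the standard library. The function name find_missing_tools and the tool list are illustrative assumptions; in particular, magick is the executable name used by ImageMagick 7, and older installs may expose convert instead.

```python
import shutil

# Executable names for the tools listed above. This list is an assumption:
# "magick" is the ImageMagick 7 entry point and may differ on your system.
REQUIRED_TOOLS = ["git", "ffmpeg", "magick", "wget", "curl"]


def find_missing_tools(tools):
    """Return the tools that cannot be found on the current PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All tools were found on PATH.")
```

Run it from a newly opened Command Prompt or terminal so that any recent PATH changes are picked up.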

- A) Set up and activate the conda env

conda create --name disco-diffusion python=3.9

conda activate disco-diffusion

- B) Install a few more pip dependencies

pip install ipykernel opencv-python pandas regex matplotlib ipywidgets

- C) Install PyTorch with CUDA support!

- https://pytorch.org/get-started/locally/

- be sure to install a version with GPU support!

- example of what worked for me

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

- D) Download disco diffusion repo!

git clone https://github.com/alembics/disco-diffusion.git

- change directories into the downloaded repo

cd disco-diffusion
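After the PyTorch install in step C, it can be worth confirming that the build actually sees the GPU before starting a long run. The sketch below is one way to do that from inside the activated conda env. The function name describe_torch_cuda is hypothetical, and the check assumes the GPU you want is device 0.

```python
import importlib.util


def describe_torch_cuda():
    """Return a one-line summary of the PyTorch/CUDA state in this environment."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this environment"

    import torch

    if not torch.cuda.is_available():
        return f"PyTorch {torch.__version__} is installed, but CUDA is not available"

    # Assumption: the GPU of interest is device 0.
    name = torch.cuda.get_device_name(0)
    total_gb = torch.cuda.get_device_properties(0).total_memory / 1024**3
    return f"PyTorch {torch.__version__} sees {name} with {total_gb:.1f} GB of VRAM"


if __name__ == "__main__":
    print(describe_torch_cuda())
```

If the summary reports that CUDA is not available, the installed build is probably CPU-only, and the install command from the PyTorch site may need to be rerun with a GPU option selected.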

There are several ways to run Disco Diffusion at this point:

As a plain Python file. This means you will need to go into the file, manually find the configuration options, and change them as needed. An easy way to do this is to search the file for #@param and edit the lines that end with that comment, e.g. use_secondary_model = True #@param {type: 'boolean'}.

- Run disco diffusion

python disco.py
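Searching for the #@param lines by hand works, but a short script can list them all at once. The following is a rough sketch, not part of Disco Diffusion itself: the function name find_params and the regular expression are assumptions, and the pattern only catches simple single-line assignments of the form name = value #@param.

```python
import re
from pathlib import Path

# Matches lines such as: use_secondary_model = True #@param {type: 'boolean'}
PARAM_LINE = re.compile(
    r"^\s*(?P<name>\w+)\s*=\s*(?P<value>.+?)\s*#\s*@param\b"
)


def find_params(source):
    """Return (line_number, name, value) for each assignment tagged #@param."""
    results = []
    for number, line in enumerate(source.splitlines(), start=1):
        match = PARAM_LINE.match(line)
        if match:
            results.append((number, match.group("name"), match.group("value")))
    return results


if __name__ == "__main__":
    script = Path("disco.py")  # assumed to be in the current directory
    if script.exists():
        for number, name, value in find_params(script.read_text(encoding="utf-8")):
            print(f"{number}: {name} = {value}")
```

The printed line numbers make it easier to jump straight to each setting in an editor.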

Running the .ipynb file directly in VS Code. This also requires editing the #@param lines in the code.

- Download Visual Studio Code

- Open the .ipynb file in VS Code

- ENJOY!

- for more interactivity, see the method below that uses the Jupyter extension

Using the Jupyter extension in VS Code, which adds individual cell support for running either the .ipynb or the .py file. This also requires editing the #@param lines in the code.

- Download Visual Studio Code

- Get the Jupyter Notebook extension

- head over to the Extensions tab in VS Code

- search for jupyter and install the one from Microsoft

- after this is enabled the notebook should have several new toolbars and features

- you can run either the .py file or the .ipynb file; both support individual cells

- ENJOY!

Using Jupyter Notebook to run the .ipynb file. This also requires editing the #@param lines in the code.

- with Anaconda installed you should already have Jupyter Notebook installed; if you don't, simply run:

pip install jupyterlab

- Run Jupyter

jupyter notebook

- this launches Jupyter Notebook in the current directory and opens a web page

- under the Files tab, double-click and open the file named Disco-Diffusion.ipynb

- ENJOY!
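Since an .ipynb file is JSON, the #@param lines in the notebook can also be listed without opening it. This is a minimal sketch under a few assumptions: the function name param_lines_in_notebook is made up for illustration, the notebook is assumed to sit in the current directory, and only lines containing the exact text #@param are reported.

```python
import json
import os


def param_lines_in_notebook(notebook):
    """Return (cell_index, line) pairs for code-cell lines containing #@param."""
    hits = []
    for index, cell in enumerate(notebook.get("cells", [])):
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", [])
        # The notebook format allows source to be a string or a list of strings.
        text = source if isinstance(source, str) else "".join(source)
        for line in text.splitlines():
            if "#@param" in line:
                hits.append((index, line.strip()))
    return hits


if __name__ == "__main__":
    path = "Disco-Diffusion.ipynb"  # assumed location of the notebook
    if os.path.exists(path):
        with open(path, encoding="utf-8") as handle:
            for index, line in param_lines_in_notebook(json.load(handle)):
                print(f"cell {index}: {line}")
```

The cell index gives a rough idea of how far down the notebook each setting lives.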

Using Google Colab as a front end to get the nice view of all the editable fields, with Jupyter as middleware to connect your local resources.

- with Anaconda installed you should already have Jupyter Notebook installed; if you don't, simply run:

pip install jupyterlab

- Connect to the Colab front end

pip install --upgrade "jupyter_http_over_ws>=0.0.7"

- Enable the extension for Jupyter

jupyter serverextension enable --py jupyter_http_over_ws

- Start the Jupyter server

jupyter notebook --NotebookApp.allow_origin='https://colab.research.google.com' --port=8888 --NotebookApp.port_retries=0 --no-browser

- Inside Google Colab, click the down arrow icon next to the Connect button to view more options

- Select Connect to a local runtime and enter the localhost URL that was printed in the console when we started the Jupyter server

- ENJOY!
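If Colab refuses to connect, one thing worth ruling out is whether the Jupyter server is listening at all. The sketch below simply tries to open a TCP connection to the port. The function name port_is_open is hypothetical, and 8888 is taken from the --port value in the jupyter command above. It does not check the token or the allowed origin, only that something is accepting connections.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 8888 matches the --port value passed to jupyter notebook.
    print("Jupyter reachable:", port_is_open("localhost", 8888))
```

A False result usually means the server is not running or was started on a different port.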

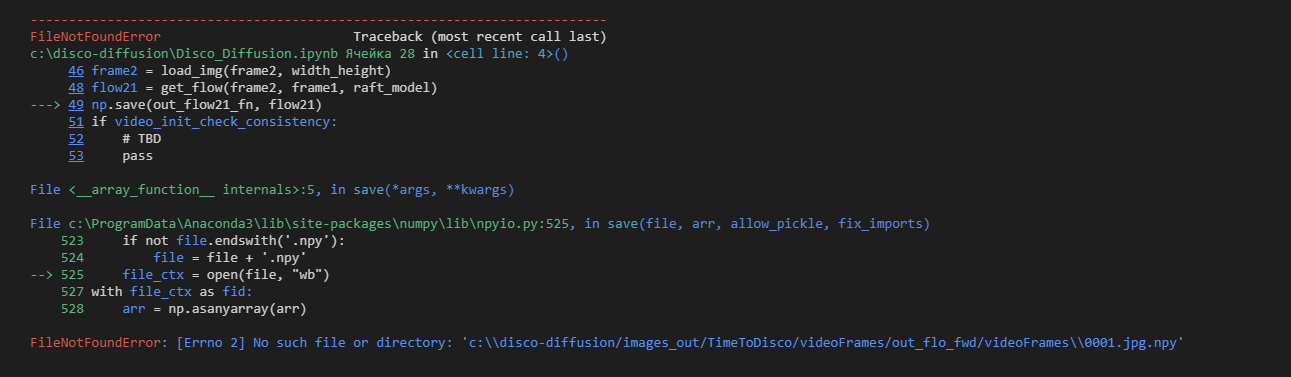

I finally got set up and running, but after generating the first frame I keep encountering this error and am not able to find the solution. Any suggestions?