Student: Vedanta Keshav Jha

Mentor: Steven Puttermans

Link to accomplished work:

- PR for Global sampling method: opencv_contrib/pull/2241

- PR for AlphaGAN matting: opencv_contrib/pull/2134

- Link to the created dataset: Link

Hi, I am Vedanta. I am a student developer with OpenCV for GSoC 2019.

The main goal of the project was to add state-of-the-art matting methods to OpenCV.

The aim was to implement the following two papers on matting:

1. AlphaGAN matting [1]
2. Global sampling based method for matting [2]

The original AlphaGAN model was trained on the Adobe dataset, which we cannot use in an open-source project. So my project also included creating a dataset that could be used to train the model.

I created the training dataset using GIMP, making alpha mattes for almost 250 images.

The test dataset for matting is publicly available here.

The AlphaGAN model has a few different architecture options. The basic idea of the paper is to use a GAN for natural image matting. The input to the generator is the image (the foreground combined with its alpha matte) together with the trimap: 3 color channels plus 1 trimap channel, hence the input has 4 channels. The output of the generator is the predicted alpha matte. The discriminator is fed both the alpha matte generated by the generator and the original (ground-truth) alpha matte.
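A minimal sketch of how such a 4-channel generator input could be assembled, written in plain C++ without OpenCV. It assumes the standard matting compositing equation `I = alpha * F + (1 - alpha) * B` for producing the image, a trimap encoded as 0 (background), 0.5 (unknown) and 1 (foreground), and a pixel-interleaved layout; the helper names `composite` and `makeGeneratorInput` are hypothetical and not taken from the PR.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// One RGB pixel with channels in [0, 1].
using RGB = std::array<float, 3>;

// Hypothetical helper: compositing equation I = alpha * F + (1 - alpha) * B.
RGB composite(const RGB& F, const RGB& B, float alpha) {
    RGB I{};
    for (std::size_t c = 0; c < 3; ++c) {
        I[c] = alpha * F[c] + (1.0f - alpha) * B[c];
    }
    return I;
}

// Hypothetical helper: stack 3 image channels and 1 trimap channel into a
// flat, pixel-interleaved buffer (R, G, B, T, R, G, B, T, ...).
// Assumed trimap encoding: 0 = background, 0.5 = unknown, 1 = foreground.
std::vector<float> makeGeneratorInput(const std::vector<RGB>& image,
                                      const std::vector<float>& trimap) {
    assert(image.size() == trimap.size()); // one trimap value per pixel
    std::vector<float> input;
    input.reserve(image.size() * 4);
    for (std::size_t i = 0; i < image.size(); ++i) {
        input.push_back(image[i][0]);
        input.push_back(image[i][1]);
        input.push_back(image[i][2]);
        input.push_back(trimap[i]);
    }
    return input;
}
```

For example, compositing pure red over pure blue with `alpha = 0.25` gives the pixel `{0.25, 0, 0.75}`, and two pixels produce an 8-element input.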

The different architecture options are:

| Generator | Discriminator |
|---|---|
| ResNet50 | N-layer PatchGAN discriminator |
| ResNet50 | Pixel PatchGAN discriminator |
| ResNet50 + ASPP module | N-layer PatchGAN discriminator |

N, the number of layers in the PatchGAN discriminator, is 3 by default; there is an option to change its value.
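To give a feel for what N controls: each output unit of an N-layer PatchGAN judges one square patch of the input, and that patch grows with N. The sketch below computes the patch (receptive field) size, assuming the discriminator follows the pix2pix `NLayerDiscriminator` layout (N stride-2 convolutions followed by two stride-1 convolutions, all with 4x4 kernels); this layout is an assumption, not something checked against the PR's code. Under that assumption the default N = 3 gives the familiar 70x70 PatchGAN, while a Pixel discriminator built from 1x1 convolutions judges every pixel on its own.

```cpp
#include <cassert>

// Hypothetical helper: receptive field (side length in pixels) of one output
// unit of an N-layer PatchGAN, ASSUMING the pix2pix layout of nLayers 4x4
// convolutions with stride 2, followed by two 4x4 convolutions with stride 1.
int patchGanReceptiveField(int nLayers) {
    assert(nLayers >= 0);
    const int kernel = 4;
    int rf = 1; // start from a single output unit
    // Walk from the output back to the input: r_in = (r_out - 1) * stride + kernel.
    for (int i = 0; i < 2; ++i) {
        rf = (rf - 1) * 1 + kernel; // the two stride-1 layers nearest the output
    }
    for (int i = 0; i < nLayers; ++i) {
        rf = (rf - 1) * 2 + kernel; // the stride-2 layers
    }
    return rf;
}
```

With this layout, `patchGanReceptiveField(3)` returns 70 and `patchGanReceptiveField(4)` returns 142, so increasing N makes the discriminator judge larger patches.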

The Global sampling method, on the other hand, is a classical computer vision method for matting that does not rely on learning. Unlike other sampling-based matting methods, it uses all available samples in the image, and hence avoids missing good samples.
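The core step of the method can be sketched as follows. For an unknown pixel with color `I`, every pair of a foreground sample `F` and a background sample `B` gives an alpha estimate `alpha = ((I - B) . (F - B)) / |F - B|^2`, and the pair whose composite `alpha * F + (1 - alpha) * B` best reproduces `I` wins. This is a simplified sketch, not the PR's implementation: in the paper the samples are the pixels on the boundaries of the known foreground and background regions, the cost also includes spatial-distance terms, and a randomized search is used instead of checking every pair. The names `estimateAlpha`, `colorCost` and `bestPair` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Color = std::array<double, 3>;

// Hypothetical helper: alpha that best explains I as a mix of F and B,
// i.e. the projection of (I - B) onto (F - B), clamped to [0, 1].
double estimateAlpha(const Color& I, const Color& F, const Color& B) {
    double num = 0.0, den = 0.0;
    for (std::size_t c = 0; c < 3; ++c) {
        num += (I[c] - B[c]) * (F[c] - B[c]);
        den += (F[c] - B[c]) * (F[c] - B[c]);
    }
    if (den == 0.0) return 0.0; // F == B: every alpha gives the same composite
    return std::fmin(1.0, std::fmax(0.0, num / den));
}

// Hypothetical helper: color distortion between I and alpha*F + (1 - alpha)*B.
double colorCost(const Color& I, const Color& F, const Color& B, double alpha) {
    double sum = 0.0;
    for (std::size_t c = 0; c < 3; ++c) {
        const double d = I[c] - (alpha * F[c] + (1.0 - alpha) * B[c]);
        sum += d * d;
    }
    return std::sqrt(sum);
}

struct Match {
    std::size_t fg, bg; // indices of the chosen samples
    double alpha, cost;
};

// Exhaustive search over every foreground/background sample pair
// (color cost only; the paper's spatial terms are omitted).
Match bestPair(const Color& I, const std::vector<Color>& fgSamples,
               const std::vector<Color>& bgSamples) {
    assert(!fgSamples.empty() && !bgSamples.empty());
    Match best{0, 0, 0.0, std::numeric_limits<double>::infinity()};
    for (std::size_t f = 0; f < fgSamples.size(); ++f) {
        for (std::size_t b = 0; b < bgSamples.size(); ++b) {
            const double a = estimateAlpha(I, fgSamples[f], bgSamples[b]);
            const double cost = colorCost(I, fgSamples[f], bgSamples[b], a);
            if (cost < best.cost) best = {f, b, a, cost};
        }
    }
    return best;
}
```

For a mid-gray pixel with white and red foreground samples and black and blue background samples, the white/black pair explains the pixel exactly with `alpha = 0.5`.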

Here I will demonstrate how to use this module and reveal its POWER!

Make sure you build OpenCV with the opencv_contrib modules.

Now we can get the matte of an image, given its trimap, using state-of-the-art methods.

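```cpp
#include <string>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/ximgproc.hpp>

using namespace std;
using namespace cv;
using namespace ximgproc;

int main(int argc, char** argv)
{
    if (argc < 3) return 1; // usage: <program> <image path> <trimap path>
    const string img_path = argv[1];
    const string tri_path = argv[2];

    //Create the object
    GlobalMatting gm;

    //Get the inputs: the image and its trimap
    Mat img = imread(img_path);
    Mat tri = imread(tri_path);

    //Get the matte
    Mat foreground, alpha;
    int niter = 9;
    gm.getMat(img, tri, foreground, alpha, niter);
    return 0;
}
```

The benchmark tests were run on alphamatting.com.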

Global sampling method (average rank on alphamatting.com; lower is better):

| Error type | Original implementation (avg. rank) | This implementation (avg. rank) |
|---|---|---|
| Sum of absolute differences | 31 | 31.3 |
| Mean square error | 28.3 | 29.5 |
| Gradient error | 25 | 26.3 |
| Connectivity error | 28 | 36.3 |

AlphaGAN, for each architecture option:

| Error type | Original implementation | ResNet50 + N-layer PatchGAN | ResNet50 + Pixel PatchGAN | ResNet50 + ASPP module |
|---|---|---|---|---|
| Sum of absolute differences | 11.7 | 42.8 | 43.8 | 53 |
| Mean square error | 15 | 45.8 | 45.6 | 54.2 |
| Gradient error | 14 | 52.9 | 52.7 | 55 |
| Connectivity error | 29.6 | 23.3 | 22.6 | 32.8 |

Steps for creating an alpha matte in GIMP:

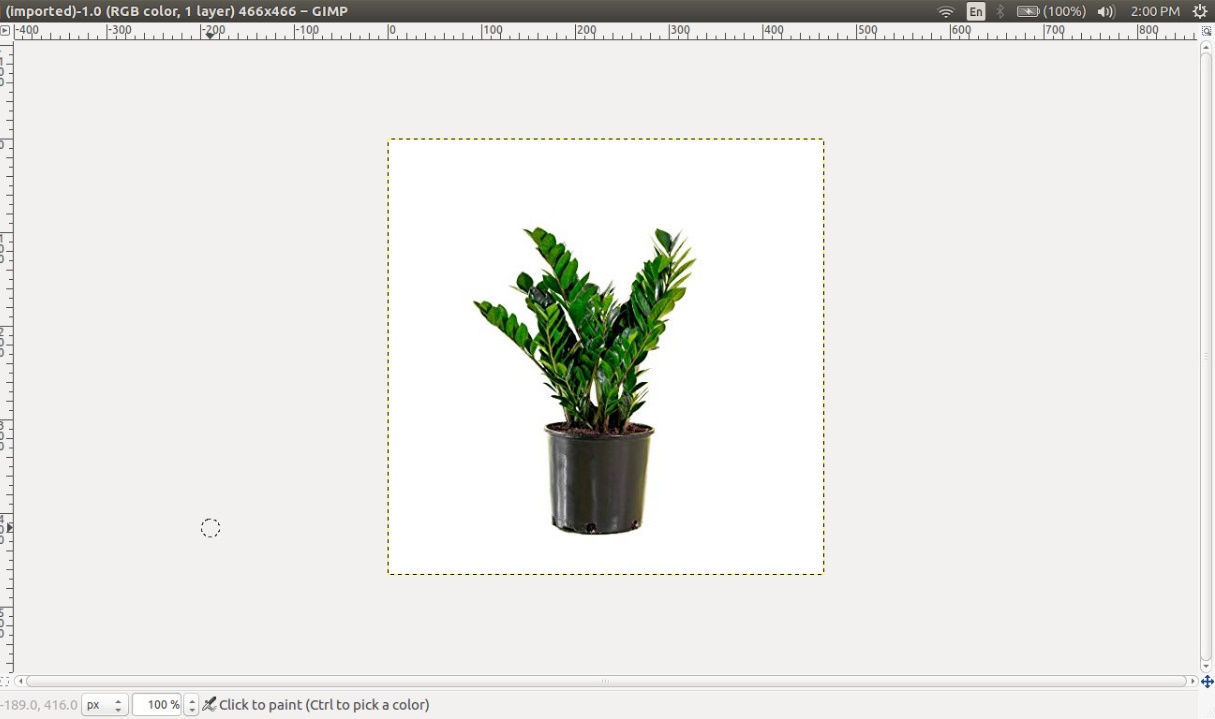

1. Open the image in GIMP, preferably an image with a monochrome background; otherwise it gets really tough to generate a decent matte.

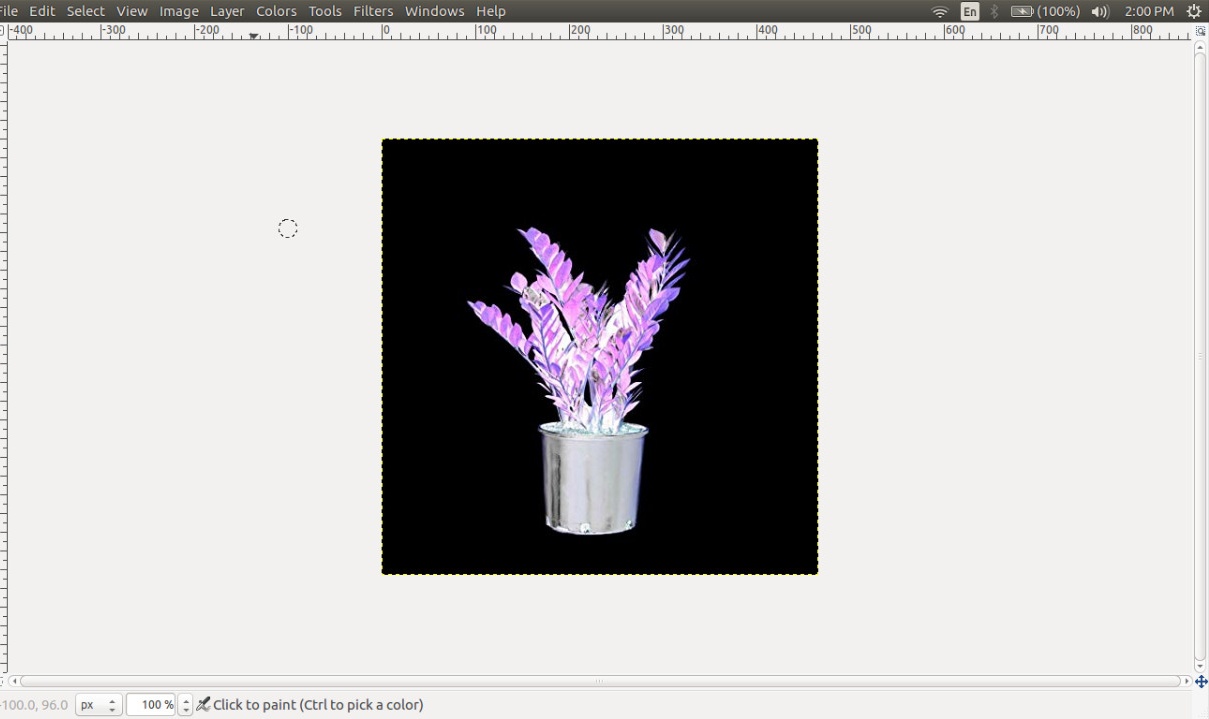

2. Invert the image (Colors->Invert).

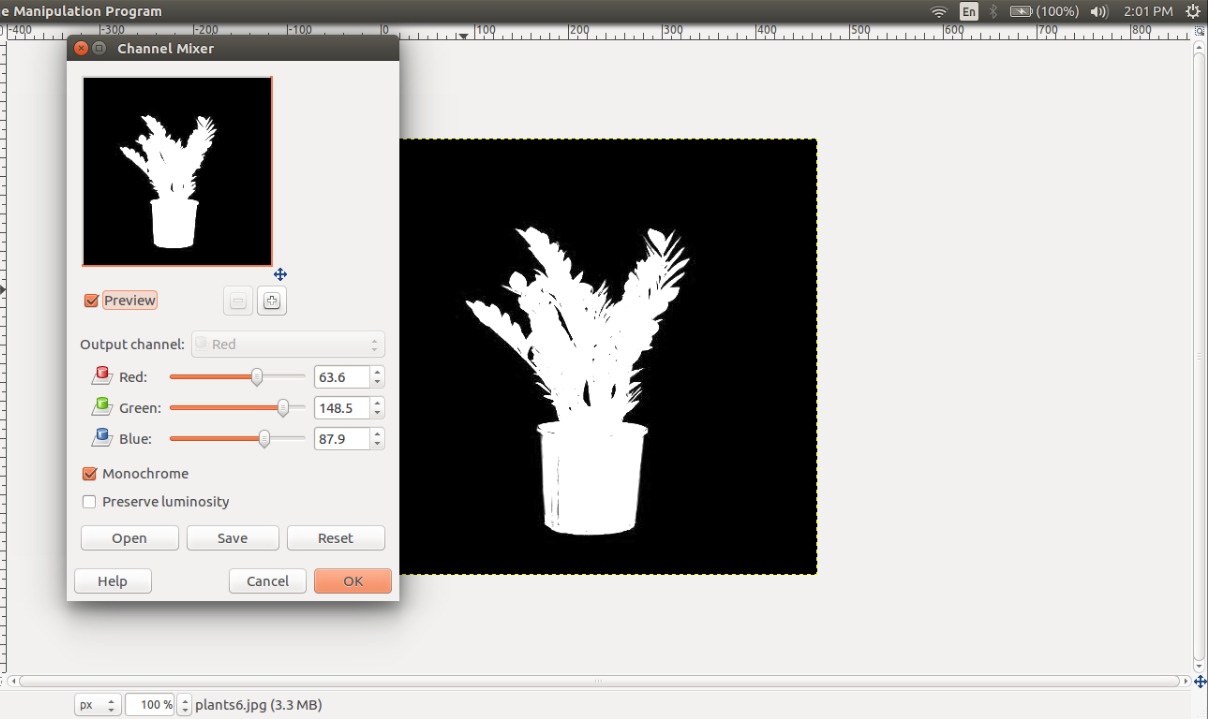

3. Open the Channel Mixer (Colors->Components->Channel Mixer) and select the Monochrome option. Vary the values until the matte looks decent.

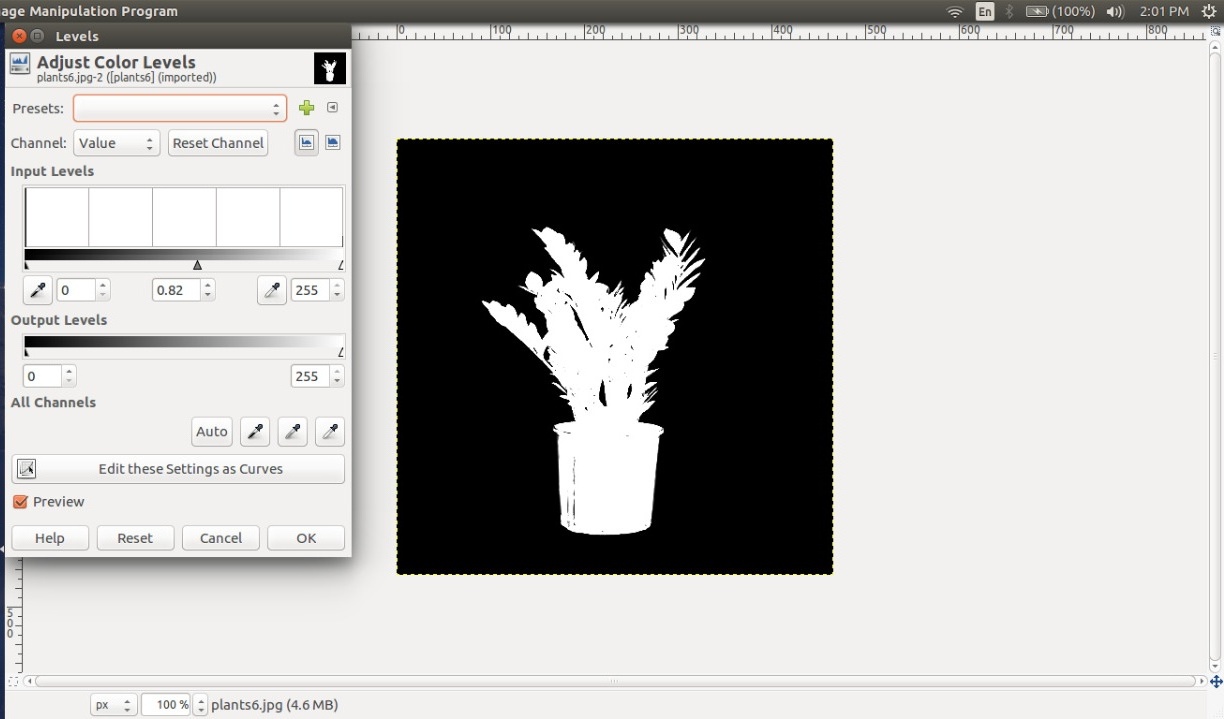

4. Open Levels (Colors->Levels). Vary the levels until the background pixels are fully black.
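The effect of steps 2 to 4 on a single pixel can be roughly approximated as below. This is only a sketch of the idea, not a reimplementation of GIMP, whose filters differ in details such as color-space handling and the gamma control of the Levels tool, which is omitted here. The mixer weights and the input levels `low`/`high` stand for the values tuned by hand in the dialogs, and the function name `mattePixel` is hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: approximate matte value for one RGB pixel (0-255 per
// channel), following the GIMP steps: invert, monochrome channel mix, levels.
std::uint8_t mattePixel(const std::array<std::uint8_t, 3>& rgb,
                        const std::array<double, 3>& mixWeights,
                        double low, double high) {
    assert(high > low);
    // Step 2 (Colors->Invert): a light background becomes dark.
    // Step 3 (Channel Mixer, Monochrome): weighted sum of the inverted channels.
    double gray = 0.0;
    for (std::size_t c = 0; c < 3; ++c) {
        gray += mixWeights[c] * (255.0 - rgb[c]);
    }
    // Step 4 (Levels): map [low, high] linearly to [0, 255] and clamp, so
    // everything at or below `low` becomes fully black (background).
    const double scaled = (gray - low) * 255.0 / (high - low);
    const double out = std::min(255.0, std::max(0.0, scaled));
    return static_cast<std::uint8_t>(std::lround(out));
}
```

With weights `{0.25, 0.5, 0.25}` and levels `[30, 220]`, a white background pixel maps to 0 and a dark foreground pixel such as `{10, 20, 30}` maps to 255.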

Known issues and future work:

1. Get the ONNX model of the AlphaGAN architecture. I could not do this because ONNX currently does not support the max_unpool2d function, which plays an important role in the decoder of the generator. An issue about this was opened recently. Link to issue.

The documentation does not mention any support for the max_unpool2d function. Link to documentation

2. Clearly, the results of this AlphaGAN implementation are not very close to those of the original implementation. One major reason is the dataset: the dataset I created is not of the same quality as the Adobe dataset. The Adobe dataset was built from green-screen images its authors created themselves, whereas in my dataset there are plenty of images where I had to use paint tools to mark individual pixels as foreground, which must cause some drop in accuracy.

2a. The authors report the best result of many experiments, whereas I could only run it with one set of hyperparameters. I will add results with different hyperparameters soon.
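To make the max_unpool2d problem in item 1 more concrete: max unpooling is the companion of max pooling with indices. During encoding, each pooling window records where its maximum came from; during decoding, max_unpool2d writes each value back to that recorded position and fills every other position with zeros. The single-channel 2x2, stride-2 sketch below only illustrates that idea; it is not the deep learning framework's implementation, and the names `Pooled`, `maxPool2x2` and `maxUnpool` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical type: result of 2x2, stride-2 max pooling on an h x w map
// (row-major), with the flat input index each maximum came from.
struct Pooled {
    std::vector<float> values;
    std::vector<std::size_t> indices;
};

// Max pooling that remembers argmax positions (odd trailing rows/columns are dropped).
Pooled maxPool2x2(const std::vector<float>& in, std::size_t h, std::size_t w) {
    assert(in.size() == h * w);
    Pooled out;
    for (std::size_t y = 0; y + 1 < h; y += 2) {
        for (std::size_t x = 0; x + 1 < w; x += 2) {
            std::size_t best = y * w + x;
            for (std::size_t dy = 0; dy < 2; ++dy) {
                for (std::size_t dx = 0; dx < 2; ++dx) {
                    const std::size_t idx = (y + dy) * w + (x + dx);
                    if (in[idx] > in[best]) best = idx;
                }
            }
            out.values.push_back(in[best]);
            out.indices.push_back(best);
        }
    }
    return out;
}

// Max unpooling: scatter each pooled value back to its recorded position;
// all other positions of the h x w output stay zero.
std::vector<float> maxUnpool(const Pooled& p, std::size_t h, std::size_t w) {
    std::vector<float> out(h * w, 0.0f);
    for (std::size_t i = 0; i < p.values.size(); ++i) {
        out[p.indices[i]] = p.values[i];
    }
    return out;
}
```

For the 2x4 input `{1, 5, 2, 0, 3, 4, 8, 7}`, pooling keeps 5 and 8, and unpooling restores `{0, 5, 0, 0, 0, 0, 8, 0}`. The decoder depends on these stored indices, so a converter has to support this operation for the model to be exported.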

[1] Sebastian Lutz, Konstantinos Amplianitis, and Aljoša Smolic. "AlphaGAN: Generative adversarial networks for natural image matting." [arXiv]

[2] Kaiming He, Christoph Rhemann, Carsten Rother, Xiaoou Tang, and Jian Sun. "A global sampling method for alpha matting." In The 24th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2011, Colorado Springs, CO, USA, 20-25 June 2011, pages 2049–2056, 2011. doi: 10.1109/CVPR.2011.5995495.