
| /** | |

| * ABBA Script : performs a cell detection on the root node annotation | |

| * imported from ABBA and restores the child objects after the detection has been performed | |

| * To use and modify like you need / want | |

| * You need to have imported the regions before running this script. See https://gist.github.com/NicoKiaru/723d8e628a3bb03902bb3f0f2f0fa466 for instance | |

| * See https://biop.github.io/ijp-imagetoatlas/ | |

| * Author: Olivier Burri, Nicolas Chiaruttini, BIOP, EPFL | |

| * Date: 2022-01-26 | |

| */ | |

| // setImageType('FLUORESCENCE'); // if your image is fluorescent | |

| // If you just want to clear the detections and keep the previously ABBA imported annotations | |

| clearDetections() | |

| // or, if you want to reset and re-import all annotations from ABBA | |

| // clearAllObjects(); | |

| // qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", true); | |

| def project = getProject() | |

| // We want to run cell detection only once on the entire brain, which means we need to select the Root node. We can find it by name | |

| def root = getAnnotationObjects().find{ it.getName().equals( 'Root' ) } | |

| // Duplicate root node. It is a bit contrived but this is how you can copy an annotation | |

| def regionMeasured = PathObjectTools.transformObject( root, null, true ) | |

| // Temporary name | |

| regionMeasured.setName( "Measured Region" ) | |

| // Need to add it to the current hierarchy | |

| addObject( regionMeasured ) | |

| // So that we can select it | |

| setSelectedObject( regionMeasured ) | |

| // RUN CELL DETECTION | |

| // Modify the line below by inserting your cell detection plugin line | |

| // ********************************************************************* | |

| // ********************************************************************* | |

| runPlugin( 'qupath.imagej.detect.cells.WatershedCellDetection', | |

| '{"detectionImage": "FL CY3", "requestedPixelSizeMicrons": 2.0, "backgroundRadiusMicrons": 8.0, "medianRadiusMicrons": 0.0, "sigmaMicrons": 2.0, "minAreaMicrons": 20.0, "maxAreaMicrons": 400.0, "threshold": 200.0, "watershedPostProcess": true, "cellExpansionMicrons": 0.0, "includeNuclei": true, "smoothBoundaries": true, "makeMeasurements": true}') | |

| // ********************************************************************* | |

| // ********************************************************************* | |

| // We no longer need the object we used for detection. We remove it but keep the child objects, which are the cells | |

| removeObject( regionMeasured, true ) | |

| // We can now add the cells to the original root object | |

| def cells = getDetectionObjects() | |

| root.addPathObjects( cells ) | |

| // Update the view for the user | |

| fireHierarchyUpdate() | |

| // From here, the rest of the script adds to all cells their coordinates in the reference atlas | |

| // to their measurement list | |

| // Get ABBA transform file located in entry path | |

| def targetEntry = getProjectEntry() | |

| def targetEntryPath = targetEntry.getEntryPath() | |

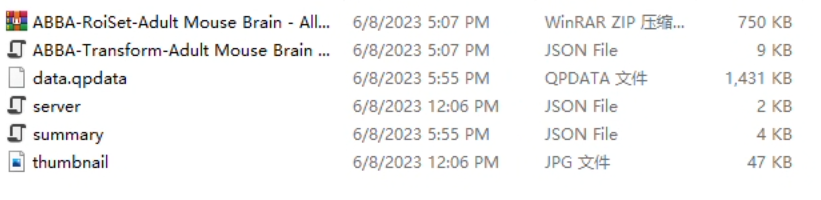

| def fTransform = new File(targetEntryPath.toFile(), "ABBA-Transform-Adult Mouse Brain - Allen Brain Atlas V3p1.json" ) | |

| if ( !fTransform.exists() ) { | |

| logger.error( "ABBA transformation file not found for entry {}", targetEntry ) | |

| return | |

| } | |

| def pixelToCCFTransform = Warpy.getRealTransform( fTransform ).inverse() // Needs the inverse transform | |

| getDetectionObjects().forEach{ detection -> | |

| def ccfCoordinates = new RealPoint(3) | |

| def ml = detection.getMeasurementList() | |

| ccfCoordinates.setPosition( [detection.getROI().getCentroidX(),detection.getROI().getCentroidY(), 0] as double[] ) | |

| // The Z=0 pixel coordinate is automatically transformed to the right atlas position in Z thanks to the fact that the slice | |

| // position is already known by the transform | |

| // This applies the transform in place to cffCoordinates | |

| pixelToCCFTransform.apply(ccfCoordinates, ccfCoordinates) | |

| // Fianlly: Add the coordinates as measurements | |

| ml.addMeasurement("Allen CCFv3 X mm", ccfCoordinates.getDoublePosition(0) ) | |

| ml.addMeasurement("Allen CCFv3 Y mm", ccfCoordinates.getDoublePosition(1) ) | |

| ml.addMeasurement("Allen CCFv3 Z mm", ccfCoordinates.getDoublePosition(2) ) | |

| ml.addMeasurement("Count: Num Spots", 1 ) | |

| } | |

| // imports | |

| import qupath.ext.biop.warpy.* | |

| import net.imglib2.RealPoint | |

| import qupath.lib.measurements.MeasurementList | |

| import qupath.lib.objects.PathObjectTools | |

| import qupath.lib.objects.PathObjects | |

| import qupath.lib.objects.PathCellObject | |

| import qupath.lib.objects.PathObject | |

| import qupath.lib.objects.PathDetectionObject | |

| import qupath.lib.roi.ROIs | |

| import qupath.lib.regions.ImagePlane |

Hi,

I have two more questions. How can I run the detection on only one specific atlas region, such as MOp? I don't know how to edit the script for that. And if I want to use Cellpose for the detection, how do I do that? I hope you can answer.

I've posted a few scripts here:

Maybe they are helpful ?

Yes, it's very helpful. I can now detect cells in the specific region only, using Cellpose. But how can the positions of the detections be transformed into the standard atlas space? Thank you!


clearAllObjects()

removeObjects(getAnnotationObjects(), false) // last argument = keep child objects ?

qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", true);

def myObjects = getAllObjects().findAll{it.getName() == 'MOp'}

selectObjects(myObjects)

import qupath.ext.biop.cellpose.Cellpose2D

// Specify the model name (cyto, nuc, cyto2, omni_bact or a path to your custom model)

def pathModel = 'cyto2'

def cellpose = Cellpose2D.builder( pathModel )

.pixelSize( 0.5 ) // Resolution for detection

.channels( 'DAPI' ) // Select detection channel(s)

// .preprocess( ImageOps.Filters.median(1) ) // List of preprocessing ImageOps to run on the images before exporting them

// .tileSize(2048) // If your GPU can take it, make larger tiles to process fewer of them. Useful for Omnipose

// .cellposeChannels(1,2) // Overwrites the logic of this plugin with these two values. These will be sent directly to --chan and --chan2

// .maskThreshold(-0.2) // Threshold for the mask detection, defaults to 0.0

// .flowThreshold(0.5) // Threshold for the flows, defaults to 0.4

// .diameter(0) // Median object diameter. Set to 0.0 for the bact_omni model or for automatic computation

// .setOverlap(60) // Overlap between tiles (in pixels) that the QuPath Cellpose Extension will extract. Defaults to 2x the diameter or 60 px if the diameter is set to 0

// .invert() // Have cellpose invert the image

// .useOmnipose() // Add the --omni flag to use the omnipose segmentation model

// .excludeEdges() // Clears objects touching the edge of the image (Not of the QuPath ROI)

// .clusterDBSCAN() // Use DBSCAN clustering to avoid over-segmenting long objects

// .cellExpansion(5.0) // Approximate cells based upon nucleus expansion

// .cellConstrainScale(1.5) // Constrain cell expansion using nucleus size

// .classify("My Detections") // PathClass to give newly created objects

.measureShape() // Add shape measurements

.measureIntensity() // Add cell measurements (in all compartments)

// .createAnnotations() // Make annotations instead of detections. This ignores cellExpansion

.useGPU() // Optional: Use the GPU if configured, defaults to CPU only

.build()

// Run detection for the selected objects

def imageData = getCurrentImageData()

def pathObjects = getSelectedObjects()

if (pathObjects.isEmpty()) {

Dialogs.showErrorMessage("Cellpose", "Please select a parent object!")

return

}

cellpose.detectObjects(imageData, pathObjects)

println 'Done!'

removeObjects(getAnnotationObjects(), false) // last argument = keep child objects ?

qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", true);

You can use the script of the documentation (https://biop.github.io/ijp-imagetoatlas/qupath_analysis.html#display-results):

/**

* Computes the centroid coordinates of each detection within the atlas

* then adds these coordinates onto the measurement list.

* Measurements names: "Atlas_X", "Atlas_Y", "Atlas_Z"

*/

def pixelToAtlasTransform =

AtlasTools

.getAtlasToPixelTransform(getCurrentImageData())

.inverse() // pixel to atlas = inverse of atlas to pixel

getDetectionObjects().forEach(detection -> {

RealPoint atlasCoordinates = new RealPoint(3);

MeasurementList ml = detection.getMeasurementList();

atlasCoordinates.setPosition([detection.getROI().getCentroidX(),detection.getROI().getCentroidY(),0] as double[]);

pixelToAtlasTransform.apply(atlasCoordinates, atlasCoordinates);

ml.putMeasurement("Atlas_X", atlasCoordinates.getDoublePosition(0) )

ml.putMeasurement("Atlas_Y", atlasCoordinates.getDoublePosition(1) )

ml.putMeasurement("Atlas_Z", atlasCoordinates.getDoublePosition(2) )

})

import qupath.ext.biop.warpy.Warpy

import net.imglib2.RealPoint

import qupath.lib.measurements.MeasurementList

import qupath.ext.biop.abba.AtlasTools

This should add the coordinates of all detections within the atlas.

Let me know if that works!

Hi, thanks for your script. I ran into this error:

>INFO: Processing complete in 16.02 seconds

>INFO: Tasks completed!

>ERROR: ABBA transformation file not found for entry 449#3_Syt2Ephys_Day6203.vsi - 10x_DAPI, GFP, DiI, Cy5_07

I think this is caused by a missing ABBA transformation file, but I don't know how to solve it.

And there's no error message when you export from Fiji? Are you sure you're looking at the right QuPath project and not a duplicated one?

It is really tricky somehow. I reopened my project, re-exported from ABBA, and re-imported using the code from the documentation:

setImageType('FLUORESCENCE'); clearAllObjects(); qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", false);

Strangely, this time the entry folder shows the ABBA files.

But this time it raises a new error:

ERROR: Cannot invoke "qupath.lib.objects.PathObject.getROI()" because "pathObject" is null in DetectCellsABBA.groovy at line number 19

ERROR: qupath.lib.objects.PathObjectTools.transformObjectImpl(PathObjectTools.java:1255)

This can be solved by changing false to true in this line:

qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", false);
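For context, the NullPointerException above reports that the object handed to PathObjectTools.transformObject was null, which suggests that the lookup for an annotation named 'Root' found nothing. A guard like the sketch below would fail earlier and with a clearer message. It is plain Groovy, with maps standing in for QuPath annotations; findByName is a hypothetical helper, not part of QuPath or ABBA.

```groovy
// Hypothetical helper (not a QuPath or ABBA function): look up an object by name
// and fail with a readable message instead of passing null further down the script.
def findByName(Collection objects, String name) {
    def match = objects.find { it.name == name }
    if (match == null) {
        // List the names that do exist, to make a missing or misspelled region easy to spot
        def available = objects.collect { it.name }.findAll { it != null }
        throw new IllegalStateException("No object named '${name}' found. Available names: ${available}")
    }
    return match
}

// Stand-in data: maps with a 'name' key play the role of annotations here
def annotations = [[name: 'Root'], [name: 'MOp'], [name: null]]
def root = findByName(annotations, 'Root')
println "Found: ${root.name}"
```

Inside a QuPath script the same helper should be callable as findByName(getAnnotationObjects(), 'Root'), since Groovy resolves it.name to getName().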

But when I run the 'DetectCellsABBA.groovy' script again, there are still errors:

ERROR: Error processing Polygon (-1016, 661, 1266, 732)

java.io.IOException: java.lang.InterruptedException

at qupath.lib.images.servers.bioformats.BioFormatsImageServer.readTile(BioFormatsImageServer.java:917)

at qupath.lib.images.servers.AbstractTileableImageServer.lambda$prerequestTiles$2(AbstractTileableImageServer.java:462)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at qupath.lib.images.servers.AbstractTileableImageServer.prerequestTiles(AbstractTileableImageServer.java:464)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:295)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:60)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:863)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:902)

at qupath.imagej.detect.cells.WatershedCellDetection$CellDetector.runDetection(WatershedCellDetection.java:216)

at qupath.lib.plugins.DetectionPluginTools$DetectionRunnable.run(DetectionPluginTools.java:112)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

Caused by null at java.base/java.util.concurrent.locks.ReentrantLock$Sync.lockInterruptibly(Unknown Source)

at java.base/java.util.concurrent.locks.ReentrantLock.lockInterruptibly(Unknown Source)

at java.base/java.util.concurrent.ArrayBlockingQueue.put(Unknown Source)

at qupath.lib.images.servers.bioformats.BioFormatsImageServer$ReaderPool.openImage(BioFormatsImageServer.java:1354)

at qupath.lib.images.servers.bioformats.BioFormatsImageServer.readTile(BioFormatsImageServer.java:915)

at qupath.lib.images.servers.AbstractTileableImageServer.lambda$prerequestTiles$2(AbstractTileableImageServer.java:462)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at qupath.lib.images.servers.AbstractTileableImageServer.prerequestTiles(AbstractTileableImageServer.java:464)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:295)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:60)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:863)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:902)

at qupath.imagej.detect.cells.WatershedCellDetection$CellDetector.runDetection(WatershedCellDetection.java:216)

at qupath.lib.plugins.DetectionPluginTools$DetectionRunnable.run(DetectionPluginTools.java:112)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

INFO: ParallelTileObject (Tile-Processing) (processing time: 0.00 seconds)

ERROR: Error running plugin: java.lang.NullPointerException: Cannot invoke "java.awt.image.BufferedImage.getSampleModel()" because "img" is null

java.util.concurrent.ExecutionException: java.lang.NullPointerException: Cannot invoke "java.awt.image.BufferedImage.getSampleModel()" because "img" is null

at java.base/java.util.concurrent.FutureTask.report(Unknown Source)

at java.base/java.util.concurrent.FutureTask.get(Unknown Source)

at qupath.lib.plugins.AbstractPluginRunner.awaitCompletion(AbstractPluginRunner.java:139)

at qupath.lib.plugins.AbstractPluginRunner.runTasks(AbstractPluginRunner.java:107)

at qupath.lib.gui.PluginRunnerFX.runTasks(PluginRunnerFX.java:98)

at qupath.lib.plugins.AbstractPlugin.runPlugin(AbstractPlugin.java:169)

at qupath.lib.gui.QuPathGUI.runPlugin(QuPathGUI.java:3961)

at qupath.lib.gui.scripting.QPEx.runPlugin(QPEx.java:236)

at qupath.lib.gui.scripting.QPEx.runPlugin(QPEx.java:258)

at org.codehaus.groovy.vmplugin.v8.IndyInterface.fromCache(IndyInterface.java:321)

at QuPathScript.run(QuPathScript:35)

at org.codehaus.groovy.jsr223.GroovyScriptEngineImpl.eval(GroovyScriptEngineImpl.java:331)

at org.codehaus.groovy.jsr223.GroovyScriptEngineImpl.eval(GroovyScriptEngineImpl.java:161)

at qupath.lib.gui.scripting.languages.DefaultScriptLanguage.execute(DefaultScriptLanguage.java:233)

at qupath.lib.gui.scripting.DefaultScriptEditor.executeScript(DefaultScriptEditor.java:1113)

at qupath.lib.gui.scripting.DefaultScriptEditor$3.run(DefaultScriptEditor.java:1478)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

Caused by Cannot invoke "java.awt.image.BufferedImage.getSampleModel()" because "img" is null at qupath.imagej.tools.IJTools.convertToUncalibratedImagePlus(IJTools.java:791)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:864)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:902)

at qupath.imagej.detect.cells.WatershedCellDetection$CellDetector.runDetection(WatershedCellDetection.java:216)

at qupath.lib.plugins.DetectionPluginTools$DetectionRunnable.run(DetectionPluginTools.java:112)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

ERROR: Error processing Geometry (128, 319, 3045, 1074)

java.io.IOException: java.lang.InterruptedException

at qupath.lib.images.servers.bioformats.BioFormatsImageServer.readTile(BioFormatsImageServer.java:917)

at qupath.lib.images.servers.AbstractTileableImageServer.lambda$prerequestTiles$2(AbstractTileableImageServer.java:462)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at qupath.lib.images.servers.AbstractTileableImageServer.prerequestTiles(AbstractTileableImageServer.java:464)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:295)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:60)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:863)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:902)

at qupath.imagej.detect.cells.WatershedCellDetection$CellDetector.runDetection(WatershedCellDetection.java:216)

at qupath.lib.plugins.DetectionPluginTools$DetectionRunnable.run(DetectionPluginTools.java:112)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

Caused by null at java.base/java.util.concurrent.locks.ReentrantLock$Sync.lockInterruptibly(Unknown Source)

at java.base/java.util.concurrent.locks.ReentrantLock.lockInterruptibly(Unknown Source)

at java.base/java.util.concurrent.ArrayBlockingQueue.put(Unknown Source)

at qupath.lib.images.servers.bioformats.BioFormatsImageServer$ReaderPool.openImage(BioFormatsImageServer.java:1354)

at qupath.lib.images.servers.bioformats.BioFormatsImageServer.readTile(BioFormatsImageServer.java:915)

at qupath.lib.images.servers.AbstractTileableImageServer.lambda$prerequestTiles$2(AbstractTileableImageServer.java:462)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at qupath.lib.images.servers.AbstractTileableImageServer.prerequestTiles(AbstractTileableImageServer.java:464)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:295)

at qupath.lib.images.servers.AbstractTileableImageServer.readRegion(AbstractTileableImageServer.java:60)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:863)

at qupath.imagej.tools.IJTools.convertToImagePlus(IJTools.java:902)

at qupath.imagej.detect.cells.WatershedCellDetection$CellDetector.runDetection(WatershedCellDetection.java:216)

at qupath.lib.plugins.DetectionPluginTools$DetectionRunnable.run(DetectionPluginTools.java:112)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

INFO: ParallelTileObject (Tile-Processing) (processing time: 0.04 seconds)

INFO: Processing complete in 0.14 seconds

INFO: Completed with error java.lang.NullPointerException: Cannot invoke "java.awt.image.BufferedImage.getSampleModel()" because "img" is null

ERROR: ABBA transformation file not found for entry 449#3_Syt2Ephys_Day6205.vsi - 10x_DAPI, GFP, DiI, Cy5_08

Hi, I found some mistakes in my code.

1. When importing the ABBA annotations with qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", false); the false should be changed to true.

2. During cell detection: in my case, "ABBA-Transform-Adult Mouse Brain - Allen Brain Atlas V3.json" should be changed to "ABBA-Transform-Adult Mouse Brain - Allen Brain Atlas V3p1.json".

3. I ran scripts 0, 1 and 2 in order and ran into some problems, but after I simply merged all the scripts into one and deleted some of the code, things went more smoothly.
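Regarding point 2, the transform file name seems to depend on the atlas version, so a hard-coded name can silently miss the file. One possible way around that is to search the entry folder for any file matching the "ABBA-Transform-*.json" pattern seen in this thread. The sketch below is plain Groovy; findAbbaTransformFile is a hypothetical helper, and the assumption that every transform file follows this naming pattern comes only from the two names quoted above.

```groovy
import java.nio.file.Files

// Hypothetical helper: return the first file in entryDir whose name starts with
// 'ABBA-Transform-' and ends with '.json', or null if there is none.
File findAbbaTransformFile(File entryDir) {
    def candidates = (entryDir.listFiles() ?: []).findAll { File f ->
        f.isFile() && f.name.startsWith('ABBA-Transform-') && f.name.endsWith('.json')
    }
    // Sort by name so the choice is deterministic if several files match
    return candidates ? candidates.sort { it.name }.first() : null
}

// Demo on a temporary folder standing in for a QuPath entry folder
def demoDir = Files.createTempDirectory('abba-entry').toFile()
new File(demoDir, 'ABBA-Transform-Adult Mouse Brain - Allen Brain Atlas V3p1.json').text = '{}'
new File(demoDir, 'data.qpdata').text = ''

def found = findAbbaTransformFile(demoDir)
println "Transform file: ${found?.name}"
demoDir.deleteDir()
```

In the detection script this could replace the new File(...) line, for example def fTransform = findAbbaTransformFile(targetEntryPath.toFile()), followed by a null check instead of the exists() check.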

My workflow code looks like this. It is basically based on NicoKiaru's scripts:

// imports

import qupath.ext.biop.warpy.*

import net.imglib2.RealPoint

import qupath.lib.measurements.MeasurementList

import qupath.lib.objects.PathObjectTools

// import annotation from ABBA

setImageType('FLUORESCENCE');

clearAllObjects();

qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", true);

// cell detection

clearDetections()

def project = getProject()

// We want to run cell detection only once on the entire brain, which means we need to select the Root node. We can find it by name

def root = getAnnotationObjects().find{ it.getName().equals( 'Root' ) }

// Duplicate root node. It is a bit contrived but this is how you can copy an annotation

def regionMeasured = PathObjectTools.transformObject( root, null, true )

// Temporary name

regionMeasured.setName( "Measured Region" )

// Need to add it to the current hierarchy

addObject( regionMeasured )

// So that we can select it

setSelectedObject( regionMeasured )

// RUN CELL DETECTION

// Modify the line below by inserting your cell detection plugin line

// *********************************************************************

// *********************************************************************

runPlugin( 'qupath.imagej.detect.cells.WatershedCellDetection',

'{"detectionImage": "Cy3", "requestedPixelSizeMicrons": 1.0, "backgroundRadiusMicrons": 8.0, "medianRadiusMicrons": 0.0, "sigmaMicrons": 2.0, "minAreaMicrons": 20.0, "maxAreaMicrons": 200.0, "threshold": 50.0, "watershedPostProcess": true, "cellExpansionMicrons": 10.0, "includeNuclei": true, "smoothBoundaries": true, "makeMeasurements": true}')

// *********************************************************************

// *********************************************************************

// re-loading annotation

qupath.ext.biop.abba.AtlasTools.loadWarpedAtlasAnnotations(getCurrentImageData(), "acronym", true);

// Get ABBA transform file located in entry path

def targetEntry = getProjectEntry()

def targetEntryPath = targetEntry.getEntryPath()

def fTransform = new File(targetEntryPath.toFile(), "ABBA-Transform-Adult Mouse Brain - Allen Brain Atlas V3p1.json" )

if ( !fTransform.exists() ) {

logger.error( "ABBA transformation file not found for entry {}", targetEntry )

return

}

def pixelToCCFTransform = Warpy.getRealTransform( fTransform ).inverse() // Needs the inverse transform

getDetectionObjects().forEach{ detection ->

def ccfCoordinates = new RealPoint(3)

def ml = detection.getMeasurementList()

ccfCoordinates.setPosition( [detection.getROI().getCentroidX(),detection.getROI().getCentroidY(), 0] as double[] )

// The Z=0 pixel coordinate is automatically transformed to the right atlas position in Z thanks to the fact that the slice

// position is already known by the transform

// This applies the transform in place to ccfCoordinates

pixelToCCFTransform.apply(ccfCoordinates, ccfCoordinates)

// Finally: Add the coordinates as measurements

ml.addMeasurement("Allen CCFv3 X mm", ccfCoordinates.getDoublePosition(0) )

ml.addMeasurement("Allen CCFv3 Y mm", ccfCoordinates.getDoublePosition(1) )

ml.addMeasurement("Allen CCFv3 Z mm", ccfCoordinates.getDoublePosition(2) )

ml.addMeasurement("Count: Num Spots", 1 )

}

// To execute before:

// 0 - https://gist.github.com/NicoKiaru/723d8e628a3bb03902bb3f0f2f0fa466

// 1 - https://gist.github.com/NicoKiaru/f45f56e3ff2d1fb708821c110fbdee62

// save annotations

File directory = new File(buildFilePath(PROJECT_BASE_DIR,'export'));

directory.mkdirs();

def imageData = getCurrentImageData();

imageName = ServerTools.getDisplayableImageName(imageData.getServer())

def filename = imageName//.take(imageName.indexOf('.'))

saveMeasurements(

imageData,

PathDetectionObject.class,

buildFilePath(directory.toString(),filename + '.tsv'),

"Class",

"Allen CCFv3 X mm",

"Allen CCFv3 Y mm",

"Allen CCFv3 Z mm",

"Count: Num Spots"

);

import qupath.lib.objects.PathObjects

import qupath.lib.objects.PathObject

import qupath.lib.objects.PathDetectionObject

import qupath.lib.objects.PathCellObject

import qupath.lib.roi.ROIs

import qupath.lib.regions.ImagePlane

import qupath.lib.measurements.MeasurementList

import qupath.lib.objects.PathCellObject

Thanks a lot @AthiemoneZero, I'll update my script based on your modifications. Indeed, some parts are not up to date.

Hello Cindy,

What do you mean by inverse? Mind the weird XYZ convention of the Allen Brain Atlas.

You can always switch between the forward and the inverse transform by removing .inverse() in the line:

def pixelToCCFTransform = Warpy.getRealTransform( fTransform ).inverse()
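To illustrate what keeping or removing .inverse() changes, here is a toy sketch in plain Groovy. ToyTransform is a made-up class (a uniform scale plus an offset) and is not the Warpy or ImgLib2 API; the numbers are arbitrary. It only shows that the forward direction maps atlas coordinates to pixel coordinates, and that the inverse maps them back.

```groovy
// Toy stand-in for a real transform: p -> scale * p + offset, with an explicit inverse.
// This is NOT the Warpy / ImgLib2 API, only an illustration of forward vs inverse.
class ToyTransform {
    double scale
    double[] offset

    double[] apply(double[] p) {
        double[] out = new double[p.length]
        for (int i = 0; i < p.length; i++) {
            out[i] = scale * p[i] + offset[i]
        }
        return out
    }

    // Inverse of y = s * x + t is x = (1 / s) * y - t / s
    ToyTransform inverse() {
        double[] invOffset = new double[offset.length]
        for (int i = 0; i < offset.length; i++) {
            invOffset[i] = -offset[i] / scale
        }
        return new ToyTransform(scale: 1.0d / scale, offset: invOffset)
    }
}

// Forward direction in this toy example: atlas coordinates -> pixel coordinates
def atlasToPixel = new ToyTransform(scale: 100.0d, offset: [50.0d, 20.0d, 0.0d] as double[])
// What the detection script needs: pixel coordinates -> atlas coordinates
def pixelToAtlas = atlasToPixel.inverse()

def atlasPoint = [1.5d, 2.0d, 3.0d] as double[]
def pixelPoint = atlasToPixel.apply(atlasPoint)
def roundTrip = pixelToAtlas.apply(pixelPoint)
println "pixel: ${pixelPoint}, back in atlas: ${roundTrip}"
```

If the coordinates written to the measurements look like pixel values rather than atlas values, the direction of the transform is the first thing to check.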