
| ''' | |

| Using Bottleneck Features for Multi-Class Classification in Keras | |

| We use this technique to build powerful (high accuracy without overfitting) Image Classification systems with a small | |

| amount of training data. | |

| The full tutorial to get this code working can be found at the "Codes of Interest" Blog at the following link, | |

| https://www.codesofinterest.com/2017/08/bottleneck-features-multi-class-classification-keras.html | |

| Please go through the tutorial before attempting to run this code, as it explains how to setup your training data. | |

| The code was tested on Python 3.5, with the following library versions, | |

| Keras 2.0.6 | |

| TensorFlow 1.2.1 | |

| OpenCV 3.2.0 | |

| This should work with Theano as well, but untested. | |

| ''' | |

| import numpy as np | |

| from keras.preprocessing.image import ImageDataGenerator, img_to_array, load_img | |

| from keras.models import Sequential | |

| from keras.layers import Dropout, Flatten, Dense | |

| from keras import applications | |

| from keras.utils.np_utils import to_categorical | |

| import matplotlib.pyplot as plt | |

| import math | |

| import cv2 | |

| # dimensions of our images. | |

| img_width, img_height = 224, 224 | |

| top_model_weights_path = 'bottleneck_fc_model.h5' | |

| train_data_dir = 'data/train' | |

| validation_data_dir = 'data/validation' | |

| # number of epochs to train top model | |

| epochs = 50 | |

| # batch size used by flow_from_directory and predict_generator | |

| batch_size = 16 | |

| def save_bottlebeck_features(): | |

| # build the VGG16 network | |

| model = applications.VGG16(include_top=False, weights='imagenet') | |

| datagen = ImageDataGenerator(rescale=1. / 255) | |

| generator = datagen.flow_from_directory( | |

| train_data_dir, | |

| target_size=(img_width, img_height), | |

| batch_size=batch_size, | |

| class_mode=None, | |

| shuffle=False) | |

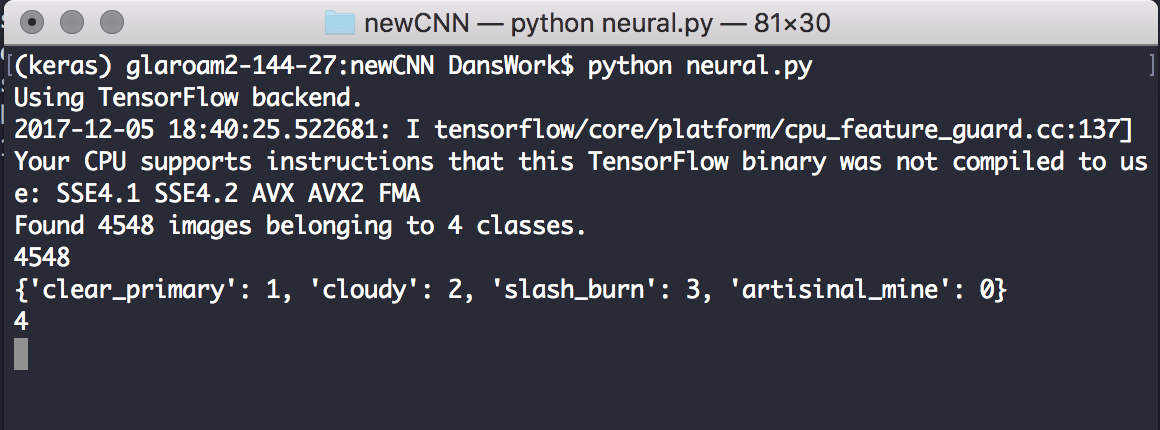

| print(len(generator.filenames)) | |

| print(generator.class_indices) | |

| print(len(generator.class_indices)) | |

| nb_train_samples = len(generator.filenames) | |

| num_classes = len(generator.class_indices) | |

| predict_size_train = int(math.ceil(nb_train_samples / batch_size)) | |

| bottleneck_features_train = model.predict_generator( | |

| generator, predict_size_train) | |

| np.save('bottleneck_features_train.npy', bottleneck_features_train) | |

| generator = datagen.flow_from_directory( | |

| validation_data_dir, | |

| target_size=(img_width, img_height), | |

| batch_size=batch_size, | |

| class_mode=None, | |

| shuffle=False) | |

| nb_validation_samples = len(generator.filenames) | |

| predict_size_validation = int( | |

| math.ceil(nb_validation_samples / batch_size)) | |

| bottleneck_features_validation = model.predict_generator( | |

| generator, predict_size_validation) | |

| np.save('bottleneck_features_validation.npy', | |

| bottleneck_features_validation) | |

| def train_top_model(): | |

| datagen_top = ImageDataGenerator(rescale=1. / 255) | |

| generator_top = datagen_top.flow_from_directory( | |

| train_data_dir, | |

| target_size=(img_width, img_height), | |

| batch_size=batch_size, | |

| class_mode='categorical', | |

| shuffle=False) | |

| nb_train_samples = len(generator_top.filenames) | |

| num_classes = len(generator_top.class_indices) | |

| # save the class indices to use later in predictions | |

| np.save('class_indices.npy', generator_top.class_indices) | |

| # load the bottleneck features saved earlier | |

| train_data = np.load('bottleneck_features_train.npy') | |

| # get the class labels for the training data, in the original order | |

| train_labels = generator_top.classes | |

| # https://github.com/fchollet/keras/issues/3467 | |

| # convert the training labels to categorical vectors | |

| train_labels = to_categorical(train_labels, num_classes=num_classes) | |

| generator_top = datagen_top.flow_from_directory( | |

| validation_data_dir, | |

| target_size=(img_width, img_height), | |

| batch_size=batch_size, | |

| class_mode=None, | |

| shuffle=False) | |

| nb_validation_samples = len(generator_top.filenames) | |

| validation_data = np.load('bottleneck_features_validation.npy') | |

| validation_labels = generator_top.classes | |

| validation_labels = to_categorical( | |

| validation_labels, num_classes=num_classes) | |

| model = Sequential() | |

| model.add(Flatten(input_shape=train_data.shape[1:])) | |

| model.add(Dense(256, activation='relu')) | |

| model.add(Dropout(0.5)) | |

| model.add(Dense(num_classes, activation='sigmoid')) | |

| model.compile(optimizer='rmsprop', | |

| loss='categorical_crossentropy', metrics=['accuracy']) | |

| history = model.fit(train_data, train_labels, | |

| epochs=epochs, | |

| batch_size=batch_size, | |

| validation_data=(validation_data, validation_labels)) | |

| model.save_weights(top_model_weights_path) | |

| (eval_loss, eval_accuracy) = model.evaluate( | |

| validation_data, validation_labels, batch_size=batch_size, verbose=1) | |

| print("[INFO] accuracy: {:.2f}%".format(eval_accuracy * 100)) | |

| print("[INFO] Loss: {}".format(eval_loss)) | |

| plt.figure(1) | |

| # summarize history for accuracy | |

| plt.subplot(211) | |

| plt.plot(history.history['acc']) | |

| plt.plot(history.history['val_acc']) | |

| plt.title('model accuracy') | |

| plt.ylabel('accuracy') | |

| plt.xlabel('epoch') | |

| plt.legend(['train', 'test'], loc='upper left') | |

| # summarize history for loss | |

| plt.subplot(212) | |

| plt.plot(history.history['loss']) | |

| plt.plot(history.history['val_loss']) | |

| plt.title('model loss') | |

| plt.ylabel('loss') | |

| plt.xlabel('epoch') | |

| plt.legend(['train', 'test'], loc='upper left') | |

| plt.show() | |

| def predict(): | |

| # load the class_indices saved in the earlier step | |

| class_dictionary = np.load('class_indices.npy').item() | |

| num_classes = len(class_dictionary) | |

| # add the path to your test image below | |

| image_path = 'path/to/your/test_image' | |

| orig = cv2.imread(image_path) | |

| print("[INFO] loading and preprocessing image...") | |

| image = load_img(image_path, target_size=(224, 224)) | |

| image = img_to_array(image) | |

| # important! otherwise the predictions will be '0' | |

| image = image / 255 | |

| image = np.expand_dims(image, axis=0) | |

| # build the VGG16 network | |

| model = applications.VGG16(include_top=False, weights='imagenet') | |

| # get the bottleneck prediction from the pre-trained VGG16 model | |

| bottleneck_prediction = model.predict(image) | |

| # build top model | |

| model = Sequential() | |

| model.add(Flatten(input_shape=bottleneck_prediction.shape[1:])) | |

| model.add(Dense(256, activation='relu')) | |

| model.add(Dropout(0.5)) | |

| model.add(Dense(num_classes, activation='sigmoid')) | |

| model.load_weights(top_model_weights_path) | |

| # use the bottleneck prediction on the top model to get the final | |

| # classification | |

| class_predicted = model.predict_classes(bottleneck_prediction) | |

| probabilities = model.predict_proba(bottleneck_prediction) | |

| inID = class_predicted[0] | |

| inv_map = {v: k for k, v in class_dictionary.items()} | |

| label = inv_map[inID] | |

| # get the prediction label | |

| print("Image ID: {}, Label: {}".format(inID, label)) | |

| # display the predictions with the image | |

| cv2.putText(orig, "Predicted: {}".format(label), (10, 30), | |

| cv2.FONT_HERSHEY_PLAIN, 1.5, (43, 99, 255), 2) | |

| cv2.imshow("Classification", orig) | |

| cv2.waitKey(0) | |

| cv2.destroyAllWindows() | |

| save_bottlebeck_features() | |

| train_top_model() | |

| predict() | |

| cv2.destroyAllWindows() |

I am getting an error saying that the number of input samples is not equal to the number of target samples, in the validation data section on line 140.

Can you please help me out?

@bluedistro That means the number of labels does not match between your train and validation data.
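Another thing worth checking for this error is whether the saved bottleneck features have as many rows as there are labels. One possible cause, assuming the script is run on Python 2 rather than the Python 3.5 it was tested on, is that `nb_validation_samples / batch_size` becomes integer division, so `math.ceil` no longer rounds up and the last partial batch is never predicted. A minimal sketch of that arithmetic (the helper names `predict_steps` and `samples_covered` are made up for illustration, and the sample count is just an example):

```python
import math


def predict_steps(num_samples, batch_size):
    """Batches needed so the generator yields every sample exactly once."""
    # float() forces true division, so the result is the same on Python 2 and 3.
    return int(math.ceil(num_samples / float(batch_size)))


def samples_covered(num_samples, batch_size, steps):
    """How many samples `steps` batches cover from an unshuffled generator."""
    return min(num_samples, steps * batch_size)


num_samples, batch_size = 835, 16          # example numbers only
good_steps = predict_steps(num_samples, batch_size)
floored_steps = num_samples // batch_size  # what integer division would give

print(good_steps, samples_covered(num_samples, batch_size, good_steps))
print(floored_steps, samples_covered(num_samples, batch_size, floored_steps))
```

If the second line reports fewer samples than the number of files in the validation folder, the feature array and the label array will have different lengths and `model.fit` will reject them.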

Epoch 12/50

6680/6680 [==============================] - 9s 1ms/step - loss: 3.7119 - acc: 0.1298 - val_loss: 3.9675 - val_acc: 0.1593

Epoch 13/50

6680/6680 [==============================] - 9s 1ms/step - loss: 3.6549 - acc: 0.1395 - val_loss: 3.7386 - val_acc: 0.1581

Epoch 14/50

6680/6680 [==============================] - 9s 1ms/step - loss: 3.6398 - acc: 0.1440 - val_loss: 3.8301 - val_acc: 0.1545

Epoch 15/50

6680/6680 [==============================] - 9s 1ms/step - loss: nan - acc: 0.1337 - val_loss: nan - val_acc: 0.0096

Epoch 16/50

6680/6680 [==============================] - 9s 1ms/step - loss: nan - acc: 0.0096 - val_loss: nan - val_acc: 0.0096

Epoch 17/50

6680/6680 [==============================] - 10s 2ms/step - loss: nan - acc: 0.0096 - val_loss: nan - val_acc: 0.0096

Epoch 18/50

6680/6680 [==============================] - 10s 1ms/step - loss: nan - acc: 0.0096 - val_loss: nan - val_acc: 0.0096

Epoch 19/50

6680/6680 [==============================] - 10s 2ms/step - loss: nan - acc: 0.0096 - val_loss: nan - val_acc: 0.0096

Epoch 20/50

4128/6680 [=================>............] - ETA: 4s - loss: nan - acc: 0.0099

Sir, why am I getting loss = nan? Any reason?

@MrNakum increase your data size. The GD has already reached an optimum and can cause overfitting.

@2193860C, how long does it stay like that?

If running on CPU, and depending on the size of your training set, the predict generator for training can take half an hour or more.

Try setting verbose=1 in the predict generator to see whether it's running or stuck,

bottleneck_features_train = model.predict_generator( generator, predict_size_train, verbose=1)

This approach surely doesn't seem to work. I get the following output.

Train on 6680 samples, validate on 835 samples

Epoch 1/50

6600/6680 [============================>.] - ETA: 0s - loss: 15.8788 - acc: 0.0117

Epoch 00001: val_loss improved from inf to 15.60484, saving model to saved_models/weights_aug_vgg19.hd5

6680/6680 [==============================] - 2s 275us/step - loss: 15.8778 - acc: 0.0117 - val_loss: 15.6048 - val_acc: 0.0263

Epoch 2/50

6460/6680 [============================>.] - ETA: 0s - loss: 15.7413 - acc: 0.0204

Epoch 00002: val_loss did not improve

6680/6680 [==============================] - 2s 234us/step - loss: 15.7416 - acc: 0.0205 - val_loss: 15.6860 - val_acc: 0.0228

Epoch 3/50

6460/6680 [============================>.] - ETA: 0s - loss: 15.6772 - acc: 0.0235

Epoch 00003: val_loss improved from 15.60484 to 15.53631, saving model to saved_models/weights_aug_vgg19.hd5

6680/6680 [==============================] - 2s 237us/step - loss: 15.6807 - acc: 0.0234 - val_loss: 15.5363 - val_acc: 0.0311

It is clear from the output that train_labels are not correct when we augment the data in this way.

I think that is because we call flow_from_directory separately for train_data and train_labels. They should be generated together so that the labels are correct for the data.

Shouldn't the last layer's activation be 'softmax' for multiclass logistic regression?

Yes, that's right. Use softmax for multiclass classification.
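To illustrate the difference, here is a small standard-library sketch (not taken from the gist; the logits are arbitrary example values). Softmax normalizes the scores across classes so they sum to 1, which is what `categorical_crossentropy` expects, while sigmoid scores each class independently:

```python
import math


def sigmoid(logits):
    """Squash each score independently into (0, 1)."""
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def softmax(logits):
    """Turn scores into a probability distribution over the classes."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # arbitrary example scores for three classes

print("sigmoid sum:", sum(sigmoid(logits)))  # not constrained to 1
print("softmax sum:", sum(softmax(logits)))  # 1, up to rounding
```

Both functions rank the classes in the same order, so the predicted class is unchanged; what differs is whether the outputs can be read as class probabilities.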

For some reason, during model fit it does not train on all images. If the batch size is 16, it takes every 16th image and not all images. Can you suggest what could be wrong? I have checked the code and it is exactly the same.

@satendra929 What makes you feel that it's skipping the in-between images and considering only every 16th image?

This is the output with verbose=1:

Epoch 5/5

16/120 [===>..........................] - ETA: 1s - loss: 0.6931 - acc: 0.3750

32/120 [=======>......................] - ETA: 0s - loss: 0.6931 - acc: 0.5312

48/120 [===========>..................] - ETA: 0s - loss: 0.6931 - acc: 0.4792

64/120 [===============>..............] - ETA: 0s - loss: 0.6931 - acc: 0.4688

80/120 [===================>..........] - ETA: 0s - loss: 0.6931 - acc: 0.4875

96/120 [=======================>......] - ETA: 0s - loss: 0.6931 - acc: 0.4688

112/120 [===========================>..] - ETA: 0s - loss: 0.6931 - acc: 0.4911

120/120 [==============================] - 2s 13ms/step - loss: 0.6931 - acc: 0.5000 - val_loss: 0.6931 - val_acc: 0.5000

@adimyth See how it's picking every 16th image in the set of 120 images. And if I set the batch size to 5 it will be the 5th, 10th and so on. I cannot figure out what's causing this.
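For what it's worth, the number at the start of each progress line is a running total of samples processed so far in the epoch, not the index of a single image, so it advances by one batch per update. A minimal sketch of that arithmetic (the helper name `progress_counts` is made up for illustration):

```python
def progress_counts(num_samples, batch_size):
    """Cumulative sample counts shown after each batch of one epoch."""
    counts = []
    seen = 0
    while seen < num_samples:
        # The last batch may be smaller than batch_size.
        seen = min(seen + batch_size, num_samples)
        counts.append(seen)
    return counts


print(progress_counts(120, 16))
print(progress_counts(120, 5)[:3])
```

For 120 samples and a batch size of 16 this gives 16, 32, ..., 112, 120, the same sequence as in the log above, with every image contributing to one of the batches.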

Hi, Great tutorial on the bottleneck features. When I come to the prediction stage and I try to load the trained weights, I am getting the following error:

ValueError: Dimension 0 in both shapes must be equal, but are 25088 and 8192. Shapes are [25088,256] and [8192,256]. for 'Assign_26' (op: 'Assign') with input shapes: [25088,256], [8192,256].

Does anybody have any fixes for this issue? Any help would be greatly appreciated

Many thanks

@sur-sakthy Check the image dimensions you are using throughout the code. The code above takes width and height as 224. If you have changed them in one place, change them everywhere.
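The two numbers in the error can be reproduced by hand. VGG16 without its top layers halves the width and height five times (once per max-pooling layer) and ends with 512 channels, so the flattened feature length depends only on the input size. A small sketch, where `vgg16_bottleneck_size` is a hypothetical helper and 128x128 is just one input size that happens to give 8192:

```python
def vgg16_bottleneck_size(width, height, channels=512, num_pools=5):
    """Flattened length of VGG16 (include_top=False) output for an input size."""
    for _ in range(num_pools):
        # Each 2x2 max-pooling layer halves both dimensions, rounding down.
        width //= 2
        height //= 2
    return width * height * channels


print(vgg16_bottleneck_size(224, 224))  # 7 * 7 * 512
print(vgg16_bottleneck_size(128, 128))  # 4 * 4 * 512
```

So weights trained on 224x224 inputs expect a first Dense layer of shape [25088, 256], and they cannot be loaded into a top model that was built from a smaller image.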

Hi, I have followed the tutorial and found it very helpful. Thanks.

The problem is that the predict method consumes more and more memory when it is called for many unseen images. I guess the reason is that the model is built again on every call to the predict function.

Is there a way to predict the label of unseen images without re-building the model?

Or to clear out the memory after a successful prediction?
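One common pattern is to build the models once and reuse them for every image. The sketch below shows only the caching idea with a stand-in builder, so it runs without Keras; `ModelCache` and `build_stub_model` are hypothetical names, and in the gist the builder would contain the VGG16 and top-model construction that currently sits inside `predict()`:

```python
class ModelCache(object):
    """Builds an expensive object on first use and reuses it afterwards."""

    def __init__(self, builder):
        self._builder = builder
        self._model = None

    def get(self):
        if self._model is None:
            self._model = self._builder()
        return self._model


build_calls = []


def build_stub_model():
    # Stand-in for the expensive step: creating the networks and loading weights.
    build_calls.append(1)
    return lambda image_path: "label-for-" + image_path


cache = ModelCache(build_stub_model)
for path in ["a.png", "b.png", "c.png"]:
    print(cache.get()(path))

print("builds:", len(build_calls))
```

If the model really does have to be rebuilt between predictions, calling `keras.backend.clear_session()` before rebuilding is the usual way to release the old graph on the TensorFlow backend.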

Hi,

How can we use the train_test_split function from the scikit-learn utilities in the part where we load the training set and the validation set? (http://scikit-learn.org/stable/modules/generated/sklearn.model_selection.train_test_split.html). I want the code to split the two image sets instead of doing it manually, in order to avoid overfitting problems.
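Since `flow_from_directory` reads images from class sub-folders, one option is to split the file names per class first and then copy them into `data/train` and `data/validation`. The sketch below uses only the standard library as a stand-in for scikit-learn; `split_per_class` and the file names are made up for illustration, and `train_test_split` with its `test_size`, `random_state` and `stratify` arguments could do the same job on a flat list of files and labels:

```python
import random


def split_per_class(files_by_class, validation_fraction=0.2, seed=42):
    """Split each class's files into train and validation lists."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, validation = {}, {}
    for label, files in files_by_class.items():
        shuffled = sorted(files)
        rng.shuffle(shuffled)
        n_val = int(round(len(shuffled) * validation_fraction))
        validation[label] = shuffled[:n_val]
        train[label] = shuffled[n_val:]
    return train, validation


files_by_class = {
    "cat": ["cat_%02d.jpg" % i for i in range(10)],
    "dog": ["dog_%02d.jpg" % i for i in range(5)],
}
train, validation = split_per_class(files_by_class)

print({label: len(files) for label, files in train.items()})
print({label: len(files) for label, files in validation.items()})
```

Splitting per class keeps every class represented in both folders, which matters here because the script derives the number of classes from the directory structure.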

Hi,

Will transfer learning improve accuracy even in the case of huge data? I have close to 1.5 lakh (150,000) images. Should I still go for transfer learning?

@ghost

Hey I am also stuck there. Did you find the solution?

I am getting an error - StopIteration: cannot identify image file '/Users/Anuj/Desktop/Python codes/Dataset_faces/training_set\Ananth\00000.png'. The code runs until the two graphs are displayed. I get the error after that. Could you please help me out?

Hi, Thanks for building an application for multi label classification.

Would you please help me out to have 2 different files for training and testing, so that we don't need to train again and again whenever the application runs.

thanks

@Thimira

Can anybody help me with how we can use a live webcam for the prediction in this blog? Thank you.

Hi,

I have used this code for training with 50,000 training images and 30,000 validation images. It only trains well for 2 epochs; after 2 epochs the accuracy decreases. Kindly tell me whether I am going in the right direction or not.

Can a model train perfectly in 2 epochs?

Hi,

Can anybody tell me whether a model can be trained using only 2 epochs or not?

I'm using 70,000 training images and 30,000 validation images.

It gives 94% accuracy in only 2 epochs; after 2 epochs the accuracy decreases.

@mehki 2 epochs are not normally enough for a dataset of that size. How is the validation accuracy behaving?

This code gist is a little bit old. I have an improved version of multiclass classification in Keras, with bottleneck features and fine-tuning, in my Bird Watch project. You can check the project at https://github.com/Thimira/bird_watch. See the 'bird_watch_train.py' and 'bird_watch_train_optimized.py' files.

The complete tutorial can be found here: Using Bottleneck Features for Multi-Class Classification in Keras and TensorFlow

You'll notice that the code isn't the most optimized. We can easily extract some of the repeated code - such as the multiple image data generators - out to some functions. But, I kept them as is since it's easier to walk through the code in the tutorial like that.