Notes on the FCN implementation in Gluon-CV. How is the code for a complex model such as a deep network typically organized?

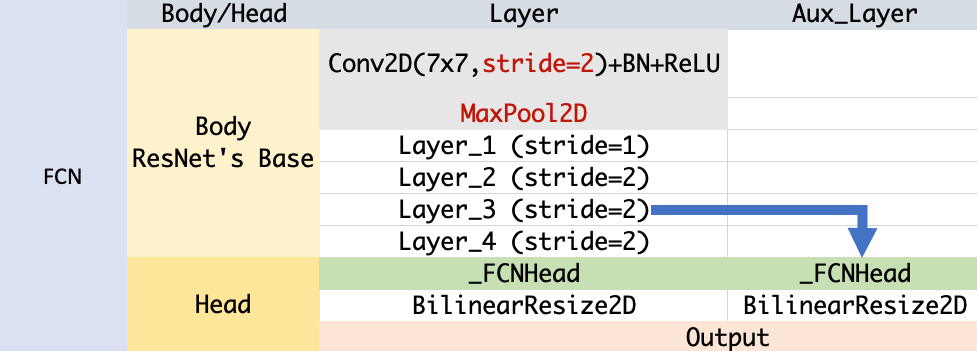

Operator (Conv, BN, ReLU) -> Block (BottleneckV1b) -> Layer -> (Body/Head) -> Net

- The Body here does feature extraction. It is essentially the ResNet backbone, and Layers 1, 2, 3, and 4 inside it are taken directly from ResNet.

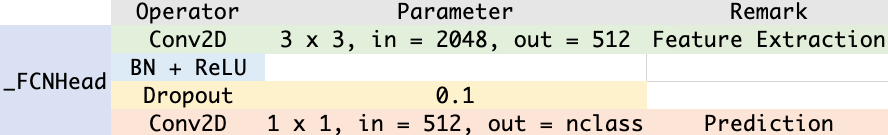

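- `_FCNHead` acts as the predictor: it turns the feature map into prediction scores.

- Note that the prediction scores are computed on the 32x downsampled feature map first; only afterwards are the downsampled scores upsampled back to the size of the original image.

- Strictly speaking, the complete model should end with a Softmax. It is absent here because, for numerical stability, the Softmax step is folded into the loss.

- The structure of the FCN Head is shown in the figure below: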

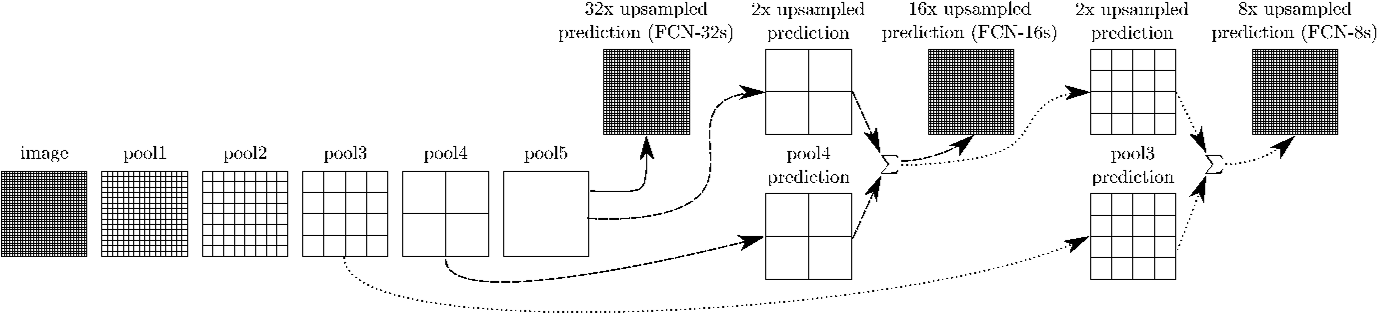
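To make the numerical-stability point concrete, here is a minimal sketch in plain Python (not Gluon's implementation) of computing the cross-entropy directly from raw scores with the log-sum-exp trick. The function names `log_softmax` and `softmax_cross_entropy` are made up for this illustration.

```python
import math


def log_softmax(scores):
    """Log-softmax of a list of raw scores, using the log-sum-exp trick."""
    m = max(scores)
    # Subtracting the max keeps every exponent <= 0, so exp() cannot overflow.
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]


def softmax_cross_entropy(scores, label):
    """Cross-entropy taken directly from raw scores, with no explicit softmax."""
    return -log_softmax(scores)[label]


# Scores this large would overflow a naive exp(score) / sum(exp(scores)).
scores = [1000.0, 0.0, -1000.0]
loss_correct = softmax_cross_entropy(scores, label=0)
loss_wrong = softmax_cross_entropy(scores, label=1)
```

A naive version would call `math.exp(1000.0)`, which raises `OverflowError` in Python; the fused version never forms the probabilities, so the network can output raw scores and leave the Softmax to the loss.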

How do FCN's skip layers work? The figure below, taken from http://deeplearning.net/tutorial/fcn_2D_segm.html, illustrates it well.

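So the FCN implementation in Gluon-CV does not implement the skip-layer structure. With `aux` enabled, the FCN class does return two predictions, one from c3 and one from c4, but these only serve the loss; they are not skip connections.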
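The difference between the two ideas can be sketched in a few lines of plain Python. This is only an illustration, not Gluon-CV code: `fuse_skip`, `mix_loss`, and the default `aux_weight=0.2` are hypothetical names and values chosen for the example.

```python
def fuse_skip(coarse_scores, fine_scores):
    """Skip connection: two score maps are added, and the sum is the prediction."""
    return [c + f for c, f in zip(coarse_scores, fine_scores)]


def mix_loss(main_loss, aux_loss, aux_weight=0.2):
    """Auxiliary supervision: each output gets its own loss; only the losses mix.

    aux_weight is an illustrative value, not taken from these notes.
    """
    return main_loss + aux_weight * aux_loss


# Skip connection: the fine-resolution scores change the final prediction.
fused = fuse_skip([1.0, 2.0], [3.0, 4.0])

# Auxiliary output: the second prediction only contributes a training signal.
total = mix_loss(main_loss=1.0, aux_loss=0.5)
```

With a skip connection the finer feature map alters the prediction at inference time; with an auxiliary output the main prediction is unchanged and the extra branch matters only during training.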

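The Deep Learning Tutorials turn out to be well written and include very readable code. It is implemented with Theano and Lasagne, but it is clear enough to guide my own implementation later. The FCN code is here: http://deeplearning.net/tutorial/code/fcn_2D_segm/fcn8.py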

- 32s: ConvPred(conv7_dropout) => score32s

- 16s: DeConv(score32s) + ConvPred(pool4) => score16s

- 8s: DeConv(score16s) + ConvPred(pool3) => score8s

- final: DeConv(score8s) => score_final
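To see why each fusion step needs cropping, the spatial size along one axis can be traced through the four steps above. The sketch below uses the kernel sizes, strides, and padding from the tutorial's `fcn8.py`. It assumes that pooling rounds down and that center cropping reduces both inputs to the smaller size. The helper names are made up for this illustration.

```python
def conv_out(size, kernel, pad):
    """Output size of a stride-1 convolution with symmetric padding."""
    return size + 2 * pad - kernel + 1


def pool_out(size, window=2):
    """Output size of non-overlapping pooling (assumption: rounds down)."""
    return size // window


def deconv_out(size, kernel, stride):
    """Output size of a transposed convolution with no cropping."""
    return (size - 1) * stride + kernel


def trace_fcn8(size):
    """Trace one spatial dimension through the FCN-8s score path."""
    sizes = {'input': size}
    # conv1_1 pads by 100; every other 3x3 conv keeps the size ('same').
    x = conv_out(size, kernel=3, pad=100)
    for i in range(1, 6):
        x = pool_out(x)
        sizes['pool%d' % i] = x
    # fc6 is a 7x7 'valid' conv; fc7 and the score conv are 1x1.
    sizes['score32s'] = conv_out(x, kernel=7, pad=0)
    # Each fusion crops both inputs to the smaller one (modeled with min).
    up16 = deconv_out(sizes['score32s'], kernel=4, stride=2)
    sizes['score16s'] = min(up16, sizes['pool4'])
    up8 = deconv_out(sizes['score16s'], kernel=4, stride=2)
    sizes['score8s'] = min(up8, sizes['pool3'])
    # Final 8x upsampling; the result is later cropped back to the input size.
    sizes['upsample'] = deconv_out(sizes['score8s'], kernel=16, stride=8)
    return sizes


sizes = trace_fcn8(224)
```

Under these assumptions the upsampled scores never match the pooled feature maps exactly, so every sum needs a crop. The large padding in the first convolution is what makes the final map at least as large as the input, so it can be cropped back.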

```python
import theano.tensor as T
import lasagne
from lasagne.layers import InputLayer, DropoutLayer
from lasagne.layers import Conv2DLayer as ConvLayer
from lasagne.layers import Pool2DLayer as PoolLayer
from lasagne.layers import Deconv2DLayer as DeconvLayer
from lasagne.layers import ElemwiseSumLayer, ElemwiseMergeLayer
from lasagne.nonlinearities import softmax, linear


def buildFCN8(nb_in_channels, input_var,
              path_weights='/Tmp/romerosa/itinf/models/' +
              'camvid/new_fcn8_model_best.npz',
              n_classes=21, load_weights=True,
              void_labels=[], trainable=False,
              layer=['probs_dimshuffle'], pascal=False,
              temperature=1.0, dropout=0.5):
    '''
    Build fcn8 model
    '''
    net = {}

    # Contracting path
    net['input'] = InputLayer((None, nb_in_channels, None, None), input_var)

    # pool 1
    net['conv1_1'] = ConvLayer(net['input'], 64, 3, pad=100, flip_filters=False)
    net['conv1_2'] = ConvLayer(net['conv1_1'], 64, 3, pad='same', flip_filters=False)
    net['pool1'] = PoolLayer(net['conv1_2'], 2)

    # pool 2
    net['conv2_1'] = ConvLayer(net['pool1'], 128, 3, pad='same', flip_filters=False)
    net['conv2_2'] = ConvLayer(net['conv2_1'], 128, 3, pad='same', flip_filters=False)
    net['pool2'] = PoolLayer(net['conv2_2'], 2)

    # pool 3
    net['conv3_1'] = ConvLayer(net['pool2'], 256, 3, pad='same', flip_filters=False)
    net['conv3_2'] = ConvLayer(net['conv3_1'], 256, 3, pad='same', flip_filters=False)
    net['conv3_3'] = ConvLayer(net['conv3_2'], 256, 3, pad='same', flip_filters=False)
    net['pool3'] = PoolLayer(net['conv3_3'], 2)

    # pool 4
    net['conv4_1'] = ConvLayer(net['pool3'], 512, 3, pad='same', flip_filters=False)
    net['conv4_2'] = ConvLayer(net['conv4_1'], 512, 3, pad='same', flip_filters=False)
    net['conv4_3'] = ConvLayer(net['conv4_2'], 512, 3, pad='same', flip_filters=False)
    net['pool4'] = PoolLayer(net['conv4_3'], 2)

    # pool 5
    net['conv5_1'] = ConvLayer(net['pool4'], 512, 3, pad='same', flip_filters=False)
    net['conv5_2'] = ConvLayer(net['conv5_1'], 512, 3, pad='same', flip_filters=False)
    net['conv5_3'] = ConvLayer(net['conv5_2'], 512, 3, pad='same', flip_filters=False)
    net['pool5'] = PoolLayer(net['conv5_3'], 2)

    # fc6 (fully connected layer expressed as a 7x7 convolution)
    net['fc6'] = ConvLayer(net['pool5'], 4096, 7, pad='valid', flip_filters=False)
    net['fc6_dropout'] = DropoutLayer(net['fc6'], p=dropout)

    # fc7 (fully connected layer expressed as a 1x1 convolution)
    net['fc7'] = ConvLayer(net['fc6_dropout'], 4096, 1, pad='valid', flip_filters=False)
    net['fc7_dropout'] = DropoutLayer(net['fc7'], p=dropout)

    # 32s: class scores on the coarsest feature map
    net['score_fr'] = ConvLayer(net['fc7_dropout'], n_classes, 1, pad='valid', flip_filters=False)

    # Upsampling path
    # Unpool (16s): upsample the 32s scores by 2 and fuse with pool4 scores
    net['score2'] = DeconvLayer(net['score_fr'], n_classes, 4,
                                stride=2, crop='valid', nonlinearity=linear)
    net['score_pool4'] = ConvLayer(net['pool4'], n_classes, 1, pad='same')
    net['score_fused'] = ElemwiseSumLayer((net['score2'], net['score_pool4']),
                                          cropping=[None, None, 'center', 'center'])

    # Unpool (8s): upsample the 16s scores by 2 and fuse with pool3 scores
    net['score4'] = DeconvLayer(net['score_fused'], n_classes, 4,
                                stride=2, crop='valid', nonlinearity=linear)
    net['score_pool3'] = ConvLayer(net['pool3'], n_classes, 1, pad='valid')
    net['score_final'] = ElemwiseSumLayer((net['score4'], net['score_pool3']),
                                          cropping=[None, None, 'center', 'center'])

    # Unpool (final): upsample the 8s scores by 8
    net['upsample'] = DeconvLayer(net['score_final'], n_classes, 16,
                                  stride=8, crop='valid', nonlinearity=linear)
    upsample_shape = lasagne.layers.get_output_shape(net['upsample'])[1]
    net['input_tmp'] = InputLayer((None, upsample_shape, None, None), input_var)

    # Keep only the deconv output, center-cropped to the input's spatial size
    net['score'] = ElemwiseMergeLayer((net['input_tmp'], net['upsample']),
                                      merge_function=lambda input, deconv:
                                      deconv,
                                      cropping=[None, None, 'center',
                                                'center'])

    # Final dimshuffle, reshape and softmax
    net['final_dimshuffle'] = \
        lasagne.layers.DimshuffleLayer(net['score'], (0, 2, 3, 1))
    laySize = lasagne.layers.get_output(net['final_dimshuffle']).shape
    net['final_reshape'] = \
        lasagne.layers.ReshapeLayer(net['final_dimshuffle'],
                                    (T.prod(laySize[0:3]),
                                     laySize[3]))
    net['probs'] = lasagne.layers.NonlinearityLayer(net['final_reshape'],
                                                    nonlinearity=softmax)
    # Excerpt ends here; the rest of buildFCN8 in fcn8.py is not reproduced.
```

`resnet50_v1s` is the function that builds the ResNetV1s-50 model. The model class is `ResNetV1b`, and the residual block it uses is `BottleneckV1b`.

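While reading the FCN code in Gluon, I noticed that the names `residual` and `out` (the trunk value) in the residual block are exactly the opposite of how I understood them. In the code, `residual` is the trunk (the shortcut branch), while `out` is in fact the computed residual.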

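```python
def hybrid_forward(self, F, x):
    # Despite its name, `residual` holds the shortcut (trunk) branch.
    residual = x

    # `out` is the learned residual computed by the conv/bn stack.
    out = self.conv1(x)
    out = self.bn1(out)
    out = self.relu1(out)

    out = self.conv2(out)
    out = self.bn2(out)

    # Project the shortcut when its shape differs from the stack's output.
    if self.downsample is not None:
        residual = self.downsample(x)

    out = out + residual
    out = self.relu2(out)

    return out
```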
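The same computation can be written with names that match the usual reading, where the stacked layers learn the residual F(x) and the input travels along the shortcut. This is a toy sketch on plain Python lists, not Gluon code. `residual_block`, `residual_fn`, and `shortcut_fn` are hypothetical names for the illustration.

```python
def relu(values):
    """Elementwise ReLU on a list of floats."""
    return [max(0.0, v) for v in values]


def residual_block(x, residual_fn, shortcut_fn=None):
    """Compute relu(F(x) + shortcut(x)) for a list of floats."""
    # Shortcut branch: identity unless a projection is given.
    # This is what the Gluon code stores in the variable `residual`.
    shortcut = x if shortcut_fn is None else shortcut_fn(x)
    # Learned residual F(x): what the Gluon code accumulates in `out`.
    learned_residual = residual_fn(x)
    return relu([r + s for r, s in zip(learned_residual, shortcut)])


# If the stack learns a zero residual, the block reduces to relu(x).
identity_like = residual_block([1.0, -2.0], lambda x: [0.0] * len(x))

# A non-zero residual is simply added on top of the shortcut.
shifted = residual_block([1.0, -2.0], lambda x: [2.0 * v for v in x])
```

Seen this way, only the naming differs: the arithmetic in `hybrid_forward` is the standard residual formulation.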