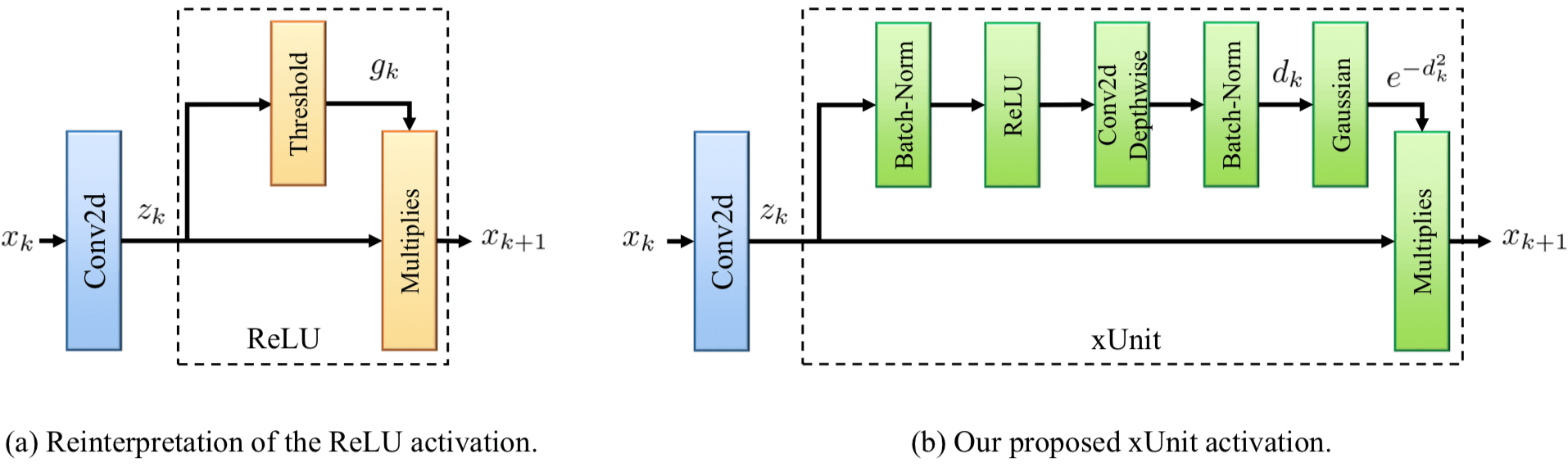

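The motivation of this paper is to use a learnable nonlinear function with spatial connections to make nonlinear activations more effective. In fact, xUnit, a layer with spatial and learnable connections, can also be understood as an attention module in the same family as SENet and GENet. Judging from the figure below, xUnit is essentially the same kind of module as GENet, a point that the GENet paper also mentions.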

For me, the biggest contribution of this paper is pointing out that a nonlinear activation function can be written as an element-wise multiplication.

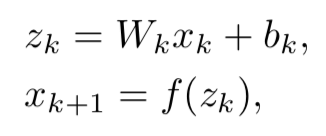

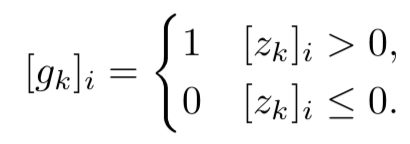

The original form of a nonlinear activation

where,

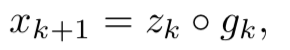

The authors point out that the expression above can be rewritten in the following form

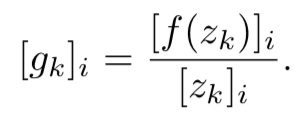

where g_k takes the form

Taking ReLU as an example, it can be written as

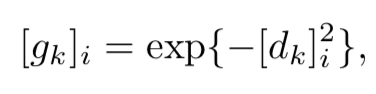
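The ReLU case can be checked numerically. The snippet below is a minimal sketch, assuming NumPy; the function names `relu` and `relu_gate` are illustrative and not taken from the paper. It builds the binary gate g_k (1 where the input is positive, 0 elsewhere) and confirms that multiplying the input by this gate element-wise reproduces ReLU.

```python
import numpy as np


def relu(x):
    """Standard ReLU, applied element-wise."""
    return np.maximum(x, 0.0)


def relu_gate(x):
    """Binary gate g: 1 where x > 0, 0 elsewhere (hypothetical helper name)."""
    return (x > 0).astype(x.dtype)


# A small feature map with mixed signs.
x = np.array([[-2.0, 0.5],
              [3.0, -0.1]])

g = relu_gate(x)   # the gating map
y = g * x          # element-wise multiplication

# The gated product matches the ordinary ReLU output.
assert np.array_equal(y, relu(x))
```

Here the gate at each position depends only on the value at that same position, which is exactly the limitation a spatially aware gate would remove.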

So all that is needed is g_k. If g_k is made to carry spatial context, the result is an activation function that takes spatial context into account, as shown in the figure below

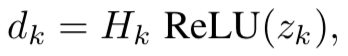

where d_k is

- H_k denotes a depth-wise convolution

The idea is to introduce

- nonlinearity (ReLU),

- spatial processing (depth-wise convolution),

- construction of gating maps in the range [0, 1] (Gaussian).
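The three ingredients listed above can be put together in a short NumPy sketch. This is an illustration under stated assumptions, not the authors' implementation: batch normalization is omitted, the kernels are random rather than learned, the Gaussian is taken to be `exp(-d**2)`, and the names `depthwise_conv2d`, `xunit_gate`, and `xunit_activation` are hypothetical.

```python
import numpy as np


def depthwise_conv2d(u, kernels):
    """Depth-wise 2D convolution (cross-correlation) with zero 'same' padding.

    u:       array of shape (C, H, W)
    kernels: array of shape (C, k, k) with k odd, one kernel per channel
    """
    _, height, width = u.shape
    k = kernels.shape[-1]
    p = k // 2
    padded = np.pad(u, ((0, 0), (p, p), (p, p)))  # zero padding on H and W only
    out = np.zeros_like(u)
    for i in range(k):
        for j in range(k):
            # Each channel is weighted by its own kernel entry; channels never mix.
            out += kernels[:, i, j][:, None, None] * padded[:, i:i + height, j:j + width]
    return out


def xunit_gate(x, kernels):
    """Gating map: ReLU, then depth-wise convolution, then a Gaussian."""
    u = np.maximum(x, 0.0)            # nonlinearity (ReLU)
    d = depthwise_conv2d(u, kernels)  # spatial processing
    return np.exp(-d ** 2)            # Gaussian maps values into [0, 1]


def xunit_activation(x, kernels):
    """Element-wise product of the input with its spatially aware gate."""
    return xunit_gate(x, kernels) * x


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))              # 4 channels, 8x8 feature maps
kernels = 0.1 * rng.standard_normal((4, 3, 3))  # stand-in for learned 3x3 kernels

g = xunit_gate(x, kernels)
y = xunit_activation(x, kernels)
print(g.shape, y.shape)
```

Unlike the binary ReLU gate, each gate value here depends on a 3x3 neighborhood of the input, so the activation at a position is modulated by its spatial context.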