All of this is untested/work in progress

Last active: March 4, 2021 12:50


Gist: Zeitwaechter/1c3ad5fa6d580cee48dadd84e0f272c6

Kubernetes Scripts

- Login as Root

```sh
touch /etc/docker/daemon.json
systemctl enable docker.service --now
nano /etc/docker/daemon.json
```

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
```

```sh
systemctl restart docker.service
```
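If you would rather not edit the file by hand, a script can write the same `daemon.json`. This is a minimal sketch that assumes bash. `write_docker_daemon_json` is a hypothetical helper name. The target path is a parameter, so you can try it on a scratch file first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes the Docker daemon config shown above.
# $1: target file (defaults to /etc/docker/daemon.json, which needs root)
write_docker_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  # Quoted 'EOF' so nothing inside the JSON gets expanded by the shell.
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}
```

As root, run `write_docker_daemon_json && systemctl restart docker.service`. After that, `docker info --format '{{.CgroupDriver}}'` should report `systemd`.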

- If you want to change your Docker image location away from `/var/lib/docker`:

- First stop all running Docker containers, if you have already started any

- Then

```sh
systemctl stop docker.service
```

```sh
mkdir /etc/systemd/system/docker.service.d
nano /etc/systemd/system/docker.service.d/docker-storage.conf
```

```ini
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd --data-root=[new_docker_images_path] -H fd://
```

```sh
systemctl daemon-reload
systemctl start docker.service
```
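The drop-in can be generated the same way. This is a minimal sketch that assumes bash. `write_docker_storage_dropin` is a hypothetical helper, and the drop-in directory is a parameter so it can be tested outside `/etc`.

The empty `ExecStart=` line is needed because systemd allows only one `ExecStart` for this type of service. The original command therefore has to be cleared before it is replaced.

Docker also accepts `"data-root"` in `daemon.json`. Set it in only one place: `dockerd` refuses to start when the same option is given both as a flag and in the config file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes the docker.service drop-in that moves Docker's data root.
# $1: new data root (required)
# $2: drop-in directory (defaults to the real systemd location, needs root)
write_docker_storage_dropin() {
  local data_root="$1"
  local dropin_dir="${2:-/etc/systemd/system/docker.service.d}"
  if [ -z "$data_root" ]; then
    echo "usage: write_docker_storage_dropin DATA_ROOT [DROPIN_DIR]" >&2
    return 1
  fi
  mkdir -p "$dropin_dir"
  # The empty ExecStart= clears the command inherited from docker.service.
  cat > "$dropin_dir/docker-storage.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd --data-root=${data_root} -H fd://
EOF
}
```

As root, run `write_docker_storage_dropin /srv/docker && systemctl daemon-reload && systemctl start docker.service`. Here `/srv/docker` is only an example path.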

Kubernetes v1.20.X Tutorial

At the end of 2020, the Kubernetes packages finally landed in the Arch Linux community repository.

See also https://wiki.archlinux.org/index.php/Kubernetes as this is now explained there in more detail (yay!)

Currently still/again under reconstruction ;)

- Switch from root to a regular user

```sh
yaourt -Sy etcd cfssl
```

- Login as Root

```sh
pacman -S kubelet kubeadm kubectl cni-plugins ethtool ebtables socat conntrack-tools lsof xclip terraform cri-o
sysctl net.bridge.bridge-nf-call-ip6tables=1
sysctl net.bridge.bridge-nf-call-iptables=1
mkdir -p /etc/cni/net.d
nano /etc/cni/net.d/99-loopback.conf
```

```json
{
  "cniVersion": "0.2.0",
  "type": "loopback"
}
```

```sh
nano /etc/default/kubelet
```

- Add to the file:

```sh
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd \
  --fail-swap-on=false \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice"
```

```sh
systemctl enable kubelet.service --now
```
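The two `sysctl` calls above only last until the next reboot. The sketch below persists them, assuming systemd's `modules-load.d` and `sysctl.d` directories. The `net.bridge.*` keys only exist once the `br_netfilter` module is loaded, so the sketch loads that module at boot as well. `persist_k8s_sysctl` and the file names `k8s.conf` and `99-kubernetes.conf` are hypothetical choices. `$1` is a root prefix, so you can try it outside `/`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: persists the bridge netfilter settings across reboots.
# $1: filesystem root prefix (defaults to empty, i.e. the real /etc)
persist_k8s_sysctl() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Load br_netfilter at boot so the net.bridge.* keys exist.
  echo "br_netfilter" > "$root/etc/modules-load.d/k8s.conf"
  # Same values as the two sysctl calls above.
  cat > "$root/etc/sysctl.d/99-kubernetes.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

As root, `persist_k8s_sysctl && modprobe br_netfilter && sysctl --system` applies everything right away, without a reboot.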


- Amend the hostname and add your IP + hostname to `/etc/hosts`

```sh
nano /etc/hostname
nano /etc/hosts
```

- Set up Kubernetes

```sh
kubeadm config images pull
kubeadm init --pod-network-cidr=10.244.0.0/16 --control-plane-endpoint=[ipv4address] --ignore-preflight-errors=swap --v=10
```

- You may need to stop all (now) running Docker containers if you run into errors

- Ultimate Correction Routine

```sh
kubeadm reset
docker stop $(docker ps -a -q)
docker rm $(docker ps -a -q)
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
```

- Redo the flow starting from

```sh
kubeadm init [...]
```
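The correction routine can also be wrapped in a small script with a dry-run switch, so you can see what would be reset before anything is touched. This is a minimal sketch that assumes bash. `run`, `k8s_reset_routine` and the `DRY_RUN` variable are hypothetical names and not part of kubeadm:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the "Ultimate Correction Routine" above.
# With DRY_RUN set to a non-empty value, commands are printed instead of run.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

k8s_reset_routine() {
  run kubeadm reset
  # Listing containers is read-only, so it also happens in dry-run mode.
  local ids
  ids="$(docker ps -a -q 2>/dev/null)"
  if [ -n "$ids" ]; then
    # $ids is left unquoted on purpose: one argument per container ID.
    run docker stop $ids
    run docker rm $ids
  fi
  # Same chain as above: flush the rules of each table, then delete custom chains.
  run iptables -F && run iptables -t nat -F &&
    run iptables -t mangle -F && run iptables -X
}
```

`DRY_RUN=1 k8s_reset_routine` only prints the commands. Running `k8s_reset_routine` as root executes them, and `kubeadm reset` still asks for confirmation.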

- Create a non-root user for `kubectl`:

```sh
groupadd kubernetes
useradd -m -g users -G wheel,docker,kubernetes -s /bin/bash [kubernetes_user]
mkdir -p /home/[kubernetes_user]/.kube
cp /etc/kubernetes/admin.conf /home/[kubernetes_user]/.kube/config
```

- Add to sudoers

```sh
nano /etc/sudoers
```

```sh
chown [kubernetes_user]:users /home/[kubernetes_user]/.kube -R
su [kubernetes_user]
kubectl get componentstatus
kubectl get no
kubectl get pods --namespace=kube-system
kubectl describe node
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
kubectl taint nodes [__current_node_name__] node-role.kubernetes.io/master- --overwrite
```

- To check the name of the current node again:

```sh
kubectl describe node | grep Hostname
```

- Check the taints of all nodes:

```sh
kubectl get nodes -o custom-columns=NAME:.metadata.name,TAINTS:.spec.taints --no-headers
```


- NGINX Ingress Controller + NodePort (Check Link)

```sh
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-0.32.0/deploy/static/provider/baremetal/deploy.yaml
```

- Verify the installation:

```sh
kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx --watch
```

- Check what is available/possible

```sh
kubeadm upgrade plan
```

- Optional: Check your config again

```sh
kubectl -n kube-system get cm kubeadm-config -oyaml
```

- Follow what it tells you, or lower the target to the next possible version it suggests, e.g.:

```sh
kubeadm upgrade apply v1.18.16
```

- Login as [kubernetes_user]

```sh
yaourt -Sy kubernetes-helm-bin
helm repo add stable https://charts.helm.sh/stable
helm repo add incubator https://charts.helm.sh/incubator
helm repo update
```

- Login as [kubernetes_user]

```sh
kubectl create ns gitlab
helm repo add gitlab https://charts.gitlab.io/
helm repo update
kubectl create clusterrolebinding cluster-admin-binding --clusterrole cluster-admin --user [kubernetes_user]
```

- If you are on a "cloud"/online server and/or don't want to configure anything else:

```sh
helm upgrade --install gitlab gitlab/gitlab --namespace gitlab --timeout 600s --set global.hosts.domain=[cluster].[domain] --set global.hosts.externalIP=[ipv4address] --set certmanager-issuer.email=[specific_email_address]
```

- Otherwise (bare metal/local host), create a custom installer config:

```sh
mkdir -p ~/.helm/install_configs
nano ~/.helm/install_configs/gitlab-values.yaml
```

(adapted from Link RAW | Link Blob)

```yaml
# - Minimized CPU/Memory load, can fit into 2-3 CPU, 4-6 GB of RAM (barely)
# - Some services are entirely removed, or scaled down to 1 replica.
# - Configured to use [ipv4address], and [gitlaburi] for the domain

# Minimal settings
global:
  edition: ce
  ingress:
    configureCertmanager: false
    class: "nginx"
  hosts:
    domain: gitlab.ciarmor.localhost
    externalIP: 192.168.2.80

# GitLab Runner isn't a big fan of self-signed certificates
gitlab-runner:
  install: false

# Reduce replica counts, resource requests reducing CPU & memory requirements
nginx-ingress:
  controller:
    replicaCount: 1
    minAvailable: 1
    # Use standard ingress class name to share with deployed apps
    ingressClass: nginx
    resources:
      requests:
        cpu: 20m
        memory: 100Mi
  defaultBackend:
    minAvailable: 1
    replicaCount: 1
    resources:
      requests:
        cpu: 5m
        memory: 5Mi

gitlab:
  webservice:
    minReplicas: 1
    maxReplicas: 1
    resources:
      limits:
        memory: 1.5G
      requests:
        cpu: 100m
        memory: 900M
    workhorse:
      resources:
        limits:
          memory: 100M
        requests:
          cpu: 10m
          memory: 10M
  sidekiq:
    minReplicas: 1
    maxReplicas: 1
    resources:
      limits:
        memory: 1.5G
      requests:
        cpu: 50m
        memory: 625M
  gitlab-shell:
    minReplicas: 1
    maxReplicas: 1
  gitaly:
    resources:
      requests:
        cpu: 50m
    persistence:
      volumeName: gitlab-persistant-volume
  shared-secrets:
    resources:
      requests:
        cpu: 10m
  migrations:
    resources:
      requests:
        cpu: 10m
  task-runner:
    resources:
      requests:
        cpu: 10m

registry:
  hpa:
    minReplicas: 1
    maxReplicas: 1

redis:
  master:
    persistence:
      volumeName: redis-persistant-volume
  metrics:
    resources:
      requests:
        cpu: 10m
        memory: 64Mi

postgresql:
  metrics:
    resources:
      requests:
        cpu: 10m
  persistence:
    volumeName: posgresql-persistant-volume

prometheus:
  alertmanager:
    enabled: false
    replicaCount: 1
    # persistence:
    #   volumeName: prometheus-alertmanager-persistant-volume
    persistentVolume:
      enabled: true
      size: 3Gi
      storageClass: local-storage
  pushgateway:
    enabled: false
    replicaCount: 1
    # persistence:
    #   volumeName: prometheus-pushgateway-persistant-volume
    persistentVolume:
      enabled: true
      size: 3Gi
      storageClass: local-storage
  server:
    enabled: true
    replicaCount: 1
    # persistence:
    #   volumeName: prometheus-server-persistant-volume
    persistentVolume:
      enabled: true
      size: 3Gi
      storageClass: local-storage
    resources:
      requests:
        cpu: 20m
  configmapReload:
    resources:
      requests:
        cpu: 10m

# Don't use certmanager, we'll self-sign
certmanager:
  install: false

minio:
  resources:
    requests:
      cpu: 50m
      memory: 64Mi
  persistence:
    volumeName: minio-persistant-volume
```

- And now to the fun part of not having anything that works even though helm is a package installer...

```sh
mkdir -p ~/.kube/scripts/storage
nano ~/.kube/scripts/storage/local-storage.yml
```

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```

```sh
sudo mkdir -p /var/lib/kubernetes/volume-data
sudo chown [kubernetes_user]:kubernetes /var/lib/kubernetes/volume-data -R
mkdir /var/lib/kubernetes/volume-data/minio-persistant-volume-data
mkdir /var/lib/kubernetes/volume-data/redis-persistant-volume-data
mkdir /var/lib/kubernetes/volume-data/prometheus-server-persistant-volume-data
mkdir /var/lib/kubernetes/volume-data/prometheus-alertmanager-persistant-volume-data
mkdir /var/lib/kubernetes/volume-data/prometheus-pushgateway-persistant-volume-data
mkdir /var/lib/kubernetes/volume-data/posgresql-persistant-volume-data
mkdir /var/lib/kubernetes/volume-data/gitlab-persistant-volume-data
mkdir ~/.kube/scripts/storage/gitlab
nano ~/.kube/scripts/storage/gitlab/gitlab-persistant-volume.yml
```

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: gitlab-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 100Gi
  local:
    path: /var/lib/kubernetes/volume-data/gitlab-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
```

```sh
nano ~/.kube/scripts/storage/gitlab/redis-persistant-volume.yml
```

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: redis-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 10Gi
  local:
    path: /var/lib/kubernetes/volume-data/redis-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
```

```sh
nano ~/.kube/scripts/storage/gitlab/minio-persistant-volume.yml
```

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: minio-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 20Gi
  local:
    path: /var/lib/kubernetes/volume-data/minio-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
```

```sh
nano ~/.kube/scripts/storage/gitlab/posgresql-persistant-volume.yml
```

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: posgresql-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 20Gi
  local:
    path: /var/lib/kubernetes/volume-data/posgresql-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
```

```sh
nano ~/.kube/scripts/storage/gitlab/prometheus-persistant-volume.yml
```

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: prometheus-alertmanager-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 3Gi
  local:
    path: /var/lib/kubernetes/volume-data/prometheus-alertmanager-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: prometheus-pushgateway-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 3Gi
  local:
    path: /var/lib/kubernetes/volume-data/prometheus-pushgateway-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: prometheus-server-persistant-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 1Gi
  local:
    path: /var/lib/kubernetes/volume-data/prometheus-server-persistant-volume-data
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteOnce
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: app
              operator: In
              values:
                - gitlab
```


```sh
kubectl apply -f ~/.kube/scripts/storage/local-storage.yml
kubectl apply -f ~/.kube/scripts/storage/gitlab/gitlab-persistant-volume.yml
kubectl apply -f ~/.kube/scripts/storage/gitlab/minio-persistant-volume.yml
kubectl apply -f ~/.kube/scripts/storage/gitlab/posgresql-persistant-volume.yml
kubectl apply -f ~/.kube/scripts/storage/gitlab/prometheus-persistant-volume.yml
kubectl apply -f ~/.kube/scripts/storage/gitlab/redis-persistant-volume.yml
```

- The `nodeAffinity` in these volumes only matches nodes labelled `app=gitlab`, so make sure your node carries that label (`kubectl label nodes [__current_node_name__] app=gitlab`)

- Check:

```sh
kubectl get pv --namespace gitlab
```

- Install GitLab with the custom values:

```sh
helm upgrade --install --namespace gitlab -f ~/.helm/install_configs/gitlab-values.yaml gitlab gitlab/gitlab --timeout 600s --replace --skip-crds
```

- If you need to uninstall/reinstall but are stuck in a bad state due to issues with Helm, try:

```sh
helm delete gitlab --namespace gitlab
```

- Then:

```sh
kubectl -n gitlab delete pod,rs,ds,deployment,statefulset,horizontalpodautoscaler,svc,secret,job,pvc --all
```

- Login as [kubernetes_user]

```sh
helm repo add gitlab https://charts.gitlab.io/
helm repo update
helm get values gitlab --namespace gitlab > gitlab.yaml
helm upgrade gitlab gitlab/gitlab --namespace gitlab -f gitlab.yaml --timeout 600s --set global.hosts.domain=[cluster].[domain] --set global.hosts.externalIP=[ipv4address] --set certmanager-issuer.email=[specific_email_address]
```

- or

```sh
helm upgrade gitlab gitlab/gitlab --namespace gitlab -f gitlab.yaml --timeout 600s --set global.hosts.domain=[hostname].localhost --set global.hosts.externalIP=[ipv4address] --set certmanager-issuer.email=[specific_email_address]
```


(unfinished draft)

On cluster master:

```sh
pacman -S haproxy
nano /etc/haproxy/haproxy.cfg
```

```
#---------------------------------------------------------------------
# Example configuration. See the full configuration manual online.
#
#   http://www.haproxy.org/download/1.7/doc/configuration.txt
#
#---------------------------------------------------------------------

global
    maxconn     20000
    log         127.0.0.1 local0
    user        haproxy
    chroot      /usr/share/haproxy
    pidfile     /run/haproxy.pid
    daemon

frontend k8s
    bind :6443
    mode tcp
    option tcplog
    log global
    option dontlognull
    timeout client 30s
    default_backend k8s-control-plane

backend k8s-control-plane
    mode tcp
    balance roundrobin
    timeout connect 30s
    timeout server 30s
    server k8s-node01 [ip]:6443 check
    #server k8s-node02 [ip2]:6443 check
    #server k8s-node03 [ip3]:6443 check
```

- Note: update [ip], [ip2] (, ...) to your own IP(s)
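With more than one control-plane node, you can generate the `server` lines from a list of IPs instead of uncommenting them by hand. This is a minimal sketch that assumes bash. `haproxy_backend_servers` is a hypothetical helper that reproduces the `k8s-nodeNN` naming used in the config above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints one "server" line per control-plane IP,
# numbered k8s-node01, k8s-node02, ... like the backend section above.
haproxy_backend_servers() {
  local i=1 ip
  for ip in "$@"; do
    printf '    server k8s-node%02d %s:6443 check\n' "$i" "$ip"
    i=$((i + 1))
  done
}
```

For example, `haproxy_backend_servers 192.168.2.80 192.168.2.81` prints two lines to paste into the `backend k8s-control-plane` section. Before you (re)start the service, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the edited file.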

```sh
systemctl enable haproxy.service
systemctl start haproxy.service
```

```sh
echo (wget)
kubectl create -f 04-security-policies.yml
kubectl create -f 05-network-policies.yml
kubectl create namespace gitlab-ci-bare-metal-ns
kubectl create -f <(istioctl kube-inject -f 06-kubernetes-compose-gitlab.yml)
kubectl create namespace wordpress-bare-metal-ns
kubectl create -f <(istioctl kube-inject -f 07-kubernetes-compose-wordpress.yml)
```


```yaml
---
# Originally taken from ENDOCODE / Thomas Fricke // https://fahrplan.events.ccc.de/camp/2019/Fahrplan/events/10178.html
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: default
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default,runtime/default'
    apparmor.security.beta.kubernetes.io/allowedProfileNames: 'runtime/default'
    seccomp.security.alpha.kubernetes.io/defaultProfileName: 'runtime/default'
    apparmor.security.beta.kubernetes.io/defaultProfileName: 'runtime/default'
spec:
  # Containers aren't allowed to use the host's network, IPC or PID namespace.
  hostNetwork: false
  hostIPC: false
  hostPID: false
  # Pods can only bind to the following host ports
  hostPorts:
    - min: 10000
      max: 11000
    - min: 13000
      max: 14000
    - min: 15000
      max: 17000
  privileged: false
  # Required to prevent escalation to root
  allowPrivilegeEscalation: false
  # This is redundant with non-root + disallow privilege escalation
  # but we can provide it for defense in depth
  allowedCapabilities:
    - SYS_TIME
  defaultAddCapabilities:
    - CHOWN
  requiredDropCapabilities:
    - ALL
    # - SYS_ADMIN
    # - SYS_MODULE
  # Allow core volume types
  volumes:
    - configMap
    - emptyDir
    - secret
    - downwardAPI
    # Assuming that persistentVolumes set by the cluster admin are safe to use
    - persistentVolumeClaim
    # - '*'
  runAsUser:
    # Require the container to run without root privileges
    rule: MustRunAsNonRoot
    ranges:
      - min: 2
        max: 2
  seLinux:
    # This policy assumes the nodes are using AppArmor rather than SELinux
    rule: RunAsAny
  supplementalGroups:
    rule: MustRunAs
    ranges:
      # Forbid adding the root group
      - min: 2
        max: 10
  fsGroup:
    rule: MustRunAs
    ranges:
      # Forbid adding the root group
      - min: 2
        max: 10
  # Containers are forced to run with a read-only root filesystem
  readOnlyRootFilesystem: true
```


```yaml
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: gitlab-ci-redis-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: gitlab-ci
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              app: gitlab-ci
      ports:
        # gitlab-ci-redis-network-policy-tcp
        - protocol: TCP
          port: 6379
        # gitlab-ci-redis-network-policy-udp
        - protocol: UDP
          port: 6379
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: gitlab-ci-postgre-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: gitlab-ci
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              app: gitlab-ci
      ports:
        # gitlab-ci-postgre-network-policy-tcp
        - protocol: TCP
          port: 5432
        # gitlab-ci-postgre-network-policy-udp
        - protocol: UDP
          port: 5432
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: gitlab-ci-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: gitlab-ci
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: gitlab-ci
      ports:
        # gitlab-ci-ui-network-policy-tcp
        - protocol: TCP # Used protocol
          port: 80 # Outside of cluster // public
        # gitlab-ci-ui-tls-network-policy-tcp
        - protocol: TCP # Used protocol
          port: 443 # Outside of cluster // public
        # gitlab-ci-ssh-network-policy-tcp
        - protocol: TCP # Used protocol
          port: 22 # Outside of cluster // public
---
apiVersion: v1
kind: Service
metadata:
  name: gitlab-ci-service
  namespace: gitlab-ci-bare-metal-ns
  labels:
    app: gitlab-ci-service
    gpu: "false"
    env: staging
    role: service
  annotations:
    # AWS - Network Load Balancer
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
spec:
  sessionAffinity: ClientIP
  selector:
    app: gitlab-ci
  ports:
    - name: gitlab-ui-tcp-service
      protocol: TCP
      # Port the service will be available at
      port: 80
      # Port the call will be forwarded to in the cluster
      targetPort: gitlab-ce-ui-tcp
    - name: gitlab-ui-tls-tcp-service
      protocol: TCP
      # Port the service will be available at
      port: 443
      # Port the call will be forwarded to in the cluster
      targetPort: gitlab-ce-ui-tls-tcp
    - name: gitlab-ssh-tcp-service
      # Used protocol
      protocol: TCP
      # Inside of cluster
      port: 22
      # Inside the pod
      targetPort: gitlab-ce-ssh-tcp
  type: LoadBalancer
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: gitlab-ci-runner
    gpu: "false"
    env: staging
    role: config
  name: gitlab-ci-runner-cm
  namespace: gitlab-ci-bare-metal-ns
data:
  REGISTER_NON_INTERACTIVE: "true"
  REGISTER_LOCKED: "true"
  CLONE_URL: "http://gitlab.localhost"
  CI_SERVER_URL: "http://gitlab.localhost/ci"
  RUNNER_REQUEST_CONCURRENCY: "4"
  RUNNER_EXECUTOR: "kubernetes"
  KUBERNETES_NAMESPACE: "gitlab-ci"
  KUBERNETES_PRIVILEGED: "true"
  KUBERNETES_DISABLE_CACHE: "true"
  KUBERNETES_CPU_LIMIT: "1"
  KUBERNETES_MEMORY_LIMIT: "1Gi"
  KUBERNETES_SERVICE_CPU_LIMIT: "1"
  KUBERNETES_SERVICE_MEMORY_LIMIT: "1Gi"
  KUBERNETES_HELPER_CPU_LIMIT: "500m"
  KUBERNETES_HELPER_MEMORY_LIMIT: "100Mi"
  KUBERNETES_PULL_POLICY: "if-not-present"
---
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: gitlab-ci-runner
    gpu: "false"
    rel: beta
  name: gitlab-ci-runner-scripts
  namespace: gitlab-ci-bare-metal-ns
data:
  run.sh: |
    #!/bin/bash
    unregister() {
        kill %1
        echo "Unregistering runner ${RUNNER_NAME} ..."
        /usr/bin/gitlab-ci-multi-runner unregister -t \
          "$(/usr/bin/gitlab-ci-multi-runner list 2>&1 | tail -n1 \
          | awk '{print $4}' | cut -d '=' -f2)" -n ${RUNNER_NAME}
        exit $?
    }
    trap 'unregister' EXIT HUP INT QUIT PIPE TERM
    echo "Registering runner ${RUNNER_NAME} ..."
    /usr/bin/gitlab-ci-multi-runner register -r ${GITLAB_CI_TOKEN}
    echo "Starting runner ${RUNNER_NAME} ..."
    /usr/bin/gitlab-ci-multi-runner run -n ${RUNNER_NAME} &
    wait
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gitlab-ci-deployment
  namespace: gitlab-ci-bare-metal-ns
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gitlab-ci
      env: staging
  template:
    metadata:
      labels:
        app: gitlab-ci
        gpu: "false"
        env: staging
        role: deployment
    spec:
      containers:
        - name: gitlab-redis
          image: redis:latest
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              cpu: 125m
              memory: 200Mi
            limits:
              cpu: 250m
              memory: 400Mi
          securityContext:
            runAsUser: 2 # non-root user
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
          ports:
            - name: gitlab-redis-tcp
              protocol: TCP
              # Inside of pod
              containerPort: 16379
            - name: gitlab-redis-udp
              protocol: UDP
              # Inside of pod
              containerPort: 16379
          args: ["--loglevel", "warning"]
          lifecycle:
            postStart:
            preStop:
        - name: gitlab-postgre
          image: postgres:latest
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              cpu: 250m
              memory: 250Mi
            limits:
              cpu: 500m
              memory: 500Mi
          securityContext:
            runAsUser: 2 # non-root user
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
          ports:
            - name: gitlab-postgre-tcp
              protocol: TCP
              # Inside of pod
              containerPort: 15432
            - name: gitlab-postgre-udp
              protocol: UDP
              # Inside of pod
              containerPort: 15432
          lifecycle:
            postStart:
            preStop:
          env:
            - name: DB_USER
              value: gitlab
            - name: DB_PASS
              value: password
            - name: DB_NAME
              value: gitlabhq_production
            - name: DB_EXTENSION
              value: pg_trgm
        - name: gitlab-ce
          image: gitlab/gitlab-ce:latest
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              cpu: 1
              memory: 2Gi
            limits:
              cpu: 2
              memory: 2500Mi
          securityContext:
            runAsUser: 2 # non-root user
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
          ports:
            - name: gitlab-ce-ui-tcp
              protocol: TCP
              # Inside of pod
              containerPort: 10080
            - name: gitlab-ce-ui-tls-tcp
              protocol: TCP
              # Inside of pod
              containerPort: 10443
            - name: gitlab-ce-ssh-tcp
              protocol: TCP
              # Inside of pod
              containerPort: 10022
          lifecycle:
            postStart:
            preStop:
          env:
            - name: DB_USER
              value: gitlab
        - name: gitlab-runner
          image: gitlab/gitlab-runner:latest
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              cpu: 250m
              memory: 200Mi
            limits:
              cpu: 500m
              memory: 400Mi
          securityContext:
            runAsUser: 2 # non-root user
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
          ports:
            - name: gitlab-ui-tcp
              # Used protocol
              protocol: TCP
              # Inside of pod
              containerPort: 10080
            - name: gitlab-ssh-tcp
              # Used protocol
              protocol: TCP
              # Inside of pod
              containerPort: 10022
          lifecycle:
            postStart:
            preStop:
          env:
            - name: DB_USER
              value: gitlab
```


```yaml
# Originally taken from ENDOCODE / Thomas Fricke // https://fahrplan.events.ccc.de/camp/2019/Fahrplan/events/10178.html
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  namespace: wordpress-bare-metal-ns
  labels:
    app: wordpress
    gpu: "false"
    env: staging
spec:
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
        - name: web
          image: wordpress:latest
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              cpu: 250m
              memory: 250Mi
            limits:
              cpu: 500m
              memory: 500Mi
          ports:
            - name: wordpress-web
              protocol: TCP
              containerPort: 80
          lifecycle:
            postStart:
            preStop:
          securityContext:
            capabilities:
              drop:
                - ALL
          env:
            - name: WORDPRESS_DB_HOST
              value: 127.0.0.1:3306
            # [START cloudsql_secrets]
            - name: WORDPRESS_DB_USER
              valueFrom:
                secretKeyRef:
                  name: cloudsql-db-credentials
                  key: username
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: cloudsql-db-credentials
                  key: password
            # [END cloudsql_secrets]
        - name: cloudsql-proxy
          image: gcr.io/cloudsql-docker/gce-proxy:latest
          command: ["/cloud_sql_proxy",
                    "-instances=${PROJECT}:${REGION}:${INSTANCE}=tcp:3306",
                    "-credential_file=/secrets/cloudsql/credentials.json"]
          securityContext:
            runAsUser: 2 # non-root user
            allowPrivilegeEscalation: false
      # [START volumes]
      volumes:
        - name: cloudsql-instance-credentials
          secret:
            secretName: cloudsql-instance-credentials
      # [END volumes]
---
```


e.G.

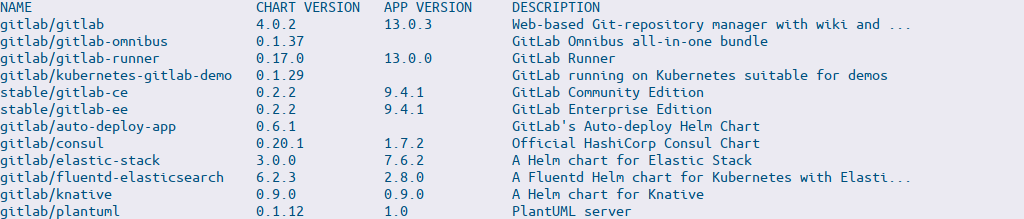

helm search repo gitlabresults in: