- What is your input data?

- What are you trying to predict?

- What type of problem is it: supervised, unsupervised, self-supervised, or reinforcement learning?

- Be aware of the hypotheses that you are making at this stage:

    - You are hypothesizing that your outputs can be predicted given your inputs.

    - You are hypothesizing that your available data is sufficiently informative to learn the relationship between inputs and outputs.

Your metric for success guides the choice of your loss function, i.e. the choice of what your model will optimize. It should directly align with your higher-level goals, such as the success of your business. For example:

- For balanced classification problems, where every class is equally likely, accuracy and ROC-AUC are common metrics.

- For class-imbalanced problems, you can use precision and recall (or the area under the precision-recall curve).
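To see why accuracy can mislead on imbalanced data, here is a minimal NumPy sketch. The labels are made up for illustration: a useless model that always predicts the majority class reaches 95% accuracy, yet its precision and recall on the rare class are both zero.

```python
import numpy as np

def precision_recall(y_true, y_pred):
    """Precision and recall for the positive class (label 1) of a binary problem."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_pred == 1) & (y_true == 1))  # true positives
    fp = np.sum((y_pred == 1) & (y_true == 0))  # false positives
    fn = np.sum((y_pred == 0) & (y_true == 1))  # false negatives
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return float(precision), float(recall)

# Hypothetical imbalanced labels: 95 negatives, 5 positives.
y_true = np.array([0] * 95 + [1] * 5)
# A model that always predicts the majority class (0).
y_majority = np.zeros_like(y_true)

accuracy = float(np.mean(y_majority == y_true))
print(accuracy)                              # 0.95
print(precision_recall(y_true, y_majority))  # (0.0, 0.0)
```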

In addition to optimizing metrics, you can define satisficing metrics: metrics that only need to meet a threshold set by your business rather than be maximized. For example, the model's running time has to be under 100 ms.
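One simple way to combine the two kinds of metric is to discard every candidate that fails the satisficing constraint and then maximize the optimizing metric among the rest. The sketch below assumes hypothetical candidate models whose validation accuracy and latency have already been measured; the names and numbers are illustrative only.

```python
# Hypothetical candidates with measured validation accuracy and latency.
candidates = [
    {"name": "small_cnn", "accuracy": 0.91, "latency_ms": 40},
    {"name": "large_cnn", "accuracy": 0.95, "latency_ms": 180},
    {"name": "medium_cnn", "accuracy": 0.93, "latency_ms": 85},
]

MAX_LATENCY_MS = 100  # satisficing constraint: running time must be under 100 ms

def pick_model(candidates, max_latency_ms):
    """Maximize accuracy (optimizing metric) among models meeting the latency constraint."""
    feasible = [c for c in candidates if c["latency_ms"] < max_latency_ms]
    if not feasible:
        return None  # no candidate satisfies the constraint
    return max(feasible, key=lambda c: c["accuracy"])

best = pick_model(candidates, MAX_LATENCY_MS)
print(best["name"])  # medium_cnn
```

The most accurate model overall (`large_cnn`) is rejected because it violates the latency constraint.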

It is also common to define a custom metric by which to measure success.

Once you know what you are aiming for, you must establish how you will measure your current progress. The three common evaluation protocols are:

- Maintaining a hold-out validation set; this is the way to go when you have plenty of data.

- Doing K-fold cross-validation; this is the way to go when you have too few samples for hold-out validation to be reliable.

- Doing iterated K-fold validation with shuffling; this is for performing highly accurate model evaluation when little data is available.
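The two K-fold protocols above can be sketched with plain NumPy index arithmetic. In this sketch, `evaluate` is a hypothetical callback that trains a fresh model on the training indices and returns its score on the validation indices; iterated K-fold simply reshuffles the data before each K-fold run and averages all K × iterations scores.

```python
import numpy as np

def k_fold_indices(num_samples, k, rng=None):
    """Yield (train_idx, val_idx) pairs for K-fold cross-validation.
    If rng is given, the sample order is shuffled first."""
    indices = np.arange(num_samples)
    if rng is not None:
        rng.shuffle(indices)  # in-place shuffle
    folds = np.array_split(indices, k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

def iterated_k_fold_score(evaluate, num_samples, k, iterations, seed=0):
    """Run K-fold `iterations` times, reshuffling each time, and average the scores."""
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(iterations):
        for train_idx, val_idx in k_fold_indices(num_samples, k, rng):
            scores.append(evaluate(train_idx, val_idx))
    return float(np.mean(scores))

# Hypothetical stand-in for "train a fresh model and return its validation score".
def dummy_evaluate(train_idx, val_idx):
    return len(val_idx) / (len(train_idx) + len(val_idx))

print(iterated_k_fold_score(dummy_evaluate, num_samples=100, k=4, iterations=3))  # 0.25
```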

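Remember to split your dataset into three parts: a training set, a validation set, and a test set. The validation and test sets should come from the same distribution as the training set, and they are usually drawn from the data at random. The exception is data with a temporal order, where the validation and test sets should come from later in time than the training data.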
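A minimal sketch of a random three-way split in NumPy follows. The 20% validation and 20% test fractions and the toy arrays are assumptions for illustration; for time-ordered data you would slice by time instead of shuffling.

```python
import numpy as np

def train_val_test_split(x, y, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle samples at random, then cut them into training, validation and test sets.
    Not appropriate for time-ordered data, which should be split chronologically."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(x))
    x, y = x[perm], y[perm]  # same permutation keeps inputs and labels aligned
    n_test = int(len(x) * test_fraction)
    n_val = int(len(x) * val_fraction)
    x_test, y_test = x[:n_test], y[:n_test]
    x_val, y_val = x[n_test:n_test + n_val], y[n_test:n_test + n_val]
    x_train, y_train = x[n_test + n_val:], y[n_test + n_val:]
    return (x_train, y_train), (x_val, y_val), (x_test, y_test)

# 50 toy samples with 2 features each; row i is [2i, 2i + 1] and its label is i % 2.
x = np.arange(100).reshape(50, 2).astype("float32")
y = np.arange(50) % 2
train, val, test = train_val_test_split(x, y)
print(len(train[0]), len(val[0]), len(test[0]))  # 30 10 10
```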

Once you know what you are training on, what you are optimizing for, and how to evaluate your approach, you are almost ready to start training models. But first, to get a better understanding of your data, summarize it and visualize it using standard tools such as matplotlib, ggplot, or seaborn. After that, format your data so that it can be fed into a machine learning model.

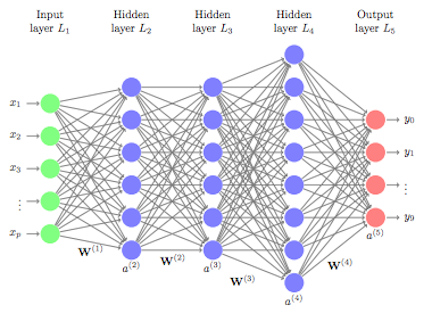

For a deep neural network, a few important steps are:

- First, your data should be formatted as tensors.

- The values taken by these tensors should usually be scaled to small values, e.g. in the [0, 1] range.

- If different features take values in different ranges (heterogeneous data), then the data should be normalized, for example by scaling each feature independently to a mean of 0 and a standard deviation of 1.

- You may also want to do some feature engineering, especially for small data problems.
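The scaling and normalization steps above can be sketched in a few lines of NumPy, using made-up values. One detail worth stressing: the normalization statistics are computed on the training data only and then reused for every other split, so no information from the test set leaks into preprocessing.

```python
import numpy as np

# Scaling: integer pixel values in 0..255 mapped into [0, 1].
raw_pixels = np.array([[0, 128, 255]], dtype="uint8")
pixels = raw_pixels.astype("float32") / 255.0

# Normalization of heterogeneous toy features:
# feature 0 lies in [0, 1], feature 1 is in the thousands.
x_train = np.array([[0.2, 1500.0], [0.4, 3000.0], [0.6, 4500.0]], dtype="float32")
x_test = np.array([[0.5, 2000.0]], dtype="float32")

# Per-feature statistics computed on the training data only.
mean = x_train.mean(axis=0)
std = x_train.std(axis=0)

x_train_norm = (x_train - mean) / std
x_test_norm = (x_test - mean) / std  # test data reuses the training statistics
print(x_train_norm.mean(axis=0))  # close to [0, 0]
print(x_train_norm.std(axis=0))   # close to [1, 1]
```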

Your goal at this stage is to achieve statistical power, that is, to develop a small model that is capable of beating a random baseline. If your model cannot do this, go back through the steps above. Assuming that things go well, there are three key choices you need to make to build your first working deep learning model:
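For classification, the random baseline is the accuracy of guessing a class uniformly at random (1 divided by the number of classes). A slightly harder common-sense baseline, especially on imbalanced data, is to always predict the most frequent training label. The sketch below computes that majority-class baseline on toy labels; the model accuracy it is compared against is a hypothetical number.

```python
import numpy as np

def majority_class_baseline(y_train, y_val):
    """Validation accuracy of always predicting the most frequent training label."""
    values, counts = np.unique(y_train, return_counts=True)
    majority = values[np.argmax(counts)]
    return float(np.mean(y_val == majority))

# Toy labels for a 3-class problem (hypothetical).
y_train = np.array([0, 0, 0, 1, 2, 0])
y_val = np.array([0, 1, 0, 2])

baseline = majority_class_baseline(y_train, y_val)
print(baseline)  # 0.5

model_val_accuracy = 0.62  # hypothetical score of your first small model
print(model_val_accuracy > baseline)  # True: the model has statistical power
```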

- Choice of the last-layer activation. This establishes useful constraints on the network’s output.

- Choice of the loss function. It should match the type of problem you are trying to solve, and it must be differentiable so that it can be minimized by gradient descent.

- Choice of optimization configuration: what optimizer will you use? What will its learning rate be?
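The three choices above can be seen together in a tiny binary classifier. To keep it self-contained, this sketch uses a single-layer model (logistic regression) written in NumPy rather than a deep network, and plain gradient descent stands in for optimizers such as RMSprop or Adam. The toy data and the learning rate of 0.5 are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    y_prob = np.clip(y_prob, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob)))

# Toy, linearly separable binary data (hypothetical).
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 2))
y = (x[:, 0] + x[:, 1] > 0).astype("float64")

# Choice 1: last-layer activation is sigmoid, so outputs are probabilities in (0, 1).
# Choice 2: loss is binary crossentropy, which is differentiable.
# Choice 3: optimizer is plain gradient descent with an assumed learning rate.
learning_rate = 0.5
w = np.zeros(2)
b = 0.0

initial_loss = binary_crossentropy(y, sigmoid(x @ w + b))
for _ in range(200):
    p = sigmoid(x @ w + b)
    # Gradient of the mean binary crossentropy w.r.t. the logits is (p - y) / n.
    grad_logits = (p - y) / len(y)
    w -= learning_rate * (x.T @ grad_logits)
    b -= learning_rate * grad_logits.sum()

final_loss = binary_crossentropy(y, sigmoid(x @ w + b))
accuracy = float(np.mean((sigmoid(x @ w + b) > 0.5) == y))
print(initial_loss, final_loss, accuracy)
```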

Here is a table to help you pick a last-layer activation and a loss function for a few common problem types:

| Problem type | Last-layer activation | Loss function |
|---|---|---|
| Binary classification | `sigmoid` | `binary_crossentropy` |
| Multi-class, single-label classification | `softmax` | `categorical_crossentropy` |
| Multi-class, multi-label classification | `sigmoid` | `binary_crossentropy` |
| Regression to arbitrary values | None | `mse` |
| Regression to values between 0 and 1 | `sigmoid` | `mse` or `binary_crossentropy` |
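The pairings in the table can be illustrated with NumPy versions of the activations and losses. These are simplified sketches, not the Keras implementations: softmax makes classes compete so that probabilities sum to 1 (single-label), sigmoid scores each class independently so several labels can be on at once (multi-label), and unbounded regression uses no activation with mean squared error. The logits and targets are made up.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max for numerical stability; each row sums to 1.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def sigmoid(logits):
    return 1.0 / (1.0 + np.exp(-logits))

def categorical_crossentropy(y_onehot, probs, eps=1e-7):
    probs = np.clip(probs, eps, 1.0)
    return float(-np.mean(np.sum(y_onehot * np.log(probs), axis=-1)))

def binary_crossentropy(y_true, probs, eps=1e-7):
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(probs) + (1 - y_true) * np.log(1 - probs)))

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

logits = np.array([[2.0, 0.5, -1.0]])

# Single-label: softmax + categorical crossentropy (true class is 0).
single = softmax(logits)
cce = categorical_crossentropy(np.array([[1, 0, 0]]), single)

# Multi-label: one sigmoid per class + binary crossentropy (classes 0 and 1 are on).
multi = sigmoid(logits)
bce = binary_crossentropy(np.array([[1, 1, 0]]), multi)

# Regression to arbitrary values: no activation, mean squared error.
print(mse(np.array([3.0, -1.5]), np.array([2.5, -1.0])))  # 0.25
```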

Once you have obtained a model that has statistical power, the question becomes: is your model powerful enough? Does it have enough layers and parameters to properly model the problem at hand? Remember that the universal tension in machine learning is between optimization and generalization: the ideal model is one that stands right at the border between underfitting and overfitting, between undercapacity and overcapacity. To figure out where this border lies, you must first cross it. In other words, to figure out how big a model you will need, you must develop a model that overfits. This is fairly easy:

- Add layers.

- Make your layers bigger.

- Train for more epochs.

When you see that the performance of the model on the validation data starts degrading, you have achieved overfitting.
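Spotting that point can be automated from the recorded validation-loss history. The sketch below, which is similar in spirit to patience-based early stopping, returns the epoch with the lowest validation loss once the loss has failed to improve for `patience` consecutive epochs afterward. The loss values and the patience of 2 are hypothetical.

```python
def overfitting_onset_epoch(val_losses, patience=2):
    """Return the 1-based epoch with the lowest validation loss once the loss has
    not improved for `patience` consecutive epochs after it; otherwise None."""
    best_epoch, best_loss, epochs_without_improvement = None, float("inf"), 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_epoch, best_loss, epochs_without_improvement = epoch, loss, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch
    return None  # validation loss is still improving: no overfitting yet

# Hypothetical validation-loss history recorded during training.
history = [0.70, 0.52, 0.41, 0.38, 0.39, 0.43, 0.48]
print(overfitting_onset_epoch(history))  # 4
```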

This is the part that will take you the most time: you will regularize and tune your model to get as close as possible to the ideal model, one that neither underfits nor overfits. If you know (or can estimate) the Bayes optimal error, it becomes easier to decide whether bias-reduction or variance-reduction tactics will improve the model's performance more.

- If the gap between human-level error (a proxy for Bayes error) and the training error (the avoidable bias) is bigger than the gap between the training error and the validation error (the variance), focus on bias-reduction techniques (optimization), such as training a bigger neural network, using better optimization algorithms, trying a different architecture, or training longer.

- If the gap between the training error and the validation error is bigger than the gap between human-level error and the training error, focus on variance-reduction techniques (generalization), such as regularization (L1/L2, dropout), data augmentation, hyperparameter tuning (number of units per layer, learning rate of the optimizer), further iteration on feature engineering, or a bigger training set.
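The decision rule in the two bullets above reduces to comparing two gaps. Here is a minimal sketch with hypothetical error rates; how to handle a tie is not covered by the rule, so this version arbitrarily falls through to variance reduction.

```python
def diagnose(human_error, train_error, val_error):
    """Compare avoidable bias with variance to decide where to focus."""
    avoidable_bias = train_error - human_error  # gap to the Bayes-error proxy
    variance = val_error - train_error          # generalization gap
    if avoidable_bias > variance:
        return "focus on bias reduction"
    return "focus on variance reduction"  # ties also land here (arbitrary choice)

# Hypothetical error rates.
print(diagnose(human_error=0.01, train_error=0.08, val_error=0.10))  # focus on bias reduction
print(diagnose(human_error=0.01, train_error=0.02, val_error=0.10))  # focus on variance reduction
```

In the first case the avoidable bias (about 7 points) dwarfs the variance (about 2 points); in the second, the 8-point gap between training and validation error dominates.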

You are always welcome to try unconventional methods here, or to examine the model manually and analyze its errors.
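A common starting point for manual error analysis is to find where the errors concentrate, for example with a confusion matrix over the validation set. The sketch below uses hypothetical labels and predictions for three classes; here most errors fall on class 1, which suggests inspecting those examples first.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i, j] counts validation samples of true class i predicted as class j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # unbuffered add handles repeated index pairs
    return cm

# Hypothetical validation labels and model predictions.
y_true = np.array([0, 0, 1, 1, 1, 2, 2, 2, 2])
y_pred = np.array([0, 0, 1, 2, 2, 2, 2, 1, 2])

cm = confusion_matrix(y_true, y_pred, num_classes=3)
errors_per_class = cm.sum(axis=1) - np.diag(cm)  # misclassified samples per true class
print(cm)
print(errors_per_class)  # [0 2 1]
```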

Once you have developed a seemingly good enough model configuration, you can train your final production model on all available data (training and validation) and evaluate it one last time on the test set.

To summarize, the workflow is:

- Define the problem at hand and the data you will be training on; collect this data or annotate it with labels if need be.

- Choose how you will measure success on your problem. Which metrics will you be monitoring on your validation data?

- Determine your evaluation protocol: hold-out validation? K-fold validation? Which portion of the data should you use for validation?

- Analyze and then prepare your data before feeding it to a machine learning model.

- Develop a first model that does better than a basic baseline: a model that has "statistical power".

- Develop a model that overfits.

- Regularize your model and tune its hyperparameters, based on performance on the validation data.

Sources:

- 'Deep Learning with Python' book by François Chollet

- deeplearning.ai 'Structuring Machine Learning Projects' course by Andrew Ng