Andy Thomason is a Senior Programmer at Genomics PLC. He has been witing graphics systems, games and compilers since the '70s and specialises in code performance.

The human genome consists of two copies of about 3 billion base pairs of DNA using the alphabet A, C, G and T. This is about two bits per base or

3,000,000,000 * 2 * 2 / 8 = 1,500,000,000 or about 1.5GB of data.

https://en.wikipedia.org/wiki/Human_genome

In reality the two copies are very similar and indeed the DNA of all humans is nearly identical from Wall Street trader to Australian aboriginal.

There are a number of "reference genomes" such as the Ensembl Fasta files available from here:

ftp://ftp.ensembl.org/pub/release-96/fasta/homo_sapiens/

Reference genomes help us to build a map of where we might find particlar features in human DNA, but do not represent any real individuals.

For example, we can use it to name a "location" for a protein coding gene such as BRCA2, a DNA repair mechanism implicated in breast cancer:

https://www.ensembl.org/Homo_sapiens/Gene/Summary?db=core;g=ENSG00000139618;r=13:32315474-32400266

This gene is on chromosome 13 from position 32315474 to 32400266.

Humans are so similar that all we usually have to store to represent them is a small set of "variants".

Over time our DNA gets damaged by cosmic rays and copy errors and so the DNA that parents hand down to children is very slightly different than their own.

A process called recombination further shuffles things so that the DNA a child inherits from their parent is actually a mix of the DNA from their two grandparents on that side.

So for each change to our DNA, we only need to store the differences from the reference genome and this usually comes in the form of a VCF file (Variant Call Format).

As with almost all files in bioinformatics, this is a TSV (Tab separated value) file.

You can get your own VCF file from the likes of 23 and me and ancestry.com: you pay a relatively small fee and send a sample which will be have a subset of its variants sequenced on an array chip. The chip lights up where DNA matches expected sequences.

Example abbreviated from

##fileDate=20090805

##source=myImputationProgramV3.1

##reference=1000GenomesPilot-NCBI36

##phasing=partial

#CHROM POS ID REF ALT QUAL FILTER INFO FORMAT NA00001 NA00002 NA00003

20 14370 rs6054257 G A 29 PASS NS=3;DP=14;AF=0.5;DB;H2 GT:GQ:DP:HQ 0|0:48:1:51,51 1|0:48:8:51,51 1/1:43:5:.,.

Here we have three people named NA00001..3 (we take personal data security very seriously in the genetics world) who have some variants 0|0 1|0 and 1|1 changing from G to A in position 14370 of chromosome 20.

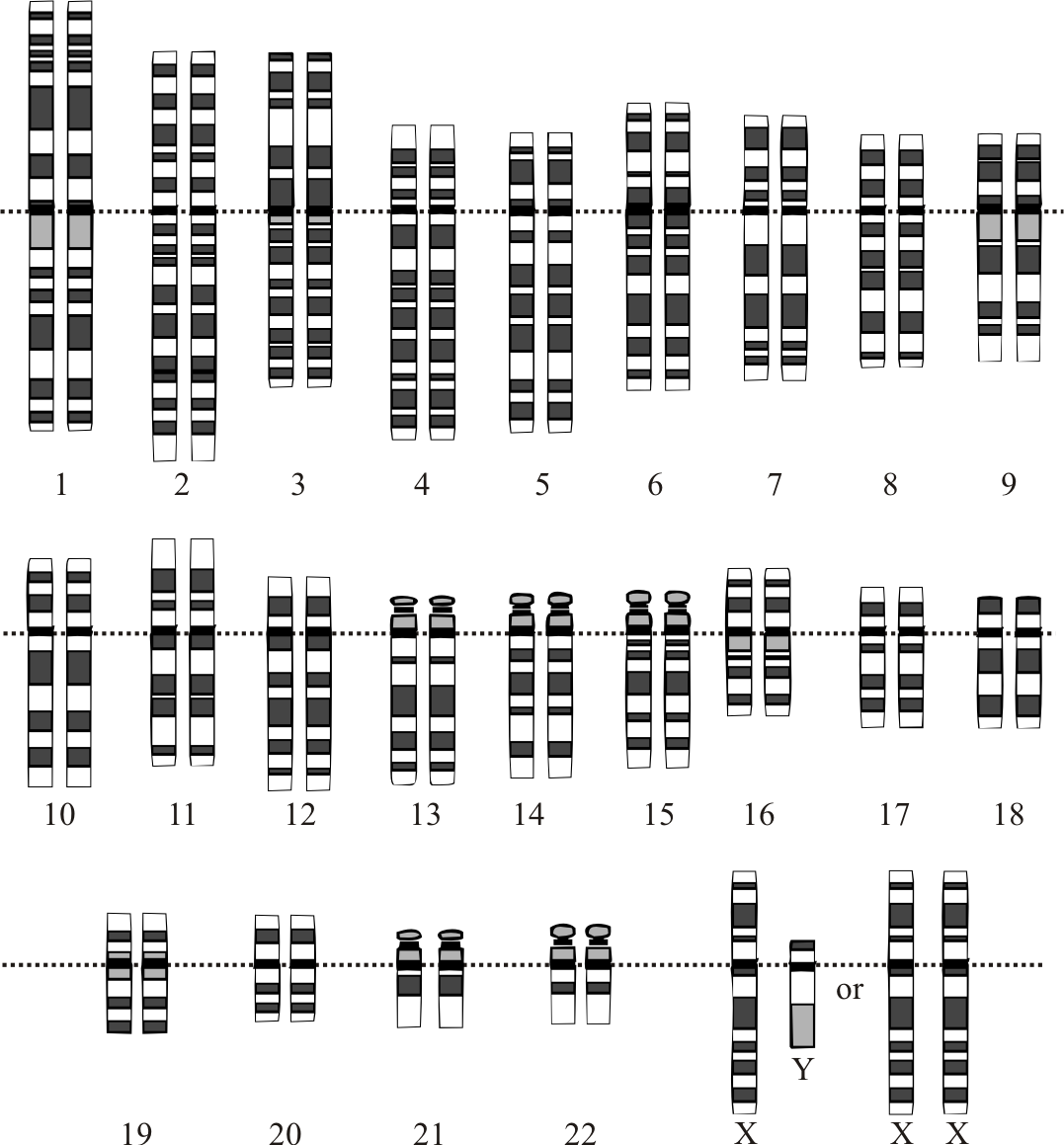

There are two numbers per person as we all have two copies of chromosome 20 (one from each parent; the only exceptions are the sex chromosomes. I am unlucky enough to only have one X chromosome and so I have inheirited colour blindness from my Grandfather via my mother.).

We can have either

0|0 both the same as the reference

1|0 and 0|1 only one chromosome is different from the reference

1|1 both chromosomes are different from the reference.

VCF files are "Phased" if we can work out which chromosome the variant is actually on, or at least where it is relative to its neighbours. In practice, unless you have a very small pair of tweezers, it is hard to tell which chromosome the DNA came from and so we guess!

So in reality we have a bit vector 001011 which is enough to classify these three humans in this variation.

These are the haplotypes or variation on individual chromosomes.

We can now use this bit vector to try to work out what bits of the genome affect what diseases or other things like hair colour or height. For each variant we plot each haplotype against the thing we want to measure (the phenotype)

This is a GWAS or Genome wide association study and is the core of genetic variant analysis. It makes a map from variants to observations.

For example

Haplotype Height Person

0 1.5m NA00001

0 1.5m

1 1.75m NA00002

0 1.75m

1 1.95m NA00003

1 1.95m

Note that everyone has two haplotypes because we have pairs of chromosomes.

Here we see that the amount of 1'ness gives more height and we do a linear regression to find two values:

beta The change in height with a change in variant.

standard error A measure of how noisy our data is.

In practice the data is really noisy and the error tends to be larger than the beta, but often we have a few variants where the beta is much stronger than the error and this ratio - the Z-score and its associated value the p-value show which variants are likely to be an influence on height.

The simplest way to do the regression is to use the Moore Penrose inverse.

https://en.wikipedia.org/wiki/Moore%E2%80%93Penrose_inverse

We make a 2x2 matrix of covariance with the dot product of the two vectors and then solve to find the least squares solution.

For a good sized data set we have literally trillions of data points, so doing this efficiently is important.

Because we inheirit big chunks of DNA from our parents it means that when we look at a particular area of the DNA we look very similar - far more similar that chance would dictate.

This is great for us as our genes carry on working the way they did in our ancestors, but bad for genomics researchers. It means that we are not sufficiently different for us to be able to tell which variant is the cause of a phenotype change.

Linkage Disequilibrium (LD) is a measure of how similar two vectors of variants are.

https://en.wikipedia.org/wiki/Linkage_disequilibrium

It gives a value between -1 and 1 where

-1 The two variants are completely opposite

0 The variants are not alike

1 The variants are exactly the same

We make big square matrices of LD around certain sites in the genome to explore how similar the variants are. In practice many of the variants surrounding a site are almost identical to the variant in the middle.

The matrix ends up a bit like this with big squares of similarity.

v0 v2 v4 v6 v8 va vc ve vg

v1 v3 v5 v7 v9 vb vd vf

v0 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v2 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v3 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v4 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v5 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v6 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v7 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0

v8 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

v9 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

va 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

vb 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

vc 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

vd 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

ve 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

vf 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

vg 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1

The actual values aren't exactly 0 or 1, but very similar.

The gap between v7 and v8 is where recombination has happened. This has made v0..v7 different from v8..vg

The problem with the similarity is that we know that one of the variants in the group caused something, but not which one.

This limits the resolution of out genomic microscope and we need to use further methods such as functional genomics to resolve this issue.

At the end of the day, we can't be sure that our choice of an answer to "what causes my thing" is the right one, but that is genetics through and through. Biology is not a nice tidy machine with perfect clockwork parts, it is a seething mass of randomness that somehow makes all this stuff we call life. That is why statistics, or "Machine Learning" as it has been rebranded is so important.

This was not bad, assuming a beginning learner. The guide is to teach programmers a little bit of Biology right? In this light it does a good job of outlining the process, and the basics. There are more features of DNA known (now) to influence phenotypic variation, but this serves as a starting point. As a life scientist it seems fair to simplify the biology for coders, just like many lessons are simplifying coding for life scientists. We inherit our DNA in big chunks from each of our parents.