GitHub gist conormm/1c82b093c9c6002e7ca6ff6e9fb34f05 (last active September 9, 2022 15:47)


```python
import tensorflow as tf  # TensorFlow 1.x API (placeholders, sessions)
from tensorflow.examples.tutorials.mnist import input_data
import begin  # provided by the "begins" package: pip install begins

l1_nodes = 200
l2_nodes = 100
final_layer_nodes = 10

# Define a placeholder for the data (28 x 28 = 784 pixels, flattened),
# also considered the "visible layer": the layer that we see.
X = tf.placeholder(dtype=tf.float32, shape=[None, 784])
# Placeholder for the correct labels (one-hot, 10 classes).
Y_ = tf.placeholder(dtype=tf.float32, shape=[None, final_layer_nodes])

# Define weights / layers here.
# Each layer in the network needs its own weights and bias; the input
# to one layer is the output of the previous layer.
w1 = tf.Variable(initial_value=tf.truncated_normal([784, l1_nodes], stddev=0.1))
b1 = tf.Variable(initial_value=tf.zeros([l1_nodes]))
Y1 = tf.nn.relu(tf.matmul(X, w1) + b1)

w2 = tf.Variable(initial_value=tf.truncated_normal([l1_nodes, l2_nodes], stddev=0.1))
b2 = tf.Variable(initial_value=tf.zeros([l2_nodes]))
Y2 = tf.nn.relu(tf.matmul(Y1, w2) + b2)

w3 = tf.Variable(initial_value=tf.truncated_normal([l2_nodes, final_layer_nodes], stddev=0.1))
b3 = tf.Variable(initial_value=tf.zeros([final_layer_nodes]))
logits = tf.matmul(Y2, w3) + b3
Y = tf.nn.softmax(logits)

# Define the cost function and evaluation metric.
# Computing the loss from the logits avoids the NaN that
# -tf.reduce_sum(Y_ * tf.log(Y)) produces once a softmax output underflows to 0.
cross_entropy = tf.reduce_sum(
    tf.nn.softmax_cross_entropy_with_logits_v2(labels=Y_, logits=logits))
is_correct = tf.equal(tf.argmax(Y, 1), tf.argmax(Y_, 1))
accuracy = tf.reduce_mean(tf.cast(is_correct, tf.float32))

# Gradient descent
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.003)
train_step = optimizer.minimize(loss=cross_entropy)

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)


@begin.start
def main(n_iter: "number of iterations in model"):
    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)
    test_data = {X: mnist.test.images, Y_: mnist.test.labels}
    for i in range(int(n_iter)):
        batch_X, batch_y = mnist.train.next_batch(100)
        # Link the previously defined placeholders to the incoming data.
        train_data = {X: batch_X, Y_: batch_y}
        # Train
        sess.run(train_step, feed_dict=train_data)
        if i % 100 == 0:
            # Training and test accuracy and cost. Evaluating the full
            # 10,000-image test set only every 100 steps keeps the loop fast.
            train_a, train_c = sess.run([accuracy, cross_entropy], feed_dict=train_data)
            test_a, test_c = sess.run([accuracy, cross_entropy], feed_dict=test_data)
            print("Train accuracy: {}, Test accuracy: {}".format(train_a, test_a))
```
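The loss `-tf.reduce_sum(Y_ * tf.log(Y))` takes the log of softmax outputs. When one logit dominates, the other probabilities underflow to exactly 0, `tf.log` returns `-inf`, and `0 * -inf` evaluates to NaN, which then poisons the gradients. The sketch below reproduces this in plain Python (no TensorFlow needed). `tf_like_log` is a hypothetical helper that mimics `tf.log` returning `-inf` for 0 instead of raising like `math.log`; `stable_cross_entropy` works in log space via log-sum-exp, which is the kind of stable formulation that TensorFlow's `tf.nn.softmax_cross_entropy_with_logits_v2` (TF 1.5+) is meant to provide when you pass it logits rather than probabilities.

```python
import math


def softmax(logits):
    # Subtract the max logit before exponentiating to avoid overflow.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def tf_like_log(p):
    # Mimics tf.log: log(0.0) is -inf rather than a ValueError.
    return math.log(p) if p > 0.0 else -math.inf


def naive_cross_entropy(labels, logits):
    # Same recipe as the original script: softmax first, then -sum(y * log(p)).
    probs = softmax(logits)
    return -sum(y * tf_like_log(p) for y, p in zip(labels, probs))


def stable_cross_entropy(labels, logits):
    # log p_i = z_i - logsumexp(z), so no probability is ever rounded to 0
    # before the log is taken.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


labels = [0.0, 1.0, 0.0]     # one-hot: the true class is 1
logits = [1000.0, 0.0, 0.0]  # very confident in the wrong class
print(naive_cross_entropy(labels, logits))   # nan (0 * -inf in the third term)
print(stable_cross_entropy(labels, logits))  # 1000.0
```

For moderate logits both functions agree; they only diverge once the softmax saturates, which is why the NaN can appear well into training rather than at the first step.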

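Ah, the `begin` module comes from the `begins` package: run `pip install begins` if you want to run this script from the command line.

Alternatively, remove the `import begin` line from the top of the script, but then also remove the `@begin.start` decorator (otherwise you get a `NameError`) and call `main()` yourself with the number of iterations, e.g. `main(1000)`.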
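If you would rather drop the `begins` dependency than install it, a minimal sketch of a standard-library replacement for the `@begin.start` command line uses `argparse`. The names `parse_args` and the stub `main` are hypothetical: the stub only stands in for the script's training loop, and the CLI is an assumed approximation of what `begins` generates from `main`'s signature (a required positional `n_iter`).

```python
import argparse


def parse_args(argv=None):
    # Hypothetical helper: builds a CLI with one required positional argument,
    # reusing the help text from the original n_iter annotation.
    parser = argparse.ArgumentParser(description="Train a 3-layer MNIST classifier.")
    parser.add_argument("n_iter", type=int, help="number of iterations in model")
    # argv=None makes argparse read sys.argv[1:].
    return parser.parse_args(argv)


def main(n_iter):
    # Hypothetical stand-in: replace this body with the script's training loop.
    print("would train for {} iterations".format(n_iter))
```

To wire it up, call `main(parse_args().n_iter)` under an `if __name__ == "__main__":` guard at the bottom of the script, then run it as `python your_script.py 1000` (script name hypothetical). Because `type=int` converts the argument, the `int(n_iter)` cast inside the loop becomes redundant but harmless.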

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

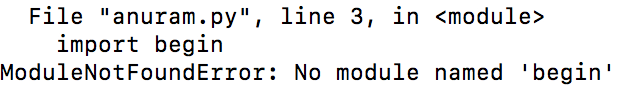

When I try running the above script, I get the following error:

```
  File "anuram.py", line 3, in <module>
    import begin
ModuleNotFoundError: No module named 'begin'
```