Prompt engineering is a burgeoning field at the crossroads of Natural Language Processing (NLP), Machine Learning (ML), and Human-Computer Interaction (HCI). It is the practice of crafting prompts that elicit desired responses from Large Language Models (LLMs). This article explores three core categories of LLM operations (reductive, transformation, and generative), then examines the latent and emergent properties of language models through the lens of Bloom's Taxonomy.

Reductive operations are about condensing information. They take a large amount of text and produce a smaller, more manageable output, ensuring the essence remains intact.

- Summarization: Condensing information while retaining the core message.

Example:

Original Text: "The quick brown fox jumps over the lazy dog."

Summarized: "A fox jumps over a dog."

- Distillation: Purifying the underlying principles or facts, stripping away the noise.

Example:

Original Text: "Water boils at 100 degrees Celsius, a fact discovered centuries ago."

Distilled: "Water boils at 100°C."

- Extraction: Retrieving specific types of information like dates, names, or answers to questions.

Example:

Text: "The Declaration of Independence was signed on July 4, 1776."

Extracted Date: "July 4, 1776"

- Characterizing: Describing the content of the text, either as a whole or within a specific subject matter.

- Analyzing: Finding patterns or evaluating text against a framework.

- Evaluation: Measuring, grading, or judging the content based on certain criteria.

- Critiquing: Providing feedback within the context of the text for improvement.
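In practice, each reductive operation comes down to an instruction wrapped around the source text. The sketch below shows one possible way to keep such instructions as reusable templates and to parse a one-item-per-line extraction reply. The template wording and the names `REDUCTIVE_TEMPLATES`, `build_reductive_prompt`, and `parse_extraction_reply` are hypothetical illustrations, not a tested or recommended prompt set, and no model is actually called here.

```python
from string import Template

# Hypothetical instruction templates for three reductive operations.
# The wording is illustrative only, not a tested or recommended prompt.
REDUCTIVE_TEMPLATES = {
    "summarize": Template(
        "Summarize the following text in one sentence, keeping its core message.\n\n$text"
    ),
    "distill": Template(
        "State only the underlying fact or principle in the following text, "
        "removing incidental detail.\n\n$text"
    ),
    "extract": Template(
        "List every $field mentioned in the following text, one per line, "
        "with no other commentary.\n\n$text"
    ),
}


def build_reductive_prompt(operation: str, text: str, field: str = "date") -> str:
    """Fill the template for a reductive operation with the source text."""
    if operation not in REDUCTIVE_TEMPLATES:
        raise ValueError(f"unknown reductive operation: {operation!r}")
    # Templates without a $field placeholder simply ignore the extra keyword.
    return REDUCTIVE_TEMPLATES[operation].substitute(text=text, field=field)


def parse_extraction_reply(reply: str) -> list[str]:
    """Split a one-item-per-line model reply into a list, dropping blank lines."""
    return [line.strip() for line in reply.splitlines() if line.strip()]


prompt = build_reductive_prompt(
    "extract", "The Declaration of Independence was signed on July 4, 1776."
)
print(prompt)
# A reply such as "July 4, 1776\n" parses to ["July 4, 1776"].
print(parse_extraction_reply("July 4, 1776\n"))
```

Asking for a fixed output shape (one item per line) is a design choice that makes the reduced output easy to check programmatically; other formats such as JSON would work equally well under the same idea.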

Transformation operations are about altering the format or structure of the text while preserving its original meaning and keeping it roughly the same size.

- Reformatting: Changing the presentation, like converting prose to screenplay or XML to JSON.

Example:

Original Format: "<tag>hello</tag>"

Reformatted: { "tag": "hello" }

- Refactoring: Achieving the same results but with more efficiency.

- Language Change: Translating text from one language to another.

- Restructuring: Optimizing structure for logical flow.

- Modification: Rewriting to achieve a different intention, like changing tone or style.

- Clarification: Making text more comprehensible.
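For the XML-to-JSON reformatting example above, the target output is fully determined by the input, so a conventional converter can produce it too and serve as a reference to compare a model's reformatted output against. The sketch below uses only Python's standard `xml.etree.ElementTree` and `json` modules; the helper names are hypothetical, and it deliberately ignores attributes and repeated sibling tags to stay short.

```python
import json
import xml.etree.ElementTree as ET


def element_to_value(elem: ET.Element):
    """Convert an element to a JSON-compatible value.

    Leaf elements become their text; elements with children become a dict
    keyed by child tag. Attributes and repeated sibling tags are ignored in
    this simplified sketch (a repeated tag would overwrite the earlier one).
    """
    children = list(elem)
    if not children:
        return elem.text or ""
    return {child.tag: element_to_value(child) for child in children}


def xml_to_json(xml_text: str) -> str:
    """Parse an XML string and serialize it as JSON keyed by the root tag."""
    root = ET.fromstring(xml_text)
    return json.dumps({root.tag: element_to_value(root)})


print(xml_to_json("<tag>hello</tag>"))  # {"tag": "hello"}
```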

Generative operations are about creating a large amount of text from a small set of instructions or data.

- Drafting: Generating drafts or documents.

Example:

Instruction: "Draft a letter to Mr. Smith regarding the meeting schedule."

Generated Draft:

"Dear Mr. Smith,

I am writing to inform you about the meeting schedule..."

- Planning: Coming up with plans given certain parameters.

- Brainstorming: Listing out possibilities using imagination.

- Amplification: Expanding on a topic to provide a deeper understanding.
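Generative operations invert the reductive pattern: a few parameters go in, and the model is expected to return a much longer text. A minimal sketch of that input side, assuming hypothetical field names (`document_type`, `recipient`, `topic`, `tone`) and instruction wording chosen only for illustration, rebuilds the drafting instruction from the example above and adds a brainstorming variant:

```python
from dataclasses import dataclass


@dataclass
class DraftRequest:
    """Hypothetical parameters for a drafting prompt; field names are illustrative."""
    document_type: str
    recipient: str
    topic: str
    tone: str = "professional"


def build_draft_prompt(req: DraftRequest) -> str:
    # A short instruction that the model is expected to expand into a full document.
    return (
        f"Draft a {req.document_type} to {req.recipient} regarding {req.topic}. "
        f"Use a {req.tone} tone and include a greeting and a closing."
    )


def build_brainstorm_prompt(topic: str, n: int = 5) -> str:
    # Asking for a fixed number of items keeps the generated list easy to review.
    return f"List {n} distinct ideas for {topic}, one per line."


prompt = build_draft_prompt(DraftRequest("letter", "Mr. Smith", "the meeting schedule"))
print(prompt)
print(build_brainstorm_prompt("the team offsite agenda", n=3))
```

The prompt stays one or two sentences long; all of the length in a generative operation comes from the model's response, which this sketch does not produce.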

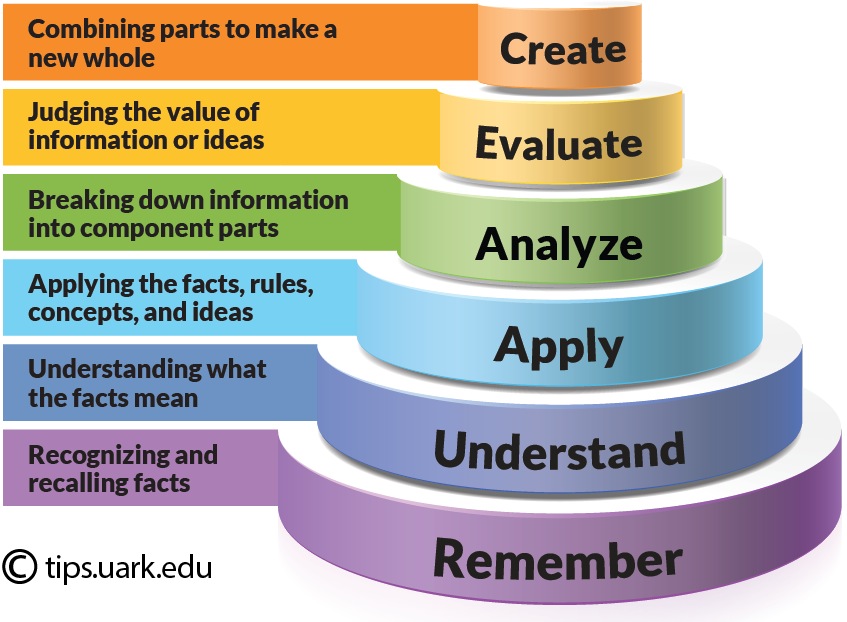

Bloom's Taxonomy is a hierarchical model classifying educational learning objectives into levels of complexity and specificity, from simple recall of facts (Remembering) to the creation of new work (Creating). It's a lens through which we can understand the capabilities of LLMs.

Latent content is the embedded knowledge within the model, originating from the training data. It encompasses world knowledge, scientific principles, cultural norms, historical data, and language structures.

Emergent capabilities are abilities that manifest in LLMs without being explicitly present in the training data, enabling behaviors such as Theory of Mind, Implied Cognition, Logical Reasoning, and In-Context Learning.
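In-Context Learning is the most directly observable of these: the model's weights are not updated, yet it can infer a task from a handful of worked examples placed in the prompt. The sketch below assembles such a few-shot prompt. The `Input:`/`Output:` labels are an illustrative convention rather than a required format, the second example pair and the query are invented for illustration, and whether a particular model follows the pattern is not guaranteed.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt: labelled input/output pairs, then an open query.

    The model is expected to infer the task from the pairs and complete the
    final "Output:" line; nothing here trains or calls a model.
    """
    blocks = [f"Input: {inp}\nOutput: {out}" for inp, out in examples]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Date-extraction demonstrations; the second pair is a made-up example.
examples = [
    ("The Declaration of Independence was signed on July 4, 1776.", "July 4, 1776"),
    ("The meeting was moved to March 3.", "March 3"),
]
prompt = build_few_shot_prompt(examples, "The report is due on May 12.")
print(prompt)
```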

Hallucination in LLMs is akin to human creativity, differing mainly in whether the output is recognized as fictional. Both involve similar cognitive processes for generating ideas, and whether the output is valuable or risky depends on the context.

Understanding the mechanics of prompt engineering is pivotal for anyone looking to harness LLMs effectively. Together, the reductive, transformation, and generative operations and the latent and emergent properties of language models provide a robust framework for appreciating the intricacies of these models.