| # Get a person's name, location, summary, # of connections, and skills & endorsements from LinkedIn | |

| # URL of the LinkedIn page | |

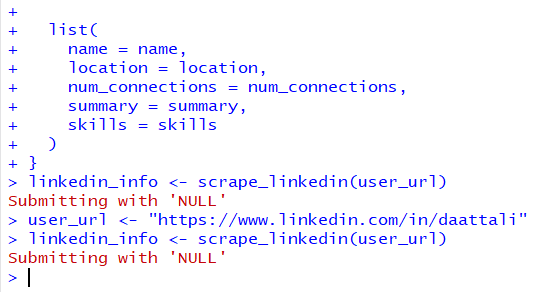

| user_url <- "https://www.linkedin.com/in/daattali" | |

| # since the information isn't available without being logged in, the web | |

| # scraper needs to log in. Provide your LinkedIn user/pw here (this isn't stored | |

| # anywhere as you can see, it's just used to log in during the scrape session) | |

| username <- "yourusername" | |

| password <- "yourpassword" | |

| # takes a couple seconds and might throw a warning, but ignore the warning | |

| # (linkedin_info <- scrape_linkedin(user_url)) | |

| ############################ | |

| library(rvest) | |

| scrape_linkedin <- function(user_url) { | |

| linkedin_url <- "http://linkedin.com/" | |

| pgsession <- html_session(linkedin_url) | |

| pgform <- html_form(pgsession)[[1]] | |

| filled_form <- set_values(pgform, | |

| session_key = username, | |

| session_password = password) | |

| submit_form(pgsession, filled_form) | |

| pgsession <- jump_to(pgsession, user_url) | |

| page_html <- read_html(pgsession) | |

| name <- | |

| page_html %>% html_nodes("#name") %>% html_text() | |

| location <- | |

| page_html %>% html_nodes("#location .locality") %>% html_text() | |

| num_connections <- | |

| page_html %>% html_nodes(".member-connections strong") %>% html_text() | |

| summary <- | |

| page_html %>% html_nodes("#summary-item-view") %>% html_text() | |

| skills_nodes <- | |

| page_html %>% html_nodes("#profile-skills .skill-pill") | |

| skills <- | |

| lapply(skills_nodes, function(node) { | |

| num <- node %>% html_nodes(".num-endorsements") %>% html_text() | |

| name <- node %>% html_nodes(".endorse-item-name-text") %>% html_text() | |

| data.frame(name = name, num = num) | |

| }) | |

| skills <- do.call(rbind, skills) | |

| list( | |

| name = name, | |

| location = location, | |

| num_connections = num_connections, | |

| summary = summary, | |

| skills = skills | |

| ) | |

| } |

| # Make a wordcloud of the most common words in a person's tweets | |

| # Need to create a Twitter App and get credentials | |

| # (twitteR must be loaded before setup_twitter_oauth() can be called) | |

| library(twitteR) | |

| setup_twitter_oauth(USE_YOUR_CREDENTIALS_HERE) | |

| # Username of the Twitter user | |

| name <- "daattali" | |

| ##################### | |

| library(twitteR) | |

| library(SnowballC) | |

| library(wordcloud) | |

| library(tm) | |

| library(stringr) | |

| library(dplyr) | |

| user <- userTimeline(user = name, n = 3200, includeRts = FALSE, excludeReplies = TRUE) | |

| tweets <- sapply(user, function(x) { strsplit(gsub("[^[:alnum:] ]", "", x$text), " +")[[1]] }) | |

| topwords <- | |

| # flatten first: pasting the list directly would deparse each element as c("...") | |

| unlist(tweets) %>% | |

| paste(collapse = " ") %>% | |

| str_split("\\s") %>% | |

| unlist %>% | |

| tolower %>% | |

| removePunctuation %>% | |

| removeWords(stopwords("english")) %>% | |

| #wordStem %>% | |

| .[. != ""] %>% | |

| table %>% | |

| sort(decreasing = TRUE) %>% | |

| head(100) | |

| wordcloud(names(topwords), topwords, min.freq = 3) |

Cool script. Thanks for sharing. I've noticed that several words in my cloud have the letter 'c' stuck in front of them. Any ideas what causes that?
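A likely cause, sketched with made-up sample data rather than real tweets: `sapply()` returns a list when the tweets have different numbers of words, and `paste()` on a list deparses each element as `c("...")`. Once punctuation is stripped, the leading `c` ends up glued to the first word of each tweet. The variable names `pasted_list`, `words_bad` and `words_ok` below are only for illustration.

```r
# Hypothetical sample data standing in for the per-tweet word vectors
tweets <- list(c("cool", "script"), c("thanks", "for", "sharing"))

# paste() on a list deparses each element, so the result contains c("cool", "script")
pasted_list <- paste(tweets, collapse = " ")

# Stripping punctuation then glues the leading "c" onto the first word of each tweet
words_bad <- strsplit(gsub("[[:punct:]]", "", pasted_list), " +")[[1]]

# Flattening the list before pasting keeps the words intact
words_ok <- strsplit(paste(unlist(tweets), collapse = " "), " +")[[1]]

print(words_bad)
print(words_ok)
```

If this is what is happening, flattening the word list with `unlist()` before the `paste(collapse = " ")` step should remove the stray letters.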

@JessicaRudd I'm getting the same error. I'm thinking many things have changed on LinkedIn in 2 years since this was posted...

@JessicaRudd @taraskaduk - sorry for not responding, GitHub doesn't seem to send me notifications when a comment gets posted on a gist.

This code was written in a rush for a hackathon a long time ago. It was not meant to be robust or to stand the test of time. I'm sure the LinkedIn website has changed enough since then that this doesn't work anymore, sorry! But hopefully it can still be a helpful starting point.

Thanks @daattali.

Redirected to SO for help:

Sir, I want to scrape the career growth of a college's alumni from their LinkedIn profiles. How can this be done?

hey, do you still need help with this?