- We've been learning a lot of practical / procedural knowledge

- We've learned there is more than one way to solve a software problem

- We've seen a sampling of technical interview questions

- Employers still want a firm basis of theoretical understanding

- One area of focus: algorithmic complexity

- A fancy word for a: step-by-step way to solve a problem

IE, write a method that determines if an item appears in an array:

const exists = (array, target) => {

for (element of array) {

if (element === target) {

return exists

}

}

}We could also use .find()

- We've coached: sloppy code is better than no code

- As our apps and problems become more complex with larger volumes of data, how will our apps perform?

- How does the

existsmethod perform for large data sets?

const exists = (array, target) => {

for (element of array) {

if (element === target) {

return exists

}

}

}Imagine if the array is over 10,000 elements long...

- How many operations/loops if the item we're looking for is the first item in the list?

- How many operations/loops if the item we're looking for is at the end of the list?

const exists = (array, target) => {

for (element of array) {

if (element === target) {

return exists

}

}

}Big O is here to help us decipher how complex or expensive an algorithm is with massive data sets

- Imagine a really large number of items in the array (close to infinity). That n

- For every loop "around the race track" there is a computational expense, we call Big-O

- Created by Paul Bachmann,

Ostands for "Ordnung", or the order of approximation

- Created by Paul Bachmann,

The worst case performance of this algorithm is O(n)

The worst case performance of this algorithm is O(n)

Reasoning

Worst case scenario: If the item we're seeking is either not in the list, or at the very end of the list, we have to loop through ALL of the items in the array. It takes "n" amount of time (or computational expense) in that bad scenario.

- Computer Scientists (that's you) will interact with large volumes of data, thus algorithmic performance and code at scale is important

- Generally, you simply need to be able to take a measure of the general complexity of the code you are working with, and be able to speak to whether it is inefficient or increasingly efficient, etc.

- Accessing an item in an array

- No matter how large the data set is, the time to access a single item is constant.

list = [1,4,6,7]

list[1]- Good performance at scale

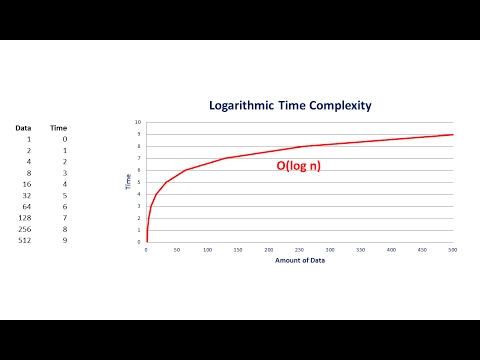

- usually pre-sorted list

- As data volume grows, efficiencies are gained

1 item takes 1 second, 10 items takes 2 seconds, 100 items takes 3 seconds.

const binarySearch = (n, array) => {

let middle = array.length / 2

let i = 0

let j = array.length - 1

while (i < j) {

if (array[middle] === n) {

return true

} else if (array[middle] < n) {

i = middle + 1

middle = i + j / 2

} else {

j = middle - 1

middle = i + j / 2

}

}

return false

}Simple iteration of array with n elements

1 item takes 1 second, 10 items takes 10 seconds, 100 items takes 100 seconds.

What happens if for some reason (who knows why) we have to iterate over the array twice?

const exists = (array, target) => {

let check1, check2

for (element of array) {

if (element === target) {

check1 = true

}

}

for (element of array) {

if (element === target) {

check2 = true

}

}

return check1 && check2

}Complexity: Big O(2n)

But this isn't meaningful enough of a difference. We might want to update our code to speed it up somehow, but this isn't a big enough leap for it too matter too much.

However, if the thing we are measuring is page re-renders, then this actually might matter a lot

Point: Big O is more concerned with dramatic changes in complexity. However, if each process is already costly, we'll have lower tolerance for more complexity.

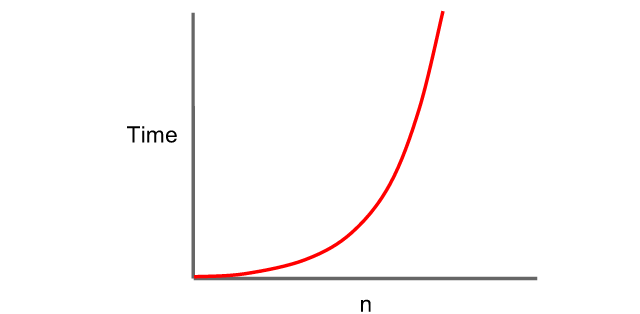

- Generally encountered when dealing with nested loops, or lookups while looping.

1 item takes 1 second, 10 items takes 100, 100 items takes 10000.

const allCombinations = (theList) => {

let results = []

theList.forEach((outerItem) => {

theList.forEach((innerItem) => {

results.push([outerItem, innerItem])

})

})

return results

}- Each new addition of data dramatically impacts performance

- These algorithms do not scale!

Performance benchmarking in ms, n = # of houses

bestTravelingSalesmanRoute(1) // 0.02 ms

bestTravelingSalesmanRoute(2) // 0.04 ms

bestTravelingSalesmanRoute(10) // 42.08 ms

bestTravelingSalesmanRoute(12) // 5231.54 ms => 5 seconds

bestTravelingSalesmanRoute(13) // 69565.01 ms => 70 SECONDS!

bestTravelingSalesmanRoute(14) // SMOKE & FLAMES!!- Big O represents worst-case performance of an algorithm.

- Linear and logarithmic complexities are usually in acceptable bounds.

- O(2^n) and O(n!) performing algorithms do not scale.

- Direct access? O(1)

- Iterating over a list once? O(n)

- Does processing decrease over time for large datasets? Does it bisect nodes? O(log n)

- Nest iteration? O(n^2)

- Check out our curriculum

- Big O JavaScript

- Big O Cheatsheet

- Big O Considerations in Ruby

- Big O Explained in Ruby

- Idiot's Guide to Big O