Current image generation models use a technique called classifier-free guidance1 or super conditioning2: At each step, they draw not just what fits the prompt best, but what sets images fitting that description apart from ones that reach similar intermediate results so far but might fit a totally different caption in the end.

To do that, they predict what they'd do next if the prompt was blank and decide based on the difference to what they'd do for the real prompt alone.

There ain't no rule that says we can't change that blank "antiprompt" to something meaningful, though. For Stable Diffusion, others have shown what replacing it can do (to try it, see e.g. AUTOMATIC1111's UI).

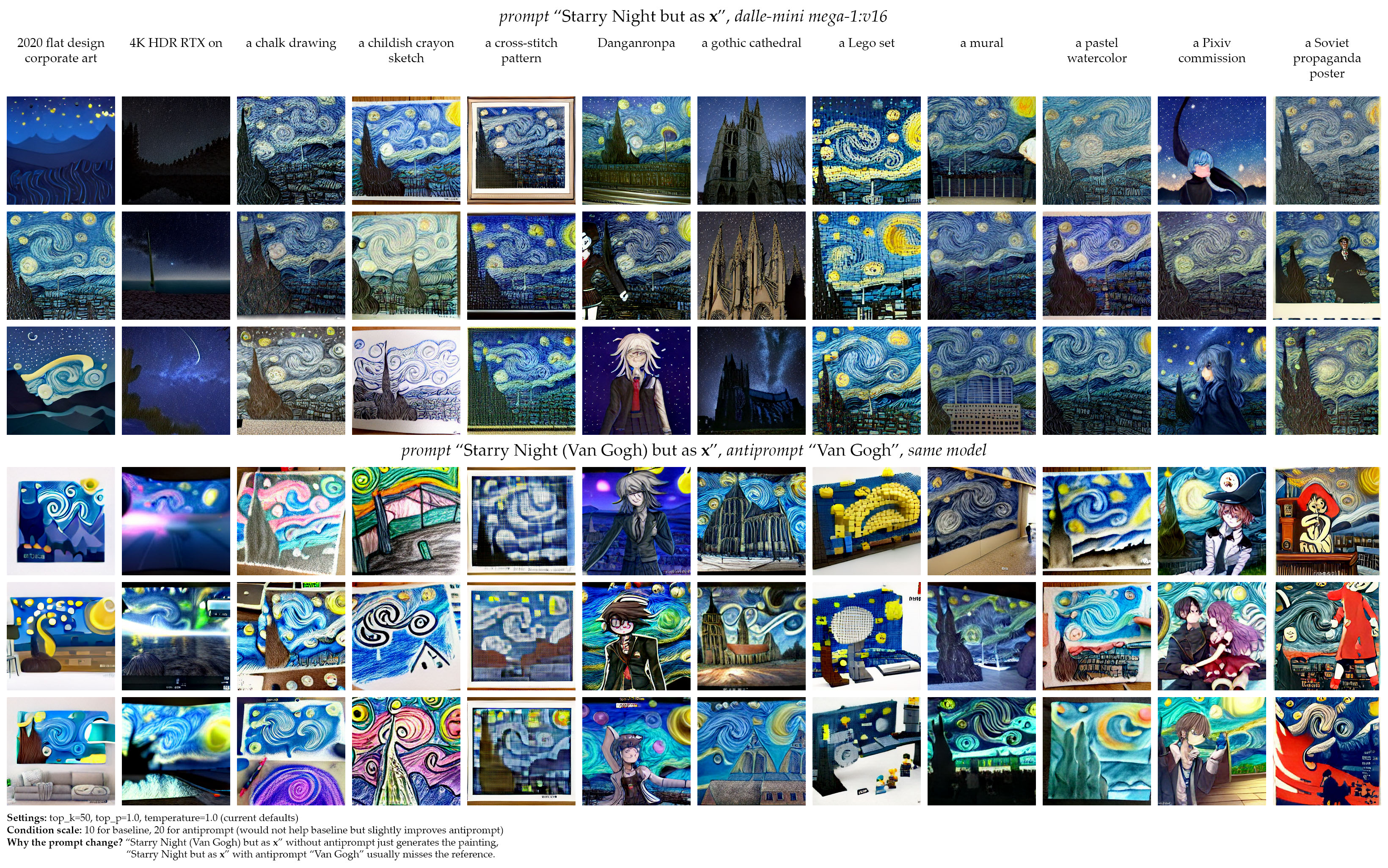

With Craiyon / DALL-E Mega, "antiprompts" can radically change the style - here's a 34 prompts X 104 antiprompts gallery3.

...and make variations of Starry Night, otherwise nigh-impossible as the model associates anything named "Starry Night" and sort of looks like it with the exact painting.

You can try this right now with a quick change to the reference notebook:

-

Under the 🖍 Text Prompt section, add an antiprompts list under the prompts list in matching order, in the same code cell:

prompts = [ "sunset over a lake in the mountains", "the Eiffel tower landing on the moon", ] antiprompts = [ "unreal engine", "mc escher visits paris", ]

To use the normal super conditioning prompt, just leave the entry in the antiprompts list empty (""). (Technically, that's not the super conditioning prompt. The code below skips empty entries, otherwise you might get different results.)

-

Change the next code cell to the following:

tokenized_prompts = processor(prompts) tokenized_antiprompts = processor(antiprompts) for i, ap in enumerate(antiprompts): if ap != "": tokenized_prompts['input_ids_uncond'][i] = tokenized_antiprompts['input_ids'][i] tokenized_prompts['attention_mask_uncond'][i] = tokenized_antiprompts['attention_mask'][i]

-

That's all the necessary changes! Run all the code cells before the "Optional" section, in order, by clicking the Play buttons on the left (or hitting Shift+Enter while the cell is focused). To try different prompts, just change the lists and run the cells starting from there again.

- Sometimes the Colab runs out of memory and crashes when loading the model. Just scroll to the top and run the cells again if that happens.

- The notebook is set to the half-precision version

mega-1-fp16(without Colab Pro or code changes, the fullmega-1likely wouldn't load). The fullmega-1(used in web apps and my examples) is slightly better but not as much as "half" makes it sound. (The official web app often seems better than running the model yourself because it generates more than 9 images and shows you the best ones.)

Diffusion models like DALL-E 2, Imagen or Stable Diffusion iteratively refine an image - technically by denoising, but if you look at their output step-by-step, you see they put broad shapes and colors down first, details towards the end.

Autoregressive models like DALL-E, Parti or Craiyon / DALL-E Mega are machine translation models whose output language is "image words" (VQGAN tokens) rather than French. Craiyon, specifically, writes from left to right, top to bottom (image words are not squares in the final image, but what's in the upper left won't affect the bottom right much).

Either way, say you're building up "a movie frame with black bars". The rough shape of such images has a lot of black on the outside, but not in the center. The same goes for "a bright logo on a black background". You'd take similar first steps drawing either picture - so without a prompt, either direction would be reasonable to continue with - but the logo is much more likely to have a black frame on all sides, and much less likely to be highly detailed anywhere.

And that's the knowledge CFG / super conditioning lets us use. With antiprompts, we guide generation away from concepts, especially stylistic influences, our request could be confused with.

Keep in mind the model doesn't evaluate the two prompts together. Results still match its understanding of the original prompt, given the image state. "An apple" produces photos of reddish apples. The antiprompt "red" turns them green. The antiprompt "fruit"? Vector illustrations of apples, as those aren't what comes to mind for "fruit", but the prompt doesn't leave room for totally different meanings.

Footnotes

-

For diffusion models like Stable Diffusion; Ho et al., 2022 ↩

-

For autoregressive models like Craiyon; Katherine Crowson (@RiversHaveWings), 2022 ↩

-

If there's interest in the full data (images as VQGAN sequences, including ~20 more prompts that weren't as interesting, plus CLIP scores), bug me and I'll upload it. ↩