Support Vector Machine (SVM) is a classifier formally defined by a separating hyperplane. In other words, given a previously labeled dataset (supervised learning), the algorithm outputs an optimal hyperplane that categorizes new examples.

Consider the following problem:

For a set of 2D points belonging to two distinct classes, find a straight line that separates the points according to their class.

In the picture above, you can see that several lines solve the problem. Is any of them better than the others? We can intuitively define a criterion to estimate the worth of each line:

A line is bad if it passes too close to the points, because it will be sensitive to noise and will not generalize well. Therefore, our goal should be to find the line that passes as far as possible from all points.

The SVM algorithm therefore works by finding the hyperplane that gives the largest minimum distance to the training examples. Twice this distance is called the margin in SVM theory, so the optimal separating hyperplane is the one that maximizes the margin of the training data.
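To make the criterion concrete, the sketch below measures, for a candidate line $w_1 x + w_2 y + b = 0$, the smallest Euclidean distance to a set of labeled points and reports twice that distance, the quantity the SVM maximizes. The toy points, the labels in $\{-1, +1\}$, and the helper names `min_distance` and `margin` are hypothetical choices for illustration; a line that misclassifies any point is rejected.

```python
import math

def min_distance(w, b, points):
    """Smallest distance from the line w[0]*x + w[1]*y + b = 0 to any point."""
    norm = math.hypot(w[0], w[1])
    return min(abs(w[0] * x + w[1] * y + b) / norm for x, y in points)

def margin(w, b, points, labels):
    """Twice the minimum distance, for a line that separates the two classes."""
    for (x, y), label in zip(points, labels):
        # A separating line puts every point on the side given by its label.
        if label * (w[0] * x + w[1] * y + b) <= 0:
            raise ValueError("the line does not separate the classes")
    return 2 * min_distance(w, b, points)

# Hypothetical toy data: two points per class.
points = [(2, 2), (3, 3), (0, 0), (-1, -1)]
labels = [1, 1, -1, -1]

# Two separating candidates: x + y - 2 = 0 and x + y - 1 = 0.
for b in (-2, -1):
    print(b, margin((1, 1), b, points, labels))
```

The line $x + y - 2 = 0$ passes midway between $(0,0)$ and $(2,2)$ and scores $2\sqrt{2}$, against $\sqrt{2}$ for $x + y - 1 = 0$, so by this criterion it is the better of the two.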

Note that in a two-dimensional space the goal of the SVM is to find a line, in a three-dimensional space the goal is to find a plane, and in spaces with more than three dimensions the goal is to find a hyperplane.

The elements of each class closest to the optimal "line" (highlighted in the previous image) are called support vectors. The SVM classifier uses them to compare their positions with the position of the element being classified. To make this possible, the SVM training algorithm relies on optimization techniques, one of which is Sequential Minimal Optimization (SMO).
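Once training has produced the multipliers, the classifier only needs the support vectors to score a new element. A minimal sketch, assuming the standard dual form $f(\mathbf{x}) = \sum_i \alpha_i y_i K(\mathbf{x}_i, \mathbf{x}) + b$ with a linear kernel (the plain dot product). The support vectors $(2,2)$ and $(0,0)$, the multipliers $\alpha_1 = \alpha_2 = 0.25$ and the bias $b = -1$ are hypothetical values for a toy problem; they can be checked by hand, since they give $\mathbf{w} = (0.5, 0.5)$ and place both support vectors exactly on $y_i f(\mathbf{x}_i) = 1$.

```python
def linear_kernel(u, v):
    """Plain dot product; other kernels can be swapped in."""
    return sum(a * b for a, b in zip(u, v))

def decision_function(x, support_vectors, labels, alphas, b, kernel=linear_kernel):
    """Signed score of x: sum of alpha_i * y_i * K(sv_i, x), plus the bias."""
    return sum(
        alpha * y * kernel(sv, x)
        for sv, y, alpha in zip(support_vectors, labels, alphas)
    ) + b

def predict(x, support_vectors, labels, alphas, b, kernel=linear_kernel):
    """Class +1 on the non-negative side of the hyperplane, -1 otherwise."""
    score = decision_function(x, support_vectors, labels, alphas, b, kernel)
    return 1 if score >= 0 else -1

# Hypothetical trained model for the toy problem.
support_vectors = [(2, 2), (0, 0)]
labels = [1, -1]
alphas = [0.25, 0.25]
b = -1.0

print(predict((3, 0), support_vectors, labels, alphas, b))  # score 0.5 -> 1
print(predict((0, 1), support_vectors, labels, alphas, b))  # score -0.5 -> -1
```

Only the support vectors enter the sum, which is why points far from the boundary do not affect the classification.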

The SVM algorithm, in both training and classification, can also handle sets of elements that are not linearly separable. To do so, it uses a kernel function in place of the dot products between the feature vectors of two elements that appear in its equations. If the problem is still not solved, slack variables are introduced, which make the separating line flexible (SEMOLINI, 2002).
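The role of the slack variables can be sketched with the standard soft-margin formulation (an assumption here, since the text does not spell it out): each training point gets $\xi_i = \max(0, 1 - y_i(\mathbf{w} \cdot \mathbf{x}_i + b))$, and training minimizes $\frac{1}{2}\|\mathbf{w}\|^2 + C \sum_i \xi_i$. The weights, bias, points and the value of $C$ below are hypothetical; the third point is deliberately placed on the wrong side of the line, so only it receives a positive slack.

```python
def slack_variables(w, b, points, labels):
    """xi_i = max(0, 1 - y_i * (w . x_i + b)) for each training point."""
    slacks = []
    for x, y in zip(points, labels):
        score = sum(wi * xi for wi, xi in zip(w, x)) + b
        slacks.append(max(0.0, 1 - y * score))
    return slacks

def soft_margin_objective(w, b, points, labels, C):
    """0.5 * ||w||^2 + C * sum of slacks (the primal soft-margin objective)."""
    reg = 0.5 * sum(wi * wi for wi in w)
    return reg + C * sum(slack_variables(w, b, points, labels))

# Hypothetical line x1 + x2 = 2, scaled so the margin points score exactly +/-1.
w, b = (0.5, 0.5), -1.0
points = [(2, 2), (0, 0), (1.5, 1.5)]
labels = [1, -1, -1]  # (1.5, 1.5) lies on the positive side: a violation

print(slack_variables(w, b, points, labels))               # [0.0, 0.0, 1.5]
print(soft_margin_objective(w, b, points, labels, C=1.0))  # 0.25 + 1.5 = 1.75
```

A larger `C` penalizes violations more heavily and pushes toward a stricter boundary; a smaller `C` tolerates more slack in exchange for a wider margin.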

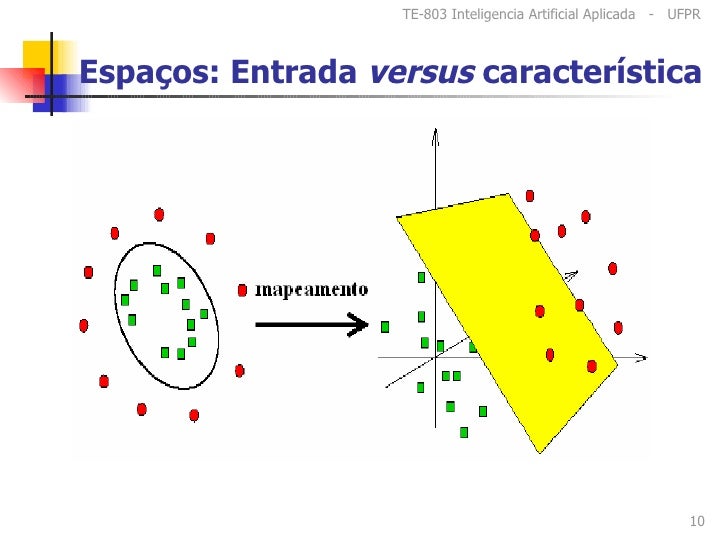

The purpose of a kernel function $K(x,x')$ is to map the elements into a new space with many more dimensions than the original one. In that space the elements may become linearly separable, since their positions differ from those in the original space, which makes it possible to define a hyperplane that separates elements of different classes (HAN and KAMBER, 2006). The mapping need not be computed explicitly: the kernel returns the inner product of the two mapped vectors directly from $x$ and $x'$.
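The sketch below checks this for the degree-2 polynomial kernel $K(\mathbf{x}, \mathbf{z}) = (\mathbf{x} \cdot \mathbf{z})^2$ in two dimensions, whose explicit map is $\phi(\mathbf{x}) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$. It then separates hypothetical points inside and outside the unit circle with a linear rule in the mapped space; in the original plane no straight line can split them, because the inner points lie within the convex hull of the outer ones. The weights $(1, 0, 1)$ and bias $-1$ are chosen by hand for illustration.

```python
import math

def phi(x):
    """Explicit degree-2 feature map from 2D to 3D."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, z):
    """Same value as dot(phi(x), phi(z)), computed in the original 2D space."""
    return dot(x, z) ** 2

# Hypothetical data: class -1 inside the unit circle, class +1 outside it.
inner = [(0.5, 0.0), (0.0, -0.5), (-0.3, 0.3)]
outer = [(2.0, 0.0), (0.0, 2.0), (-2.0, 0.0), (0.0, -2.0)]

# In the mapped space, x1^2 + x2^2 - 1 is a linear function of phi(x).
w, b = (1.0, 0.0, 1.0), -1.0
for p in inner + outer:
    label = 1 if dot(w, phi(p)) + b > 0 else -1
    print(p, label)

# Kernel value and explicit inner product agree (up to rounding).
print(poly2_kernel((1.0, 2.0), (3.0, -1.0)), dot(phi((1.0, 2.0)), phi((3.0, -1.0))))
```

The kernel costs one 2D dot product, while the explicit route builds two 3D vectors; for higher degrees or infinite-dimensional maps such as the RBF, only the kernel route stays feasible.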

The figure above illustrates the application of a kernel function to elements initially mapped in a space with

The three most common kernel functions used in SVMs are:

- Radial basis function (RBF)

- Polynomial function

- Perceptron
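The three kernels can be written as plain functions of two feature vectors. This sketch uses common textbook forms, which the list above does not fix: RBF $\exp(-\gamma \|\mathbf{x} - \mathbf{x'}\|^2)$, polynomial $(\mathbf{x} \cdot \mathbf{x'} + c)^d$, and, for the perceptron kernel, the sigmoid form $\tanh(\kappa\, \mathbf{x} \cdot \mathbf{x'} + c)$. The parameter names `gamma`, `c`, `d` and `kappa` and their defaults are assumptions for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def rbf_kernel(x, xp, gamma=0.5):
    """exp(-gamma * ||x - x'||^2); gamma plays the role of 1 / (2 * sigma^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, xp))
    return math.exp(-gamma * sq_dist)

def polynomial_kernel(x, xp, c=1.0, d=2):
    """(x . x' + c)^d."""
    return (dot(x, xp) + c) ** d

def perceptron_kernel(x, xp, kappa=1.0, c=0.0):
    """Sigmoid form tanh(kappa * x . x' + c), often called the perceptron kernel."""
    return math.tanh(kappa * dot(x, xp) + c)

x, xp = (1.0, 2.0), (3.0, 4.0)
print(rbf_kernel(x, xp), perceptron_kernel(x, xp))
print(polynomial_kernel(x, xp))  # (1*3 + 2*4 + 1)^2 = 144.0
```

Unlike the other two, the sigmoid form is not a valid (positive semidefinite) kernel for every choice of `kappa` and `c`, so its parameters need more care.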

Sequential minimal optimization (SMO) is an algorithm for solving the quadratic programming (QP) problem that arises during the training of support vector machines. It was invented by John Platt in 1998 at Microsoft Research.[1] SMO is widely used for training support vector machines and is implemented by the popular LIBSVM tool.[2][3] The publication of the SMO algorithm in 1998 generated a lot of excitement in the SVM community, as previously available methods for SVM training were much more complex and required expensive third-party QP solvers.

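Consider a binary classification problem with a dataset $(\mathbf{x}_1, y_1), \ldots, (\mathbf{x}_n, y_n)$, where $\mathbf{x}_i$ is an input vector and $y_i \in \{-1, +1\}$ is its binary label. A soft-margin support vector machine is trained by solving a quadratic programming problem, which in its dual form reads:

$\max_{\alpha} \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} y_i y_j K(\mathbf{x}_i, \mathbf{x}_j) \alpha_i \alpha_j$

subject to:

$0 \le \alpha_i \le C, \quad i = 1, \ldots, n, \qquad \sum_{i=1}^{n} y_i \alpha_i = 0$

where $C$ is an SVM hyperparameter and $K(\mathbf{x}_i, \mathbf{x}_j)$ is the kernel function, both supplied by the user, and the variables $\alpha_i$ are Lagrange multipliers.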

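SMO is an iterative algorithm for solving the optimization problem described above. SMO breaks this problem into a series of smallest possible sub-problems, which are then solved analytically. Because of the linear equality constraint involving the Lagrange multipliers $\alpha_i$, the smallest possible problem involves two such multipliers. For any two multipliers $\alpha_1$ and $\alpha_2$, the constraints reduce to:

$0 \le \alpha_1, \alpha_2 \le C, \qquad y_1 \alpha_1 + y_2 \alpha_2 = k,$

and this reduced problem can be solved analytically: one needs to find a minimum of a one-dimensional quadratic function. Here $k$ is the negative of the sum over the rest of the terms in the equality constraint, which is fixed in each iteration.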
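The analytic two-multiplier step can be sketched as follows, using the update usually given for SMO (as in Platt's paper): with prediction errors $E_i = f(\mathbf{x}_i) - y_i$ and $\eta = K_{11} + K_{22} - 2K_{12}$, the new $\alpha_2$ is $\alpha_2 + y_2 (E_1 - E_2) / \eta$, clipped to the segment $[L, H]$ allowed by $0 \le \alpha_1, \alpha_2 \le C$ and $y_1 \alpha_1 + y_2 \alpha_2 = k$; $\alpha_1$ then moves so that $k$ is unchanged. The function name `smo_pair_step` is hypothetical, and skipping the pair when $\eta \le 0$ (instead of evaluating the objective at the segment ends) is a simplification of this sketch.

```python
def smo_pair_step(a1, a2, y1, y2, E1, E2, K11, K22, K12, C):
    """Jointly optimize (alpha_1, alpha_2), keeping y1*a1 + y2*a2 fixed.

    Returns the new pair, or the old one if no progress is possible.
    """
    # Segment of the constraint line y1*a1 + y2*a2 = k inside the box [0, C]^2.
    if y1 != y2:
        L, H = max(0.0, a2 - a1), min(C, C + a2 - a1)
    else:
        L, H = max(0.0, a1 + a2 - C), min(C, a1 + a2)
    if L >= H:
        return a1, a2
    # Curvature of the one-dimensional quadratic along the constraint line.
    eta = K11 + K22 - 2 * K12
    if eta <= 0:
        return a1, a2  # simplification: skip non-positive curvature
    # Unconstrained optimum for alpha_2, then clip it to the feasible segment.
    a2_new = a2 + y2 * (E1 - E2) / eta
    a2_new = min(H, max(L, a2_new))
    # Move alpha_1 so that y1*a1 + y2*a2 stays equal to k.
    a1_new = a1 + y1 * y2 * (a2 - a2_new)
    return a1_new, a2_new

# Hypothetical pair: x1 = (2, 2), y1 = +1 and x2 = (0, 0), y2 = -1, linear kernel,
# all multipliers and the bias at 0, so f = 0, E1 = -1 and E2 = +1.
print(smo_pair_step(0.0, 0.0, 1, -1, -1.0, 1.0, 8.0, 0.0, 0.0, C=10.0))
```

For this pair one step gives $\alpha_1 = \alpha_2 = 0.25$, and $y_1 \alpha_1 + y_2 \alpha_2$ stays at 0. With a tighter box such as `C=0.1`, the clipping step caps both multipliers at 0.1 instead.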

The algorithm proceeds as follows:

1. Find a Lagrange multiplier $\alpha_1$ that violates the Karush–Kuhn–Tucker (KKT) conditions for the optimization problem.
2. Pick a second multiplier $\alpha_2$ and optimize the pair $(\alpha_1, \alpha_2)$.
3. Repeat steps 1 and 2 until convergence.

When all the Lagrange multipliers satisfy the KKT conditions (within a user-defined tolerance), the problem has been solved. Although this algorithm is guaranteed to converge, heuristics are used to choose the pair of multipliers so as to accelerate the rate of convergence.
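Step 1 of the procedure needs a test for KKT violations. A minimal sketch, assuming the usual conditions for the dual above: $\alpha_i = 0$ requires $y_i f(\mathbf{x}_i) \ge 1$, $0 < \alpha_i < C$ requires $y_i f(\mathbf{x}_i) = 1$, and $\alpha_i = C$ requires $y_i f(\mathbf{x}_i) \le 1$, each checked within the user-defined tolerance `tol` (its default of `1e-3` is an arbitrary choice here). The helper names are hypothetical, and the plain linear scan stands in for the faster selection heuristics mentioned above.

```python
def violates_kkt(alpha, y, f_x, C, tol=1e-3):
    """True if multiplier alpha for a point with label y and score f_x breaks KKT."""
    r = y * f_x - 1  # equals y * E, with prediction error E = f_x - y
    # r < 0: the point is inside the margin, so alpha should be at C.
    # r > 0: the point is outside the margin, so alpha should be 0.
    return (r < -tol and alpha < C) or (r > tol and alpha > 0)

def first_violator(alphas, labels, scores, C, tol=1e-3):
    """Index of the first multiplier that violates KKT, or None if all satisfy it."""
    for i, (alpha, y, f_x) in enumerate(zip(alphas, labels, scores)):
        if violates_kkt(alpha, y, f_x, C, tol):
            return i
    return None

# Hypothetical states: all multipliers at 0 with f = 0 violates KKT immediately;
# the solved toy problem (scores +1 and -1 on the support vectors) does not.
print(first_violator([0.0, 0.0], [1, -1], [0.0, 0.0], C=10.0))
print(first_violator([0.25, 0.25, 0.0], [1, -1, 1], [1.0, -1.0, 2.0], C=10.0))
```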

In machine learning, the (Gaussian) radial basis function kernel, or RBF kernel, is a popular kernel function used in support vector machine classification.[1]

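The RBF kernel on two samples $\mathbf{x}$ and $\mathbf{x'}$, represented as feature vectors in some input space, is defined as $K(\mathbf{x}, \mathbf{x'}) = \exp\left(-\frac{\|\mathbf{x} - \mathbf{x'}\|^2}{2\sigma^2}\right)$, where $\|\mathbf{x} - \mathbf{x'}\|^2$ is the squared Euclidean distance between the two feature vectors and $\sigma$ is a free parameter.

Since the value of the RBF kernel decreases with distance and ranges between zero (in the limit) and one (when $\mathbf{x} = \mathbf{x'}$), it has a ready interpretation as a similarity measure. The feature space of the kernel has an infinite number of dimensions; for $\sigma = 1$, the kernel expands as: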

$\exp\left(-\frac{1}{2}\|\mathbf{x} - \mathbf{x'}\|_2^2\right) = \sum_{j=0}^\infty \frac{(\mathbf{x}^\top \mathbf{x'})^j}{j!} \exp\left(-\frac{1}{2}\|\mathbf{x}\|_2^2\right) \exp\left(-\frac{1}{2}\|\mathbf{x'}\|_2^2\right)$
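The expansion can be checked numerically by truncating the series. A minimal sketch, assuming $\sigma = 1$ as in the equation; the sample vectors and the number of retained terms (`terms=30`) are arbitrary choices, and the truncated sum converges quickly here because $\mathbf{x}^\top \mathbf{x'}$ is small. Each term $(\mathbf{x}^\top \mathbf{x'})^j / j!$ is a scaled degree-$j$ polynomial kernel, so every degree of monomial feature contributes, which is why the feature space is infinite-dimensional.

```python
import math

def rbf_kernel(x, xp, sigma=1.0):
    """exp(-||x - x'||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, xp))
    return math.exp(-sq_dist / (2 * sigma ** 2))

def rbf_series(x, xp, terms=30):
    """Right-hand side of the expansion for sigma = 1, truncated after `terms` terms."""
    inner = sum(a * b for a, b in zip(x, xp))
    series = sum(inner ** j / math.factorial(j) for j in range(terms))
    norm_x = sum(a * a for a in x)
    norm_xp = sum(b * b for b in xp)
    return series * math.exp(-0.5 * norm_x) * math.exp(-0.5 * norm_xp)

x, xp = (1.0, 0.5), (0.2, -0.3)
print(rbf_kernel(x, xp), rbf_series(x, xp))
print(rbf_kernel(x, x))  # 1.0: identical samples give the maximum similarity
```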
