    kind: Service
    apiVersion: v1
    metadata:
      name: nfs-server
    spec:
      ports:
        - name: nfs
          port: 2049
        - name: mountd
          port: 20048
        - name: rpcbind
          port: 111
      clusterIP: None
      selector:
        app: nfs-server
    ---

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nfs-server
    spec:
      serviceName: "nfs-server"
      podManagementPolicy: "Parallel"
      replicas: 1
      selector:
        matchLabels:
          app: nfs-server
      template:
        metadata:
          labels:
            app: nfs-server
        spec:
          containers:
            - name: nfs-server
              image: k8s.gcr.io/volume-nfs:0.8
              ports:
                - name: nfs
                  containerPort: 2049
                - name: mountd
                  containerPort: 20048
                - name: rpcbind
                  containerPort: 111
              securityContext:
                privileged: true
              volumeMounts:
                - mountPath: /exports
                  name: provision-pvc
      volumeClaimTemplates: # a new gcloud persistent disk will be created automatically
        - metadata:
            name: provision-pvc
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 1Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs
    spec:
      capacity:
        storage: 1Mi
      accessModes:
        - ReadWriteMany
      nfs:
        server: nfs-server.default.svc.cluster.local
        path: "/"
    ---

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs
    spec:
      accessModes:
        - ReadWriteMany # possible because the backend is an NFS server and not a Compute Engine disk (which supports only read access by many)
      storageClassName: "" # binds only to PVs that don't define a storage class
      resources:
        requests:
          storage: 1Mi # this is not important, because the PV lookup is mainly concerned with the 'accessModes'

NOTE: PVs are cluster-scoped (not namespaced), but once a PV is bound to a PVC (which is namespaced), it is no longer usable by PVCs from other namespaces.

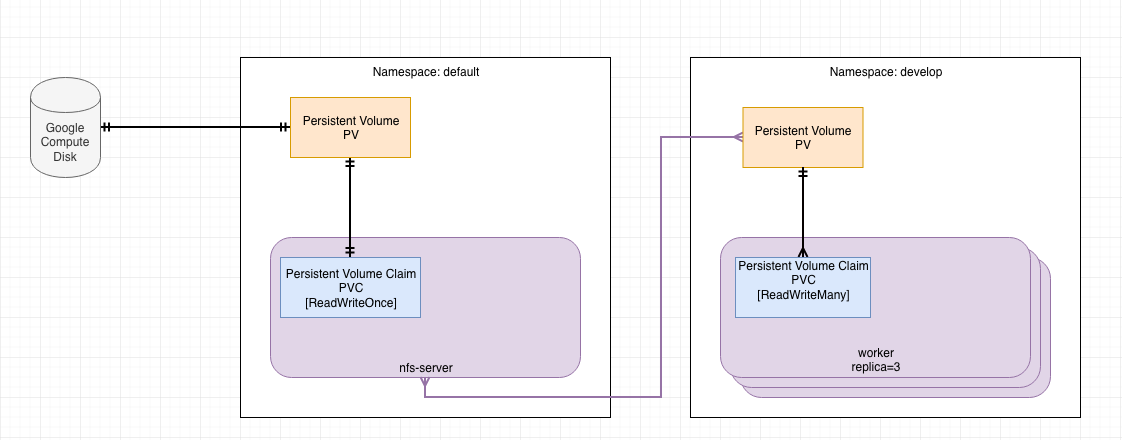

In the example below we see two global PVs (both pointing to the same NFS server) and two PVCs. One PVC is in the default namespace and the other is in the nfs-work namespace.

    $ kubectl get pv
    NAME          CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS   REASON   AGE
    nfs           1Mi        RWX            Retain           Bound    default/nfs                                    21m
    nfs-work-ns   1Mi        RWX            Retain           Bound    nfs-work/nfs-work-ns                           7m

    $ kubectl get pvc
    NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs    Bound    nfs      1Mi        RWX                           21m

    $ kubectl get pvc -n nfs-work
    NAME          STATUS   VOLUME        CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-work-ns   Bound    nfs-work-ns   1Mi        RWX                           7m
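
The manifests for the second PV/PVC pair are not shown, so the following is only a sketch of what they might look like, inferred from the listing. The names `nfs-work-ns` and `nfs-work` come from the output. The NFS server address and path are copied from the `nfs` PV, on the assumption that both PVs target the same export. The `volumeName` field is an addition of this sketch: it pins the claim to one specific PV instead of leaving the choice to the binder.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-work-ns            # PVs are cluster-scoped, so there is no namespace field
spec:
  capacity:
    storage: 1Mi
  accessModes:
    - ReadWriteMany
  nfs:
    server: nfs-server.default.svc.cluster.local  # assumption: same server as the 'nfs' PV
    path: "/"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-work-ns
  namespace: nfs-work          # the namespace must exist before the claim is created
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""         # bind only to PVs without a storage class
  volumeName: nfs-work-ns      # assumption: pin the claim to the PV defined above
  resources:
    requests:
      storage: 1Mi
```

Because a bound PV serves exactly one claim, each namespace that needs the share gets its own PV object, even though every one of them points at the same NFS server.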

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-worker
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nfs-worker
      template:
        metadata:
          labels:
            app: nfs-worker
        spec:
          containers:
            - image: busybox
              command:
                - sh
                - -c
                - 'while true; do date > /mnt/index-`hostname`.html; hostname >> /mnt/index-`hostname`.html; sleep $(($RANDOM % 5 + 5)); done'
              imagePullPolicy: IfNotPresent
              name: busybox
              volumeMounts:
                - name: nfs
                  mountPath: "/mnt"
          volumes:
            - name: nfs
              persistentVolumeClaim:
                claimName: nfs

After applying the above, you can list the contents of the NFS share by accessing one of the workers.

    $ kubectl get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    nfs-server-0                  1/1     Running   0          1m
    nfs-worker-5cc569cb87-lrmch   1/1     Running   0          1m
    nfs-worker-5cc569cb87-mwtzh   1/1     Running   0          1m

    $ kubectl exec -ti nfs-worker-5cc569cb87-lrmch -- ls /mnt/
    index-nfs-worker-5cc569cb87-lrmch.html
    index-nfs-worker-5cc569cb87-mwtzh.html
    index.html

You should see files generated from both workers.
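
If you would rather not exec into a worker, a throwaway Pod that mounts the same claim read-only is another way to check the share. This is a minimal sketch and not part of the original setup: the Pod name `nfs-inspect` and its command are hypothetical, while the claim name `nfs` and the `/mnt` mount path match the worker Deployment.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-inspect            # hypothetical name, used only for this check
spec:
  restartPolicy: Never         # run the command once and stop
  containers:
    - name: inspect
      image: busybox
      # List the share and print the files written by the workers
      command: ["sh", "-c", "ls -l /mnt && cat /mnt/index-*.html"]
      volumeMounts:
        - name: nfs
          mountPath: /mnt
          readOnly: true       # this Pod only reads from the share
  volumes:
    - name: nfs
      persistentVolumeClaim:
        claimName: nfs         # the same PVC the workers use
        readOnly: true
```

Once the Pod has completed, `kubectl logs nfs-inspect` shows what it found, and the Pod can then be deleted.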

When multiple NFS servers are running, you will probably want an app to target a specific one, e.g.:

media-app > media-nfs-server

That's doable by defining labels on the PV and matching them on a PVC.

    # PV
    metadata:
      name: media-pv
      labels:
        storage: media

    # PVC - media-pvc
    spec:
      selector:
        matchLabels:
          storage: media

And then on the PodSpec:

    spec:
      template:
        spec:
          containers:
            - ...
              volumeMounts:
                - name: nfs
                  mountPath: "/mnt"
          volumes:
            - name: nfs
              persistentVolumeClaim:
                claimName: media-pvc
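
Put together, the labelled PV and the selecting PVC might look like the sketch below. The names `media-pv`, `media-pvc` and the `storage: media` label come from the fragments above. The server address `media-nfs-server.default.svc.cluster.local` is an assumption (a headless Service named `media-nfs-server` in the default namespace, set up like `nfs-server` earlier), and the capacity, access mode, and path simply mirror the first example.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: media-pv
  labels:
    storage: media             # label that the PVC selects on
spec:
  capacity:
    storage: 1Mi
  accessModes:
    - ReadWriteMany
  nfs:
    server: media-nfs-server.default.svc.cluster.local  # assumption: DNS name of the media NFS Service
    path: "/"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: media-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""         # bind only to PVs without a storage class
  selector:
    matchLabels:
      storage: media           # only PVs carrying this label are candidates
  resources:
    requests:
      storage: 1Mi
```

With the selector in place, the claim ignores any other available NFS-backed PV, so `media-app` always ends up on the media server.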