- SECTION - Javascript Paradigms, Design Patterns and Best Practices

- What are Javascripts Paradigms

- 1. Object Oriented Programming (OOP) Paradigms

- What is OOP?

- 1. Inheritance

- 2. Encapsulation

- 3. Polymorphism

- Functional Programming Design

- Higher-order functions (using first-class functions)

- Functional composition

- Recursion

- Pure functions

- Currying

- Functional Programming in the Wild

- Procedural Programming

- How does Design Patterns fit into this?

- Application Architecture

- Paradigms vs Design Patterns vs Application architecture

- Paradigm Chart - (including declartative, impearal ,mono

- Paradigms - Advantages of Object Oriented Programming

- Declarative vs Imperative

- What’s the Difference Between classical & Prototypal Inheritance? - When is prototypal inheritance an appropriate choice?

- Loss vs tight comping?

- Sington Design Pattern

- Observer Design Pattern

- Revealing Module Pattern.

- What are the pros and cons of functional programming vs object-oriented programming?

- When is classical inheritance an appropriate choice?

- When is prototypal inheritance an appropriate choice?

- What are two-way data binding and one-way data flow, and how are they different?

- What are the pros and cons of monolithic vs microservice architectures? - Good to hear:

- What is asynchronous programming, and why is it important in JavaScript?

- What is asynchronous programming, and why is it important in JavaScript?

- Right way to create objects in JavaScript

- Don’t you need a constructor function to specify object instantiation behavior and handle object initialization?

- Don’t you need constructor functions for privacy in JavaScript?

- Does

newmean that code is using classical inheritance? - Is There a Big Performance Difference Between Classical and Prototypal Inheritance?

- Is There a Big Difference in Memory Consumption Between Classical and Prototypal?

- The Native APIs use Constructors. Aren’t they More Idiomatic than Factories?

- Isn’t Classical Inheritance More Idiomatic than Prototypal Inheritance?

- Doesn’t the Choice Between Classical and Prototypal Inheritance Depend on the Use Case?

- ES6 Has the

classkeyword. Doesn’t that mean we should all be using it? - What’s the Difference Between Class & Prototypal Inheritance?

- Issues with Class Inheritance

- Is All Inheritance Bad?

- What does “favor object composition over (class) inheritance” mean?

- Composition over Inheritance

- Disadvantages of Inheritance

- What are two benefits of inheritance? (Subtyping & Subclassing)

- Explain How Inheritance Works.

- Procedural Code

- Explain Composition.

- What are the Three Different Kinds of Prototypal Inheritance? (Prototype delegation,Concatenative inheritance,Functional inheritance) - Concatenative inheritance: - Prototype delegation: - Functional inheritance:

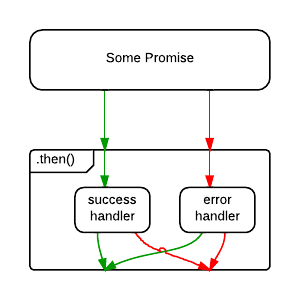

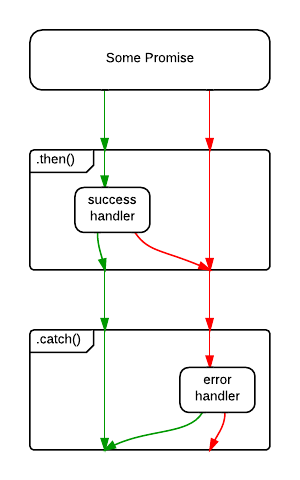

- How do you Promise Error Handle?

- What is Promise Chaining?

- How Do Promises Work?

- SECTION - Javascript

- What is deep binding and shallow binding?

- "this" keyword

- Get Min and max from array

- Check if two objects are the same.

- 1. What is the difference between undefined and not defined in JavaScript ?

- 2. What is the drawback of creating true private methods in JavaScript?

- 3. What is a “closure” in JavaScript, and what are some pitfalls of using them? Provide an example.

- 4. What the difference between constructor functions and function literal and when should you choice on over the other?

- 5. What is Event Delegation?

- 6. hat is Event Bubbling? And give an example.

- event.preventDefault() vs. return false

- What does encapsulation mean?

- What does Polymorphism mean?

- Explain the encapsulated anonymous function syntax.

- Target Vs eventTarget

- promise Vs callback

- Expressions V Declarations?

- Navigator vs window?

- var vs let vs const

- What are First Class Functions

- What are Function Composition

- Anonomus functions

- What is Associative Array? How do we use it?

- What is difference between private variable, public variable and static variable? How we achieve this in JS?

- How to add/remove properties to object in run time?

- How to achieve inheritance?

- What Lexical scope?

- How to extend built-in objects?

- Why extending array is bad idea?

- Difference between browser detection, feature detection and user agent string

- DOM Event Propagation

- Event Delegation

- Event bubbling V/s Event Capturing

- Graceful Degradation V/s Progressive Enhancement

- 1. What are the primitive and non-primitive data types, What is the difference between undefined and null.

- 2. Arrays - push,pop,shift,unshift,splice,delete,remove. The difference between delete and remove with array.

- 3. Lexical Scope vs dyamnic scope

- 4. What is self invoking anonymous function? IIFE

- 5. What is anonymous function?

- 6. Why do we need IIFE?

- 7. What is event bubbling?

- 8. Explain the phases of event handler in JS? or How the events are handled in JS?

- 9. What is an object literal?

- 10. Do we have classes in JS? Till ECMA5, there is no keyword called class to create classes, but you can use constructor

- 11. Explain "this" keyword

- Event delegation - Event Listeners to ul/li

- Write a function that will loop through a list of integers and print the index of each element after a 3 second delay.

- debouncing/Throttling

- find duplicate values in array

- Palindrome

- Dups in string

- string repeating letters

- Min/Max

- 12. By default "this" keyword refers to which object? - Window

- 13. What is strict mode?

- 14. Explain the term DOM?

- 15. How will make an AJAX call from JS?

- 16. Explain what is AJAX? Which object do you use to implement AJAX in JS?

- 17. Explain the use addEventListener method What is the significance of true or false value as third argument?

- 18. What is the purpose of "arguments" object in a function?

- 19. Why do we use call or apply method to call the function?

- 20. Difference between object created using object literal and using constructor

- 21. Explain prototype property of function

- 22. try catch finally - throw - error handling

- 23. Java script design patterns - http://addyosmani. com/resources/essentialjsdesignpatterns/book/#designpatternsjavascript

- 24. What is a namespace? How will you create a namespace in javascript?

- 25. How will you create a module in javascript?

- 26. What are Promises? [Async call Promise] - resolve state. Various states of promise.

- 27. Explain the difference between JSON and JSONP. - cross domain-

- 28. What is the cross domain reference issue? CORS Enabled WEB API.

- 29. What are recursive functions?

- 30. What are call-back functions? - calling a function as a parameter of other function.

- 31. How will you implement inheritance in Javascript? var child = Object.create(parent); Though there are many way

- 32. Explain the term hoisting.

- 33. Pub/Sub

- 34. Getter/Setter

- 35. Debounce/Throttle - https://sking7.github.io/articles/1248932487.html

- 36. Function Currying

- 37. Function Generators

- 37. Meaning of semantic tag, using display:none, visibility,

- 38. What is CSS box model?

- 39. localStorage API, practical way of implementation

- localStorage and sessionStorage

- Cookies

- localStorage vs. sessionStorage vs. Cookies

- Client-side vs. Server-side

- 40. What is difference between fluid and responsive layout?

- 41. Do you write anything in meta-tag while implementing responsive layout?

- 42. How do you make your site responsive? (what property of css you use to do so?)

- 43. HTML5 - what and why semantic tags

- 44. Various selectors like class,element,id,universal(*) etc. and syntax of applying classes in CSS

- 45. What are At-Rules (@Rules) in CSS? Name at least 2 with explanation other than @media

- 46. Pseudo classes and elements -definition,difference and practical example for both.

- 47. What is container collapse (issue or problem) in CSS

- 48. What is CSS reset?

- 49. What is clearfix?

- 50. Explain cross browser compatibility? Have you faced any problem implementing CSS with any browser, how did you fix it?

- 51. Do we need to have Jquery to use bootstrap? Explain the reason.

- 52. Create the same layout in bootstrap which is created responsive in previous assignment

- 53. Explain Bootstrap frame work and the grid system

- 55. What is the difference between padding and margin?

- 56. Explain CSS short-hands with example.

- 58. Explain padding:10 20 30 40, padding 10 20 30 and padding:10 20

- 59. Create 1 sample page (same page) with Fluid layout and Responsive layout

- 60. What's a test pyramid? How can you implement it when talking about HTTP APIs?

- 61. What is Dynamic typing

- 62. What is the difference between undefined and not defined in JavaScript ?

- 63. What is the drawback of creating true private methods in JavaScript?

- 64. What is a “closure” in JavaScript, and what are some pitfalls of using them? Provide an example.

- 65. Event Delegation

- 66. event.preventDefault() vs. return false

- 67. Describe event bubbling

- 68. What are Static Methods

- What is deep binding and shallow binding?

- "this" keyword

- SECTION - ReactJS / ES6 Iterview Questions

- Angular V React

- When to use store V State

- Add Third Party Libraries

- 2. JSX?

- 16. in Production (How do you tell React to build in Production mode and what will that do?)

- 18. Events (Describe how events are handled in React)

- how do parents communication with child

- what is High-Order Component

- 11. Refs

- 12. Keys (What are keys in React and why are they important?)

- 13. Controlled Vs Uncontrolled Components

- 19. createElement VS cloneElement?

- 17. React.Children.map Vs this,props.children.map

- State vs Props

- How to call a Child method from Parent

- How to call a Parent method from Child

- State Vs Stateless Components

- 10. Class Component VS Stateless (Functional) Components

- Dumb Components

- What are Smart components

- function component and class component

- 7. Stateless Components

- Element Vs Component

- 28. Reacts LYFECYCLE LIFECYCLE

- 20. setState (What happens when you call it? What is the second argument?,cad u call setState in render?)

- 23. MVC Vs Flux/Redux?

- 22. Redux - "single source of truth"

- 24. Redux - Actions

- 25. Redux - Action Creator

- 25. Redux - Reducers

- 26. Redux - Store

- 27. Redux - State Change

- Redux - Provider Component?

- Redux - connect?

- Redux - Setting up the folder structure?

- how they separate actions, reducers

- Setting up webpack, hot reloader, styles?

- Deploying?

- Random React/JSX specific errors?

- Nesting components?

- Redux boilerplate?

- what are the parts of React they don't like.

- where do they prefer to dispatch actions

- how they prefer to deal with asynchronous actions.

- how they prefer to split up components

- what do they think makes React a good tool

- SECTION - React Native Mobile

- 1. What is React Native?

- 2. Why open source?

- 3. How did Facebook write React Native for Android?

- 4. What’s the Challenges with React Native?

- 5. Advantages of React Native?

- 6. Handling Multiple Platforms?

- 7. React Native – Differences between Android and IOS?

- 8. Are all React components usable in React Native?

- 9. Passing functions between components in React and React Native?

- 10. Difference between React Native and NativeScript, which one do you prefer and why?

- 11. Are there any disadvantages to using React Native for mobile development?

- 12. What is the difference between using constructor vs getInitialState in React / React Native?

- 13. Re-Render on Changes?

- 14. what is a prop?

- 15. What is the difference between using constructor vs getInitialState in React / React Native?

- 16. What is the difference between React and React Native?

- 17. Is React Native a native Mobile App?

- 18. Can we use React Native code alongside with react native?

- 19. Do we use the same code base for Android and IOS?

- 20. Is React Native like other Hybrid Apps which are actually slower than Native mobile apps?

- SECTION - NodeJs

- What is the difference between localStorage, sessionStorage, session and cookies?

- Mention the steps by which you can async ?

- pros and cons

- How Node.js overcomes the problem of blocking of I/O operations?

- What is JSONP?

- operational Vs programmer errors?

- How does Node.js handle child threads?

- What is an error-first callback?

- How can you avoid callback hells?

- How can you listen on port 80 with Node?

- What's the event loop?

- What tools can be used to assure consistent style?

- What's the difference between operational and programmer errors?

- Why npm shrinkwrap is useful?

- What's a stub? Name a use case.

- What's a test pyramid? How can you implement it when talking about HTTP APIs?

- What's your favourite HTTP framework and why?

- How does Node.js handle child threads?

- What is the preferred method of resolving unhandled exceptions ?

- How does Node.js support multi-processor platforms, and does it fully utilize all processor resources?

- What is typically the first argument passed to a Node.js callback handler?

- What are Promises?

- When are background/worker processes useful? How can you handle worker tasks?

- How can you secure your HTTP cookies against XSS attacks?

- How can you make sure your dependencies are safe?

- What is Node.js?

- Why to use Node.js?

- Who developed Node.js?

- What are the features of Node.js?

- Explain REPL ?

- Explain variables ?

- What is the latest version of Node.js available?

- List out some REPL commands ?

- Mention the command to stop REPL ?

- Explain NPM ?

- Mention command to verify the NPM version ?

- How you can update NPM to new version ?

- Explain callback ?

- How Node.js can be made more scalable?

- Explain global installation of dependencies?

- Explain local installation of dependencies?

- Explain Package.JSON?

- Explain “Callback hell”?

- What are “Streams” in Node.JS?

- What you mean by chaining in Node.JS?

- Explain Child process module?

- Why to use exec method for Child process module?

- List out the parameters passed for Child process module?

- What is the use of method – “spawn()”?

- What is the use of method – “fork()”?

- Explain Piping Stream?

- What would be the limit for Piping Stream?

- Explain FS module ?

- Explain “Console” in Node.JS?

- Explain – “console.log(data)” statement in Node.JS?

- What you mean by “process”?

- Explain exit codes in Node.JS? List out some exit codes?

- List out the properties of process?

- Define OS module?

- What is the property of OS module?

- Explain “Path” module in Node.JS?

- Explain “Net” module in Node.JS?

- List out the differences between AngularJS and NodeJS?

- NodeJS is client side server side language?

- What are the advantages of NodeJS?

- In which scenarios NodeJS works well?

- What you mean by JSON?

- aScript Object Notation (JSON) is a practical, compound, widely popular data exchange format. This will enable

- Explain “Stub”?

- List out all Node.JS versions available?

- Explain “Buffer class” in Node.JS?

- How we can convert Buffer to JSON?

- How to concatenate buffers in NodeJS?

- How to compare buffers in NodeJS?

- How to copy buffers in NodeJS?

- What are the differences between “readUIntBE” and “writeIntBE” in Node.JS?

- Why to use

__filenamein Node.JS? - Why to use “SetTimeout” in Node.JS?

- Why to use “ClearTimeout” in Node.JS?

- Explain Web Server?

- List out the layers involved in Web App Architechure?

- Explain “Event Emitter” in Node.JS?

- Explain “NewListener” in Node.JS?

- Why to use Net.socket in Node.JS?

- Which events are emitted by Net.socket?

- Explain “DNS module” in Node.JS?

- Explain binding in domain module in Node.JS?

- Explain RESTful Web Service?

- How to truncate the file in Node.JS?

- How node.js works?

- What do you mean by the term I/O ?

- What does event-driven programming mean?

- Where can we use node.js?

- What is the advantage of using node.js?

- What are the two types of API functions?

- What is the biggest drawback of Node.js?

- What is control flow function?

- Explain the steps how “Control Flow” controls the functions calls?

- Why Node.js is single threaded?

- Does node run on windows?

- Can you access DOM in node?

- Using the event loop what are the tasks that should be done asynchronously?

- Why node.js is quickly gaining attention from JAVA programmers?

- What are the two arguments that async.queue takes?

- Event loop?

- Node.js vs Ajax?

- Node.js Challenges?

- "non-blocking" in node.js

- command to import external libraries?

- node.js Callbacks (and Advantages)

- What and Why Express Js?

- Express core features?

- How to install expressjs?

- Get variables in GET Method?

- Get POST a query in Express.js?

- output pretty html

- Get full url

- How to remove debugging from an Express app?

- Route - 404 errors?

- How to download a file?

- next() parameter

- config view engine

- app.use Vs app.get

- Logging

- CORS

- Explain Event Emitters in NodeJS

- SECTION - Javascript CI / Unit Testing

- SECTION - GraphQL

JavaScript is a multi-paradigm language that allows you to freely mix and match the 3 main types Javascript Paradigms, these include: Object Oriented Programming, Functional Programming and Procedural Programming

Object Oriented Programming (OOP) refers to using self-contained pieces of code to develop applications. We call these self-contained pieces of code objects, better known as Classes in most OOP programming languages and Functions in JavaScript.

Objects can be thought of as the main actors in an application, or simply the main “things” or building blocks that do all the work. As you know by now, objects are everywhere in JavaScript since every component in JavaScript is an Object, including Functions, Strings, and Numbers.

Object Oriented programming the code is divided up into classes (sometimes a language feature, sometimes not (e.g. javascript)), and typically supports inheritance and some type of polymorphism. The programmer creates the classes, and then instances of the classes (i.e. the objects) to carry out the operation of the program.

Through object literals (Encapsulation)

var myObj = {name: "Richard", profession: "Developer"};or constructor functions (Inheritance)

function Employee () {}

Employee.prototype.firstName = "Abhijit";

Employee.prototype.lastName = "Patel";

Employee.prototype.startDate = new Date();

Employee.prototype.signedNDA = true;

Employee.prototype.fullName = function () {

console.log (this.firstName + " " + this.lastName);

};

|

var abhijit = new Employee () //

console.log(abhijit.fullName()); // Abhijit Patel

console.log(abhijit.signedNDA); // trueto create objects in the OOP design pattern

The Three tenets of Object Oriented Programming (OOP):

Parasitic Combination Inheritance

Inheritance (objects can inherit features from other objects)

Inheritance refers to an object being able to inherit methods and properties from a parent object (a Class in other OOP languages, or a Function in JavaScript).

Lets say we have a quiz application to make different types of Questions. We will implement a MultipleChoiceQuestion function and a DragDropQuestion function. To implement these, it would not make sense to put the properties and methods outlined above (that all questions will use) inside the MultipleChoiceQuestion and DragDropQuestion functions separately, repeating the same code. This would be redundant.

Instead, we will leave those properties and methods (that all questions will use) inside the Question object and make the MultipleChoiceQuestion and DragDropQuestion functions inherit those methods and properties.

This is where inheritance is important: we can reuse code throughout our application effectively and better maintain our code.

An instance is an implementation of a Function. In simple terms, it is a copy (or “child”) of a Function or object. For example:

// Tree is a constructor function because we will use new keyword to invoke it.

function Tree (typeOfTree) {}

// bananaTree is an instance of Tree.

var bananaTree = new Tree ("banana");In the preceding example, bananaTree is an object that was created from the Tree constructor function. We say that the bananaTree object is an instance of the Tree object. Tree is both an object and a function, because functions are objects in JavaScript. bananaTree can have its own methods and properties and inherit methods and properties from the Tree object, as we will discuss in detail when we study inheritance below.

using the Parasitic Combination Inheritance Using the *Object.create() Method

if (typeof Object.create !== 'function') {

Object.create = function (o) {

function F() {

}

F.prototype = o;

return new F();

};

}Let’s quickly understand it is doing.

Object.create = function (o) {

//It creates a temporary constructor F()

function F() {

}

//And set the prototype of the this constructor to the parametric (passed-in) o object

//so that the F() constructor now inherits all the properties and methods of o

F.prototype = o;

//Then it returns a new, empty object (an instance of F())

//Note that this instance of F inherits from the passed-in (parametric object) o object.

//Or you can say it copied all of the o object's properties and methods

return new F();

}Encapsulation (each object is responsible for specific tasks).

Combination Constructor/Prototype Pattern

Encapsulation refers to enclosing all the functionalities of an object within that object so that the object’s internal workings (its methods and properties) are hidden from the rest of the application.

This allows us to abstract or localize specific set of functionalities on objects.

To implement encapsulation in JavaScript, we have to define the core methods and properties on that object. To do this, we will use the best pattern for encapsulation in JavaScript: the Combination Constructor/Prototype Pattern.

function User (theName, theEmail) {

this.name = theName;

this.email = theEmail;

this.quizScores = [];

this.currentScore = 0;

}

User.prototype = {

constructor: User,

saveScore:function (theScoreToAdd) {

this.quizScores.push(theScoreToAdd)

},

showNameAndScores:function () {

var scores = this.quizScores.length > 0 ? this.quizScores.join(",") : "No Scores Yet";

return this.name + " Scores: " + scores;

},

changeEmail:function (newEmail) {

this.email = newEmail;

return "New Email Saved: " + this.email;

}

} when you want to create objects with similar functionalities (to use the same methods and properties), you encapsulate the main functionalities in a Function and you use that Function’s constructor to create the objects. This is the essence of encapsulation. And it is this need for encapsulation that we are concerned with and why we are using the Combination Constructor/Prototype Pattern.

Polymorphism (objects can share the same interface—how they are accessed and used—while their underlying implementation of the interface may differ)

In JavaScript it is a bit more difficult to see the effects of polymorphism because the more classical types of polymorphism are more evident in statically-typed systems, whereas JavaScript has a dynamic type system.

Polymorphism foster many good attributes in software, among other things it fosters modularity and reusability and make the type system more flexible and malleable

This is not simple to answer, different languages have different ways to implement it. In the case of JavaScript, as mentioned above, you will see it materialize in the form of type hierarchies using prototypal inheritance and you can also exploit it using duck typing.

What is polymorphism in Javascript? - https://stackoverflow.com/questions/27642239/what-is-polymorphism-in-javascript

functional languages, the state changes on the computer are very heavily controlled by the language itself. Functions are first class objects, although not all languages where functions are first class objects are functional programming language (this topic is one of good debate). Code written with a functional languages involves lots of nested functions, almost every step of the program is new function invocation.

The Five tenets of Functional Programming:

you could simulate first-class functions using anonymous classes. Those first-class functions are what makes functional programming possible in JavaScript.

We already know that JavaScript has first-class functions that can be passed around just like any other value. So, it should come as no surprise that we can pass a function to another function. We can also return a function from a function.

You're probably already familiar with several higher order functions that exist on the Array.prototype. For example, filter, map, and reduce, among others.

const vehicles = [

{ make: 'Honda', model: 'CR-V', type: 'suv', price: 24045 },

{ make: 'Honda', model: 'Accord', type: 'sedan', price: 22455 },

{ make: 'Mazda', model: 'Mazda 6', type: 'sedan', price: 24195 },

{ make: 'Mazda', model: 'CX-9', type: 'suv', price: 31520 },

{ make: 'Toyota', model: '4Runner', type: 'suv', price: 34210 },

{ make: 'Toyota', model: 'Sequoia', type: 'suv', price: 45560 },

{ make: 'Toyota', model: 'Tacoma', type: 'truck', price: 24320 },

{ make: 'Ford', model: 'F-150', type: 'truck', price: 27110 },

{ make: 'Ford', model: 'Fusion', type: 'sedan', price: 22120 },

{ make: 'Ford', model: 'Explorer', type: 'suv', price: 31660 }

];

let Fords=vehicles.filter(x=>x.make==="Ford")

console.log(Fords) // [{'make':'Ford','model':'F-150','type':'truck','price':27110},{'make':'Ford','model':'Fusion','type':'sedan','price':22120},{'make':'Ford','model':'Explorer','type':'suv','price':31660}]"or

const averageSUVPrice = vehicles

.filter(v => v.type === 'suv')

.map(v => v.price)

.reduce((sum, price, i, array) => sum + price / array.length, 0);

console.log(averageSUVPrice); // 33399This just means your combining small function to make a large function

const vehicles = [

{ make: 'Honda', model: 'CR-V', type: 'suv', price: 24045 },

{ make: 'Honda', model: 'Accord', type: 'sedan', price: 22455 },

{ make: 'Mazda', model: 'Mazda 6', type: 'sedan', price: 24195 },

{ make: 'Mazda', model: 'CX-9', type: 'suv', price: 31520 },

{ make: 'Toyota', model: '4Runner', type: 'suv', price: 34210 },

{ make: 'Toyota', model: 'Sequoia', type: 'suv', price: 45560 },

{ make: 'Toyota', model: 'Tacoma', type: 'truck', price: 24320 },

{ make: 'Ford', model: 'F-150', type: 'truck', price: 27110 },

{ make: 'Ford', model: 'Fusion', type: 'sedan', price: 22120 },

{ make: 'Ford', model: 'Explorer', type: 'suv', price: 31660 }

];

const Makes=(arr,make)=>arr.filter(x=>x.make===make)

const CarType=(arr,type)=>arr.filter(x=>x.type===type)

let ArrayOfAllFords=Makes(vehicles,"Fords")

let ArrayOfAllFordTrucks=Makes(vehicles,"trucks")

//OUTPUTS

// ArrayOfAllFords is => [{ make: 'Ford', model: 'F-150', type: 'truck', price: 27110 }, { make: 'Ford', model: 'Fusion', type: 'sedan', price: 22120 }, { make: 'Ford', model: 'Explorer', type: 'suv', price: 31660 }]

//ArrayOfAllFordTrucks is => [{ make: 'Ford', model: 'F-150', type: 'truck', price: 27110 }]Easy to isolate, test, reuse.

Let's say that you would like to implement a function that computes the factorial of a number. Let's recall the definition of factorial from mathematics:

n! = n * (n-1) * (n-2) * ... * 1.

That is, n! is the product of all the integers from n down to 1. We can write a loop that computes that for us easily enough.

function iterativeFactorial(n) {

let product = 1;

for (let i = 1; i <= n; i++) {

product *= i;

}

return product;

}to iterate over something until its done

A pure function must satisfy both of the following properties:

Referential transparency: The function always gives the same return value for the same arguments. This means that the function cannot depend on any mutable state. Side-effect free: The function cannot cause any side effects. Side effects may include I/O (e.g., writing to the console or a log file), modifying a mutable object, reassigning a variable, etc.

Things that are not pure are things that use:

- console.log

- element.addEventListener

- Math.random

- Date.now

- Data from Network / API Calls

TLDR; same thing in same thing out every time.

It's stateless, with no side-effects

see the filter methods in the "Functional composition" and "Higher-order functions" examples.

Easy to isolate, test, reuse.

and

** Immutability** - Immutability means "unchange". So if you put the same thing in you should get the same thing out every time. like in the "Functional composition" and "Higher-order functions" examples.

Currying is when you break down a function that takes multiple arguments into a series of functions that take part of the arguments.

Here's an example in JavaScript:

function add (a, b) {

return a + b;

}

add(3, 4); returns 7

This is a function that takes two arguments, a and b, and returns their sum. We will now curry this function:

function add (a) {

return function (b) {

return a + b;

}

}

This is a function that takes one argument, a, and returns a function that takes another argument, b, and that function returns their sum.

add(3)(4);

var add3 = add(3);

add3(4);

The first statement returns 7, like the add(3, 4) statement. The second statement defines a new function called add3 that will add 3 to its argument. This is what some people may call a closure. The third statement uses the add3 operation to add 3 to 4, again producing 7 as a result.

Currying is often used to do partial application, but it's not the only way. This makes testing easier and makes it more modular.

## Functional Programming in the Wild Recently there has been a growing trend toward functional programming. In frameworks such as Angular and React, you'll actually get a performance boost by using immutable data structures.

Resources: https://stackoverflow.com/questions/1112773/what-are-the-core-concepts-in-functional-programming

procedural programming, C programs and bash scripting are good examples, you just say do step 1, do step 2, etc, without creating classes and whatnot.

A design pattern is a useful abstraction that can be implemented in any language. It is a "pattern" for doing things. Like if you have a bunch of steps you want to implement, you might use the 'composite' and 'command' patterns so make your implementation more generic.

Think of a pattern as an established template for solving a common coding task in a generic way.

Application Architecture, rakes into consideration how you build a system to do stuff.

So, for a web application, the architecture might involve x number of gateways behind a load balancer, that asynchronously feed queues. Messages are picked up by y processes running on z machines, with 1 primary db and a backup slave. Application architecture involves choosing the platform, languages, frameworks used. This is different than software architecture, which speaks more to how to actually implement the program given the software stack.

Paradigms are all-encompassing views of computation that affect not only what kinds of things you can do, but even what kinds of thoughts you can have; functional programming is an example of a programming paradigm.

Design Patterns are simply well-established programming tricks, codified in some semi-formal manner.

Application architecture is a broad term describing how complex applications are organised.

Javascript is a multi-paradigm programming language that supports imperative/and procedural programs Paradigms include:

-

OOP - http://javascriptissexy.com/oop-in-javascript-what-you-need-to-know/

-

Functional - https://opensource.com/article/17/6/functional-javascript

-

Imperial

-

procedural

| Paradigms | Declarative (Better) | Imperative |

|---|---|---|

| Description | Declarative Programing is "The What". A way of doing something without explicitly saying what it is your doing as way to make something multi-use. | Imperative Programing is "The How" Uses sequences of implicit statements. |

| Examples / Related | Function Programming higher order functions - a function you can pass into another function to make a big function (functions compassion) functions compassion combining functions into small reusable components to create a big functions Micro-services - Apps that are built from small self contained apps that run in their own memory space. Cons (in Addition to Declarative Cons): *Cross Cutting Concerns - (eg. Logging HTTP calls) *Dependent on outside resources Loose Coupling(good) - A System or Pattern where components are self contained. |

OOP Monolithic (bad) - Apps written in one cohesive unit that share memory space and resources. Pros (in Addition to Imperative Pros): * Faster - because it uses a shared memory space. * Not Dependent on outside resources Tight Couplings (bad) - A System or Pattern where components are self contained. |

| Pros | Because it uses small self contained pieces it's: * Avoids shared state * Scalable * Easily Recomposed * Easy To Test * Better Organized * Easy to isolate * Uses Immutable (Not Changing) State *Encourages Decoupling (keeping things separate) |

Because imperative programming uses implicit instructions it's: * Readability * Straight-forward -Easy to understand |

| Cons | * Poor(er) Readability - Everything can become abstract as it can be more theoretical or academic | * Usually dependent on shared state * Gets tightly coupled * Difficult to scale *Difficult to test * Classes are dependent on each other and one failed component will bring the whole system down. * Objects are tacked together (OOP) |

OOP* - uses objects with prototypal inhieratice, data is one big object/function

pros:

- uses imperative style cons:

- depends on shared shtate

Function Programming - uses functions and avoids shared state. A method of transforming data through an expression with limited side effects, usually uses seperate functions for clariry pros: uses functions and avoids shared state. uses immutable data structures, encourages decouping, aviods shared state

One of the principal advantages of object-oriented programming techniques over procedural programming techniques is that they enable programmers to create modules that do not need to be changed when a new type of object is added. A programmer can simply create a new object that inherits many of its features from existing objects. This makes object-oriented programs easier to modify.

Functional programming is a declarative paradigm, meaning that the program logic is expressed without explicitly describing the flow control. Declarative/functional programming? Functional programming uses functions and avoids shared state & mutable data.

Declarative programs abstract the flow control process, and instead spend lines of code describing the data flow: What to do. The how gets abstracted away. Declarative code relies more on expressions. An expression is a piece of code which evaluates to some value. Expressions are usually some combination of function calls, values, and operators which are evaluated to produce the resulting value.

Imperative code frequently utilizes statements. A statement is a piece of code which performs some action. Examples of commonly used statements include for, if, switch, throw, etc… Imperative programs spend lines of code describing the specific steps used to achieve the desired results — the flow control: How to do things.

For example, this imperative mapping takes an array of numbers and returns a new array with each number multiplied by 2:

Class Inheritance: A class is like a blueprint — a description of the object to be created. Classes inherit from classes and create subclass relationships: hierarchical class taxonomies.

Instances are typically instantiated via constructor functions with the new keyword.

Prototypal Inheritance: A prototype is a working object instance. Objects inherit directly from other objects.

Instances may be composed from many different source objects, allowing for easy selective inheritance and a flat [[Prototype]] delegation hierarchy. In other words, class taxonomies are not an automatic side-effect of prototypal OO: a critical distinction. Instances are typically instantiated via factory functions, object literals, or Object.create().

There is more than one type of prototypal inheritance:

-

Delegation (i.e., the prototype chain). -

Concatenative (i.e. mixins, `Object.assign()`). -

Functional (Not to be confused with functional programming. A function used to create a closure for private state/encapsulation).

Each type of prototypal inheritance has its own set of use-cases, but all of them are equally useful in their ability to enable composition, which creates has-a or uses-a or can-do relationships as opposed to the is-a relationship created with class inheritance.

tight comping - Js only works Loose Coupling means reducing dependencies of a class that use a different class directly. So if you test in a Dif browser it won’t work

Tight coupling, classes and objects are dependent on one another.

A Singleton only allows for a single instantiation, but many instances of the same object. The Singleton restricts clients from creating multiple objects, after the first object created, it will return instances of itself.

There are many times when one part of the application changes, other parts needs to be updated. In AngularJS, if the $scope object updates, an event can be triggered to notify another component. The observer pattern incorporates just that - if an object is modified it broadcasts to dependent objects that a change has occurred.

The purpose is to maintain encapsulation and reveal certain variables and methods returned in an object literal.

OOP Pros: It’s easy to understand the basic concept of objects and easy to interpret the meaning of method calls. OOP tends to use an imperative style rather than a declarative style, which reads like a straight-forward set of instructions for the computer to follow. OOP Cons: OOP Typically depends on shared state. Objects and behaviors are typically tacked together on the same entity, which may be accessed at random by any number of functions with non-deterministic order, which may lead to undesirable behavior such as race conditions. FP Pros: Using the functional paradigm, programmers avoid any shared state or side-effects, which eliminates bugs caused by multiple functions competing for the same resources. With features such as the availability of point-free style (aka tacit programming), functions tend to be radically simplified and easily recomposed for more generally reusable code compared to OOP. FP also tends to favor declarative and denotational styles, which do not spell out step-by-step instructions for operations, but instead concentrate on what to do, letting the underlying functions take care of the how. This leaves tremendous latitude for refactoring and performance optimization, even allowing you to replace entire algorithms with more efficient ones with very little code change. (e.g., memoize, or use lazy evaluation in place of eager evaluation.) Computation that makes use of pure functions is also easy to scale across multiple processors, or across distributed computing clusters without fear of threading resource conflicts, race conditions, etc… FP Cons: Over exploitation of FP features such as point-free style and large compositions can potentially reduce readability because the resulting code is often more abstractly specified, more terse, and less concrete. More people are familiar with OO and imperative programming than functional programming, so even common idioms in functional programming can be confusing to new team members. FP has a much steeper learning curve than OOP because the broad popularity of OOP has allowed the language and learning materials of OOP to become more conversational, whereas the language of FP tends to be much more academic and formal. FP concepts are frequently written about using idioms and notations from lambda calculus, algebras, and category theory, all of which requires a prior knowledge foundation in those domains to be understood.

The answer is never, or almost never. Certainly never more than one level. Multi-level class hierarchies are an anti-pattern. I’ve been issuing this challenge for years, and the only answers I’ve ever heard fall into one of several common misconceptions. More frequently, the challenge is met with silence. “If a feature is sometimes useful and sometimes dangerous and if there is a better option then always use the better option.” ~ Douglas Crockford

There is more than one type of prototypal inheritance:

Delegation (i.e., the prototype chain).

Concatenative (i.e. mixins, Object.assign()).

Functional (Not to be confused with functional programming. A function used to create a closure for private state/encapsulation).

Each type of prototypal inheritance has its own set of use-cases, but all of them are equally useful in their ability to enable composition, which creates has-a or uses-a or can-do relationships as opposed to the is-a relationship created with class inheritance.

Good to hear:

In situations where modules or functional programming don’t provide an obvious solution.

When you need to compose objects from multiple sources.

Any time you need inheritance.

Two way data binding means that UI fields are bound to model data dynamically such that when a UI field changes, the model data changes with it and vice-versa. One way data flow means that the model is the single source of truth. Changes in the UI trigger messages that signal user intent to the model (or “store” in React). Only the model has the access to change the app’s state. The effect is that data always flows in a single direction, which makes it easier to understand. One way data flows are deterministic, whereas two-way binding can cause side-effects which are harder to follow and understand. Good to hear: React is the new canonical example of one-way data flow, so mentions of React are a good signal. Cycle.js is another popular implementation of uni-directional data flow. Angular is a popular framework which uses two-way binding.

A monolithic architecture means that your app is written as one cohesive unit of code whose components are designed to work together, sharing the same memory space and resources. A microservice architecture means that your app is made up of lots of smaller, independent applications capable of running in their own memory space and scaling independently from each other across potentially many separate machines. Monolithic Pros: The major advantage of the monolithic architecture is that most apps typically have a large number of cross-cutting concerns, such as logging, rate limiting, and security features such audit trails and DOS protection. When everything is running through the same app, it’s easy to hook up components to those cross-cutting concerns. There can also be performance advantages, since shared-memory access is faster than inter-process communication (IPC). Monolithic cons: Monolithic app services tend to get tightly coupled and entangled as the application evolves, making it difficult to isolate services for purposes such as independent scaling or code maintainability. Monolithic architectures are also much harder to understand, because there may be dependencies, side-effects, and magic which are not obvious when you’re looking at a particular service or controller. Microservice pros: Microservice architectures are typically better organized, since each microservice has a very specific job, and is not concerned with the jobs of other components. Decoupled services are also easier to recompose and reconfigure to serve the purposes of different apps (for example, serving both the web clients and public API). They can also have performance advantages depending on how they’re organized because it’s possible to isolate hot services and scale them independent of the rest of the app. Microservice cons: As you’re building a new microservice architecture, you’re likely to discover lots of cross-cutting concerns that you did not anticipate at design time. A monolithic app could establish shared magic helpers or middleware to handle such cross-cutting concerns without much effort. In a microservice architecture, you’ll either need to incur the overhead of separate modules for each cross-cutting concern, or encapsulate cross-cutting concerns in another service layer that all traffic gets routed through. Eventually, even monolthic architectures tend to route traffic through an outer service layer for cross-cutting concerns, but with a monolithic architecture, it’s possible to delay the cost of that work until the project is much more mature. Microservices are frequently deployed on their own virtual machines or containers, causing a proliferation of VM wrangling work. These tasks are frequently automated with container fleet management tools.

Positive attitudes toward microservices, despite the higher initial cost vs monolthic apps. Aware that microservices tend to perform and scale better in the long run. Practical about microservices vs monolithic apps. Structure the app so that services are independent from each other at the code level, but easy to bundle together as a monolithic app in the beginning. Microservice overhead costs can be delayed until it becomes more practical to pay the prive

Synchronous programming means that, barring conditionals and function calls, code is executed sequentially from top-to-bottom, blocking on long-running tasks such as network requests and disk I/O. Asynchronous programming means that the engine runs in an event loop. When a blocking operation is needed, the request is started, and the code keeps running without blocking for the result. When the response is ready, an interrupt is fired, which causes an event handler to be run, where the control flow continues. In this way, a single program thread can handle many concurrent operations. User interfaces are asynchronous by nature, and spend most of their time waiting for user input to interrupt the event loop and trigger event handlers. Node is asynchronous by default, meaning that the server works in much the same way, waiting in a loop for a network request, and accepting more incoming requests while the first one is being handled. This is important in JavaScript, because it is a very natural fit for user interface code, and very beneficial to performance on the server. Good to hear: An understanding of what blocking means, and the performance implications. An understanding of event handling, and why its important for UI code.

here are several right ways to create objects in JavaScript. The first and most common is an object literal. It looks like this (in ES6):

// ES6 / ES2015, because 2015.

let mouse = {

furColor: 'brown',

legs: 4,

tail: 'long, skinny',

describe () {

return `A mouse with ${this.furColor} fur,

${this.legs} legs, and a ${this.tail} tail.`;

}

};Of course, object literals have been around a lot longer than ES6, but they lack the method shortcut seen above, and you have to use var instead of let. Oh, and that template string thing in the .describe() method won’t work in ES5, either.

You can attach delegate prototypes with Object.create() (an ES5 feature):

let animal = {

animalType: 'animal',

describe () {

return `An ${this.animalType}, with ${this.furColor} fur,

${this.legs} legs, and a ${this.tail} tail.`;

}

};

let mouse = Object.assign(Object.create(animal), {

animalType: 'mouse',

furColor: 'brown',

legs: 4,

tail: 'long, skinny'

});Let’s break this one down a little. animal is a delegate prototype. mouse is an instance. When you try to access a property on mouse that isn’t there, the JavaScript runtime will look for the property on animal (the delegate).

Object.assign() is a new ES6 feature championed by Rick Waldron that was previously implemented in a few dozen libraries. You might know it as $.extend() from jQuery or _.extend() from Underscore. Lodash has a version of it called assign(). You pass in a destination object, and as many source objects as you like, separated by commas. It will copy all of the enumerable own properties by assignment from the source objects to the destination objects with last in priority. If there are any property name conflicts, the version from the last object passed in wins.

Object.create() is an ES5 feature that was championed by Douglas Crockford so that we could attach delegate prototypes without using constructors and the new keyword.

I’m skipping the constructor function example because I can’t recommend them. I’ve seen them abused a lot, and I’ve seen them cause a lot of trouble. It’s worth noting that a lot of smart people disagree with me. Smart people will do whatever they want.

Wise people will take Douglas Crockford’s advice:

“If a feature is sometimes dangerous, and there is a better option, then always use the better option.”

Don’t you need a constructor function to specify object instantiation behavior and handle object initialization?

No. Any function can create and return objects. When it’s not a constructor function, it’s called a factory function. The Better Option

I usually don’t name my factories “factory” — that’s just for illustration. Normally I just would have called it mouse().

No. In JavaScript, any time you export a function, that function has access to the outer function’s variables. When you use them, the JS engine creates a closure. Closures are a common pattern in JavaScript, and they’re commonly used for data privacy. Closures are not unique to constructor functions. Any function can create a closure for data privacy:

let animal = {

animalType: 'animal',

describe () {

return `An ${this.animalType} with ${this.furColor} fur,

${this.legs} legs, and a ${this.tail} tail.`;

}

};

let mouseFactory = function mouseFactory () {

let secret = 'secret agent';

return Object.assign(Object.create(animal), {

animalType: 'mouse',

furColor: 'brown',

legs: 4,

tail: 'long, skinny',

profession () {

return secret;

}

});

};

let james = mouseFactory();No.

The new keyword is used to invoke a constructor. What it actually does is:

Create a new instance

Bind this to the new instance

Reference the new object’s delegate [[Prototype]] to the object referenced by the constructor function’s prototype property.

Reference the new object’s .constructor property to the constructor that was invoked.

Names the object type after the constructor, which you’ll notice mostly in the debugging console. You’ll see [Object Foo], for example, instead of [Object object].

Allows instanceof to check whether or not an object’s prototype reference is the same object referenced by the .prototype property of the constructor.

instanceof lies

Let’s pause here for a moment and reconsider the value of instanceof. You might change your mind about its usefulness.

Important: instanceof does not do type checking the way that you expect similar checks to do in strongly typed languages. Instead, it does an identity check on the prototype object, and it’s easily fooled. It won’t work across execution contexts, for instance (a common source of bugs, frustration, and unnecessary limitations). For reference, an example in the wild, from bacon.js.

It’s also easily tricked into false positives (and more commonly) false negatives from another source. Since it’s an identity check against a target object’s .prototype property, it can lead to strange things:

> function foo() {}

> var bar = { a: ‘a’};

> foo.prototype = bar; // Object {a: “a”}

> baz = Object.create(bar); // Object {a: “a”}

> baz instanceof foo // true. oops.That last result is completely in line with the JavaScript specification. Nothing is broken — it’s just that instanceof can’t make any guarantees about type safety. It’s easily tricked into reporting both false positives, and false negatives.

Besides that, trying to force your JS code to behave like strongly typed code can block your functions from being lifted to generics, which are much more reusable and useful.

instanceof limits the reusability of your code, and potentially introduces bugs into the programs that use your code.

new is weird

WAT? new also does some weird stuff to return values. If you try to return a primitive, it won’t work. If you return any other arbitrary object, that does work, but this gets thrown away, breaking all references to it (including .call() and .apply()), and breaking the link to the constructor’s .prototype reference.

No.

You may have heard of hidden classes, and think that constructors dramatically outperform objects instantiated with Object.create(). Those performance differences are dramatically overstated.

A small fraction of your application’s time is spent running JavaScript, and a miniscule fraction of that time is spent accessing properties on objects. In fact, the slowest laptops being produced today can access millions of properties per second.

That’s not your app’s bottleneck. Do yourself a favor and profile your app to discover your real performance bottlenecks. I’m sure there are a million things you should fix before you spend another moment thinking about micro-optimizations.

Not convinced? For a micro-optimization to have any appreciable impact on your app, you’d have to loop over the operation hundreds of thousands of times, and the only differences in micro-optimization you should ever be concerned about are the ones that are orders of magnitude apart.

Rule of thumb: Profile your app and eliminate as many loading, networking, file I/O, and rendering bottlenecks as you can find. Then and only then should you start to think about a micro-optimization.

Can you tell the difference between .0000000001 seconds and .000000001 seconds? Neither can I, but I sure can tell the difference between loading 10 small icons or loading one web font, instead!

If you do profile your app and find that object creation really is a bottleneck, the fastest way to do it is not by using new and classical OO. The fastest way is to use object literals. You can do so in-line with a loop and add objects to an object pool to avoid thrashing from the garbage collector. If it’s worth abandoning prototypal OO over perf, it’s worth ditching the prototype chain and inheritance altogether to crank out object literals.

But Google said class is fast…

WAT? Google is building a JavaScript engine. You are building an application. Obviously what they care about and what you care about should be very different things. Let Google handle the micro-optimizations. You worry about your app’s real bottlenecks. I promise, you’ll get a whole lot better ROI focusing on just about anything else.

No.

Both can use delegate prototypes to share methods between many object instances. Both can use or avoid wrapping a bunch of state into closures.

In fact, if you start with factory functions, it’s easier to switch to object pools so that you can manage memory more carefully and avoid being blocked periodically by the garbage collector. For more on why that’s awkward with constructors, see the WAT? note under “Does new mean that code is using classical inheritance?”

In other words, if you want the most flexibility for memory management, use factory functions instead of constructors and classical inheritance.

“…if you want the most flexibility for memory management,

use factory functions…”

Factories are extremely common in JavaScript. For instance, the most popular JavaScript library of all time, jQuery exposes a factory to users. John Resig has written about the choice to use a factory and prototype extension rather than a class. Basically, it boils down to the fact that he didn’t want callers to have to type new every time they made a selection. What would that have looked like?

/**

classy jQuery - an alternate reality where jQuery really sucked and never took off

OR

Why nobody would have liked jQuery if it had exported a class instead of a factory.

**/

// This just looks stupid. Are we creating a new DOM element

// with id="foo"? Nope. We're selecting an existing DOM element

// with id="foo", and wrapping it in a jQuery object instance.

var $foo = new $('#foo');

// Besides, it's a lot of extra typing with literally ZERO gain.

var $bar = new $('.bar');

var $baz = new $('.baz');

// And this is just... well. I don't know what.

var $bif = new $('.foo').on('click', function () {

var $this = new $(this);

$this.html('clicked!');

});What else exposes factories?

React React.createClass() is a factory.

Angular uses classes & factories, but wraps them all with a factory in the Dependency Injection container. All providers are sugar that use the .provider() factory. There’s even a .factory() provider, and even the .service() provider wraps normal constructors and exposes … you guessed it: A factory for DI consumers.

Ember Ember.Application.create(); is a factory that produces the app. Rather than creating constructors to call with new, the .extend() methods augment the app.

Node core services like http.createServer() and net.createServer() are factory functions.

Express is a factory that creates an express app.

As you can see, virtually all of the most popular libraries and frameworks for JavaScript make heavy use of factory functions. The only object instantiation pattern more common than factories in JS is the object literal.

JavaScript built-ins started out using constructors because Brendan Eich was told to make it look like Java. JavaScript continues to use constructors for self-consistency. It would be awkward to try to change everything to factories and deprecate constructors now.

No.

Every time I hear this misconception I am tempted to say, “do u even JavaScript?” and move on… but I’ll resist the urge and set the record straight, instead.

Don’t feel bad if this is your question, too. It’s not your fault. JavaScript Training Sucks!

The answer to this question is a big, gigantic

No… (but)

Prototypes are the idiomatic inheritance paradigm in JS, and class is the marauding invasive species.

A brief history of popular JavaScript libraries:

In the beginning, everybody wrote their own libs, and open sharing wasn’t a big thing. And then Prototype came along. (The name is a big hint here). Prototype did its magic by extending built-in delegate prototypes using concatenative inheritance.

Later we all realized that modifying built-in prototypes was an anti-pattern when native alternatives and conflicting libs broke the internet. But that’s a different story.

Next on the JS lib popularity roller coaster was jQuery. jQuery’s big claim to fame was jQuery plugins. They worked by extending jQuery’s delegate prototype using concatenative inheritance.

Are you starting to sense a pattern here?

jQuery remains the most popular JavaScript library ever made. By a HUGE margin. HUGE.

This is where things get muddled and class extension starts to sneak into the language… John Resig (author of jQuery) wrote about Simple Class Inheritance in JavaScript, and people started actually using it, even though John Resig himself didn’t think it belonged in jQuery (because prototypal OO did the same job better).

Semi-popular Java-esque frameworks like ExtJS appeared, ushering in the first kinda, sorta, not-really mainstream uses of class in JavaScript. This was 2007. JavaScript was 12 years old before a somewhat popular lib started exposing JS users to classical inheritance.

Three years later, Backbone exploded and had an .extend() method that mimicked class inheritance, including all its nastiest features such as brittle object hierarchies. That’s when all hell broke loose.

~100kloc app starts using Backbone. A few months in I’m debugging a 6-level hierarchy trying to find a bug. Stepped through every line of constructor code up the super chain. Found and fixed the bug in the top level base class. Then had to fix a lot of child classes because they depended on the buggy behavior of the base class. Hours of frustration that should have been a 5 minute fix.

This is not JavaScript. I was suddenly living in Java hell again. That lonely, dark, scary place where any quick movements could cause entire hierarchies to shudder and collapse in coalescing, tight-coupled convulsions.

These are the monsters rewrites are made of.

But, squirreled away in the Backbone docs, a ray of golden sunshine:

// A ray of sunshine in the belly of

// the beast...

var object = {};

_.extend(object, Backbone.Events);

object.on("alert", function(msg) {

alert("Triggered " + msg);

});

object.trigger("alert", "an event");Our old friend, concatenative inheritance saving the day with a Backbone.Events mixin.

It turns out, if you look at any non-trivial JavaScript library closely enough, you’re going to find examples of concatenation and delegation. It’s so common and automatic for JavaScript developers to do these things that they don’t even think of it as inheritance, even though it accomplishes the same goal.

Inheritance in JS is so easy

it confuses people who expect it to take effort.

To make it harder, we added class.

And how did we add class? We built it on top of prototypal inheritance using delegate prototypes and object concatenation, of course!

That’s like driving your Tesla Model S to a car dealership and trading it in for a rusted out 1983 Ford Pinto.

No.

Prototypal OO is simpler, more flexible, and a lot less error prone. I have been making this claim and challenging people to come up with a compelling class use case for many years. Hundreds of thousands of people have heard the call. The few answers I’ve received depended on one or more of the misconceptions addressed in this article.

I was once a classical inheritance fan. I bought into it completely. I built object hierarchies everywhere. I built visual OO Rapid Application Development tools to help software architects design object hierarchies and relationships that made sense. It took a visual tool to truly map and graph the object relationships in enterprise applications using classical inheritance taxonomies.

Soon after my transition from C++ and Java to JavaScript, I stopped doing all of that. Not because I was building less complex apps (the opposite is true), but because JavaScript was so much simpler, I had no more need for all that OO design tooling.

I used to do application design consulting and frequently recommend sweeping rewrites. Why? Because all object hierarchies are eventually wrong for new use cases.

I wasn’t alone. In those days, complete rewrites were very common for new software versions. Most of those rewrites were necessitated by legacy lock-in caused by arthritic, brittle class hierarchies. Entire books were written about OO design mistakes and how to avoid them or refactor away from them. It seemed like every developer had a copy of “Design Patterns” on their desk.

I recommend that you follow the Gang of Four’s advice on this point:

“Favor object composition over class inheritance.”

In Java, that was harder than class inheritance because you actually had to use classes to achieve it.

In JavaScript, we don’t have that excuse. It’s actually much easier in JavaScript to simply create the object that you need by assembling various prototypes together than it is to manage object hierarchies.

WAT? Seriously. Want the jQuery object that can turn any date input into a megaCalendarWidget? You don’t have toextend a class. JavaScript has dynamic object extension, and jQuery exposes its own prototype so you can just extend that — without an extend keyword! WAT?:

/*

How to extend the jQuery prototype:

So difficult.

Brain hurts.

ouch.

*/

jQuery.fn.megaCalendarWidget = megaCalendarWidget;

// omg I'm so glad that's over.The next time you call the jQuery factory, you’ll get an instance that can make your date inputs mega awesome.

Similarly, you can use Object.assign() to compose any number of objects together with last-in priority:

// I'm not sure Object.assign() is available (ES6)

// so this time I'll use Lodash. It's like Underscore,

// with 200% more awesome. You could also use

// jQuery.extend() or Underscore's _.extend()

var assign = require('lodash/object/assign');

var skydiving = require('skydiving');

var ninja = require('ninja');

var mouse = require('mouse');

var wingsuit = require('wingsuit');

// The amount of awesome in this next bit might be too much

// for seniors with heart conditions or young children.

var skydivingNinjaMouseWithWingsuit = assign({}, // create a new object

skydiving, ninja, mouse, wingsuit); // copy all the awesome to it.No, really — any number of objects:

import ninja from 'ninja'; // ES6 modules

import mouse from 'mouse';

let ninjamouse = Object.assign({}, mouse, ninja);This technique is called concatenative inheritance, and the prototypes you inherit from are sometimes referred to as exemplar prototypes, which differ from delegate prototypes in that you copy from them, rather than delegate to them.

No.

There are lots of compelling reasons to avoid the ES6 class keyword, not least of which because it’s an awkward fit for JavaScript.

We already have an amazingly powerful and expressive object system in JavaScript. The concept of class as it’s implemented in JS today is more restrictive (in a bad way, not in a cool type-correctness way), and obscures the very cool prototypal OO system that was built into the language a long time ago.

You know what would really be good for JavaScript? Better sugar and abstractions built on top of prototypes from the perspective of a programmer familiar with prototypal OO.

That could be really cool.

Class Inheritance: A class is like a blueprint — a description of the object to be created. Classes inherit from classes and create subclass relationships: hierarchical class taxonomies.

Instances are typically instantiated via constructor functions with the new keyword. Class inheritance may or may not use the class keyword from ES6. Classes as you may know them from languages like Java don’t technically exist in JavaScript. Constructor functions are used, instead. The ES6 class keyword desugars to a constructor function:

class Foo {}

typeof Foo // 'function'In JavaScript, class inheritance is implemented on top of prototypal inheritance, but that does not mean that it does the same thing:

JavaScript’s class inheritance uses the prototype chain to wire the child Constructor.prototype to the parent Constructor.prototype for delegation. Usually, the super() constructor is also called. Those steps form single-ancestor parent/child hierarchies and create the tightest coupling available in OO design.

“Classes inherit from classes and create subclass relationships: hierarchical class taxonomies.”

Prototypal Inheritance: A prototype is a working object instance. Objects inherit directly from other objects.

Instances may be composed from many different source objects, allowing for easy selective inheritance and a flat [[Prototype]] delegation hierarchy. In other words, class taxonomies are not an automatic side-effect of prototypal OO: a critical distinction.

Instances are typically instantiated via factory functions, object literals, or Object.create().

“A prototype is a working object instance. Objects inherit directly from other objects.”

Inheritance is fundamentally a code reuse mechanism: A way for different kinds of objects to share code. The way that you share code matters because if you get it wrong, it can create a lot of problems, specifically: Class inheritance creates parent/child object taxonomies as a side-effect. Those taxonomies are virtually impossible to get right for all new use cases, and widespread use of a base class leads to the fragile base class problem, which makes them difficult to fix when you get them wrong. In fact, class inheritance causes many well known problems in OO design: The tight coupling problem (class inheritance is the tightest coupling available in oo design), which leads to the next one… The fragile base class problem Inflexible hierarchy problem (eventually, all evolving hierarchies are wrong for new uses) The duplication by necessity problem (due to inflexible hierarchies, new use cases are often shoe-horned in by duplicating, rather than adapting existing code) The Gorilla/banana problem (What you wanted was a banana, but what you got was a gorilla holding the banana, and the entire jungle) I discuss some of the issues in more depth in my talk, “Classical Inheritance is Obsolete: How to Think in Prototypal OO”:

When people say “favor composition over inheritance” that is short for “favor composition over class inheritance” (the original quote from “Design Patterns” by the Gang of Four). This is common knowledge in OO design because class inheritance has many flaws and causes many problems. Often people leave off the word class when they talk about class inheritance, which makes it sound like all inheritance is bad — but it’s not. There are actually several different kinds of inheritance, and most of them are great.

This is a quote from “Design Patterns: Elements of Reusable Object-Oriented Software”. It means that code reuse should be achieved by assembling smaller units of functionality into new objects instead of inheriting from classes and creating object taxonomies. In other words, use can-do, has-a, or uses-a relationships instead of is-a relationships. Good to hear: Avoid class hierarchies. Avoid brittle base class problem. Avoid tight coupling. Avoid rigid taxonomy (forced is-a relationships that are eventually wrong for new use cases). Avoid the gorilla banana problem (“what you wanted was a banana, what you got was a gorilla holding the banana, and the entire jungle”). Make code more flexible.

It means assemble code in small reusable parts through functional programming instead of through inheritance Prefer composition over inheritance as it is more malleable / easy to modify later, but do not use a compose-always approach. With composition, it's easy to change behavior on the fly with Dependency Injection / Setters. Inheritance is more rigid as most languages do not allow you to derive from more than one type. So the goose is more or less cooked once you derive from TypeA.

Now say you want to create a Manager type so you end up with:

class Manager : Person, Employee {

...

}

This example will work fine, however, what if Person and Employee both declared `Title`? Should Manager.Title return "Manager of Operations" or "Mr."? Under composition this ambiguity is better handled:

```jsx

Class Manager {

public Title;

public Manager(Person p, Employee e)

{

this.Title = e.Title;

}

}The Manager object is composed as an Employee and a Person. The Title behaviour is taken from employee. This explicit composition removes ambiguity among other things and you'll encounter fewer bugs.

| Think of containment (composition) as a has a relationship. A car "has an" engine, a person "has a" name, etc.Think of inheritance as an is a relationship. A car "is a" vehicle, a person "is a" mammal, etc.

Think of inheritance as an is a relationship. A car "is a" vehicle, a person "is a" mammal, etc.

With all the undeniable benefits provided by inheritance, here's some of its disadvantages.

- You can't change the implementation inherited from super classes at runtime (obviously because inheritance is defined at compile time).

- Inheritance exposes a subclass to details of its parent's class implementation, that's why it's often said that inheritance breaks encapsulation (in a sense that you really need to focus on interfaces only not implementation, so reusing by sub classing is not always preferred).

- The tight coupling provided by inheritance makes the implementation of a subclass very bound up with the implementation of a super class that any change in the parent implementation will force the sub class to change.

- Excessive reusing by sub-classing can make the inheritance stack very deep and very confusing too.

On the other hand Object composition is defined at runtime through objects acquiring references to other objects. In such a case these objects will never be able to reach each-other's protected data (no encapsulation break) and will be forced to respect each other's interface. And in this case also, implementation dependencies will be a lot less than in case of inheritance.

-

Subtyping means conforming to a type (interface) signature, i.e. a set of APIs, and one can override part of the signature to achieve subtyping polymorphism.

-

Subclassing means implicit reuse of method implementations.

With the two benefits comes two different purposes for doing inheritance: subtyping oriented and code reuse oriented.

This encourages the use of classes. Inheritance is one of the three tenets of OO design (inheritance, polymorphism, encapsulation).

class Person {

String Title;

String Name;

Int Age

}

class Employee : Person {

Int Salary;

String Title;

}This is inheritance at work. The Employee "is a" Person or inherits from Person. All inheritance relationships are "is-a" relationships. Employee also shadows the Title property from Person, meaning Employee.Title will return the Title for the Employee not the Person.

An example of this is PHP without the use of classes (particularly before PHP5). All logic is encoded in a set of functions. You may include other files containing helper functions and so on and conduct your business logic by passing data around in functions. This can be very hard to manage as the application grows. PHP5 tries to remedy this by offering more object oriented design.

Composition is typically "has a" or "uses a" relationship. Here the Employee class has a Person. It does not inherit from Person but instead gets the Person object passed to it, which is why it "has a" Person.

Composition is favoured over inheritance. To put it very simply you would have:

class Person {

String Title;

String Name;

Int Age;

public Person(String title, String name, String age) {

this.Title = title;

this.Name = name;

this.Age = age;

}

}

class Employee {

Int Salary;

private Person person;

public Employee(Person p, Int salary) {

this.person = p;

this.Salary = salary;

}

}

Person johnny = new Person ("Mr.", "John", 25);

Employee john = new Employee (johnny, 50000);What are the Three Different Kinds of Prototypal Inheritance? (Prototype delegation,Concatenative inheritance,Functional inheritance)

The process of inheriting features directly from one object to another by copying the source objects properties. In JavaScript, source prototypes are commonly referred to as mixins. Since ES6, this feature has a convenience utility in JavaScript called Object.assign(). Prior to ES6, this was commonly done with Underscore/Lodash’s .extend() jQuery’s $.extend(), and so on… The composition example above uses concatenative inheritance.