Cloud Computing is a general term used to describe a new class of network-based computing that takes place over the Internet.

- On-demand self-service: Users can provision servers and networks with little human intervention

- Ubiquitous network access: Any computing capabilities are available over the network. Many different devices are allowed access through standardized mechanisms

- Location-transparent resource pooling: Multiple users access the same cloud, whose pooled resources are assigned to consumers according to demand. Cloud services need to share resources between users and clients in order to reduce costs

- Rapid elasticity: Provisioning is rapid and scales out or in based on need

- Measured service with pay per use: One of the compelling business use cases for cloud computing is the ability to "pay as you go", where the consumer pays only for the resources that are actually used by their applications

- Public Cloud: Public cloud is an IT model where on-demand computing services and infrastructure are managed by a third-party provider and shared with multiple organizations using the public Internet

- Private Cloud: The cloud infrastructure is operated solely for an organization. It may be managed by the organization or a third party and may exist on premises or off premises

- Hybrid Cloud: The cloud infrastructure is a composition of two or more clouds (Public or Private) that remain unique entities but are bound together by standardized or proprietary technology that enables data and application portability

- Community Cloud: A cloud service shared between multiple organizations with a common goal or objective

- In the case of Infrastructure as a Service, the application, data, middleware and OS are maintained by the customer

- Cloudbursting: The process of off-loading tasks to the cloud during times when the most compute resources are needed

- Multi-tenant computing: multi-tenancy means that multiple customers of a cloud vendor are using the same computing resources. Despite the fact that they share resources, cloud customers aren't aware of each other, and their data is kept totally separate.

- Resource pooling: Pooling is a resource management term that refers to the grouping together of resources for the purpose of maximizing advantage and minimizing risk for the user

- Elasticity and virtualization
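Rapid elasticity and pay-per-use billing can be illustrated with a small sketch. The load figures, the per-instance capacity, and the price below are hypothetical numbers chosen for illustration only; real providers apply their own scaling policies and pricing rules.

```python
import math


def desired_instances(load_rps, capacity_per_instance_rps,
                      min_instances=1, max_instances=10):
    """Instances needed to serve the load, clamped to the allowed range."""
    needed = math.ceil(load_rps / capacity_per_instance_rps)
    return max(min_instances, min(max_instances, needed))


def pay_per_use_cost_cents(instance_hours, price_cents_per_instance_hour):
    """Cost when only the instance-hours actually consumed are billed."""
    return instance_hours * price_cents_per_instance_hour


# Hypothetical load (requests per second) for six consecutive hours.
hourly_load = [40, 120, 450, 900, 300, 60]

# Scale out and in every hour; each instance is assumed to handle 100 rps.
plan = [desired_instances(load, capacity_per_instance_rps=100)
        for load in hourly_load]
print(plan)                                   # [1, 2, 5, 9, 3, 1]

# Pay as you go: 21 instance-hours at an assumed 10 cents each.
print(pay_per_use_cost_cents(sum(plan), 10))  # 210
```

Provisioning for the peak for all six hours would instead consume 9 x 6 = 54 instance-hours, which is the gap that elasticity combined with measured service is meant to close.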

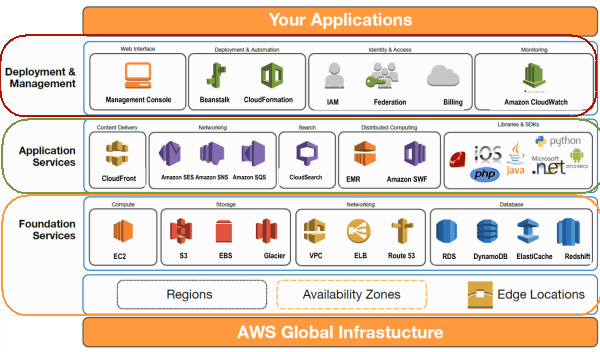

- There are many vendors who publicly provide infrastructure as a service, for example Amazon Elastic Compute Cloud (EC2)

- Amazon Elastic Compute Cloud (EC2) services can be leveraged via Web services (SOAP or REST), a Web-based AWS (Amazon Web Services) management console, or the EC2 command line tools

- The Amazon service provides hundreds of pre-made AMIs (Amazon Machine Images) with a variety of operating systems (e.g. Linux, OpenSolaris, or Windows) and pre-loaded software

- It provides you with complete control of your computing resources and lets you run on Amazon’s computing and infrastructure environment easily

- It also reduces the time required for obtaining and booting new server instances to minutes, thereby allowing capacity and resources to be scaled quickly, up and down, as the computing requirements change

- Amazon offers different instance sizes according to:

- Resource needs (large and extra large)

- High-CPU needs (medium and extra large high-CPU instances)

- High-memory needs (extra large, double extra large, and quadruple extra large instances)

A private cloud aims at providing public cloud functionality on private resources, while maintaining control over an organization's data and resources to meet its security and governance requirements. A private cloud is typically a highly virtualized cloud data center located inside your organization's firewall. It may also be a private space dedicated to your company within a cloud vendor's data center, designed to handle the organization's workloads; in this case it is called a Virtual Private Cloud (VPC). Private clouds exhibit the following characteristics:

- Allow service provisioning and compute capability for an organization’s users in a self service manner

- Automate and provide well managed virtualized environments

- Optimize the utilization of computing resources and servers

- Support specific workloads

Containers have emerged as a key open source application packaging and delivery technology, combining lightweight application isolation with the flexibility of image-based deployment methods

- LXC stands for Linux Containers

- LXC is a solution for virtualizing software at the operating system level within the Linux kernel

- Unlike traditional hypervisors (think VMware, KVM and Hyper-V), LXC lets you run single applications in virtual environments

- You can also virtualize an entire operating system inside an LXC container, if you’d like

LXC’s main advantages

- It is easy to control a virtual environment using user-space tools from the host OS

- It requires less overhead than a traditional hypervisor

- It increases the portability of individual apps by making it possible to distribute them inside containers

- LXD is a REST API that connects to liblxc, the LXC software library

- It is written in Go

- Creates a system daemon that apps can access locally using a Unix socket, or over the network via HTTP

Advantages

- A host can run many LXC containers using only a single system daemon, which simplifies management and reduces overhead. With pure-play LXC, you’d need separate processes for each container.

- The LXD daemon can take advantage of host-level security features to make containers more secure. On plain LXC, container security is more problematic.

- Because the LXD daemon handles networking and data storage, and users can control these things from the LXD CLI, it simplifies the process of sharing these resources with containers.

- LXD offers advanced features not available from LXC, including live container migration and the ability to snapshot a running container.

Red Hat Enterprise Linux 7 implements Linux Containers using core technologies such as:

- Control Groups (Cgroups) for Resource Management

- Namespaces for Process Isolation

- SELinux for Security, enabling secure multi-tenancy and reducing the potential for security exploits

- The kernel uses Cgroups to group processes for the purpose of system resource management

- Cgroups allocate CPU time, system memory, network bandwidth, or combinations of these among user-defined groups of tasks

- The kernel provides process isolation by creating separate namespaces for containers

- Namespaces enable creating an abstraction of a particular global system resource and make it appear as a separated instance to processes within a namespace

- Consequently, several containers can use the same resource simultaneously without creating a conflict
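The way Cgroups divide CPU time among user-defined groups can be sketched as a proportional split. This is a simplified model that assumes weight-based sharing (in the spirit of the `cpu.shares` values of cgroup v1) and that every group is competing for CPU at the same time; the group names and weights are hypothetical.

```python
def cpu_allocation(shares):
    """Split CPU time proportionally to each group's share weight."""
    total = sum(shares.values())
    return {group: weight / total for group, weight in shares.items()}


# Hypothetical groups of tasks and their relative weights.
groups = {"web": 1024, "batch": 512, "logging": 512}

print(cpu_allocation(groups))
# {'web': 0.5, 'batch': 0.25, 'logging': 0.25}
```

When a group is idle, the kernel lets the others use the spare CPU time, so these fractions are a floor under contention rather than a hard cap in this model.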

Types of Namespaces:

Mount namespaces isolate the set of file system mount points seen by a group of processes so that processes in different mount namespaces can have different views of the file system hierarchy. For example, each container can have its own /tmp or /var directory or even have an entirely different userspace.

UTS namespaces isolate two system identifiers – node-name and domain-name, returned by the uname() system call. This allows each container to have its own hostname and NIS domain name, which is useful for initialization and configuration scripts based on these names

IPC namespaces isolate certain inter-process communication (IPC) resources, such as System V IPC objects and POSIX message queues. This means that two containers can create shared memory segments and semaphores with the same name, but are not able to interact with other containers' memory segments or shared memory.

PID namespaces allow processes in different containers to have the same PID, so each container can have its own init (PID 1) process that manages various system initialization tasks as well as the container's life cycle.

Network namespaces provide isolation of network controllers, system resources associated with networking, firewall and routing tables. This allows each container to use a separate virtual network stack, loopback device and process space.
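On a Linux host, the namespaces a process belongs to are visible as symbolic links under `/proc/<pid>/ns`. The sketch below lists them for the current process; it assumes a Linux `/proc` file system and simply returns an empty result elsewhere. The function name is illustrative, not part of any library.

```python
import os

NAMESPACE_DIR = "/proc/self/ns"


def current_namespaces(ns_dir=NAMESPACE_DIR):
    """Return {namespace type: identifier} for the calling process.

    Each entry in the directory is a symlink whose target identifies the
    namespace instance. Two processes that show the same target for a
    given type share that namespace.
    """
    result = {}
    if not os.path.isdir(ns_dir):
        return result  # not Linux, or /proc is not mounted
    for name in sorted(os.listdir(ns_dir)):
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable in this environment
    return result


for ns_type, identifier in current_namespaces().items():
    print(ns_type, identifier)
```

Running the same snippet on the host and inside a container would be expected to print different identifiers for the namespace types the container runtime has isolated.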

Security-Enhanced Linux (SELinux) is a security architecture for Linux® systems that allows administrators to have more control over who can access the system

LVM - Layered file system

Docker is an open source platform for building, deploying, running and managing containerized applications

- Docker uses a client-server architecture.

- The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers.

- The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.

- Docker Compose is another client, that lets you work with applications consisting of a set of containers.

- It consists of four main parts:

- Docker Client: This is how you interact with your containers. Call it the user interface for Docker.

- Docker Objects: These are the main components of Docker:

- Containers: Containers are the placeholders for your software, and can be read and written to

- Images: Images are read-only, and used to create new containers.

- Docker Daemon: A background process responsible for receiving commands and passing them to the containers via command line.

- Docker Registry: Cloud or server based storage and distribution service for your images
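The client-server split described above can be made concrete by speaking HTTP to the daemon directly. The sketch below is not the Docker CLI or an official SDK; it is a minimal client that assumes the daemon listens on the default Unix socket `/var/run/docker.sock` and that the Engine API's `/version` endpoint is available. The class and function names are made up for this example.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that runs over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the daemon; return (status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()
```

With a running daemon and sufficient permissions on the socket, `docker_get("/version")` should return a status code and a dictionary describing the engine; if the socket is missing, the call raises an `OSError`.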

Docker Engine is an open source containerization technology for building and containerizing your applications. Docker Engine acts as a client-server application with:

- A server with a long-running daemon process dockerd.

- APIs which specify interfaces that programs can use to talk to and instruct the Docker daemon.

- A command line interface (CLI) client docker.

- Below is a sample Dockerfile for a basic Spring Boot application

```dockerfile
FROM openjdk:8-jdk-alpine
ARG JAR_FILE=target/*.jar
COPY ${JAR_FILE} app.jar
ENTRYPOINT ["java","-jar","/app.jar"]
```

- Another example, for Python

```dockerfile
# syntax=docker/dockerfile:1
FROM python:3.7-alpine
WORKDIR /code
ENV FLASK_APP=app.py
ENV FLASK_RUN_HOST=0.0.0.0
RUN apk add --no-cache gcc musl-dev linux-headers
COPY requirements.txt requirements.txt
RUN pip install -r requirements.txt
EXPOSE 5000
COPY . .
CMD ["flask", "run"]
```

- Compose is a tool for defining and running multi-container Docker applications.

- With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

Using Compose is basically a three-step process:

- Define your app's environment with a Dockerfile so it can be reproduced anywhere.

- Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment.

- Run docker compose up and the Docker compose command starts and runs your entire app. You can alternatively run docker-compose up using the docker-compose binary.

A docker-compose.yml looks like this:

```yaml
version: '3'
services:
  mongodb:
    image: mongo
    ports:
      - "27017:27017"
    environment:
      - MONGO_INITDB_ROOT_USERNAME=root
      - MONGO_INITDB_ROOT_PASSWORD=password
  mongo-express:
    image: mongo-express
    restart: always
    ports:
      - "8080:8081"
    environment:
      - ME_CONFIG_MONGODB_ADMINUSERNAME=root
      - ME_CONFIG_MONGODB_ADMINPASSWORD=password
      - ME_CONFIG_MONGODB_SERVER=mongodb
```

- Docker Hub is a service provided by Docker for finding and sharing container images with your team

| | Virtual Machine | Docker |
|---|---|---|
| Architecture | VMs have a host OS and a guest OS inside each VM | Docker containers are hosted on a single physical server and share its host OS |
| Security | Virtual machines are stand-alone, each with its own kernel and security features. Therefore, applications needing more privileges and security run on virtual machines | Containers have access to the kernel subsystems; as a result, a single infected application is capable of compromising the entire host system |
| Portability | VMs are isolated from their OS, so they cannot be ported across multiple platforms without incurring compatibility issues | Docker container packages are self-contained and can run applications in any environment; since they don't need a guest OS, they can be easily ported across different platforms |
| Performance | Virtual machines are more resource-intensive than Docker containers, as a virtual machine needs to load an entire OS to start. Slower to start | The lightweight architecture of Docker containers is less resource-intensive than virtual machines. Boots in a few seconds |

Kubernetes (K8s) is an open-source container-orchestration system.

- K8s has a Master-Slave architecture

- Master System

- The Control plane's components make global decisions about the cluster (for example, scheduling), as well as detecting and responding to cluster events

The API server is a component of the Kubernetes control plane that exposes the Kubernetes API. The API server is the front end for the Kubernetes control plane.

The main implementation of a Kubernetes API server is kube-apiserver. kube-apiserver is designed to scale horizontally—that is, it scales by deploying more instances. You can run several instances of kube-apiserver and balance traffic between those instances

- etcd: Consistent and highly available key-value store used as Kubernetes' backing store for all cluster data.

- More information can be found in etcd Documentation

- kube-scheduler: Control plane component that watches for newly created Pods with no assigned node, and selects a node for them to run on.

- Factors taken into account for scheduling decisions include: individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.

- kube-controller-manager: Control plane component that runs controller processes.

- Logically, each controller is a separate process, but to reduce complexity, they are all compiled into a single binary and run in a single process.

- Some types of these controllers are:

- Node controller: Responsible for noticing and responding when nodes go down.

- Job controller: Watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

- Endpoints controller: Populates the Endpoints object (that is, joins Services & Pods).

- Service Account & Token controllers: Create default accounts and API access tokens for new namespaces

- cloud-controller-manager: A Kubernetes control plane component that embeds cloud-specific control logic. The cloud controller manager lets you link your cluster into your cloud provider's API, and separates out the components that interact with that cloud platform from components that only interact with your cluster.

- The cloud-controller-manager only runs controllers that are specific to your cloud provider. If you are running Kubernetes on your own premises, or in a learning environment inside your own PC, the cluster does not have a cloud controller manager.

- As with the kube-controller-manager, the cloud-controller-manager combines several logically independent control loops into a single binary that you run as a single process. You can scale horizontally (run more than one copy) to improve performance or to help tolerate failures.

- The following controllers can have cloud provider dependencies:

- Node controller: For checking the cloud provider to determine if a node has been deleted in the cloud after it stops responding

- Route controller: For setting up routes in the underlying cloud infrastructure

- Service controller: For creating, updating and deleting cloud provider load balancers

- Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment

- kubelet: An agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

- The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn't manage containers which were not created by Kubernetes

- kube-proxy is a network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept.

- kube-proxy maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster.

- kube-proxy uses the operating system packet filtering layer if there is one and it's available. Otherwise, kube-proxy forwards the traffic itself

- More information can be found in kube-proxy Documentation

- The container runtime is the software that is responsible for running containers.

- Kubernetes supports several container runtimes: Docker, containerd, CRI-O, and any implementation of the Kubernetes CRI (Container Runtime Interface)
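The scheduling step described above (watch for Pods without a node, then select a node based on resource requirements) can be sketched as a filter-then-score routine. This is a toy model, not the kube-scheduler algorithm: it looks only at free CPU and memory, and the `Node`, `PodRequest`, and `schedule` names are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float     # cores still unallocated
    free_memory: int    # MiB still unallocated


@dataclass
class PodRequest:
    name: str
    cpu: float
    memory: int


def schedule(pod, nodes):
    """Return the name of the chosen node, or None if the pod stays pending."""
    # Filter: keep only nodes that can satisfy the pod's resource requests.
    feasible = [n for n in nodes
                if n.free_cpu >= pod.cpu and n.free_memory >= pod.memory]
    if not feasible:
        return None
    # Score: prefer the node with the most resources left after placement.
    best = max(feasible,
               key=lambda n: (n.free_cpu - pod.cpu, n.free_memory - pod.memory))
    # Bind: account for the resources the pod now occupies.
    best.free_cpu -= pod.cpu
    best.free_memory -= pod.memory
    return best.name


nodes = [Node("node-a", 2.0, 4096), Node("node-b", 4.0, 8192),
         Node("node-c", 0.5, 1024)]

print(schedule(PodRequest("web", cpu=1.0, memory=2048), nodes))    # node-b
print(schedule(PodRequest("huge", cpu=8.0, memory=1024), nodes))   # None
```

The real scheduler also weighs the other factors listed above, such as affinity rules, policy constraints, and data locality, which this sketch leaves out.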

- Instance PaaS

- Framework PaaS

- Gives the programmer a solution stack: Web server, database engine, scripting language

- Simple deployment, no worries about servers, storage, network, scaling, updates

- Guarantees multi-tenancy for better security

- Users isolated by virtualization or OS means

- Account and billing of used resources

- Different at every vendor

- Development tools

- IaaS is better for migrating existing applications: it is more flexible, since you install your own environment

- PaaS has lower demands on administration

- PaaS will take care of scaling if applications use the correct frameworks, as well as redundancy and CDN

- PaaS is better for new applications (partially true), but carries the danger of vendor lock-in if platform-specific functions are used

- AWS Elastic Beanstalk

- Heroku

- Force.com

- Windows Azure

- Google App Engine

- Apache Stratos

- OpenShift

- Software as a service is a distribution model in which applications are hosted by a vendor or service provider and made available to customers over a network

- In the SaaS model, software is deployed as a hosted service and accessed over the internet, as opposed to On-Premise

- Some examples of SaaS are:

- Netflix

- Salesforce.com

- Office 365

- Workday

- In the traditional model of software delivery, the customer acquires the license and assumes the responsibility of maintaining the software

- There is a high upfront cost associated with purchase of the license

- Return on investment is delayed considerably, due to rapid pace of technological changes

- The web as a platform is the center point.

- The services or the software are accessed via the internet

- Good Wifi connections

- PC costs are reducing but Software costs are increasing

- Time-consuming and expensive setup and maintenance

- Licensing issues for business are contributing significantly to the use of illegal software and piracy

- Scalable

- Multitenant efficient

- One application instance must be able to accommodate users from multiple other companies at the same time

- This requires an architecture that maximizes the sharing of resources across tenants

- Is still able to differentiate data belonging to different customers

- Configurable

- Because a single application instance serves every customer, customizing the application code for one customer would change the application for the other customers as well

- Instead, each customer uses metadata to configure the way the application appears and behaves for its users

- Configuring the application must be simple and easy for customers, without incurring extra development or operation cost

- Multi-tenancy is an architecture in which a single instance of a software application serves multiple customers. Each customer is called a tenant.

- Tenants may be given the ability to customize some parts of the application, such as colour, UI or business rules, but they cannot change the code of the application

- Single-tenant On-Premises

- Single-tenant Virtual Servers

- Single-tenant Data center (Mixed VM/Physical)

- Multi-tenant - Separate DB Server

- Multi-tenant - Schema per tenant

- Multi-tenant - Shared Everything
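As a rough illustration of the "shared everything" model, the sketch below keeps all tenants in the same tables of one database and separates their data with a `tenant_id` column, while per-tenant metadata drives the configurable parts of the application. The table layout, tenant names, and helper functions are hypothetical.

```python
import sqlite3

# One database instance shared by every tenant.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE tenant_config (
        tenant_id    TEXT PRIMARY KEY,
        theme_colour TEXT
    );
    CREATE TABLE invoices (
        id        INTEGER PRIMARY KEY,
        tenant_id TEXT NOT NULL,
        amount    INTEGER
    );
""")
conn.executemany("INSERT INTO tenant_config VALUES (?, ?)",
                 [("acme", "blue"), ("globex", "green")])
conn.executemany("INSERT INTO invoices (tenant_id, amount) VALUES (?, ?)",
                 [("acme", 100), ("acme", 250), ("globex", 75)])


def invoices_for(tenant_id):
    """Every query is scoped by tenant_id, which keeps tenants' data apart."""
    rows = conn.execute(
        "SELECT amount FROM invoices WHERE tenant_id = ? ORDER BY id",
        (tenant_id,))
    return [amount for (amount,) in rows]


def theme_for(tenant_id):
    """Per-tenant metadata configures appearance without code changes."""
    row = conn.execute(
        "SELECT theme_colour FROM tenant_config WHERE tenant_id = ?",
        (tenant_id,)).fetchone()
    return row[0] if row else "default"


print(invoices_for("acme"))     # [100, 250]
print(invoices_for("globex"))   # [75]
print(theme_for("globex"))      # green
```

The "schema per tenant" and "separate DB server" models trade some of this resource sharing for stronger isolation by moving the tenant boundary from a column to a schema or to a whole database server.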

- OpenNebula is a powerful, but easy to use, open source solution to build and manage Enterprise Clouds.

- It combines virtualization and container technologies with multi-tenancy, automatic provision and elasticity to offer on-demand applications and services on private, hybrid and edge environments.

- For Infrastructure Manager

- Centralized management of VM workload and distributed infrastructures

- Support for VM placement policies: balance of workload, server consolidation

- For Infrastructure User

| Feature | Function |
|---|---|
| Internal Interface | |
| Scheduler | |
| Virtualization Management | |
| Image Management | General mechanisms to transfer and clone VM images |
| Network Management | Definition of isolated virtual networks to interconnect VMs |
| Service Management and Contextualization | Support for multi-tier services consisting of groups of inter-connected VMs, and their auto-configuration at boot time |
| Security | Management of users by the infrastructure administrator |
| Fault Tolerance | Persistent database backend to store host and VM information |
| Scalability | Tested in the management of medium scale infrastructures with hundreds of servers and VMs |
| Flexibility and Extensibility | Open, flexible and extensible architecture, interfaces and components, allowing its integration with any product or tools |

Contains 3 main components:

- Tools
  - Scheduler
    - Searches for physical hosts to deploy newly defined VMs
  - CLI
    - Commands to manage OpenNebula
    - onevm, onehost, onevnet
- Core / Interface
  - Request Manager
    - Provides an XML-RPC interface to manage and get information about ONE (OpenNebula) entities
  - VM Manager
    - Takes care of the VM life cycle
  - Host Manager
    - Holds information about the hosts (Physical Machines)
  - VN Manager (Virtual Network)
    - This component is in charge of generating MAC and IP addresses
  - SQL Pool
    - Database that holds the state of ONE entities
- Drivers
  - Transfer Driver
  - VM Driver
  - Information Driver
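Since the Request Manager exposes an XML-RPC interface, a client request is just an XML document naming a method and its parameters. The sketch below builds such a request with Python's standard `xmlrpc.client` module without sending it. The method name `one.vm.info` and its `(session, vm_id)` parameter list are assumptions to be checked against the OpenNebula XML-RPC reference for your version, and the credentials are placeholders.

```python
import xmlrpc.client


def build_vm_info_request(session, vm_id):
    """Serialize an XML-RPC call asking for information about one VM.

    Assumed signature (verify against the OpenNebula documentation):
    one.vm.info(session: str, vm_id: int)
    """
    return xmlrpc.client.dumps((session, vm_id), methodname="one.vm.info")


payload = build_vm_info_request("oneadmin:password", 42)

# Decode the payload again to show what the Request Manager would receive.
params, method = xmlrpc.client.loads(payload)
print(method)   # one.vm.info
print(params)   # ('oneadmin:password', 42)
```

In practice a client would use `xmlrpc.client.ServerProxy` pointed at the front-end's XML-RPC endpoint rather than building payloads by hand; the CLI tools such as onevm talk to the same interface.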

- Provides highly reliable and scalable infrastructure for deploying web-scale solutions with minimal support and administration costs

- More flexibility than own infrastructure, either on-premise or at a datacenter facility

For more documentation on Amazon Web Services, please refer to the notes here

TODO

TODO

- The Oracle Cloud Infrastructure Load Balancing service distributes traffic from one entry point to multiple servers reachable from your virtual cloud network (VCN).

- A load balancer improves resource use, facilitates scaling, and helps to ensure high availability.

- The load balancer can reduce your maintenance window by draining traffic from an unhealthy application server before you remove it from service for maintenance.

- There are mainly two types of load balancers in OCI

- Application load balancer (layer 7)

- Network load balancer (layer 3 and 4)

- An Application load balancer distributes traffic based on multiple variables, from the network layer to the application layer

- An application load balancer provides context-aware load distribution: it can direct requests based on a single variable as easily as on a combination of variables.

- Applications are load balanced based on their behavior.

- An application load balancer works on layer 7, so it supports both HTTP and HTTPS. It can distribute HTTP and HTTPS traffic based on host-based or path-based rules.

- A Network load balancer distributes traffic based on IP address and destination ports.

- It handles only layer 4 (TCP) traffic; it’s not built to handle anything at layer 7, such as content type, cookie data, custom headers, user location, or application behavior.

- A network load balancer provides context-less load distribution. It handles only the network-layer information contained within the packets.

| Application load balancer | Network load balancer |
|---|---|
| Works on Layer 7 of the OSI model, i.e. the Application Layer | Works on Layer 4 of the OSI model, i.e. the Transport Layer |
| Performs content-based routing | Performs request forwarding |
| Ensures the availability of the application | Does not ensure the availability of the application |
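The difference between content-based routing and plain forwarding can be sketched in a few lines. This is a generic illustration, not OCI's implementation: the rule format, backend names, and hashing scheme are assumptions made for the example.

```python
import hashlib


def route_layer7(host, path, rules, default_backend):
    """Content-based routing: the first rule whose host and path prefix
    match the HTTP request decides the backend set."""
    for rule_host, path_prefix, backend in rules:
        if host == rule_host and path.startswith(path_prefix):
            return backend
    return default_backend


def route_layer4(src_ip, src_port, dst_ip, dst_port, backends):
    """Context-less forwarding: only addresses and ports are visible, so a
    hash of them picks the backend. The payload is never inspected."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:4], "big") % len(backends)]


# Hypothetical host-based and path-based rules.
rules = [
    ("shop.example.com", "/api/", "api-pool"),
    ("shop.example.com", "/static/", "static-pool"),
]
print(route_layer7("shop.example.com", "/api/orders", rules, "web-pool"))
# api-pool
print(route_layer7("shop.example.com", "/checkout", rules, "web-pool"))
# web-pool

# The same connection tuple always maps to the same backend.
servers = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
print(route_layer4("203.0.113.7", 50123, "198.51.100.1", 443, servers))
```

The layer 7 function needs the parsed HTTP request (host and path), which is why an application load balancer can apply host-based or path-based rules, while the layer 4 function works with nothing but the connection tuple.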

OpenStack is a collection of open source technologies delivering a massively scalable cloud operating system

OpenStack is a cloud operating system that controls large pools of compute, storage, and networking resources throughout a data-center, all managed and provisioned through APIs with common authentication mechanisms.

OpenStack is broken up into services to allow you to plug and play components depending on your needs.

An OpenStack deployment contains a number of components providing APIs to access infrastructure resources.

- Compute
  - Nova - Compute Service
  - Zun
- Hardware Lifecycle
  - Ironic
  - Cyborg
- Storage
- Networking
  - Neutron - Networking
  - Octavia - Load Balancer
  - Designate
- Shared Services
- Orchestration
  - Heat - Orchestration
  - Senlin
  - Mistral
  - Zaqar
  - Blazar
- Workload Provisioning
  - Magnum
  - Sahara
  - Trove
- Application Lifecycle
  - Masakari
  - Murano
  - Solum
  - Freezer
- API Proxies
  - EC2API
- Web Frontend
  - Horizon

- Nova is the OpenStack project that provides a way to provision compute instances (aka virtual servers).

- Nova supports creating virtual machines, bare-metal servers (through the use of ironic), and has limited support for system containers.

- Nova runs as a set of daemons on top of existing Linux servers to provide that service.

For the basic functionality to work, Nova needs some of the other OpenStack services: Keystone, Glance, Neutron, and Placement

- Keystone is an OpenStack service that provides API client authentication, service discovery, and distributed multi-tenant authorization by implementing OpenStack’s Identity API.

- Swift is a highly available, distributed, eventually consistent object/blob store. Organizations can use Swift to store lots of data efficiently, safely, and cheaply

- Cinder is the OpenStack Block Storage service for providing volumes to Nova virtual machines, Ironic bare metal hosts, containers and more.

- Goals of Cinder are:

- Component based architecture: Quickly add new behaviors

- Highly available: Scale to very serious workloads

- Fault-Tolerant: Isolated processes avoid cascading failures

- Recoverable: Failures should be easy to diagnose, debug, and rectify

- Open Standards: Be a reference implementation for a community-driven api

- Manila is the OpenStack Shared Filesystems service for providing Shared Filesystems as a service.

- Some of the features of Manila are:

- Component based architecture: Quickly add new behaviors

- Highly available: Scale to very serious workloads

- Fault-Tolerant: Isolated processes avoid cascading failures

- Recoverable: Failures should be easy to diagnose, debug, and rectify

- Open Standards: Be a reference implementation for a community-driven api

- Neutron is an OpenStack project to provide "network connectivity as a service" between interface devices (e.g., vNICs) managed by other OpenStack services (e.g., nova).

- It implements the OpenStack Networking API

- The Image service (glance) project provides a service where users can upload and discover data assets that are meant to be used with other services. This currently includes images and metadata definitions.

- Glance image services include discovering, registering, and retrieving virtual machine (VM) images.

- Glance has a RESTful API that allows querying of VM image metadata as well as retrieval of the actual image.

- Glance hosts a metadefs catalog. This provides the OpenStack community with a way to programmatically determine various metadata key names and valid values that can be applied to OpenStack resources.

- Heat is a service to orchestrate composite cloud applications using a declarative template format through an OpenStack-native REST API. Heat provides a template based orchestration for describing a cloud application by executing appropriate OpenStack API calls to generate running cloud applications.

- A Heat template describes the infrastructure for a cloud application in text files which are readable and writable by humans, and can be managed by version control tools.

- Templates specify the relationships between resources (e.g. this volume is connected to this server). This enables Heat to call out to the OpenStack APIs to create all of your infrastructure in the correct order to completely launch your application.

- The software integrates other components of OpenStack. The templates allow creation of most OpenStack resource types (such as instances, floating ips, volumes, security groups, users, etc), as well as some more advanced functionality such as instance high availability, instance autoscaling, and nested stacks.

- Heat primarily manages infrastructure, but the templates integrate well with software configuration management tools such as Puppet and Ansible.

- Operators can customize the capabilities of Heat by installing plugins.
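How a template's resource relationships let Heat create infrastructure in the correct order can be sketched with a dependency sort. The template below is a minimal example written as a Python dictionary (Heat templates are normally YAML files); the image and flavor names are placeholders, and the ordering logic is only an illustration of the idea, not Heat's engine. It requires Python 3.9+ for `graphlib`.

```python
from graphlib import TopologicalSorter

# A small HOT-style template: a server, a volume, and their attachment.
template = {
    "heat_template_version": "2018-08-31",
    "resources": {
        "server": {
            "type": "OS::Nova::Server",
            "properties": {"image": "cirros", "flavor": "m1.small"},
        },
        "volume": {
            "type": "OS::Cinder::Volume",
            "properties": {"size": 1},
        },
        "attachment": {
            "type": "OS::Cinder::VolumeAttachment",
            "properties": {
                "instance_uuid": {"get_resource": "server"},
                "volume_id": {"get_resource": "volume"},
            },
        },
    },
}


def referenced_resources(value):
    """Collect every resource name referenced through get_resource."""
    refs = set()
    if isinstance(value, dict):
        for key, inner in value.items():
            if key == "get_resource" and isinstance(inner, str):
                refs.add(inner)
            else:
                refs |= referenced_resources(inner)
    elif isinstance(value, list):
        for item in value:
            refs |= referenced_resources(item)
    return refs


def creation_order(tpl):
    """Order resources so that each one comes after what it refers to."""
    graph = {name: referenced_resources(body.get("properties", {}))
             for name, body in tpl["resources"].items()}
    return list(TopologicalSorter(graph).static_order())


print(creation_order(template))
```

The attachment always appears after both the server and the volume, because it refers to them; the relative order of the server and the volume is not constrained by this template.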

TODO

TODO

- PBS Pro

- Maui

- Moab

- HDFS, a sub-project of the Apache Hadoop project, is a distributed, highly fault-tolerant file system designed to run on low-cost commodity hardware

Hadoop MapReduce is a software framework for easily writing applications which process vast amounts of data (multi-terabyte data-sets) in-parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.
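The programming model behind MapReduce can be shown with the classic word count, run here in a single process. This is plain Python that mimics the map, shuffle, and reduce phases; it does not use the Hadoop API, and the function names are chosen for the example.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values of one key into a single result."""
    return key, sum(values)


def word_count(documents):
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(key, values)
                for key, values in shuffle(pairs).items())


print(word_count(["the cloud scales", "the cluster scales out"]))
# {'the': 2, 'cloud': 1, 'scales': 2, 'cluster': 1, 'out': 1}
```

On a real cluster the map calls run in parallel on the nodes that hold each input split, and the framework performs the shuffle over the network and reruns failed tasks, which is where the fault tolerance mentioned above comes from.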