This chapter of the guide is aimed at pool operators who want to get their heads around the current Shelley network stack until P2P (Governor with DeltaQ) arrives. It also describes some networking practices for setting up a staking pool environment.

Disclaimer: The information in this gist applies only to the currently implemented network stack of Haskell Shelley, and might be inaccurate or even completely wrong. The author cannot be held responsible for any consequences of using any information from this gist; you acknowledge that you are USING IT AT YOUR OWN RISK.

There are two types of applications that participate in the Cardano Haskell Shelley network:

- The (local) clients, and

- (Full) nodes (relays, core etc.)

Full nodes are cardano-node instances running on different VMs/servers/clouds across the globe. They are connected to one or more other nodes (peers) using (stateful) TCP connections (channels, bearers). Each node maintains only one connection (either outgoing or incoming) per other node (peer), though a node can have multiple incoming and outgoing connections at the same time.

Clarification is required as I could see more than one connection to and from the same peer.

Clients are local software (cardano-wallet, cardano-explorer, cardano-cli etc.) that connect to a cardano-node instance running on the same server/VM as the client, through a local IPC (Inter-Process Communication) mechanism (e.g. a Unix domain socket, or a named pipe on Windows).

Communication protocol: a well-defined set of rules and regulations that determine how data is transmitted in computer networking.

It might not be obvious from the above diagram, but (currently) only two types of communication protocols are implemented in Shelley:

- the Node to Node (N2N) communication protocol, which (currently) uses (stateful) TCP connections between nodes, and

- the Node to Client (N2C) communication protocol, where the clients (local clients) are standalone local applications, such as CLIs, wallets, explorers, 3rd party tools etc., that connect to nodes through a local IPC (Inter-Process Communication) mechanism (e.g. a Unix domain socket, or a named pipe on Windows).

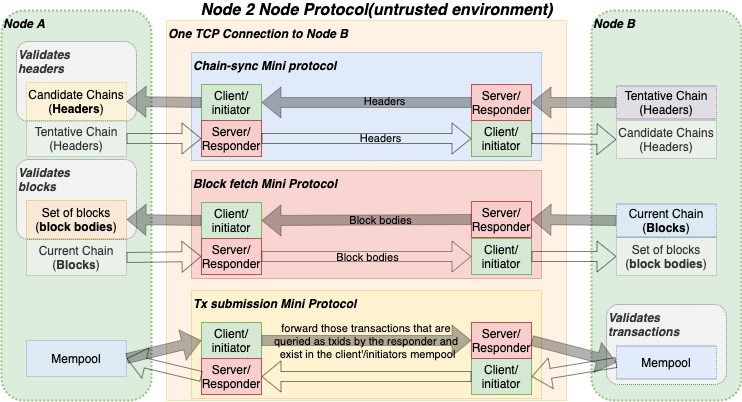

These two communication protocols use different sets of application-level mini-protocols developed by IOHK. Each mini-protocol has a client (initiator) and a server (responder) component. Almost always (except in N2C, see the details later), a node instantiates both components of the same mini-protocol, the client/initiator and the server/responder, when the connection is established and the mini-protocols are started. For details, see the diagrams below.

Keep in mind that `client` has a meaning in two very distinct contexts: the client software (local client) and the instantiated client/initiator part of a mini-protocol.

The mini-protocols of N2C and N2N are multiplexed (afaik using the `mux` mini-protocol) over a single connection (a Unix domain socket and TCP, respectively).
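
To illustrate the idea of multiplexing, here is a minimal Python sketch that interleaves messages of several mini-protocols over one byte stream and separates them again on the other side. The frame layout (a 2-byte protocol number followed by a 2-byte payload length) and the protocol numbers are assumptions made for this illustration only; they are not the wire format used by cardano-node.

```python
import struct
from collections import defaultdict

# Hypothetical frame header: protocol number (uint16) + payload length (uint16),
# both big-endian. This is NOT the real cardano-node wire format.
HEADER = struct.Struct(">HH")

# Hypothetical protocol numbers, chosen only for this example.
CHAIN_SYNC, BLOCK_FETCH, TX_SUBMISSION = 2, 3, 4


def mux(messages):
    """Pack (protocol_number, payload) pairs into one byte stream."""
    stream = bytearray()
    for protocol, payload in messages:
        stream += HEADER.pack(protocol, len(payload)) + payload
    return bytes(stream)


def demux(stream):
    """Split a byte stream back into per-protocol lists of payloads."""
    queues = defaultdict(list)
    offset = 0
    while offset < len(stream):
        protocol, length = HEADER.unpack_from(stream, offset)
        offset += HEADER.size
        queues[protocol].append(stream[offset:offset + length])
        offset += length
    return dict(queues)


# Messages of two mini-protocols share the same "connection".
wire = mux([
    (CHAIN_SYNC, b"request next header"),
    (TX_SUBMISSION, b"request tx ids"),
    (CHAIN_SYNC, b"roll forward"),
])
per_protocol = demux(wire)
```

Each mini-protocol only sees its own messages in `per_protocol`, although all of them travelled over the same stream.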

The N2N communication protocol uses the following (independent application-layer) mini-protocols, for both the upstream and the downstream direction of each peer:

- chain sync, to reconstruct the chain of an upstream node by syncing chains of headers between them.

  - chain sync client, for getting (i.e. pulling) chains of block headers from the directly connected peer and validating them, as each of its peers can have different chains/forks.

  - chain sync server, which allows its client to sync its node's chain with the server's current chain from the database.

- block fetch, for downloading block bodies from various peers.

  - block fetch client, which downloads the selected blocks from the selected peers; it also decides which block bodies should be downloaded from which selected peer.

  - block fetch server, which allows its client to sync its node's blocks with the server's blocks from its database.

    - the client requests these with pull requests.

- tx-submission, for submitting transactions.

  - tx-submission server, which receives transactions from a downstream peer, validates them, and forwards them (through its tx-submission client towards each connected upstream peer).

  - tx-submission client, for submitting transactions to upstream peers (on request of the upstream peer's tx-submission server).

Update: @karknu from the IOHK Haskell Networking team pointed out that the data flow of the tx-submission mini-protocol was wrong (I have fixed it now). He said:

The responder will ask the initiator for TxIds, and provided that the responder doesn't already have them in its mempool it will ask for them. That is, transactions flow from downstream peers to upstream peers.
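
The pull-based flow described in the quote can be sketched in Python as follows. The class and method names (`Initiator`, `Responder`, `request_tx_ids`, `request_txs`, `pull_from`) are hypothetical and only model the direction of the requests; validation and the real message types are left out.

```python
class Initiator:
    """Client side, running on the downstream peer that holds the transactions."""

    def __init__(self, txs):
        self.txs = dict(txs)  # tx id -> tx body

    def request_tx_ids(self):
        # Answer the responder's request for transaction ids.
        return list(self.txs)

    def request_txs(self, tx_ids):
        # Answer the responder's request for the transactions themselves.
        return {tx_id: self.txs[tx_id] for tx_id in tx_ids}


class Responder:
    """Server side, running on the upstream peer that pulls the transactions."""

    def __init__(self, mempool):
        self.mempool = dict(mempool)

    def pull_from(self, initiator):
        offered = initiator.request_tx_ids()
        # Only ask for the transactions that are not in the mempool yet.
        missing = [tx_id for tx_id in offered if tx_id not in self.mempool]
        self.mempool.update(initiator.request_txs(missing))
        return missing


downstream = Initiator({"tx1": "body1", "tx2": "body2"})
upstream = Responder({"tx1": "body1"})
fetched = upstream.pull_from(downstream)
```

Here the upstream peer already has `tx1`, so it only asks for `tx2`; the transaction moves from the downstream peer to the upstream peer even though the upstream side drives the exchange.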

See the details in the diagram below:

Although the N2N protocol instantiates both the client (Initiator) and the server (Producer) side of the mini-protocols, the diagram shows the related instantiated mini-protocols from Node A's point of view.

As can be seen in the above diagram, downstream (incoming) data is resource expensive, as a node needs to validate each of the blocks, headers and txs it receives, while upstream (outgoing) data is quite cheap, as the node just serves the data requested by other clients without any validation (it was already validated when it was received).

The N2C protocol uses the following mini-protocols:

- chain-sync, which is instantiated to use whole blocks instead of headers as in N2N, and

- local-tx-submission, which is used by the local client (e.g. cli etc.) to send a single transaction (it uses a push protocol instead of pull, unlike all the other mini-protocols). The node (server/responder) either accepts the transaction by confirming it, or rejects it by returning the reason to the local client. The server/responder side of the `local-tx-submission` mini-protocol is not instantiated in the local client.

The diagram shows the local client's point of view of the relevant domain socket connection.

As of now (16/Jun/2020), P2P (Governor, DeltaQ) has not been enabled yet in the Haskell implementation of Shelley (version 1.13.0); therefore, cardano-nodes currently use a static topology file (topology.json) for connecting to other peers.

The node reads the producers (peers) from its topology file, and connects to them.

Keep in mind that the current implementation does not use gossiping for discovering other peers, so it relies only on the producers (IPs or DNS names) that come from the topology file.

Therefore, a node can only have as many outgoing connections as there are IPs resolved (statically or dynamically, e.g. via DNS) from its topology file, and it has as many incoming connections as there are other nodes that resolve its IP from their topology files.
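
As a rough illustration, the Python sketch below lists the possible outgoing connections of a node from its topology file. The layout of the file (a `Producers` list with `addr` and `port` fields) is assumed here, as are the example addresses and the `resolve` and `outgoing_peers` helpers; check them against the topology.json of your own node.

```python
import ipaddress
import json

# Assumed example content of a topology.json file (addresses are placeholders).
TOPOLOGY = """
{
  "Producers": [
    {"addr": "192.0.2.10", "port": 3001},
    {"addr": "relays.example.com", "port": 3001}
  ]
}
"""


def resolve(addr, dns):
    """Return the IPs behind addr: itself if it is an IP, else a DNS lookup."""
    try:
        return [str(ipaddress.ip_address(addr))]
    except ValueError:
        # A real node would query DNS; a plain dict stands in for it here.
        return dns.get(addr, [])


def outgoing_peers(topology_text, dns):
    """List the (ip, port) pairs the node could connect to."""
    producers = json.loads(topology_text)["Producers"]
    return [
        (ip, producer["port"])
        for producer in producers
        for ip in resolve(producer["addr"], dns)
    ]


# Hypothetical DNS answers: one name that resolves to two relay IPs.
fake_dns = {"relays.example.com": ["198.51.100.1", "198.51.100.2"]}
peers = outgoing_peers(TOPOLOGY, fake_dns)
```

With these inputs, the two topology entries yield three possible outgoing connections, because the DNS name resolves to two IPs. If the name does not resolve at all, only the static IP remains.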

Keep in mind that cardano-node implements a protocol similar to Happy Eyeballs for resolving DNS names to multiple IP addresses (if applicable), which may have different performance and connectivity characteristics.
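
The idea behind Happy Eyeballs (RFC 8305) is to try addresses of different families in an alternating order, so that one slow or broken address family does not delay the connection. The Python sketch below only shows this ordering step; it illustrates the general technique under simplified assumptions and is not the algorithm that cardano-node implements. The function name `interleave_by_family` is made up for this example.

```python
import ipaddress
from itertools import zip_longest


def interleave_by_family(addresses):
    """Order addresses so that IPv6 and IPv4 alternate, starting with IPv6."""
    parsed = [ipaddress.ip_address(a) for a in addresses]
    v6 = [str(a) for a in parsed if a.version == 6]
    v4 = [str(a) for a in parsed if a.version == 4]
    ordered = []
    # zip_longest pads the shorter list with None, which is skipped below.
    for a6, a4 in zip_longest(v6, v4):
        if a6 is not None:
            ordered.append(a6)
        if a4 is not None:
            ordered.append(a4)
    return ordered


# Placeholder addresses, as they might come back from one DNS name.
order = interleave_by_family(
    ["192.0.2.1", "192.0.2.2", "2001:db8::1", "192.0.2.3"]
)
```

A connection attempt would then walk through `order`, starting the next attempt after a short delay if the previous one has not succeeded yet.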

The lifecycle of a connection depends on the termination (by request or error/exceptions) of any mini protocol (Needs clarification). A simple explanation of a connection life cycle contains the following steps:

- The (local) client or node connects to a peer node (using the OS's domain or TCP socket)

- Negotiates the protocol version (`handshake` protocol)

- Runs the required mini-protocols (`mux`, `chain-sync`, `block-fetch`, `tx-submission` etc.)

- Shuts down the required mini-protocols on exceptions (protocol violation) or on any shutdown request for a mini-protocol, i.e. when its state is set to `Done`, and finally

- Closes the (OS) connection to the peer node and releases any resources.
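
The steps above can be sketched as a small Python model. The `Connection` class, its method names and the version numbers are hypothetical, and choosing the highest common version in the handshake is an assumption. The sketch only mirrors the order of the steps and the rule, as described above and still marked as needing clarification, that the connection is closed once any mini-protocol terminates.

```python
class Connection:
    """Toy model of the connection lifecycle; not the cardano-node API."""

    def __init__(self, mini_protocols):
        self.mini_protocols = list(mini_protocols)
        self.states = {}
        self.log = []

    def connect(self):
        # Step 1: open the OS-level (domain or TCP) socket.
        self.log.append("connected")

    def handshake(self, ours, theirs):
        # Step 2: negotiate a version (assumed: highest one both sides know).
        common = set(ours) & set(theirs)
        if not common:
            raise ValueError("no common protocol version")
        version = max(common)
        self.log.append(f"version {version}")
        return version

    def run(self):
        # Step 3: start the required mini-protocols.
        for name in self.mini_protocols:
            self.states[name] = "Running"
        self.log.append("running")

    def terminate(self, name):
        # Step 4: one mini-protocol ends (request or error), all are set to Done.
        for protocol in self.states:
            self.states[protocol] = "Done"
        self.log.append(f"{name} terminated")
        self.close()

    def close(self):
        # Step 5: close the OS connection and release the resources.
        self.log.append("closed")


conn = Connection(["chain-sync", "block-fetch", "tx-submission"])
conn.connect()
version = conn.handshake(ours=[1, 2], theirs=[2, 3])
conn.run()
conn.terminate("chain-sync")
```

After `terminate`, every mini-protocol is in the `Done` state and `conn.log` records the five steps in order.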

TBD

- Data Diffusion and Peer Networking in Shelley

- Happy EyeBall protocol

- Shelley Network Design Part 1 (overview) - Not available yet