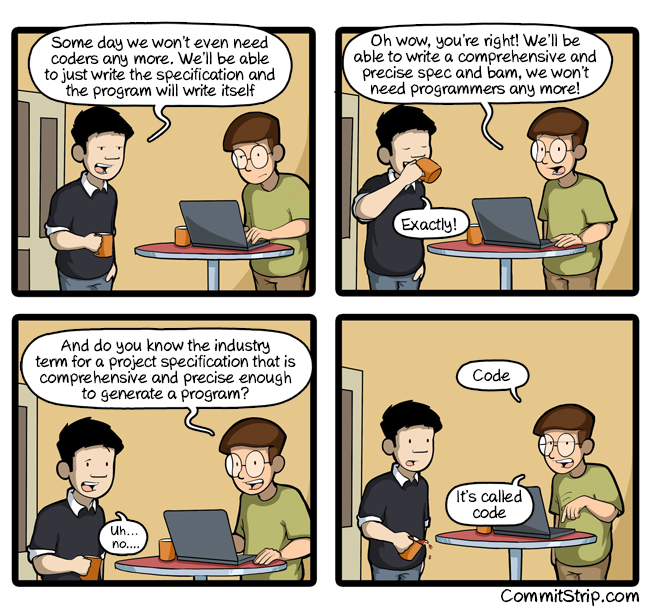

Yesterday, the occasionally brilliant comic strip commitstrip published this comic:

It set me thinking specifically about the topic of software testing. In particular, "what are we trying to achieve when writing tests for our software?"

As the comic astutely points out, a piece of software is, logically speaking, little more than documentation of its own requirements. Further to this, when we write tests for our software we represent those requirements in another form, and check that the software and the tests agree in their interpretation.

These requirements, at any level, are defined simply as a set of inputs and their corresponding outputs. Largely, what we do when we write tests is enumerate a subset of the available inputs and assert on their expected outputs, whereas the software attempts to do less enumeration and more abstraction while still reaching the same conclusions. In theory, any software could be replaced by a giant hash map of all possible inputs and their corresponding outputs. It just wouldn't be possible to write, and probably wouldn't be possible to run, and so we create abstractions - or "code" as we like to call it.
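As a rough sketch of that idea, take a leap-year check (my own illustrative example, not something from the comic). The names `LEAP_YEAR_TABLE` and `is_leap_year` are hypothetical. The table is the "hash map" view of the requirement, restricted to the handful of inputs we can be bothered to enumerate; the function is the abstraction; the test checks that the two agree.

```python
# The enumerated view: a small subset of inputs with their expected outputs.
# A complete table would need an entry for every possible year.
LEAP_YEAR_TABLE = {
    1900: False,  # divisible by 100 but not by 400
    1996: True,
    1999: False,
    2000: True,   # divisible by 400
    2023: False,
    2024: True,
}


def is_leap_year(year: int) -> bool:
    """The abstracted view: one rule that covers every input."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def test_is_leap_year_matches_known_cases():
    # The test and the code are two representations of the same requirement;
    # the assertion checks that they agree on the enumerated subset.
    for year, expected in LEAP_YEAR_TABLE.items():
        assert is_leap_year(year) == expected, year
```

The table only has six rows, but each one was chosen because it says something about the requirement, not because it hits a particular line of code.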

Where this begins to unravel slightly is when we introduce the notion of "test coverage" - the idea that our test suites should execute all of our code, and that 100% coverage, the supposed ideal, is when every single piece of our code is executed as part of our test run.

However, what this does is break the relationship between our tests and our requirements. No longer do our tests attempt to represent the requirements of our software; they begin to represent the structure of our code instead.

Effort goes into testing trivial, potentially less important areas of the codebase, rather than into covering the critical paths with a wider subset of the input domain, because once code is "covered" then it's tested, right? Right? Sometimes it gets even worse, and tests are written purely with the intention of executing a particular branch, but with no meaningful assertions about what the code is actually doing.
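To make that concrete, here is a minimal sketch using a made-up requirement ("members get 10% off, everyone else pays full price"). The function `member_price_cents` and the test names are hypothetical, invented purely for illustration. The first test executes every branch and asserts nothing; the other two say something about the requirement.

```python
def member_price_cents(price_cents: int, is_member: bool) -> int:
    """Hypothetical requirement: members get 10% off, others pay full price."""
    if is_member:
        return price_cents * 90 // 100
    return price_cents


def test_coverage_only():
    # Executes both branches, so a coverage tool would report 100%,
    # but this passes for any implementation that doesn't raise.
    member_price_cents(1000, True)
    member_price_cents(1000, False)


def test_members_get_ten_percent_off():
    # Tied to the requirement: fails if the discount is wrong.
    assert member_price_cents(1000, True) == 900


def test_non_members_pay_full_price():
    assert member_price_cents(1000, False) == 1000
```

If someone changed the discount to 50% by mistake, `test_coverage_only` would stay green and the coverage figure would not move; only the requirement-based tests would notice.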

Firstly, don't worry about code coverage in your tests. Think about requirements coverage instead.

What is the functionality that your code is representing? Try to write some tests that also represent that functionality. This is where the value of a TDD approach is evident, but don't get too hung up on writing tests first - you're only human after all. When you are writing your tests, though, try to abstract away from the code you've just written if you can, and think about representing in your tests the same requirements that inspired the code.

And certainly don't just write tests for the sake of having tests. A test that doesn't represent part of your requirements is just some maintenance overhead, or another thing that can fail your build when it's late, you have a deadline to hit, and "goddamnit Jenkins, why won't you just go green?!".

Your tests should work for you, not vice versa. When a test fails, you should know that this definitely means there is something wrong with your software, so write your assertions with that mindset.

- "If this assertion does not hold, is my software broken?"

- "If this assertion does hold, is my software working?"

If neither of the above applies, then don't write the test. If your code coverage isn't 100% as a result, then that's fine too (even better - you might be able to delete some code!).