Spark Jobs

So, what Spark does is that as soon as action operations like collect(), count(), etc., is triggered, the driver program, which is responsible for launching the spark application as well as considered the entry point of any spark application, converts this spark application into a single job which can be seen in the figure below

Spark Stages

Now here comes the concept of Stage. Whenever there is a shuffling of data over the network, Spark divides the job into multiple stages. Therefore, a stage is created when the shuffling of data takes place. These stages can be either processed parallelly or sequentially depending upon the dependencies of these stages between each other.

Spark Tasks

The single computation unit performed on a single data partition is called a task. It is computed on a single core of the worker node. Whenever Spark is performing any computation operation like transformation etc, Spark is executing a task on a partition of data.

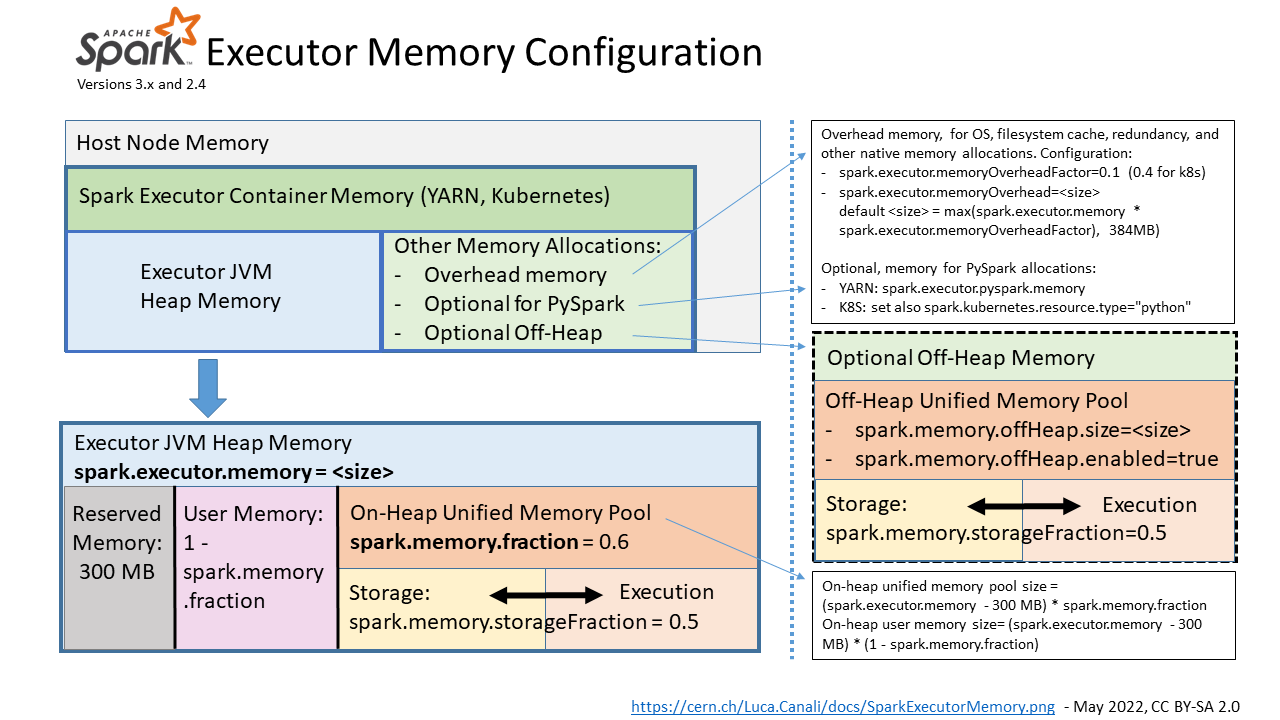

20190409 Best practices for successfully managing memory for Apache Spark applications on Amazon EMR

You can use two DataFrameReader APIs to specify partitioning:

- jdbc(url:String,table:String,partitionColumn:String,lowerBound:Long,upperBound:Long,numPartitions:Int,...)

- jdbc(url:String,table:String,predicates:Array[String],...)