This is a proposal to supplement / replace the current SigOps (signature opcodes) counting in bitcoin, with a more sensible limit that is based on actual execution of signature checking opcodes. I will call this new metric "SigChecks". (please, let's avoid "real SigOps" or "new SigOps")

The basics:

- Signature check opcodes only produce SigChecks when they are executed.

- scriptPubKeys have 0 SigChecks as far as the containing transaction is concerned, no matter what opcodes are in them. (Note: If SigOps rule is removed then there will no longer be any consensus restrictions on scriptPubKey, thus it will be unnecessary to parse the output scripts during block validation.)

- scriptSigs in coinbases have 0 SigChecks, because they are not executed.

- Signature-checking opcodes in unexecuted branches of scripts (e.g., inside an untaken OP_IF/OP_ENDIF branch) do not contribute SigChecks.

- Cancelled signature checks (where signatures are NULL, and thus the opcode returns False to stack) do not increment SigChecks.

- The SigChecks count can in principle be computed without actually having to verify any signatures nor computing any sighashes.

- This ability is a consequence of the NULLFAIL rule, and this is a nice property to keep for efficient transaction relay.

It is assumed the reader is familiar with the NULLFAIL rule and the Schnorr multisig upgrade. Readers need not know anything about SigOps counting or limitations except that they are terrible.

During script execution, SigChecks are accumulated as follows:

- The SigChecks for a failed script / failed transaction is undefined.(*)

- The SigChecks for pre-activation mined scripts or transactions is undefined.(*)

- Successfully executing OP_CHECKSIG / OP_CHECKSIGVERIFY increments SigChecks by:

- 0, if signature is NULL (opcode returns false)

- +1, if signature is non-NULL (opcode returns true)

- Successfully executing OP_CHECKDATASIG / OP_CHECKDATASIGVERIFY increments SigChecks by:

- 0, if signature is NULL (opcode returns false)

- +1, if signature is non-NULL (opcode returns true)

- Successfully executing an M-of-N OP_CHECKMULTISIG or OP_CHECKMULTISIGVERIFY increments SigChecks by:

- 0, if all signatures are null (opcode returns false, or, M=0 and opcode returns true)

- +M, if the verification is in New/Schnorr mode and at least one signature is non-null. (dummy element is non-NULL, opcode returns true)

- +N, if the verification is in Old/ECDSA mode and at least one signature is non-null. (dummy element is NULL, opcode returns true)

(*) Implementations may use the undefined nature of old/invalid SigChecks to simplify handling. However it is recommended that the definitions provided here are used precisely as they can also gauge worst-case CPU consumption for pre-NULLFAIL scripts.
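
The counting rules above can be sketched in a few lines of Python. This is only an illustration, not a reference implementation: the function names are hypothetical, signatures are modelled as byte strings with the empty string standing for NULL, and the opcode is assumed to have executed successfully (failed scripts have undefined SigChecks).

```python
from typing import Sequence


def is_null(element: bytes) -> bool:
    """A NULL stack element is modelled here as the empty byte string."""
    return len(element) == 0


def sigchecks_for_checksig(sig: bytes) -> int:
    """OP_CHECKSIG(VERIFY) and OP_CHECKDATASIG(VERIFY): 0 if NULL, else +1."""
    return 0 if is_null(sig) else 1


def sigchecks_for_checkmultisig(sigs: Sequence[bytes],
                                n_pubkeys: int,
                                dummy: bytes) -> int:
    """M-of-N OP_CHECKMULTISIG(VERIFY), where M == len(sigs), N == n_pubkeys.

    - all signatures NULL (this includes M = 0): 0
    - dummy non-NULL (New/Schnorr mode):         +M
    - dummy NULL (Old/ECDSA mode):               +N
    """
    if all(is_null(s) for s in sigs):
        return 0
    if is_null(dummy):
        return n_pubkeys
    return len(sigs)
```

For instance, a legacy 1-of-20 spend gives `sigchecks_for_checkmultisig([b"sig"], 20, b"")`, which evaluates to 20, while the same spend in Schnorr mode (non-NULL dummy) evaluates to 1.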

It is proposed that for a given transaction input, the number of SigChecks accumulated during script execution (scriptSig, scriptPubKey, and (if P2SH) redeemScript) is to be limited according to the length of the scriptSig, L:

txin_SigChecks <= minimum( (L + 60) // 43, L // 23)

(where // indicates floor division)

See the rationale section for the reasoning behind making a per-input limit, and the reason for this particular formula.

Note that since transaction inputs have a minimum size of 41 bytes (36 byte outpoint, 1-3 byte varint for scriptSig length, 4 byte sequence number), this means the maximum density achievable is 1/36.6666 sigchecks per byte which occurs at L = 69 (three SigChecks) and txin_len = 110.
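
As a quick sanity check of the formula and of the density figure, here is a small Python sketch. The helper names are mine, and the input size is taken to be outpoint + scriptSig length varint + scriptSig + sequence number, as described above.

```python
from fractions import Fraction


def max_txin_sigchecks(scriptsig_len: int) -> int:
    """Proposed per-input limit: min((L + 60) // 43, L // 23)."""
    return min((scriptsig_len + 60) // 43, scriptsig_len // 23)


def varint_len(n: int) -> int:
    """Size in bytes of the length prefix for a value n."""
    if n < 0xFD:
        return 1
    if n <= 0xFFFF:
        return 3
    if n <= 0xFFFFFFFF:
        return 5
    return 9


def txin_len(scriptsig_len: int) -> int:
    """36-byte outpoint + varint + scriptSig + 4-byte sequence number."""
    return 36 + varint_len(scriptsig_len) + scriptsig_len + 4


def densest_scriptsig_len(max_len: int = 10_000):
    """Brute-force search for the scriptSig length with the highest
    allowed SigChecks-per-byte ratio, up to max_len."""
    def density(length: int) -> Fraction:
        return Fraction(max_txin_sigchecks(length), txin_len(length))

    best = max(range(max_len + 1), key=density)
    return best, density(best)
```

Under these assumptions `densest_scriptsig_len()` returns `(69, Fraction(3, 110))`, i.e. three SigChecks in a 110-byte input.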

In terms of implementation, a per-input limit is easy to implement since the test can be done in VerifyScript, immediately after EvalScript has finished counting up SigChecks.

This per-input limit is proposed to be a RELAY RULE, i.e., it does not apply during block validation. The limit is to be checked only under the set of standard script flags.

No additional standardness rules will be imposed on transactions. Note that the above per-input rule, combined with the maximum standard transaction size (100 kB), means a standard transaction cannot have more than about 2730 SigChecks, and cannot exceed a density of 1/36.6666.

At consensus layer, the existing per-input parallel checking system (used in ConnectBlock) makes it difficult to implement any kind of per-transaction limit, and so any consensus limits on transaction sigChecks are not recommended.

If the per-transaction or per-input rules are only adopted at the standardness layer, then an additional rule is needed at the consensus layer in order to limit the CPU usage that can be induced by a block.

Note that any block constraint (sigops or sigchecks) that varies with size creates a block assembly optimization problem where one resource (blocksize) is traded off against another (sigops/sigchecks). Therefore, it is proposed that the number of SigChecks be limited based on the maximum block size:

block_SigChecks <= max_block_size // 100

(Such a "flat" limit does not create significant DoS risk relative to a varying limit, since attackers can always pad blocks with large data transactions.)
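
A minimal Python sketch of the flat block limit follows. The function names are hypothetical, and the 32,000,000-byte maximum block size used in the example is just an illustrative value.

```python
from typing import Iterable


def max_block_sigchecks(max_block_size: int) -> int:
    """Flat limit: depends on the maximum block size, not the actual size."""
    return max_block_size // 100


def block_within_sigchecks_limit(tx_sigchecks: Iterable[int],
                                 max_block_size: int) -> bool:
    """True if the summed per-transaction SigChecks fit under the limit."""
    return sum(tx_sigchecks) <= max_block_sigchecks(max_block_size)


# Illustrative only: with a 32,000,000-byte maximum block size the limit
# is 320,000 SigChecks, whether the block is 1 kB or completely full.
EXAMPLE_LIMIT = max_block_sigchecks(32_000_000)
```

Because the limit does not depend on the actual block size, a block assembler only has to track a single running SigChecks total against a constant.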

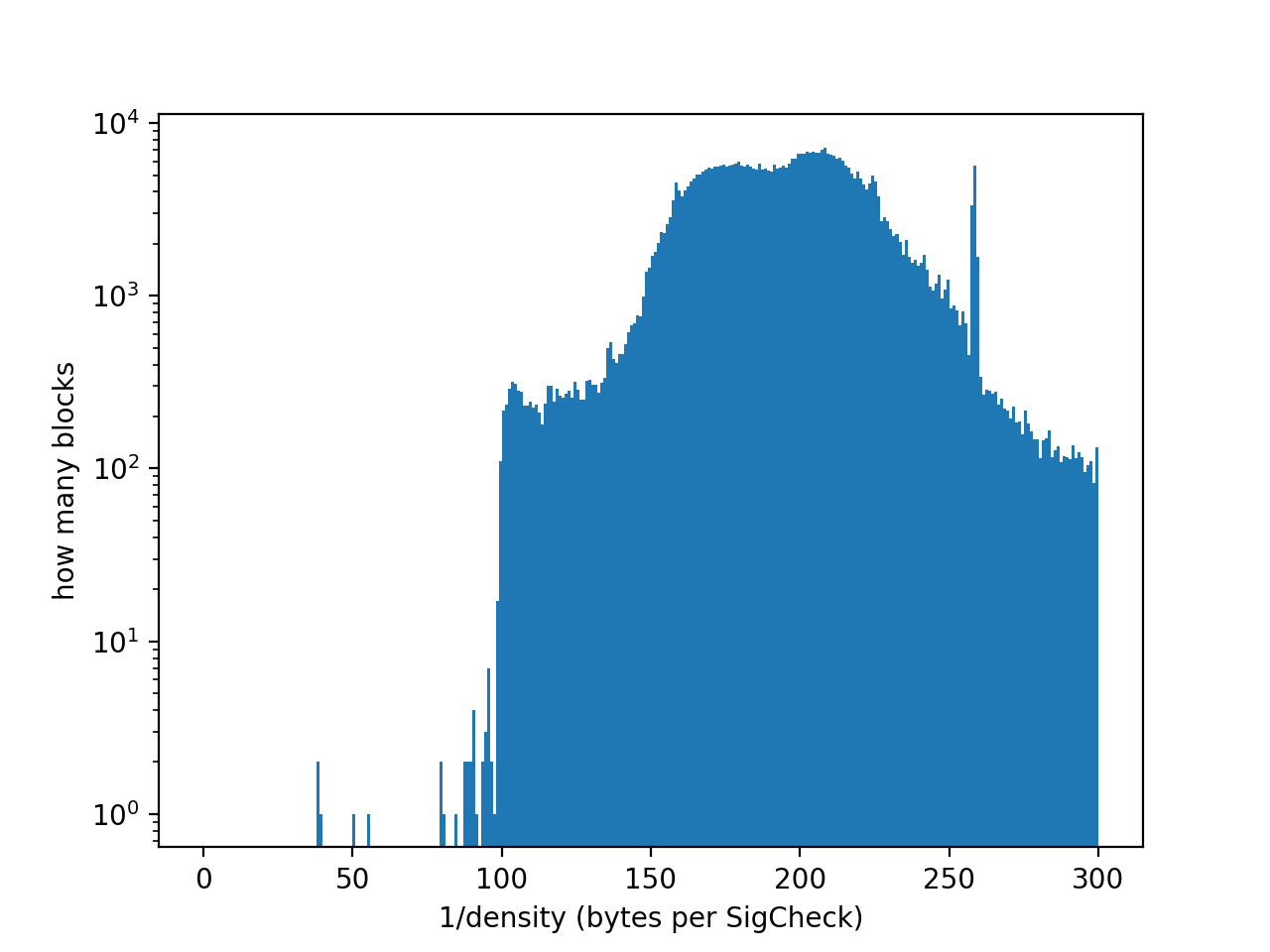

The value of 100 was chosen based on observing typical past blocks, and considering consolidations of typical transaction classes. Notably, 2-of-3 ECDSA multisig are very common. A block packed with ECDSA consolidations of 2-of-3 multisig will reach a density of around 100 bytes/sigcheck. In contrast dense P2PKH consolidations will land around 141 bytes/sigcheck (schnorr).

In terms of implementation, the block limit is delicate to implement due to the script caching system:

- The script cache needs to be updated from being a set-like cache to being a map-like cache. This is so that ConnectBlock is able to access the SigChecks count of transactions.

- Prior to the upgrade, transactions with a very high number of SigChecks are standard (theoretically up to 500000) and mineable. Although it's unlikely that someone will broadcast them, it must not cause a disruption if they choose to do so.
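
To illustrate the set-to-map change, here is a rough Python sketch. It does not mirror any node's actual cache: the class, the opaque `key` (standing in for whatever digest of transaction and script flags the real cache uses), and the `count_by_execution` callback are all hypothetical.

```python
from typing import Callable, Dict, Iterable, Optional


class SigChecksScriptCache:
    """Map-like cache: key -> SigChecks counted when the scripts passed.

    A set-like cache could only answer "has this been validated?";
    storing the count lets block validation reuse it without re-executing.
    """

    def __init__(self) -> None:
        self._entries: Dict[bytes, int] = {}

    def store(self, key: bytes, sigchecks: int) -> None:
        self._entries[key] = sigchecks

    def lookup(self, key: bytes) -> Optional[int]:
        return self._entries.get(key)


def block_sigchecks(cache: SigChecksScriptCache,
                    keys: Iterable[bytes],
                    count_by_execution: Callable[[bytes], int]) -> int:
    """Sum SigChecks over a block, executing scripts only on cache misses."""
    total = 0
    for key in keys:
        count = cache.lookup(key)
        if count is None:
            count = count_by_execution(key)  # full script execution
            cache.store(key, count)
        total += count
    return total
```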

Currently, there are the following rules on SigOps:

- Maximum per-block limit (consensus)

- Maximum per-tx limit (consensus)

- Maximum per-tx limit (standardness)

- Maximum per-input limit for P2SH (standardness)

- (Possibly others)

It is proposed that all of these SigOps rules shall be deactivated simultaneously when the SigChecks rules come into force.

- A legacy 1-of-20 multisignature can require anywhere from 1 to 20 actual signature verifications in order to return True. It is practically not possible to know how many verifications are required without actually doing them. Thus, it counts as 20 SigChecks regardless of how many signature verifications were actually required in that case.

- A 0-of-N multisignature always returns True, but can be executed in two ways depending on the dummy element. Since the rule only looks at whether all signatures are null (which is trivially true when there are no signatures), this counts as 0 sigchecks regardless of the mode.

- Signature checks are not the only time-consuming operations in script execution. Other slow operations the author is aware of include: OP_ROLL with a large stack, the inefficient OP_IF stacking algorithm, and the "sighash" hash computation with very large scriptCodes.

- Signature checks are also not the only slow operation in verifying new transactions. UTXO lookup is also slow.

Counting 0 SigChecks for NULL signatures (false-returning opcodes) doesn't seem to take away any capabilities, but it may also seem like an unnecessary complication. My motivation for this is that I'd like to encourage script authors to write their scripts in the form:

<alice key> OP_CHECKSIG OP_IF <alice conditions> OP_ELSE <bob key> OP_CHECKSIGVERIFY <bob conditions> OP_ENDIF

rather than

OP_IF <alice key> OP_CHECKSIGVERIFY <alice conditions> OP_ELSE <bob key> OP_CHECKSIGVERIFY <bob conditions> OP_ENDIF

The second form is malleable using the scriptSig argument to OP_IF, whereas in the first form the scriptSig must have either NULL or a valid signature from Alice's key and thus cannot be so easily malleated by third parties. The SigChecks=0 rule for false-returning opcodes means that the superior form (first script) doesn't have to pay an additional SigCheck in order to avoid malleability. In addition, there are many historical cases of using OP_CHECKxSIG opcodes in a return-False manner, and invariably these have quite short scriptSigs so they would get hit by a SigChecks rule that counts NULL signatures.

The standardness limits need to support all currently standard use cases:

- Bare multisignatures are the most dense application, since all pubkeys are located in the prior transaction and thus the scriptSig contains only signatures. Currently, only 1-of-1, 1-of-2, 2-of-2, 1-of-3, 2-of-3, 3-of-3 are supported as standard to fund. Of these, 1-of-3 bare ECDSA multisig is the most dense.

- Note that super-dense bare multisigs such as 1-of-20, while nonstandard to fund, actually are currently standard to spend. Under the proposed density limiting rule, they will become nonstandard to spend using ECDSA signatures (but fully spendable with Schnorr signatures).

- P2SH multisignatures up to M-of-15 are standard; these are also fairly dense since they involve only a single signature, and compressed pubkeys are small (34 bytes including push opcode). 1-of-15 P2SH ECDSA multisig is another extremely dense usage.

- In contrast most script applications are far, far less dense than these.

- Typically you will have both a fresh signature and fresh public key for each SigCheck. For a Schnorr signature and compressed key this means at least 100 bytes per SigCheck (~106.5 bytes for ECDSA).

- Even without the public key, having just a fresh signature alone means at least ~66 bytes per SigCheck.

- Covenants using CHECKDATASIG reuse a pubkey and signature, however they are extremely sparse due to having a giant scriptSig (from pushing the sighash preimage).
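
The byte counts quoted above can be reproduced with some simple arithmetic. The sketch below assumes a 64-byte Schnorr signature, a 33-byte compressed public key, one sighash-type byte per signature, one push opcode per element, and an average ECDSA signature of 71.5 bytes including the sighash byte (ECDSA sizes vary, so that figure is only an average).

```python
PUSH_OPCODE = 1             # single-byte push prefix for elements <= 75 bytes
SIGHASH_BYTE = 1            # hashtype byte appended to each transaction signature
SCHNORR_SIG = 64            # fixed-size Schnorr signature
ECDSA_SIG_AVG = 70.5        # assumed average DER-encoded ECDSA signature size
COMPRESSED_PUBKEY = 33


def pushed_signature_bytes(sig_len: float) -> float:
    """Bytes a fresh signature adds to the scriptSig: push + sig + hashtype."""
    return PUSH_OPCODE + sig_len + SIGHASH_BYTE


def pushed_pubkey_bytes() -> int:
    """Bytes a fresh compressed pubkey adds: push + key."""
    return PUSH_OPCODE + COMPRESSED_PUBKEY


schnorr_sig_only = pushed_signature_bytes(SCHNORR_SIG)            # 66
schnorr_sig_and_key = schnorr_sig_only + pushed_pubkey_bytes()     # 100
ecdsa_sig_and_key = (pushed_signature_bytes(ECDSA_SIG_AVG)
                     + pushed_pubkey_bytes())                      # 106.5
```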

The historical usage of SigChecks reflects the above understanding; see the Historical patterns section below.

Based on interpolating the 1-of-3 bare ECDSA multisig (S=3, L=72.5) and the 1-of-15 P2SH ECDSA multisig (S=15, L=589.5) we get S = (L + 56.75)/43.08333. This is converted to S <= (L + 60)/43 since the round numbers are easier to work with; and note that ECDSA signatures do vary in size randomly so some allowance has to be made for this. For the 1-of-3 multisig, there would be a ~2^-33 chance of producing a too-short ECDSA signature.
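
The interpolation can be checked with exact arithmetic. In this sketch the line is written as S = (L + offset) / divisor, and the helper name is hypothetical.

```python
from fractions import Fraction


def line_through(p1, p2):
    """Return (offset, divisor) such that S = (L + offset) / divisor
    passes through both (L, S) points."""
    (l1, s1), (l2, s2) = p1, p2
    divisor = Fraction(l2 - l1) / (s2 - s1)
    offset = s1 * divisor - l1
    return offset, divisor


# (L, S) for 1-of-3 bare ECDSA multisig and 1-of-15 P2SH ECDSA multisig
bare_1_of_3 = (Fraction(145, 2), 3)     # L = 72.5
p2sh_1_of_15 = (Fraction(1179, 2), 15)  # L = 589.5

offset, divisor = line_through(bare_1_of_3, p2sh_1_of_15)
print(float(offset), float(divisor))
```

This gives an offset of exactly 56.75 and a divisor of 517/12 (about 43.0833), which the proposal rounds to 60 and 43.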

The above simple line limit still allows a higher density of SigChecks in some weird cases: S=1 at L=0, and S=2 at L=26. Again, the size of an input is 41+L for small L, giving the maximum densities as 1/41, 1/33.5, and 1/36.66 for S=1,2,3 respectively. By including a second limit of S <= L / 23, the maximum density moves from 1/33.5 to 1/36.66.

In the Historical patterns section below, it can be seen that the proposed rule is quite good in that it would only have affected six historical inputs, and even for these they would remain fully spendable just using Schnorr signatures.

It's also possible to consider a per-transaction limit, by dividing the total number of sigchecks in a transaction's executed scripts by the total transaction bytesize. It's important to note, however, that a transaction consolidating many 1-of-3 bare multisigs into one output will necessarily have a density limited by the per-input rule above. If the transaction density limit is stricter than that, it limits the number of 1-of-3 bare multisignatures that can be consolidated at once using ECDSA, and may entirely forbid M-of-N P2SH multisigs with high N from being spent with ECDSA. This would require such multisig wallets to either use padding (with OP_RETURN or by creating additional useless outputs that will never be spent), or to disable support for ECDSA (i.e., disabling hardware wallet support) and use only Schnorr sigs.

For example, a transaction density limit of 1/50 SigChecks/byte would impose the following restrictions on consolidations (let's say the consolidation is outputting to a single P2PKH, giving the transaction an extra overhead of 44 bytes):

- Unlimited 1-of-2 bare multisig or 2-of-3 bare multisig.

- At most one 1-of-3 bare multisig using ECDSA. All other bare multisigs unlimited (1-of-2, 2-of-3, etc.)

- No P2SH multisigs using ECDSA for 1-of-8 up to 1-of-15 and 2-of-13 up to 2-of-15. Most other p2sh multisigs unlimited.

Historically, thousands of transactions have been made with an overall density of just under 1/38 SigChecks/byte. These were large consolidations of 1-of-3 bare multisignatures. If the transaction density limit is set to 1/38 SigChecks/byte, then for consolidating we would support:

- Up to ~85 1-of-3 bare multisignatures consolidated to a single P2PKH / single P2SH, and,

- Unlimited consolidations of all other standard types

Historically, only two transactions have been made with a density more than 1/38; again, today they could be spent with low density using Schnorr sigs or padding.

I've implemented the SigChecks counting mechanism and used it to crawl the entire BCH blockchain up to block 585795 (June 2019).

- In total, there were 675069516 non-coinbase inputs, with an average SigChecks of 1.189 and average ScriptSig length L of 127.5 bytes.

- By far the most common is P2PKH (ECDSA, compressed key), having 1 SigCheck and L of 106 or 107. These are 68% of spent inputs.

- It is extremely rare to have SigChecks over 15. There are three inputs with 16; one with 20. (note, the current standardness rules mean that over 15 is nonstandard)

- My analysis data and scripts are available here: https://github.com/markblundeberg/bch-sigchecks-stats

- Note that the counting used a slightly distinct definition of sigchecks: 0 sigchecks if the opcode returned false, or +1/+N if the opcode returned true. Although this is equivalent for normal use cases, the count differs in 0-of-N multisigs and old weird scripts that would violate nullfail rule today. I plan to redo this analysis using the modern counting scheme, once it is settled on.

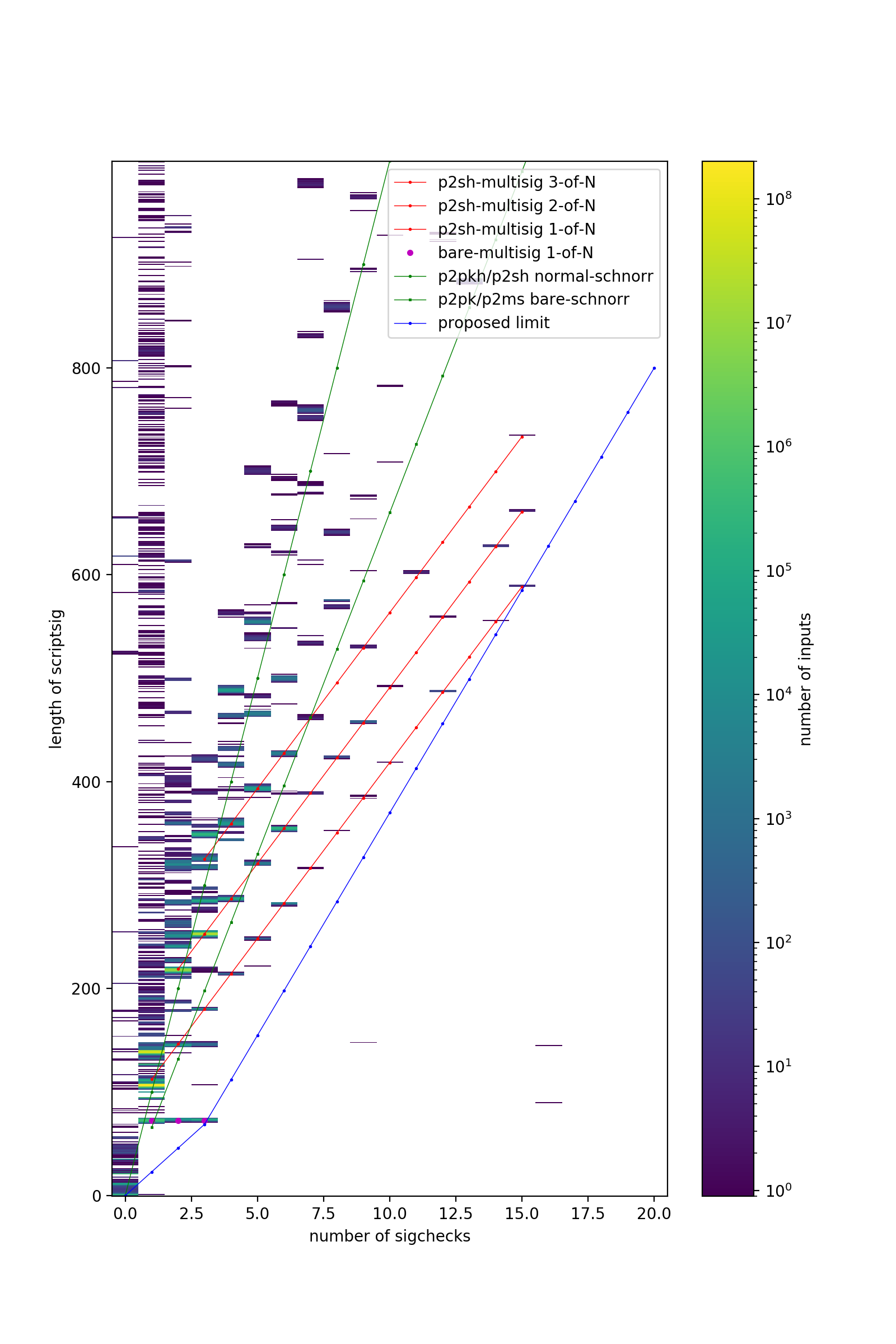

I have plotted all 675 million of these inputs in a histogram, with the proposed per-input limit marked in blue along with several common use-cases.

There are a total of six historical inputs that violated the proposed SigChecks rule. Note that all six would remain fully spendable even with the per-input limit, just the owners would need to choose the new OP_CHECKMULTISIG mechanics (bitfield in dummy element):

- (L=145, S=16) Transaction 18f4c7059be729fb48d014d2a4a0658a8ba1d35798d42274691a117da91643b5 spent a 2-of-16 bare multisig.

- (L=90, S=16) Transaction ed2f95d248d37367aaef3040c123a12af04a86f05f78ccd45871fba4acb4bb65 spent a 1-of-16 bare multisig.

- (L=148, S=9) Transaction 892bb6f09ed2ad140c48703aa71f1830273afb50c3c68f0d92d26045df47d15a spent a 1-of-9 bare multisig. (it also spent a 9-of-9, but that was within limits)

- (L=1, S=1) Transactions a0a4af46d9dec89c0d3075809634c36c37e919fdf244fcb522e67b825bfa0df9 and 01aa08cee9054262c4fedaf97e4e76ab4eab15f091d993825b078b722d5131d5 and b53c0654b6e0b926a5e8ca83926abfc6ac20b89409cd3d7a5747950278f9ef41 each spent a 0-of-1 bare multisig.

Note that the first two transactions were also extremely dense overall: 1/14 and 1/11 SigChecks/byte; all other transactions were at most 1/38 SigChecks/byte.

I've also compiled per-transaction density statistics from the same data set (267129100 non-coinbase transactions). Note that 5 million transactions were too low density to include in the plot (more than 300 bytes per SigCheck).

Likewise I've looked at blocks. The following plot shows distribution of block densities, excluding the coinbase transaction (which was not logged into the dataset). Note that 93575 blocks are off-plot since either they had no SigChecks or the density was too low. The highest density blocks (at just under ~ 1/38 SigChecks/byte) were #515557, #515558, and #515583 (packed full of 1-of-3 bare multisig consolidations).

Since the block-level limit (100 bytes per sigcheck) is more sparse than the densest standard tx, standard blocks can fill to the sigchecks limit before they fill up to the bytesize limit. With blocks that are largely unfilled this is not a big deal; in case of congestion, however, it is best if transaction priority takes the sigchecks count into account for high-sigchecks transactions. This can be incorporated using a 'vbytes' system, like the one implemented in Bitcoin Core.

vbytes = max(tx_Size, 100 * tx_SigChecks)

(The use of vbytes is also helpful in making a simple block assembler, which only examines vbytes.) In principle the minimum fee rate calculation could be modified to use this vbytes, but that change in standardness rules is not recommended for this upgrade.
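
A small Python sketch of how vbytes could feed into a simple priority ordering. The `MempoolTx` record and the fee-per-vbyte sort are my own illustration of the idea, not a description of any existing block assembler.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MempoolTx:
    """Hypothetical record holding only what the assembler needs."""
    txid: str
    size: int        # serialized size in bytes
    sigchecks: int   # SigChecks counted during script execution
    fee: int         # fee in satoshis


def vbytes(tx_size: int, tx_sigchecks: int) -> int:
    """vbytes = max(tx_Size, 100 * tx_SigChecks)."""
    return max(tx_size, 100 * tx_sigchecks)


def order_by_fee_per_vbyte(txs: List[MempoolTx]) -> List[MempoolTx]:
    """Highest fee per vbyte first, so sigchecks-dense transactions
    are priced as if they occupied 100 bytes per SigCheck."""
    return sorted(txs,
                  key=lambda tx: tx.fee / vbytes(tx.size, tx.sigchecks),
                  reverse=True)
```

For a typical P2PKH spend (say 226 bytes, 1 SigCheck) vbytes is simply the size, whereas a 110-byte input carrying 3 SigChecks would count as 300 vbytes.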