-

-

Save mattieb/6280653 to your computer and use it in GitHub Desktop.

| #!/usr/bin/python | |

| import time | |

| def new_d(): | |

| return { | |

| 1: 2, 3: 4, 5: 6, 7: 8, 9: 10, | |

| 11: 12, 13: 14, 15: 16, 17: 18, 19: 20 | |

| } | |

| def time_func(iterations, f, *args): | |

| start = time.time() | |

| for i in xrange(iterations): | |

| f(*args) | |

| return time.time() - start | |

| def try_del(key): | |

| d = new_d() | |

| try: | |

| del d[key] | |

| except KeyError: | |

| pass | |

| def if_del(key): | |

| d = new_d() | |

| if key in d: | |

| del d[key] | |

| def pop_del(key): | |

| d = new_d() | |

| d.pop(key, None) | |

| def succeed(f): | |

| f(3) | |

| def fail(f): | |

| f(4) | |

| iterations = 1000000 | |

| print "pop succeed:", time_func(iterations, succeed, pop_del) | |

| print "pop fail:", time_func(iterations, fail, pop_del) | |

| print "try succeed:", time_func(iterations, succeed, try_del) | |

| print "try fail:", time_func(iterations, fail, try_del) | |

| print "if succeed:", time_func(iterations, succeed, if_del) | |

| print "if fail:", time_func(iterations, fail, if_del) |

The general approach to testing is right,

- avoid shallow copy of the dict with new_d()

- avoid timing time_func itself by starting and ending the measurement within it

- run the functions in a tight loop with a large number of iterations to get a more meaningful comparison number

I'm not so sure about the premise of the testing, and the actual methodology.

The premise

The assertion that catching exceptions is slow may be true, but a real world measurement of try/except versus the other options isn't just an average between try success and try failure. The ratio of success/fail will vary to the situation, and I would hope that as consenting adults we would be applying try on data where we expect it to succeed the majority of the time. That's the situation where try is great, where it is indeed easier to ask for forgiveness than permission. If that's not the case then try/except is a bit of a rough approach to dealing with the data structure. In that sense I don't like the reductive assumptions to comparing try-success & try-failure to the other patterns. More generally the discussion is about the debate between using a built-in or one of the EAFP or LBYL patterns**[*]**

The methodology

The code is calling new_d(), and passing the functions into succeed() or fail() 1000000 times as well as the test subject functions, so these measurements are also including calls to an additional two functions on every iteration. They aren't particularly busy functions, but function calls still take time. It's DRY, sure, and a nice demonstration of higher order function usage which would be great in real code, but an empirical test setup should try to avoid measuring the test harness as well as the subject**[^]**

Rolling your own time_func is not that easy, better to use timeit which will isolate the function, do setup & and measure it more independently.

Compare the results of the original function with these modifications.

The first is the same as the original but removes the calls to new_d(). You might argue that a real world example function would need to call something to return a dict. It is also likely that it would be explicitly passed a parameter or directly access a global, class, instance, or locally scoped dictionary in the function. Perhaps I'm wrong and a function would indeed usually generate a new dict by calling a function, but this test is supposed to zoom in and focus on the performance of the various approaches to pop, try, and if.

#!/usr/bin/python3

import time

def time_func(iterations, f, *args):

start = time.time()

for i in range(iterations):

f(*args)

return time.time() - start

def try_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

try:

del d[key]

except KeyError:

pass

def if_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

if key in d:

del d[key]

def pop_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

d.pop(key, None)

def succeed(f):

f(3)

def fail(f):

f(4)

iterations = 1000000

print("pop succeed:", time_func(iterations, succeed, pop_del))

print("pop fail:", time_func(iterations, fail, pop_del))

print("try succeed:", time_func(iterations, succeed, try_del))

print("try fail:", time_func(iterations, fail, try_del))

print("if succeed:", time_func(iterations, succeed, if_del))

print("if fail:", time_func(iterations, fail, if_del))The second keeps the calls to new_d(), but removes the calls to the success and fail functions in order to show the impact those have on the measurement, and modifies it to use timeit. Since timeit performs the iteration loop for you, the time_func had to be removed. In the original, the time_func was only called once, but internally ran 1000000 iterations. I could have left it as-is and run timeit once and time_func many times, but the point is to make use of the machinery of timeit. I could have also changed time_func iterations to 1, but that seemed a bit silly to run a loop of 1.

#!/usr/bin/python3

from timeit import timeit

def new_d():

return {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

def try_del(key):

d = new_d()

try:

del d[key]

except KeyError:

pass

def if_del(key):

d = new_d()

if key in d:

del d[key]

def pop_del(key):

d = new_d()

d.pop(key, None)

if __name__ == '__main__':

print("pop succeed:", timeit('pop_del(3)', setup='from __main__ import new_d, pop_del', number=1000000))

print("pop fail:", timeit('pop_del(4)', setup='from __main__ import new_d, pop_del', number=1000000))

print("try succeed:", timeit('try_del(3)', setup='from __main__ import new_d, try_del', number=1000000))

print("try fail:", timeit('try_del(4)', setup='from __main__ import new_d, try_del', number=1000000))

print("if succeed:", timeit('if_del(3)', setup='from __main__ import new_d, if_del', number=1000000))

print("if fail:", timeit('if_del(4)', setup='from __main__ import new_d, if_del', number=1000000))The third is a combination of the previous two

#!/usr/bin/python3

from timeit import timeit

def try_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

try:

del d[key]

except KeyError:

pass

def if_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

if key in d:

del d[key]

def pop_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

d.pop(key, None)

if __name__ == '__main__':

print("pop succeed:", timeit('pop_del(3)', setup='from __main__ import pop_del', number=1000000))

print("pop fail:", timeit('pop_del(4)', setup='from __main__ import pop_del', number=1000000))

print("try succeed:", timeit('try_del(3)', setup='from __main__ import try_del', number=1000000))

print("try fail:", timeit('try_del(4)', setup='from __main__ import try_del', number=1000000))

print("if succeed:", timeit('if_del(3)', setup='from __main__ import if_del', number=1000000))

print("if fail:", timeit('if_del(4)', setup='from __main__ import if_del', number=1000000))These are my times of the 4 scripts, starting with the original and then my 3 modified versions.

> python .\tests\pop_try_del_og.py

pop succeed: 0.6799745559692383

pop fail: 0.7050254344940186

try succeed: 0.625943660736084

try fail: 0.8100082874298096

if succeed: 0.6490054130554199

if fail: 0.6170117855072021

> python .\tests\pop_try_del_og_dicts.py

pop succeed: 0.648021936416626

pop fail: 0.60898756980896

try succeed: 0.5719962120056152

try fail: 0.7960281372070312

if succeed: 0.5789785385131836

if fail: 0.5299675464630127

> python .\tests\pop_try_del.py

pop succeed: 0.6000474864572732

pop fail: 0.6013029936221185

try succeed: 0.5362181026908466

try fail: 0.7164418500281282

if succeed: 0.5597693311757679

if fail: 0.5227962649254438

> python .\tests\pop_try_del_dicts.py

pop succeed: 0.5466019133644747

pop fail: 0.5360004235065076

try succeed: 0.5201604109909419

try fail: 0.7285216620418071

if succeed: 0.509157538099783

if fail: 0.45228045612282974There is some variability in these, so to take it next level and be scientific you should run each of these multiple times and alongside an average also report at least some measures of variance or standard deviation.

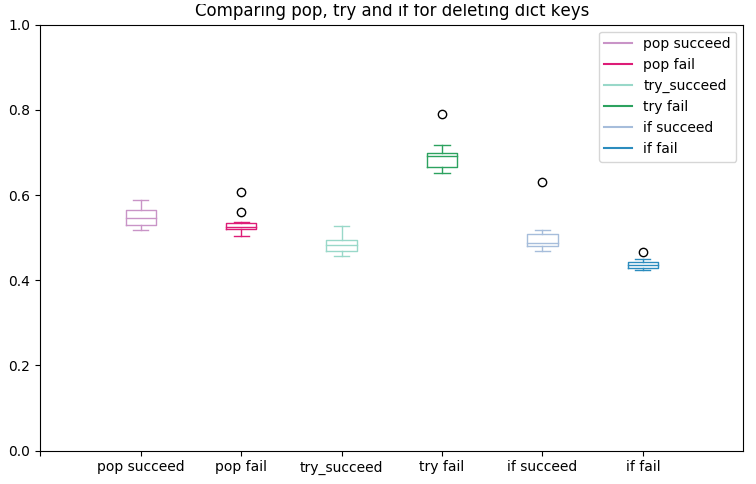

So I did that using 10 rounds of each:

Apart from matplotlib identifying some outliers (which will be related to sporadic things on my system) there is a fairly clear pattern here. They are all very quick, and apart from try-fail they are all pretty much the same performance, and given this is 1000000 iterations, each sits around 500ns, so it's a massively premature optimization to even think this through as far as has been done here. So stop it! Go and just use what works.

So I shamelessly borrowed matplotlib code for the boxplots from here; https://stackoverflow.com/a/20132614/1335793

I made no attempt at making it more DRY, so this code is for reference only, not an example of how you should do it:

#!/usr/bin/python3

from timeit import timeit

import numpy as np

import matplotlib.pyplot as plt

def try_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

try:

del d[key]

except KeyError:

pass

def if_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

if key in d:

del d[key]

def pop_del(key):

d = {

1: 2, 3: 4, 5: 6, 7: 8, 9: 10,

11: 12, 13: 14, 15: 16, 17: 18, 19: 20

}

d.pop(key, None)

def set_box_color(bp, color):

plt.setp(bp['boxes'], color=color)

plt.setp(bp['whiskers'], color=color)

plt.setp(bp['caps'], color=color)

plt.setp(bp['medians'], color=color)

if __name__ == '__main__':

pop_succeed , pop_fail, try_succeed, try_fail, if_succeed, if_fail = [], [], [], [], [], []

for i in range(10):

pop_succeed.append( timeit('pop_del(3)', setup='from __main__ import pop_del', number=1000000))

pop_fail.append(timeit('pop_del(4)', setup='from __main__ import pop_del', number=1000000))

try_succeed.append(timeit('try_del(3)', setup='from __main__ import try_del', number=1000000))

try_fail.append(timeit('try_del(4)', setup='from __main__ import try_del', number=1000000))

if_succeed.append(timeit('if_del(3)', setup='from __main__ import if_del', number=1000000))

if_fail.append(timeit('if_del(4)', setup='from __main__ import if_del', number=1000000))

plt.figure()

bp_ps = plt.boxplot(pop_succeed, positions=[2], widths=0.6)

bp_pf = plt.boxplot(pop_fail, positions=[4], widths=0.6)

bp_ts = plt.boxplot(try_succeed, positions=[6], widths=0.6)

bp_tf = plt.boxplot(try_fail, positions=[8], widths=0.6)

bp_is = plt.boxplot(if_succeed, positions=[10], widths=0.6)

bp_if = plt.boxplot(if_fail, positions=[12], widths=0.6)

set_box_color(bp_ps, '#c994c7')

set_box_color(bp_pf, '#dd1c77')

set_box_color(bp_ts, '#99d8c9')

set_box_color(bp_tf, '#2ca25f')

set_box_color(bp_is, '#a6bddb')

set_box_color(bp_if, '#2b8cbe')

plt.plot([], c='#c994c7', label='pop succeed')

plt.plot([], c='#dd1c77', label='pop fail')

plt.plot([], c='#99d8c9', label='try_succeed')

plt.plot([], c='#2ca25f', label='try fail')

plt.plot([], c='#a6bddb', label='if succeed')

plt.plot([], c='#2b8cbe', label='if fail')

plt.legend()

ticks = ['', 'pop succeed', 'pop fail', 'try_succeed', 'try fail', 'if succeed','if fail']

plt.xticks(range(0, len(ticks)*2, 2), ticks)

plt.xlim(0, len(ticks)*2)

plt.ylim(0, 1)

plt.tight_layout()

plt.title('Comparing pop, try and if for deleting dict keys')

plt.show()[*] pop is the built-in which can handle the key not found problem, try is the EAFP approach and if is the LBYL approach, and many people (including the glossary) are of the opinion that LBYL is not pythonic. The example of another thread potentially deleting the key inbetween the first thread doing a successful key-test and then trying to access that key only to have it gone and throw an exception anyway is valid, but also says that locks fix that issue. It's only really an issue in Python because the GIL does even round-robin thread switching and can break code execution paths at strange places (like in the middle of an if/elif/else block). For real concurrency or parallelism you wouldn't be using threads (in most cases). The other argument relates to duck-typing where you aren't supposed to be doing type() checks (LBYL style) but then goes on to say you'd be doing hasattr() checks or use Abstract Base Classes and then do isinstance() or issubclass() tests, and how would you? Surely with LBYL style, so in fact there is no basis in the argument that EAFP is better than LBYL. There are situations to use either, and situations where one is preferred. I tend to think using the built-in is ideal because they are simpler, and you benefit from implementation improvements in the language without having to change your code. For example using range() in python 2 was fine, but now in python 3 (like so many other things) it's a generator so you get awesome pipeline behaviour and reduced memory usage. Sure there was xrange but my point is about benefiting from python enhancements without re-writing code.

[^] as much as possible, in reality you can't eliminate all instrument interference from measurement, and there is no such thing as objective truth, but I digress. Philosophy of science is a deep topic, and completely falsifiable, so maybe I'm wrong and there is objective truth and maybe there is a god (the atheists aren't very scientific to be honest, it's hardly a falsifiable perspective to hold).

Well, I didn't go into nearly the same level of detail, but I did feel like putting the results into a table allowed for easier consideration of what the data could tell us.

I should probably note that the level of precision in these tables is arbitrary, and we can all agree that a single run on my random pc isn't sufficient to warrant these precision levels. I chose to round to these points in case anyone else had a larger data set to compare this small sample against.

Table 1: execution time (in milliseconds) rounded to to the nearest microsecond

| case | succeed | fail |

|---|---|---|

| pop | 45.673 ms | 49.161 ms |

| try | 56.651 ms | 72.481 ms |

| if | 58.166 ms | 42.423 ms |

Table 2: execution times relative to the slowest test case

| case | succeed | fail |

|---|---|---|

| pop | 63.014% | 67.8% |

| try | 78.16% | 100.0% |

| if | 80.25% | 58.5% |

Table 2 data has been rounded to the nearest tenth of a percent (that's about +-70 microseconds for the data from my pc)

Python3

Here are some of my results on my PC workstation's WSL with python3: