Search keywords in a text file

Hadoop (HDFS), Apache Spark, gcc

C, shell script, Python

- Old-school / standard way

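#include <stdio.h>
#include <string.h>

/* Count non-overlapping occurrences of word in the file at file_path.
   Returns -1 if the file cannot be opened. */
int wc(const char *file_path, const char *word){
    FILE *fp;
    int count = 0;
    int ch;
    long i, len;

    if(NULL==(fp=fopen(file_path, "rb")))   /* binary mode: relative fseek is well-defined */
        return -1;
    len = (long)strlen(word);
    if(len == 0){ fclose(fp); return 0; }
    for(;;){
        if(EOF==(ch=fgetc(fp))) break;
        if((char)ch != word[0]) continue;    /* not a candidate start */
        for(i=1;i<len;++i){
            if(EOF==(ch = fgetc(fp))) goto end;
            if((char)ch != word[i]){
                /* mismatch: rewind to the character right after the candidate start */
                fseek(fp, -i, SEEK_CUR);
                goto next;
            }
        }
        ++count;                             /* full match; keep scanning after it */
        next: ;
    }
end:
    fclose(fp);
    return count;
}

int main(void){ /* if input.txt contains "testestest", this prints 2 */
    char key[] = "test"; /* the string to search for */
    int wordcount = wc("input.txt", key);
    if(wordcount < 0){ perror("input.txt"); return 1; }
    printf("%d\n", wordcount);
    return 0;
}

$ scp wc.c user@serverip:
$ scp input.txt user@serverip:
$ ssh user@serverip
$ gcc -o wc wc.c
$ ./wc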

xxxxx

- Baseline
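As a reference point for the baseline, the scan in the C `wc` function can be ported almost line for line to Python. This is a sketch, not the author's code: `count_keyword` and `count_keyword_in_file` are hypothetical names, and it assumes the file fits in memory and is UTF-8 text. Like the C loop, it counts non-overlapping matches. After a full match it resumes past the word, and after a failed candidate it resumes one character after the candidate start.

```python
def count_keyword(text: str, word: str) -> int:
    """Count non-overlapping occurrences of `word` in `text` (mirrors the C scan)."""
    if not word:
        raise ValueError("word must be non-empty")
    count = 0
    i = 0
    n, m = len(text), len(word)
    while i <= n - m:
        if text[i:i + m] == word:
            count += 1
            i += m   # full match: continue after the word (non-overlapping)
        else:
            i += 1   # mismatch: retry from the next character
    return count


def count_keyword_in_file(path: str, word: str) -> int:
    """Hypothetical helper: read the whole file and count `word`; -1 if it cannot be opened."""
    try:
        with open(path, encoding="utf-8") as f:
            return count_keyword(f.read(), word)
    except OSError:
        return -1


if __name__ == "__main__":
    print(count_keyword("testestest", "test"))  # same input as the C example
    print("testestest".count("test"))           # built-in str.count, for comparison
```

Both calls give the same count, because `str.count` also counts non-overlapping occurrences. The baseline below relies on that built-in.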

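# Count the occurrences of a string in a file
def count_string_occurrence(path="result_file.txt", string="test"):
    with open(path) as f:        # the file is closed automatically
        contents = f.read()
    # str.count counts non-overlapping occurrences, like the C version
    n = contents.count(string)
    print("Number of '" + string + "' in file:", n)
    return n

- Parallel implementation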
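The Spark jobs that follow distribute a split / count / sum pattern across a cluster. On one machine, the same pattern can be sketched with the standard library. This is an illustration under assumptions: `count_in_chunk`, `parallel_count`, and the default of 4 chunks are hypothetical. The sketch counts occurrences per line, whereas the Spark `filter(...).count()` below counts matching lines. Threads keep the sketch simple, but in CPython the GIL limits CPU-bound speedup. `ProcessPoolExecutor` offers the same `map` interface for real parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def count_in_chunk(lines, keyword):
    """Map step (hypothetical helper): occurrences of `keyword` in one chunk of lines."""
    return sum(line.count(keyword) for line in lines)


def parallel_count(lines, keyword, n_chunks=4):
    """Split `lines` into chunks, count each chunk concurrently, then sum the partial counts.

    Splitting on line boundaries is safe for a keyword that contains no newline,
    because such a keyword cannot span two lines.
    """
    if n_chunks < 1:
        raise ValueError("n_chunks must be >= 1")
    size = max(1, -(-len(lines) // n_chunks))  # ceiling division
    chunks = [lines[i:i + size] for i in range(0, len(lines), size)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        partials = pool.map(count_in_chunk, chunks, [keyword] * len(chunks))
        return sum(partials)  # reduce step
```

For example, `parallel_count(open("input.txt").read().splitlines(), "test")` would count every occurrence of "test" in a local copy of the input file.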

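from pyspark import SparkContext, SparkConf

# In the pyspark shell `sc` already exists; in a standalone script create it (app name is arbitrary)
sc = SparkContext.getOrCreate(SparkConf().setAppName("keyword-search"))

file = sc.textFile("hdfs:///user/mark/wiki.txt")
tests = file.filter(lambda line: "test" in line)
tests.cache()  # reused by the actions below, so keep it in memory

# Count the lines that contain "test" (lines, not occurrences)
tests.count()

# Count the "test" lines that also mention "case"
tests.filter(lambda line: "case" in line).count()

# Fetch those lines as a list of strings
tests.filter(lambda line: "case" in line).collect()

from pyspark import SparkContext, SparkConf

sc = SparkContext.getOrCreate(SparkConf().setAppName("word-count"))

file = sc.textFile("hdfs:///user/mark/wiki.txt")
# Word count: split lines into words, pair each word with 1, sum per word
counts = file.flatMap(lambda line: line.split(" ")) \
             .map(lambda word: (word, 1)) \
             .reduceByKey(lambda a, b: a + b)
# Writes a directory of part files named wc.txt, not a single file
counts.saveAsTextFile("hdfs:///user/mark/wc.txt")

Python Notebook System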

IPython Notebook, matplotlib

Markdown, Python

- styling

- image

- code

- equation

- link

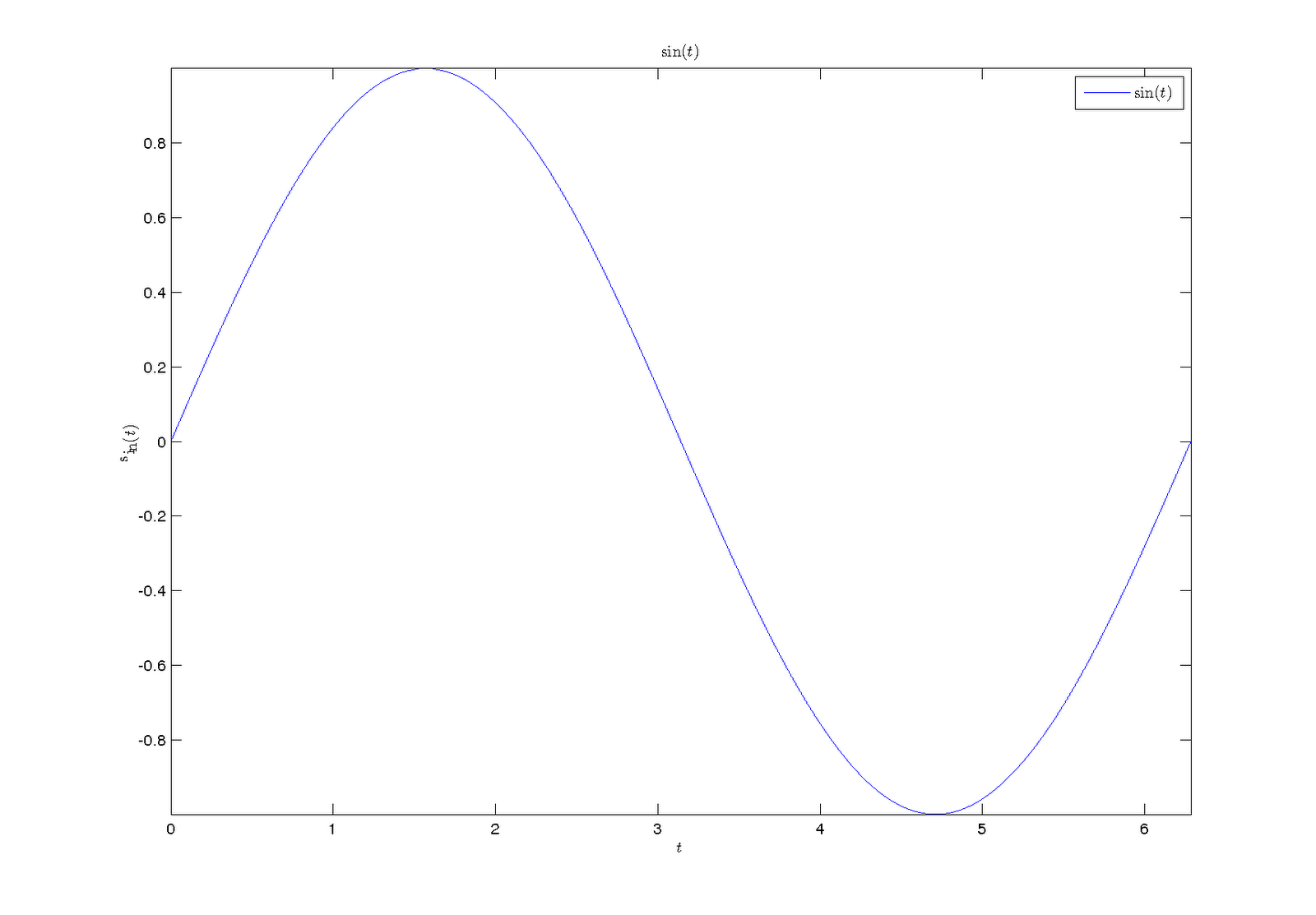

Task B.II: Execute code in the notebook and plot a sine wave

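import numpy as np
import matplotlib.pyplot as plt

x = np.linspace(0, 2 * np.pi, 100)  # 100 points over one period (0 to 2*pi)
y = np.sin(x)                       # sine wave, as the task asks

fig = plt.figure()
axes = fig.add_axes([0.1, 0.1, 0.8, 0.8])  # left, bottom, width, height (range 0 to 1)
axes.plot(x, y, 'r')
axes.set_xlabel('x')
axes.set_ylabel('sin(x)')
axes.set_title('Sine wave')
plt.show()  # only needed outside the notebook's inline plotting

- Task: use word_cloud to generate a word-cloud image.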
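Here, word_cloud presumably refers to the `wordcloud` Python package (an assumption). A word cloud scales each word by how often it occurs, so the core preprocessing step is counting words. The following is a standard-library sketch of that step. `word_frequencies` and the example stopword set are hypothetical.

```python
import re
from collections import Counter


def word_frequencies(text, stopwords=frozenset(), top_n=None):
    """Hypothetical helper: lower-case, split into words, drop stopwords, count.

    Returns a dict ordered from most to least frequent; `top_n=None` keeps all words.
    """
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in stopwords)
    return dict(counts.most_common(top_n))


if __name__ == "__main__":
    print(word_frequencies("The test, the TEST; a case.", stopwords={"the", "a"}))
```

The resulting dict can be passed to `WordCloud().generate_from_frequencies(freqs)` and the image saved with `.to_file("wordcloud.png")` (the file name is arbitrary). Alternatively, `WordCloud().generate(text)` does its own tokenizing and stopword removal.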
