Fine-tuning llama 2 7B to analyze financial reports and write “funny” tweets

Sharing some insights from a recent weekend fun project where I tried to analyze and summarize financial reports using a fine-tuned LLM.

My initial goal was to train a model to summarize the annual/quarterly financial reports of public companies (aka 10-K / 10-Q). But, realizing that straightforward financial summaries are boring, I thought of tuning LLM to generate sarcastic summaries of these reports. Something short I could post on Twitter.

Data exploration and dataset prep

Working with financial reports ain’t easy. You download them in html format, they’re pretty dense with ~100 pages filled with tables that can be tough to parse, many legal disclaimers and various useless info. I knew I wanted to get 3-5 funny tweets as an output from a report. But I spent quite some time figuring out what data to actually input to get the result - a page, a section, a table?

To find the right format, I started experimenting with ChatGPT manually feeding it different data as an input and asking to produce fun tweets. I tried:

- Single report pages - weren’t useful as they didn’t contain much of useful info.

- Entire report sections - hit the token limit or confused the model.

- Financial tables - alone they missed too much context.

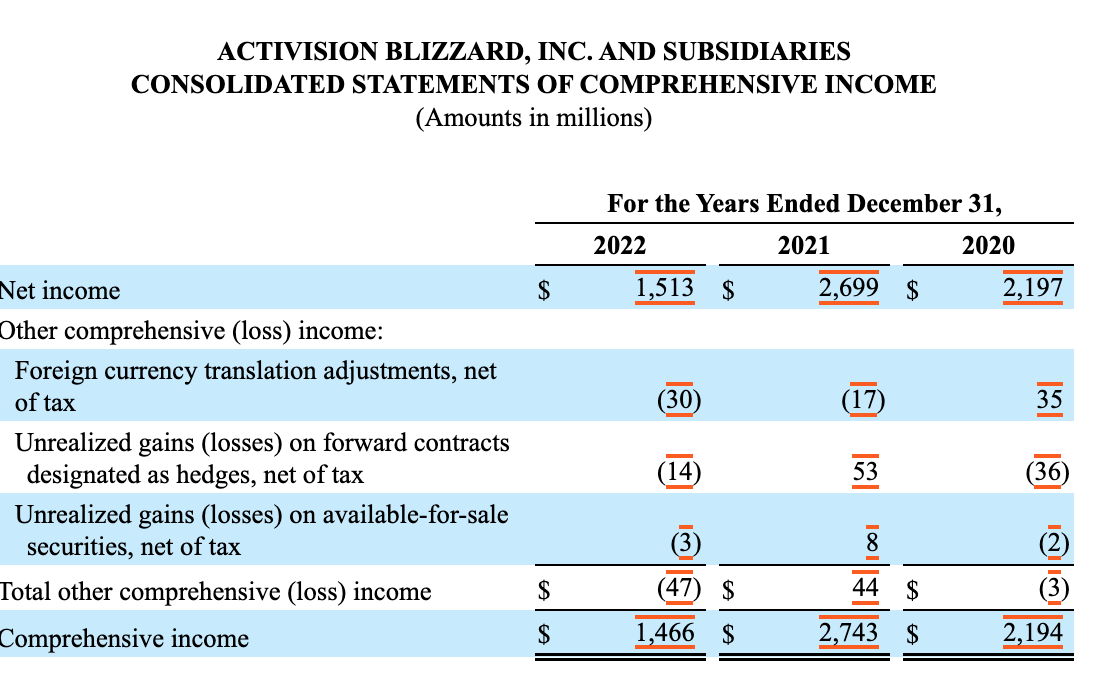

After experimenting I started realizing I needed to process those reports first. So I decided (a) to focus on only one single section of the report called “Management discussion & analysis” that contained most of the important info and ignore the rest; (b) to summarize this section and feed summaries to the model instead of just raw text/tables. I did that and I ended up with 3-5 summaries per report. After feeding those to the model I was satisfied with the result as gpt-4 was spitting out some decent tweets that I was comfortable using in my training dataset:

More dataset prep

Now I had to sit down and actually build a pipeline to prepare dataset for fine-tuning. Here is what it looked like:

- Extract and parse the “Management Discussion and Analysis” section from the reports. a) Automatically download financial reports from SEC EDGAR. I used a cool API called sec-api. b) I split it in ~6k token chunks so ChatGPT can summarize it. Stole some recipes from openai-cookbook.

- Summarize each chunks using gpt-3.5-turbo-16k API.

- Generate 5 funny tweets based on each summary with gpt-4 API.

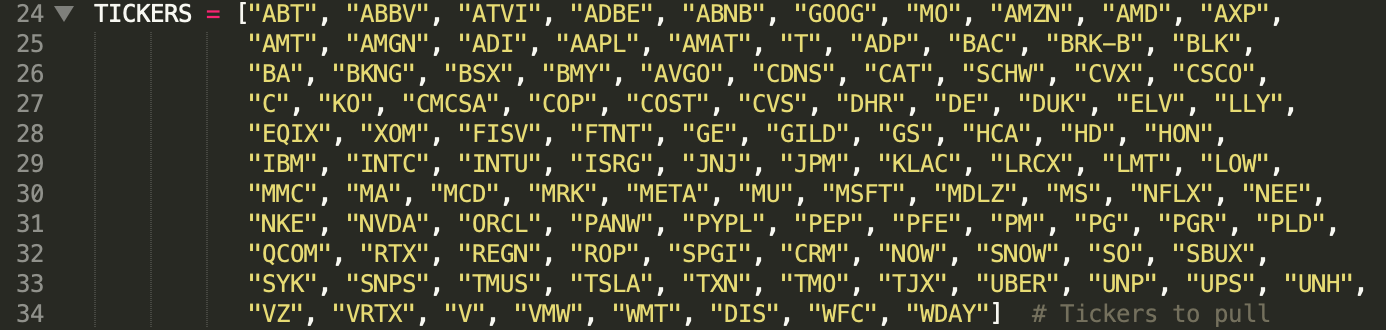

I ran this pipeline to pull financial reports of ~50 public companies over ~3 years. I ended up with a dataset of ~1k rows. To do this with openai’s APIs was not cheap but this was the fastest way and tbh I liked gpt-4’s humor.

Model training and even more dataset prep

Once dataset was ready, I jumped into training right away. I opted for the llama2 7b model with LoRA for simplicity and speed. To fine-tune I used llmhome.io that my folks created so I don’t ask them stupid questions about what version of torch should I install on the gpu machine so it works.

Initial results were not great. I tried different hyperparameters but still couldn’t get good enough output. In the situations like this, I tend to blame a model but deep inside I know the truth — my dataset is crap 💩

Once I looked deeper at a dataset I had to admit that was true. It was pretty weak — it had bunch of irrelevant summaries, some weird tweets created by ChatGPT, etc. I tried to write tools to clean it up automatically but they didn’t work well, so I had to sit down and clean it up manually again. Tbh, labelling and cleaning data is some sort of a full-distance Ironman type of challenge.

I was able cut the dataset from ~1,000 down to ~400 lines. It still felt a bit raw but much better than the initial one. Two other tweaks I made: (a) wrap my summaries into a prompt, (b) generate 3 instead of 5 tweets from a single summary.

I did couple more fine-tuning runs. The result that I got was better — now my new model was actually cracking some awkward jokes! 🥸 Also, it was doing pretty well interpreting the data from the summaries — I found it really cool for a 7b lora trained on 400 datapoints. It was still slightly worse compared to gpt-3.5 though and pretty far from the quality of gpt-4.

Takeaways

Will try this dataset with llama2-70b and mistral-7b and will report back. But in the meantime:

- Bad news: data prep is everything; it’s where I spent 95% of my time.

- Good news: even a small 400-line, but (relatively) high-quality, dataset can yield you satisfactory results.

- Something that I didn't realize is that 7b can be fine-tuned on pretty complex and long data inputs of 2-3k+ symbols; it can also learn interpret this data to output something not very simple as a joke.

- Gpt-4 can create really helpful datasets; but it is expensive and you have to clean them up manually as I haven’t found any good tools.

- llama2 7b fine-tuned with LoRA is not great but sometimes does okay.

- Hyperparameters that worked best: 16 batch, 8 epochs, lr 1e-4; haven't tried playing with LoRa's parameters.