Gist: mtarral/d99ce5524cfcfb5290eaa05702c3e8e7

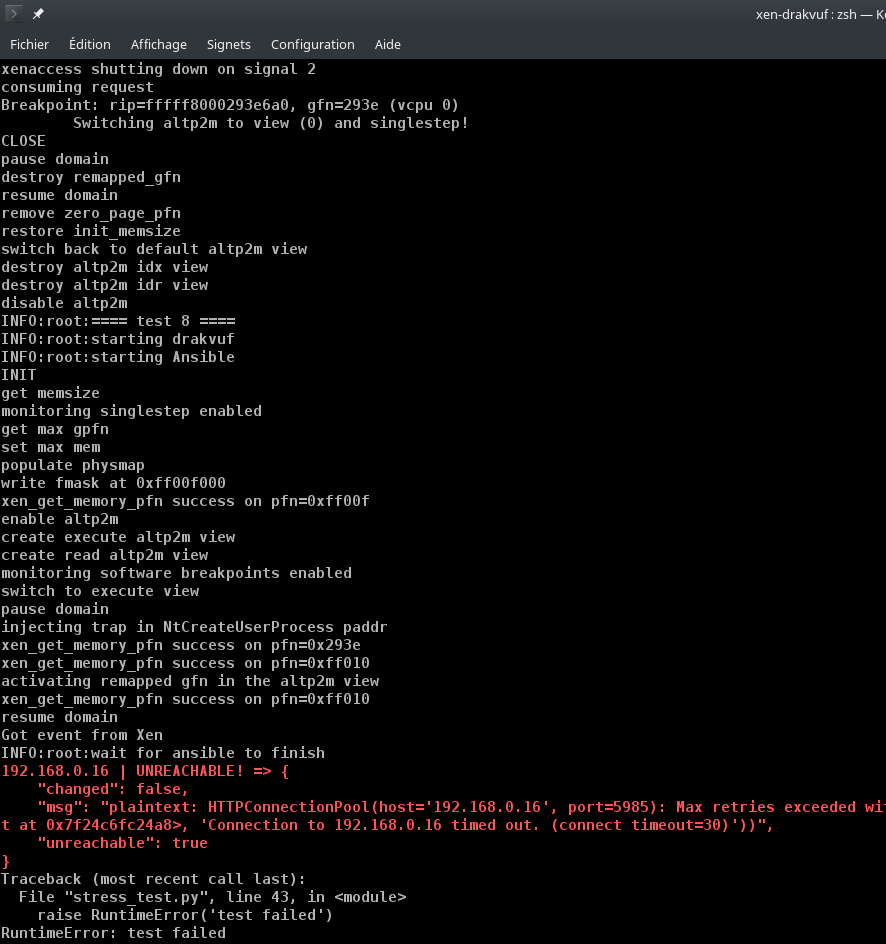

Xen test using DRAKVUF's stealth breakpoint implementation, leading to a guest freeze or BSOD (critical data corruption)
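As a reading aid for the C file in this gist: `drakvuf_inject_trap` copies the page holding the target address into a freshly populated frame, remaps it in the execute altp2m view, and writes the `0xCC` opcode at the same page offset in that copy. The address arithmetic can be sketched in Python as follows. The helper names and the `remapped_gfn` value are illustrative assumptions, not part of the original code; only the 4 KiB page size, the `VMI_BIT_MASK` definition, and the `0x29826a0` address come from the source.

```python
PAGE_SHIFT = 12  # the C code hard-codes 4 KiB pages


def bit_mask(a, b):
    """Python equivalent of VMI_BIT_MASK(a, b): bits a..b set, inclusive."""
    return (((1 << 64) - 1) >> (63 - b)) & ~((1 << a) - 1)


def split_paddr(paddr):
    """Split a guest physical address into (gfn, offset inside the page)."""
    return paddr >> PAGE_SHIFT, paddr & bit_mask(0, PAGE_SHIFT - 1)


def shadow_paddr(paddr, remapped_gfn):
    """Address of the same byte inside the shadow copy of the page."""
    _, offset = split_paddr(paddr)
    return (remapped_gfn << PAGE_SHIFT) + offset


if __name__ == "__main__":
    paddr = 0x29826a0        # NtCreateUserProcess address used in the C file
    remapped_gfn = 0x100001  # hypothetical shadow frame, chosen for illustration
    gfn, offset = split_paddr(paddr)
    print(hex(gfn), hex(offset), hex(shadow_paddr(paddr, remapped_gfn)))
```

In the C code the shadow frame number is not fixed: it is taken from `++max_gpfn` at run time, so the value above only illustrates the computation.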


| #!/usr/bin/env python3 | |

| import sys | |

| import signal | |

| import logging | |

| import subprocess | |

| import re | |

| logging.basicConfig(level=logging.INFO) | |

| # usage: <script> <domain name> <guest ip> <number of repetitions> | |

| domain = sys.argv[1] | |

| ip = sys.argv[2] | |

| repeat = int(sys.argv[3]) | |

| def getdomid(domname): | |

|     # look up the domain ID by name in the output of `xl list` | |

|     cmdline = ['xl', 'list'] | |

|     proc = subprocess.run(cmdline, stdout=subprocess.PIPE) | |

|     for line in proc.stdout.decode().splitlines(): | |

|         m = re.match(r'(?P<dom_name>\S+)\s+(?P<domid>\S+).*', line) | |

|         # skip lines that do not match instead of crashing on None | |

|         if m and m.group('dom_name') == domname: | |

|             return int(m.group('domid')) | |

|     raise RuntimeError('domain {} not found in xl list output'.format(domname)) | |

| for i in range(1, repeat+1): | |

|     logging.info("==== test %d ====", i) | |

|     logging.info("starting drakvuf") | |

|     domid = getdomid(domain) | |

|     drak_cmdline = ["./xen-drakvuf", str(domid)] | |

|     drak_proc = subprocess.Popen(drak_cmdline) | |

|     # run ansible | |

|     logging.info("starting Ansible") | |

|     ans_cmdline = ['/home/mtarral/projects/venv/bin/ansible', '*', '--inventory', '{},'.format(ip), '--connection', | |

|                    'winrm', '--extra-vars', 'ansible_user=vagrant', '--extra-vars', | |

|                    'ansible_password=vagrant', '--extra-vars', 'ansible_winrm_scheme=http', '--extra-vars', | |

|                    'ansible_port=5985', '--module-name', 'win_command', '--args', | |

|                    'C:\\Windows\\System32\\reg.exe /?'] | |

|     ans_proc = subprocess.Popen(ans_cmdline) | |

|     # wait for ansible to finish | |

|     logging.info("wait for ansible to finish") | |

|     ans_proc.wait() | |

|     if ans_proc.returncode != 0: | |

|         raise RuntimeError('test failed') | |

|     logging.info("stop drakvuf") | |

|     drak_proc.send_signal(signal.SIGINT) | |

|     drak_proc.wait() |
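In the script above, a failed Ansible run raises before `drak_proc` receives `SIGINT`, so `xen-drakvuf` keeps running and its cleanup never executes. If that is not intended (it may be, for post-mortem inspection of the frozen guest), one way to always stop the tool is a small context manager. This is a minimal sketch, not part of the original gist; the name `running` and the timeout value are assumptions.

```python
import signal
import subprocess
from contextlib import contextmanager


@contextmanager
def running(cmdline, stop_signal=signal.SIGINT, timeout=30):
    """Start cmdline and make sure it is stopped when the block exits."""
    proc = subprocess.Popen(cmdline)
    try:
        yield proc
    finally:
        if proc.poll() is None:
            # ask the process to shut down cleanly first
            proc.send_signal(stop_signal)
            try:
                proc.wait(timeout=timeout)
            except subprocess.TimeoutExpired:
                # fall back to SIGKILL if it does not exit in time
                proc.kill()
                proc.wait()
```

With this helper, the loop body could wrap the Ansible call in `with running(drak_cmdline):`, so that raising `RuntimeError('test failed')` would still deliver `SIGINT` to `xen-drakvuf`.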


| /* | |

| * xen-drakvuf.c | |

| * | |

| * Exercises the basic per-page access mechanisms | |

| * | |

| * Copyright (c) 2011 Virtuata, Inc. | |

| * Copyright (c) 2009 by Citrix Systems, Inc. (Patrick Colp), based on | |

| * xenpaging.c | |

| * | |

| * Permission is hereby granted, free of charge, to any person obtaining a copy | |

| * of this software and associated documentation files (the "Software"), to | |

| * deal in the Software without restriction, including without limitation the | |

| * rights to use, copy, modify, merge, publish, distribute, sublicense, and/or | |

| * sell copies of the Software, and to permit persons to whom the Software is | |

| * furnished to do so, subject to the following conditions: | |

| * | |

| * The above copyright notice and this permission notice shall be included in | |

| * all copies or substantial portions of the Software. | |

| * | |

| * THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR | |

| * IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, | |

| * FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE | |

| * AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER | |

| * LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING | |

| * FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER | |

| * DEALINGS IN THE SOFTWARE. | |

| */ | |

| /* | |

| * parts of this code have been taken from LibVMI and the Drakvuf project | |

| * The licenses can be found with the links below: | |

| * - libvmi: https://github.com/libvmi/libvmi/COPYING | |

| * - drakvuf: https://github.com/tklengyel/drakvuf/COPYING | |

| */ | |

| #define XC_WANT_COMPAT_EVTCHN_API 1 | |

| #define XC_WANT_COMPAT_MAP_FOREIGN_API 1 | |

| #include <errno.h> | |

| #include <inttypes.h> | |

| #include <stdlib.h> | |

| #include <stdarg.h> | |

| #include <stdbool.h> | |

| #include <string.h> | |

| #include <time.h> | |

| #include <signal.h> | |

| #include <unistd.h> | |

| #include <sys/mman.h> | |

| #include <poll.h> | |

| #include <libxl.h> | |

| #include <libxl_utils.h> | |

| #include <xenctrl.h> | |

| #include <xen/vm_event.h> | |

| #define LOG(a, b...) printf(a "\n", ## b) | |

| #define DPRINTF(a, b...) fprintf(stderr, a, ## b) | |

| #define ERROR(a, b...) fprintf(stderr, a "\n", ## b) | |

| #define PERROR(a, b...) fprintf(stderr, a ": %s\n", ## b, strerror(errno)) | |

| #define VMI_BIT_MASK(a, b) (((unsigned long long) -1 >> (63 - (b))) & ~((1ULL << (a)) - 1)) | |

| #define VMI_PS_4KB 0x1000ULL | |

| typedef struct remapped_gfn | |

| { | |

| xen_pfn_t o; | |

| xen_pfn_t r; | |

| bool active; | |

| } remapped_gfn_t; | |

| typedef struct xen_interface | |

| { | |

| xc_interface* xc; | |

| libxl_ctx* xl_ctx; | |

| xentoollog_logger* xl_logger; | |

| xc_evtchn* evtchn; // the Xen event channel | |

| int evtchn_fd; // its FD | |

| evtchn_port_t evtchn_port; | |

| void *ring_page; | |

| vm_event_back_ring_t back_ring; | |

| int port; | |

| } xen_interface_t; | |

| typedef struct drakvuf | |

| { | |

| xen_interface_t* xen; | |

| domid_t domain_id; | |

| xen_pfn_t max_gpfn; | |

| xen_pfn_t zero_page_gfn; | |

| uint64_t init_memsize; | |

| uint16_t altp2m_idx; | |

| uint16_t altp2m_idr; | |

| uint32_t page_shift; | |

| uint32_t page_size; | |

| uint32_t num_vcpus; | |

| remapped_gfn_t* remapped_list[5]; | |

| } drakvuf_t; | |

| static int interrupted; | |

| bool evtchn_bind = 0, evtchn_open = 0, mem_access_enable = 0; | |

| static void close_handler(int sig) | |

| { | |

| interrupted = sig; | |

| } | |

| static void usage(char* progname) | |

| { | |

| fprintf(stderr, "Usage: %s <domain_id>\n", progname); | |

| } | |

| static void xen_pause_vm(xc_interface* xc, domid_t domid) | |

| { | |

| xc_dominfo_t info = {0}; | |

| if (-1 == xc_domain_getinfo(xc, domid, 1, &info)) | |

| { | |

| ERROR("fail to get domain info !"); | |

| exit(4); | |

| } | |

| // the domain shouldn't be paused anyway | |

| // we call pause/resume synchronously | |

| // if (info.paused) | |

| // return; | |

| LOG("pause domain"); | |

| if (-1 == xc_domain_pause(xc, domid)) | |

| { | |

| ERROR("fail to pause domain !!"); | |

| exit(3); | |

| } | |

| } | |

| static void xen_resume_vm(xc_interface* xc, domid_t domid) | |

| { | |

| LOG("resume domain"); | |

| if (-1 == xc_domain_unpause(xc, domid)) | |

| { | |

| ERROR("fail to resume domain !!"); | |

| exit(2); | |

| } | |

| } | |

| static void put_response(xen_interface_t* xen, vm_event_response_t *rsp) | |

| { | |

| vm_event_back_ring_t *back_ring; | |

| RING_IDX rsp_prod; | |

| back_ring = &xen->back_ring; | |

| rsp_prod = back_ring->rsp_prod_pvt; | |

| /* Copy response */ | |

| memcpy(RING_GET_RESPONSE(back_ring, rsp_prod), rsp, sizeof(*rsp)); | |

| rsp_prod++; | |

| /* Update ring */ | |

| back_ring->rsp_prod_pvt = rsp_prod; | |

| RING_PUSH_RESPONSES(back_ring); | |

| } | |

| static void get_request(xen_interface_t* xen, vm_event_request_t *req) | |

| { | |

| vm_event_back_ring_t *back_ring; | |

| RING_IDX req_cons; | |

| back_ring = &xen->back_ring; | |

| req_cons = back_ring->req_cons; | |

| /* Copy request */ | |

| memcpy(req, RING_GET_REQUEST(back_ring, req_cons), sizeof(*req)); | |

| req_cons++; | |

| /* Update ring */ | |

| back_ring->req_cons = req_cons; | |

| back_ring->sring->req_event = req_cons + 1; | |

| } | |

| static int xc_wait_for_event_or_timeout(xen_interface_t* xen, unsigned long ms) | |

| { | |

| xc_evtchn* xce = xen->evtchn; | |

| struct pollfd fd = { .fd = xen->evtchn_fd, .events = POLLIN | POLLERR }; | |

| int port; | |

| int rc; | |

| rc = poll(&fd, 1, ms); | |

| if ( rc == -1 ) | |

| { | |

| if (errno == EINTR) | |

| return 0; | |

| ERROR("Poll exited with an error"); | |

| goto err; | |

| } | |

| if ( rc == 1 ) | |

| { | |

| port = xc_evtchn_pending(xce); | |

| if ( port == -1 ) | |

| { | |

| ERROR("Failed to read port from event channel"); | |

| goto err; | |

| } | |

| rc = xc_evtchn_unmask(xce, port); | |

| if ( rc != 0 ) | |

| { | |

| ERROR("Failed to unmask event channel port"); | |

| goto err; | |

| } | |

| } | |

| else | |

| port = -1; | |

| return port; | |

| err: | |

| return -errno; | |

| } | |

| // stolen from libvmi | |

| static void * | |

| xen_get_memory_pfn( | |

| drakvuf_t *drakvuf, | |

| uint64_t pfn, | |

| int prot) | |

| { | |

| void *memory = xc_map_foreign_range(drakvuf->xen->xc, | |

| drakvuf->domain_id, | |

| XC_PAGE_SIZE, | |

| prot, | |

| (unsigned long) pfn); | |

| if (MAP_FAILED == memory || NULL == memory) { | |

| ERROR("xen_get_memory_pfn failed on pfn=0x%"PRIx64"", pfn); | |

| return NULL; | |

| } else { | |

| // LOG("xen_get_memory_pfn success on pfn=0x%"PRIx64"", pfn); | |

| } | |

| return memory; | |

| } | |

| static void | |

| xen_release_memory( | |

| void *memory, | |

| size_t length) | |

| { | |

| munmap(memory, length); | |

| } | |

| static bool | |

| xen_put_memory( | |

| drakvuf_t *drakvuf, | |

| uint64_t paddr, | |

| void *buf, | |

| uint32_t count) | |

| { | |

| unsigned char *memory = NULL; | |

| uint64_t phys_address = 0; | |

| uint64_t pfn = 0; | |

| uint64_t offset = 0; | |

| size_t buf_offset = 0; | |

| while (count > 0) { | |

| size_t write_len = 0; | |

| /* access the memory */ | |

| phys_address = paddr + buf_offset; | |

| pfn = phys_address >> drakvuf->page_shift; | |

| offset = (drakvuf->page_size - 1) & phys_address; | |

| memory = xen_get_memory_pfn(drakvuf, pfn, PROT_WRITE); | |

| if (NULL == memory) { | |

| return false; | |

| } | |

| /* determine how much we can write */ | |

| if ((offset + count) > drakvuf->page_size) { | |

| write_len = drakvuf->page_size - offset; | |

| } else { | |

| write_len = count; | |

| } | |

| /* do the write */ | |

| memcpy(memory + offset, ((char *) buf) + buf_offset, write_len); | |

| /* set variables for next loop */ | |

| count -= write_len; | |

| buf_offset += write_len; | |

| xen_release_memory(memory, drakvuf->page_size); | |

| } | |

| return true; | |

| } | |

| static bool | |

| vmi_write_pa( | |

| drakvuf_t* drakvuf, | |

| uint64_t addr, | |

| void *buf, | |

| size_t count) | |

| { | |

| bool ret = false; | |

| size_t buf_offset = 0; | |

| uint64_t paddr = 0; | |

| uint64_t start_addr = addr; | |

| uint64_t offset = 0; | |

| while (count > 0) { | |

| size_t write_len = 0; | |

| paddr = start_addr + buf_offset; | |

| /* determine how much we can write to this page */ | |

| offset = (drakvuf->page_size - 1) & paddr; | |

| if ((offset + count) > drakvuf->page_size) { | |

| write_len = drakvuf->page_size - offset; | |

| } else { | |

| write_len = count; | |

| } | |

| /* do the write */ | |

| if (!xen_put_memory(drakvuf, paddr, | |

| ((char *) buf + (uint64_t) buf_offset), | |

| write_len)) { | |

| goto done; | |

| } | |

| /* set variables for next loop */ | |

| count -= write_len; | |

| buf_offset += write_len; | |

| } | |

| ret = true; | |

| done: | |

| return ret; | |

| } | |

| static void vmi_read_pa(drakvuf_t *drakvuf, uint64_t paddr, uint8_t* buf) | |

| { | |

| unsigned char* memory = NULL; | |

| memory = xen_get_memory_pfn(drakvuf, paddr >> 12, PROT_READ); | |

| memcpy(buf, memory, VMI_PS_4KB); | |

| xen_release_memory(memory, drakvuf->page_size); | |

| } | |

| static void xen_free_interface(xen_interface_t *xen, domid_t domain_id) | |

| { | |

| if (xen) | |

| { | |

| if (xen->ring_page) | |

| { | |

| munmap(xen->ring_page, getpagesize()); | |

| xen->ring_page = NULL; | |

| } | |

| xc_monitor_disable(xen->xc, domain_id); | |

| if (xen->port) | |

| { | |

| xc_evtchn_unbind(xen->evtchn, xen->port); | |

| xen->port = 0; | |

| } | |

| if (xen->xl_ctx) | |

| { | |

| libxl_ctx_free(xen->xl_ctx); | |

| xen->xl_ctx = NULL; | |

| } | |

| if (xen->xl_logger) | |

| { | |

| xtl_logger_destroy(xen->xl_logger); | |

| xen->xl_logger = NULL; | |

| } | |

| if (xen->xc) | |

| { | |

| xc_interface_close(xen->xc); | |

| xen->xc = NULL; | |

| } | |

| if (xen->evtchn) | |

| { | |

| xc_evtchn_close(xen->evtchn); | |

| xen->evtchn = NULL; | |

| } | |

| free(xen); | |

| } | |

| } | |

| static bool xen_init_interface(xen_interface_t *xen, domid_t domain_id) | |

| { | |

| int rc = 0; | |

| /* We create an xc interface to test connection to it */ | |

| LOG("xc_interface_open"); | |

| xen->xc = xc_interface_open(0, 0, 0); | |

| if (xen->xc == NULL) | |

| { | |

| ERROR("xc_interface_open() failed!"); | |

| goto err; | |

| } | |

| LOG("create logger"); | |

| xen->xl_logger = (xentoollog_logger*) xtl_createlogger_stdiostream( | |

| stderr, XTL_PROGRESS, 0); | |

| if (!xen->xl_logger) | |

| { | |

| ERROR("Fail to create logger!"); | |

| goto err; | |

| } | |

| LOG("allocating libxl context"); | |

| if (libxl_ctx_alloc(&xen->xl_ctx, LIBXL_VERSION, 0, | |

| xen->xl_logger)) | |

| { | |

| ERROR("libxl_ctx_alloc() failed!"); | |

| goto err; | |

| } | |

| // init ring page | |

| LOG("init ring page"); | |

| xen->ring_page = xc_monitor_enable(xen->xc, domain_id, &xen->evtchn_port); | |

| if (!xen->ring_page) | |

| { | |

| PERROR("fail to enable monitoring"); | |

| goto err; | |

| } | |

| // open event channel | |

| LOG("open event channel"); | |

| xen->evtchn = xc_evtchn_open(NULL, 0); | |

| if (!xen->evtchn) | |

| { | |

| ERROR("xc_evtchn_open() could not build event channel!"); | |

| goto err; | |

| } | |

| xen->evtchn_fd = xc_evtchn_fd(xen->evtchn); | |

| /* Bind event notification */ | |

| LOG("bind event notification"); | |

| rc = xc_evtchn_bind_interdomain(xen->evtchn, | |

| domain_id, | |

| xen->evtchn_port); | |

| if ( rc < 0 ) | |

| { | |

| ERROR("Failed to bind event channel"); | |

| goto err; | |

| } | |

| xen->port = rc; | |

| // initialize ring | |

| LOG("initialize the ring"); | |

| SHARED_RING_INIT((vm_event_sring_t *)xen->ring_page); | |

| BACK_RING_INIT(&xen->back_ring, | |

| (vm_event_sring_t *)xen->ring_page, | |

| XC_PAGE_SIZE); | |

| return true; | |

| err: | |

| xen_free_interface(xen, domain_id); | |

| return false; | |

| } | |

| static void vmi_close(drakvuf_t *drakvuf) | |

| { | |

| remapped_gfn_t* remapped_gfn = NULL; | |

| vm_event_request_t req; | |

| vm_event_response_t rsp; | |

| int rc; | |

| LOG("CLOSE"); | |

| if (!drakvuf->xen->xc) | |

| return; | |

| // xen_pause_vm(drakvuf->xen->xc, drakvuf->domain_id); | |

| for (int i=0; i < 5; i++) | |

| { | |

| remapped_gfn = drakvuf->remapped_list[i]; | |

| if (remapped_gfn) | |

| { | |

| LOG("destroy remapped_gfn"); | |

| if (drakvuf->altp2m_idx) | |

| xc_altp2m_change_gfn(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->altp2m_idx, remapped_gfn->o, ~0); | |

| if (drakvuf->altp2m_idr) | |

| xc_altp2m_change_gfn(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->altp2m_idr, remapped_gfn->r, ~0); | |

| xc_domain_decrease_reservation_exact(drakvuf->xen->xc, drakvuf->domain_id, 1, 0, &remapped_gfn->r); | |

| free(remapped_gfn); | |

| } | |

| } | |

| // xen_resume_vm(drakvuf->xen->xc, drakvuf->domain_id); | |

| // disable monitoring | |

| xc_monitor_singlestep(drakvuf->xen->xc, drakvuf->domain_id, false); | |

| xc_monitor_software_breakpoint(drakvuf->xen->xc, drakvuf->domain_id, false); | |

| // clean the ring now that monitoring is disabled | |

| LOG("cleaning ring: %d", RING_HAS_UNCONSUMED_REQUESTS(&drakvuf->xen->back_ring)); | |

| while ( RING_HAS_UNCONSUMED_REQUESTS(&drakvuf->xen->back_ring) ) | |

| { | |

| get_request(drakvuf->xen, &req); | |

| memset( &rsp, 0, sizeof (rsp) ); | |

| rsp.version = VM_EVENT_INTERFACE_VERSION; | |

| rsp.vcpu_id = req.vcpu_id; | |

| rsp.flags = (req.flags & VM_EVENT_FLAG_VCPU_PAUSED); | |

| rsp.reason = req.reason; | |

| /* Put the response on the ring */ | |

| put_response(drakvuf->xen, &rsp); | |

| /* Tell Xen page is ready */ | |

| rc = xc_evtchn_notify(drakvuf->xen->evtchn, | |

| drakvuf->xen->port); | |

| } | |

| LOG("remove zero_page_pfn"); | |

| if (drakvuf->zero_page_gfn) | |

| xc_domain_decrease_reservation_exact(drakvuf->xen->xc, drakvuf->domain_id, 1, 0, &drakvuf->zero_page_gfn); | |

| LOG("restore init_memsize"); | |

| if (drakvuf->init_memsize) | |

| xc_domain_setmaxmem(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->init_memsize); | |

| LOG("switch back to default altp2m view"); | |

| xc_altp2m_switch_to_view(drakvuf->xen->xc, drakvuf->domain_id, 0); | |

| // destroy altp2m idx view | |

| LOG("destroy altp2m idx view"); | |

| if (drakvuf->altp2m_idx) | |

| xc_altp2m_destroy_view(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->altp2m_idx); | |

| // destroy altp2m idr view | |

| LOG("destroy altp2m idr view"); | |

| if (drakvuf->altp2m_idr) | |

| xc_altp2m_destroy_view(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->altp2m_idr); | |

| // disable altp2m | |

| LOG("disable altp2m"); | |

| xc_altp2m_set_domain_state(drakvuf->xen->xc, drakvuf->domain_id, 0); | |

| LOG("restore mem access"); | |

| xc_set_mem_access(drakvuf->xen->xc, drakvuf->domain_id, XENMEM_access_rwx, ~0ull, 0); | |

| xc_set_mem_access(drakvuf->xen->xc, drakvuf->domain_id, XENMEM_access_rwx, 0, drakvuf->max_gpfn); | |

| xen_free_interface(drakvuf->xen, drakvuf->domain_id); | |

| drakvuf->xen = NULL; | |

| } | |

| static bool vmi_init(drakvuf_t *drakvuf) | |

| { | |

| xc_dominfo_t info = {0}; | |

| uint8_t fmask[VMI_PS_4KB] = {[0 ... VMI_PS_4KB-1] = 0xFF}; | |

| LOG("INIT"); | |

| drakvuf->page_shift = 12; | |

| drakvuf->page_size = 1 << drakvuf->page_shift; | |

| drakvuf->xen = calloc(1, sizeof(xen_interface_t)); | |

| LOG("xen_init_interface"); | |

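| if (!xen_init_interface(drakvuf->xen, drakvuf->domain_id)) | |

| { | |

| ERROR("fail to init xen interface"); | |

| // xen_init_interface() already freed the interface on failure, | |

| // so do not go through vmi_close(), which would dereference it | |

| drakvuf->xen = NULL; | |

| return false; | |

| } | |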

| // get memsize | |

| LOG("get memsize"); | |

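| // xc_domain_getinfo() returns the number of domains fetched, or -1 on error | |

| if (xc_domain_getinfo(drakvuf->xen->xc, drakvuf->domain_id, 1, &info) != 1 || info.domid != drakvuf->domain_id) | |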

| { | |

| ERROR("fail to get memsize"); | |

| goto err; | |

| } | |

| drakvuf->init_memsize = info.max_memkb; | |

| // set num vcpus | |

| drakvuf->num_vcpus = info.max_vcpu_id + 1; | |

| // setup singlestep | |

| LOG("monitoring singlestep enabled"); | |

| if (xc_monitor_singlestep(drakvuf->xen->xc, drakvuf->domain_id, true) < 0) | |

| { | |

| ERROR("fail to setup singlestep"); | |

| goto err; | |

| } | |

| // get max gpfn | |

| LOG("get max gpfn"); | |

| if ( xc_domain_maximum_gpfn(drakvuf->xen->xc, drakvuf->domain_id, &drakvuf->max_gpfn) < 0 ) | |

| { | |

| ERROR("fail to get max gpfn"); | |

| goto err; | |

| } | |

| // set maxmem | |

| LOG("set max mem"); | |

| if ( xc_domain_setmaxmem(drakvuf->xen->xc, drakvuf->domain_id, ~0) < 0) | |

| { | |

| ERROR("Fail to set max mem"); | |

| goto err; | |

| } | |

| drakvuf->zero_page_gfn = ++drakvuf->max_gpfn; | |

| // populate physmap | |

| LOG("populate physmap"); | |

| if (xc_domain_populate_physmap_exact(drakvuf->xen->xc, drakvuf->domain_id, 1, 0, 0, &drakvuf->zero_page_gfn) < 0) | |

| { | |

| ERROR("Fail to populate physmap"); | |

| goto err; | |

| } | |

| // write fmask | |

| LOG("write fmask at 0x%" PRIx64 "", drakvuf->zero_page_gfn << 12); | |

| if (!vmi_write_pa(drakvuf, drakvuf->zero_page_gfn << 12, &fmask, VMI_PS_4KB)) | |

| { | |

| ERROR("fail to write fmask"); | |

| goto err; | |

| } | |

| // enable altp2m | |

| LOG("enable altp2m"); | |

| if ( xc_altp2m_set_domain_state(drakvuf->xen->xc, drakvuf->domain_id, 1) < 0) | |

| { | |

| ERROR("fail to enable altp2m"); | |

| goto err; | |

| } | |

| // create execute view | |

| LOG("create execute altp2m view"); | |

| if (xc_altp2m_create_view(drakvuf->xen->xc, drakvuf->domain_id, (xenmem_access_t)0, &drakvuf->altp2m_idx) < 0) | |

| { | |

| ERROR("fail to create execute view"); | |

| goto err; | |

| } | |

| // create read view | |

| LOG("create read altp2m view"); | |

| if (xc_altp2m_create_view(drakvuf->xen->xc, drakvuf->domain_id, (xenmem_access_t)0, &drakvuf->altp2m_idr) < 0) | |

| { | |

| ERROR("fail to create read view"); | |

| goto err; | |

| } | |

| // monitor breakpoints | |

| LOG("monitoring software breakpoints enabled"); | |

| if (xc_monitor_software_breakpoint(drakvuf->xen->xc, drakvuf->domain_id, true)) | |

| { | |

| ERROR("fail to monitor software breakpoints"); | |

| goto err; | |

| } | |

| // switch to execute view | |

| LOG("switch to execute view"); | |

| if (xc_altp2m_switch_to_view(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->altp2m_idx) < 0) | |

| { | |

| ERROR("fail to switch on execute view"); | |

| goto err; | |

| } | |

| return true; | |

| err: | |

| vmi_close(drakvuf); | |

| return false; | |

| } | |

| // ideas and implementations stolen from drakvuf "vmi.c:inject_trap_pa" function | |

| static bool drakvuf_inject_trap(drakvuf_t *drakvuf, uint64_t paddr, int i) | |

| { | |

| xen_pfn_t current_gfn = paddr >> 12; | |

| remapped_gfn_t* remapped_gfn = NULL; | |

| uint64_t rpa = 0; | |

| uint8_t bp_opcode = 0xcc; | |

| uint8_t backup[VMI_PS_4KB]; | |

| int rc; | |

| remapped_gfn = calloc(1, sizeof(remapped_gfn_t)); | |

| remapped_gfn->o = current_gfn; | |

| remapped_gfn->r = ++(drakvuf->max_gpfn); | |

| // increase physmap | |

| if (xc_domain_populate_physmap_exact(drakvuf->xen->xc, drakvuf->domain_id, 1, 0, 0, &remapped_gfn->r) < 0) | |

| { | |

| ERROR("fail to populate physmap"); | |

| goto err; | |

| } | |

| // read original gfn | |

| vmi_read_pa(drakvuf, current_gfn << 12, backup); | |

| // fill new shadow copy | |

| if (!vmi_write_pa(drakvuf, remapped_gfn->r << 12, backup, VMI_PS_4KB)) | |

| { | |

| ERROR("fail to fill shadow copy"); | |

| goto err; | |

| } | |

| // activate remapped gfn | |

| if (!remapped_gfn->active) | |

| { | |

| LOG("activating remapped gfn in the altp2m view"); | |

| if (xc_altp2m_change_gfn(drakvuf->xen->xc, drakvuf->domain_id, | |

| drakvuf->altp2m_idx, current_gfn, remapped_gfn->r) < 0) | |

| { | |

| ERROR("fail to change gfn"); | |

| goto err; | |

| } | |

| // we don't need the read view for the moment | |

| // if (xc_altp2m_change_gfn(drakvuf->xen->xc, drakvuf->domain_id, | |

| // drakvuf->altp2m_idr, remapped_gfn->r, drakvuf->zero_page_gfn) < 0) | |

| // { | |

| // ERROR("fail to change gfn"); | |

| // goto err; | |

| // } | |

| remapped_gfn->active = true; | |

| } | |

| rpa = (remapped_gfn->r << 12) + (paddr & VMI_BIT_MASK(0,11)); | |

| // write breakpoint | |

| if (!vmi_write_pa(drakvuf, rpa, &bp_opcode, 1)) | |

| { | |

| ERROR("fail to write breakpoint"); | |

| goto err; | |

| } | |

| // protect shadow page with a mem access | |

| LOG("restrict remapped_gfn to --X"); | |

| rc = xc_altp2m_set_mem_access(drakvuf->xen->xc, drakvuf->domain_id, drakvuf->altp2m_idx, current_gfn, XENMEM_access_x); | |

| if (rc) { | |

| ERROR("fail to set memaccess: error %d", rc); | |

| goto err; | |

| } | |

| drakvuf->remapped_list[i] = remapped_gfn; | |

| return true; | |

| err: | |

| if (remapped_gfn) | |

| free(remapped_gfn); | |

| return false; | |

| } | |

| int main(int argc, char *argv[]) | |

| { | |

| struct sigaction act; | |

| drakvuf_t* drakvuf; | |

| vm_event_request_t req; | |

| vm_event_response_t rsp; | |

| char* progname = argv[0]; | |

| int shutting_down = 0; | |

| int rc; | |

| argv++; | |

| argc--; | |

| if (argc != 1) | |

| { | |

| usage(progname); | |

| return -1; | |

| } | |

| drakvuf = calloc(1, sizeof(drakvuf_t)); | |

| drakvuf->domain_id = atoi(argv[0]); | |

| argv++; | |

| argc--; | |

| /* ensure that if we get a signal, we'll do cleanup, then exit */ | |

| act.sa_handler = close_handler; | |

| act.sa_flags = 0; | |

| sigemptyset(&act.sa_mask); | |

| sigaction(SIGHUP, &act, NULL); | |

| sigaction(SIGTERM, &act, NULL); | |

| sigaction(SIGINT, &act, NULL); | |

| sigaction(SIGALRM, &act, NULL); | |

| if (!vmi_init(drakvuf)) | |

| { | |

| ERROR("Fail to init vmi"); | |

| return 1; | |

| } | |

| // xen_pause_vm(drakvuf->xen->xc, drakvuf->domain_id); | |

| LOG("injecting trap in NtCreateUserProcess paddr"); | |

| if (!drakvuf_inject_trap(drakvuf, 0x29826a0, 0)) | |

| { | |

| ERROR("fail to inject trap"); | |

| goto err; | |

| } | |

| LOG("injecting trap in NtTerminateProcess paddr"); | |

| if (!drakvuf_inject_trap(drakvuf, 0x2990d14, 1)) | |

| { | |

| ERROR("fail to inject trap"); | |

| goto err; | |

| } | |

| LOG("injecting trap in NtOpenProcess paddr"); | |

| if (!drakvuf_inject_trap(drakvuf, 0x29a9b70, 2)) | |

| { | |

| ERROR("fail to inject trap"); | |

| goto err; | |

| } | |

| LOG("injecting trap in NtProtectVirtualMemory paddr"); | |

| if (!drakvuf_inject_trap(drakvuf, 0x29f21b0, 3)) | |

| { | |

| ERROR("fail to inject trap"); | |

| goto err; | |

| } | |

| // xen_resume_vm(drakvuf->xen->xc, drakvuf->domain_id); | |

| /* Wait for access */ | |

| for (;;) | |

| { | |

| if ( interrupted ) | |

| { | |

| /* Unregister for every event */ | |

| DPRINTF("xen-drakvuf shutting down on signal %d\n", interrupted); | |

| shutting_down = 1; | |

| break; | |

| } | |

| rc = xc_wait_for_event_or_timeout(drakvuf->xen, 10000); | |

| // LOG("finished polling : %d", rc); | |

| if ( rc < -1 ) | |

| { | |

| ERROR("Error getting event"); | |

| interrupted = -1; | |

| continue; | |

| } | |

| else if ( rc != -1 ) | |

| { | |

| // DPRINTF("Got event from Xen\n"); | |

| } | |

| while ( RING_HAS_UNCONSUMED_REQUESTS(&drakvuf->xen->back_ring) ) | |

| { | |

| //LOG("consuming request"); | |

| get_request(drakvuf->xen, &req); | |

| if ( req.version != VM_EVENT_INTERFACE_VERSION ) | |

| { | |

| ERROR("Error: vm_event interface version mismatch!"); | |

| interrupted = -1; | |

| continue; | |

| } | |

| memset( &rsp, 0, sizeof (rsp) ); | |

| rsp.version = VM_EVENT_INTERFACE_VERSION; | |

| rsp.vcpu_id = req.vcpu_id; | |

| rsp.flags = (req.flags & VM_EVENT_FLAG_VCPU_PAUSED); | |

| rsp.reason = req.reason; | |

| switch (req.reason) { | |

| case VM_EVENT_REASON_SOFTWARE_BREAKPOINT: | |

| // LOG("Breakpoint: rip=%016"PRIx64", gfn=%"PRIx64" (vcpu %d)", | |

| // req.data.regs.x86.rip, | |

| // req.u.software_breakpoint.gfn, | |

| // req.vcpu_id); | |

| // LOG("\tSwitching altp2m to view (%u) and singlestep!", 0); | |

| // enable singlestep | |

| rsp.flags |= (VM_EVENT_FLAG_ALTERNATE_P2M | VM_EVENT_FLAG_TOGGLE_SINGLESTEP); | |

| rsp.altp2m_idx = 0; | |

| break; | |

| case VM_EVENT_REASON_SINGLESTEP: | |

| // LOG("Singlestep: rip=%016"PRIx64", vcpu %d, altp2m %u", | |

| // req.data.regs.x86.rip, | |

| // req.vcpu_id, | |

| // req.altp2m_idx); | |

| // LOG("\tSwitching altp2m to view (%u) and disable singlestep", drakvuf->altp2m_idx); | |

| // disable singlestep | |

| rsp.flags |= (VM_EVENT_FLAG_ALTERNATE_P2M | VM_EVENT_FLAG_TOGGLE_SINGLESTEP); | |

| rsp.altp2m_idx = drakvuf->altp2m_idx; | |

| break; | |

| case VM_EVENT_REASON_MEM_ACCESS: | |

| LOG("PAGE ACCESS: %c%c%c for GFN %"PRIx64" (offset %06" | |

| PRIx64") gla %016"PRIx64" (valid: %c; fault in gpt: %c; fault with gla: %c) (vcpu %u [%c], altp2m view %u)", | |

| (req.u.mem_access.flags & MEM_ACCESS_R) ? 'r' : '-', | |

| (req.u.mem_access.flags & MEM_ACCESS_W) ? 'w' : '-', | |

| (req.u.mem_access.flags & MEM_ACCESS_X) ? 'x' : '-', | |

| req.u.mem_access.gfn, | |

| req.u.mem_access.offset, | |

| req.u.mem_access.gla, | |

| (req.u.mem_access.flags & MEM_ACCESS_GLA_VALID) ? 'y' : 'n', | |

| (req.u.mem_access.flags & MEM_ACCESS_FAULT_IN_GPT) ? 'y' : 'n', | |

| (req.u.mem_access.flags & MEM_ACCESS_FAULT_WITH_GLA) ? 'y': 'n', | |

| req.vcpu_id, | |

| (req.flags & VM_EVENT_FLAG_VCPU_PAUSED) ? 'p' : 'r', | |

| req.altp2m_idx); | |

| LOG("\tPATCHGUARD CHECK: Switching altp2m to view (%u) and singlestep!", 0); | |

| // enable singlestep | |

| rsp.flags |= (VM_EVENT_FLAG_ALTERNATE_P2M | VM_EVENT_FLAG_TOGGLE_SINGLESTEP); | |

| rsp.altp2m_idx = 0; | |

| break; | |

| default: | |

| fprintf(stderr, "UNKNOWN REASON CODE %d\n", req.reason); | |

| } | |

| /* Put the response on the ring */ | |

| put_response(drakvuf->xen, &rsp); | |

| } | |

| /* Tell Xen page is ready */ | |

| rc = xc_evtchn_notify(drakvuf->xen->evtchn, | |

| drakvuf->xen->port); | |

| if ( rc != 0 ) | |

| { | |

| ERROR("Error resuming page"); | |

| shutting_down = 1; | |

| } | |

| if (shutting_down) | |

| break; | |

| } | |

| err: | |

| vmi_close(drakvuf); | |

| return 0; | |

| } |


A screenshot of a failed test run.

The domain ended up in a "frozen" state, so Ansible could not contact the WinRM service and the test failed.
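To tell an unreachable guest from another kind of Ansible failure, the test driver could probe the WinRM port directly before reporting the failure. The following is a minimal sketch under stated assumptions: the function name `winrm_reachable` and the timeout are hypothetical, and port 5985 is taken from the `ansible_port` value used in the script. A failed probe only shows that the TCP port did not answer; it cannot by itself distinguish a frozen guest from a BSOD or a network problem.

```python
import socket


def winrm_reachable(ip, port=5985, timeout=5.0):
    """Return True if a TCP connection to ip:port succeeds within timeout."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        # covers connection refused, unreachable host, and timeouts
        return False
```

For example, calling `winrm_reachable(ip)` when `ans_proc.returncode != 0` and logging the result would record whether the guest was still answering on the network when the test failed.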