I present FastBFT implementation of the consensus algorithm as a part of "CoZ Bounty #1: Alternative Consensus Implementations" program.

Why did I pick FastBFT to participate? I was not familiar with this algorithm before. I expected to see multiple submissions of HoneyBadgerBFT and decided to implement any other BFT algorithm. At the end of the day, NEO community will benefit from having of many consensus implementations in its disposal. Meanwhile, could I state this is the world first open source FastBFT implementation? 😉

Before diving deep into FastBFT I would like to talk about the current consensus algorithm used in NEO network.

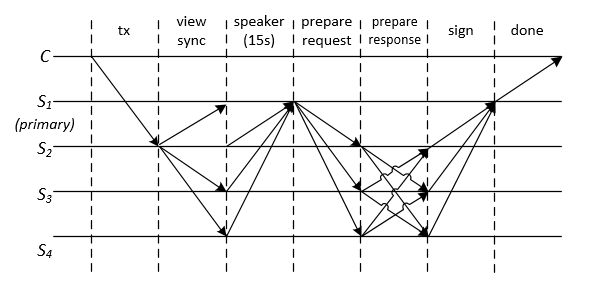

Today NEO network is using Delegated Byzantine Fault Tolerance (dBFT) algorithm. The next steps are executed by replicas to achieve the consensus on incoming transaction blocks (we will use standard terminology instead of NEO one):

- A replica broadcasts a transaction to the entire network.

- The primary replica is identified. Wait 15 seconds to other replicas to follow.

- The primary replica broadcasts the proposed block to secondary replicas.

- The secondary replicas receive the proposal and validate it.

- After receiving

snumber of 'prepareResponse' broadcasts, each second replica reaches the consensus and publishes a signed block. - When the primary replica receives a full block and a new round of consensus begins.

Where:

n: The number of active replicas.

f: The minimum threshold of faulty Consensus Nodes within the system. f = (n - 1) / 3

s: The safe consensus threshold. Below this threshold, the network is exposed to fault. s = ((n - 1) - f)

-

If you are interested in detailed steps description please proceed to the official documentation

-

If you are interested in a practical implementation of the algorithm I wrote a simplified emulation for my research. It can be found here

The main advantages of dBFT are its simplicity and reliability. On the another side, it runs supporting algorithms as part of the consensus and utilizes network bandwidth very aggressively. Please review the next points for clarification:

- dBFT decides on the primary replica during the consensus process introducing 15s delay.

- dBFT creates a lot of network traffic because there are many broadcasts in the communication among replicas.

- dBFT primary replica has to verify signatures the majority of replicas as the last stage of the consensus (I believe the same applicable for all requests to every replica).

Let's see how FastBFT consensus algorithm is solving mentioned issues.

FastBFT is a fast and scalable BFT protocol. It relies on a novel message aggregation technique that combines hardware-based trusted execution environments (TEEs) with lightweight secret sharing primitives. Combining this technique with several other optimizations (i.e., optimistic execution, tree topology and failure detection), FastBFT achieves low latency and high throughput even for large scale networks.

- TEE provides protected memory and isolated execution so that the regular operating system or applications can neither control nor observe the data being stored or processed inside them. In FastBFT TEE is responsible for secret issuing and its verification.

- Network Discovery service helps to organize replicas into a balanced tree rooted at the primary replica to distribute both communication and computation costs. The authors of FastBFT leave this part up to the final implementation.

- Failure Detection mechanism provides multilevel protection. If the primary replica detects faulty active replica it promotes one of passive replicas as the replacement. If the client or any secondary replica suspects the primary replica being faulty, they can run view change process that will end up with the new primary replica. Finally, faulty replicas can take advantage of the failure detection mechanism to trigger a sequence of tree reconstructions (i.e., cause a denial of service DoS attack). After the number of detected non-primary failures exceed a threshold, the primary replica can trigger a transition protocol to fall back to a backup BFT protocol.

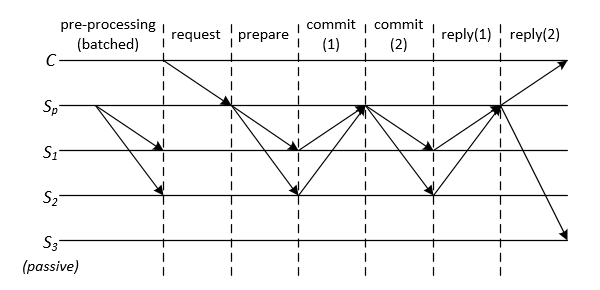

- Pre-processing phase is not a part of the consensus process. The primary replica distributes generated secrets splitting them into shares among replicas. It can be proactive and distribute as much as it anticipates upcoming consensuses. Later, those secret shares will be propagated up through the replica tree and the primary can reconstruct the secret for Commit and Reply phases.

- During Prepare phase the primary replica binds every secret to the block in the consensus.

- After being prepared active replicas in Commit phase reveal their secret shares to its parent replica. Upon receiving all secret shares and restoring the secret, the primary replica commits the block to the blockchain. The last step of this phase is the change propagation among the active replicas.

- In the next Reply phase the active replicas are trying to add the block to their local blockchain copies. If the result of this operation is the same that was reported by the primary replica then the active replicas reveal new secret shares back to the primary replica through their parents. The similar process took place in Commit phase. When the primary replica gets all parts of the secret, the replica can restore it and verify. In case of the success, the primary replica will notify the client that its transaction was included into the blockchain and broadcast changes to all passive replicas for them to update their blockchain versions.

- If you are interested to more academic description please read the whitepaper

- FastBFT separates the consensus algorithm from roles assignment. Indeed, the primary replica and the secondary replicas are already defined before the consensus will be initiated. They stay the same until there is a need to change a topology.

- FastBFT organizes replicas into the tree that can be used to optimized network communication among replicas. Every replica communicates only (normal case) with its parent or its direct children if any. At the top, the primary replica aggregates message exchange from its direct children. The broadcasting is used either the primary replicas to initiate a new phase or by a client and all replicas in special cases as new primary replica election if it is faulty.

- FastBFT uses secret sharing. It means with the support of network topology any replica has to verify only its own child replicas signatures.

I wrote the implementation of FastBFT to see it in practice. Having limited time I was able to implement these protocols:

- Consensus

- View Change

- Replica Topology [Emulated]

- Faulty Primary Replica [Partially]

- Faulty Replica Detection [Partially]

- Faulty Replica Topology

There are next main defined roles:

- Client

- Primary Replica

- Replica

The client (Client class) only generates transactions (GenerateTransaction method) and sends them as a message (TransactionMessage class) to the primary replica (primaryReplica.SendTransaction(transaction) line). Here a transaction is a random number and should contain meaningful payload in real implementation. After the client sent the transaction it waits for either confirmation or timeout. In case of timeout client broadcasts its among replicas to initiate the primary replica change.

The primary replica (PrimaryReplica class) when it is run (Run methods) syncs all replicas building the topology (ReplicaTopology.Discover(this, activeReplicas) line) and distributing view keys (SyncReplicas methods) among them. To get initial view keys the primary replica requests its TEE component (Tee class and BePrimary method). The active replicas will use assigned view keys to decrypt their share of the secret.

After described bootstrapping the primary replica is ready to accumulate transactions from clients into blocks (TransactionHandler class) and to spin up the consensus workflow (InitiateConsensusProcess method). As the third task it listens for incoming messages from other replicas. In case of getting a secret share (SecretShareMessage class) from a child replica it performs secret reconstruction (PrimarySecretShareHandler class) and either commits a block under the consensus to the blockchain (Commit phase) or replies to the client that the transaction was added to the ledger as part of the block (Reply phase).

Every active replica (Replica class) after running mainly participates in message exchange if it has a view key (NewViewHandler and ViewChangeHandler classes). When it is ordered to start Prepare phase (PrepareHandler class) it begins aggregation secret shares from child replicas and passes them to the parent replica (SecretShareHandler class). At the end of this phase the primary replica adds the block to the blockchain ledger and commands to initiate Reply phase (CommitHandler class). The active replica commits the block as well and responds with a new secret share (SecretShareMessage class).

I wish it was production ready but it is not. My vision how to turn it into real-world implementation includes the next refactoring:

- The client class should make transaction message more meaningful.

- The primary replica class and replica class has to be merged to facilitate the primary replica role reassignment.

- Blockchain class has to be introduced with additional validation on the blocks (double spending problems).

- All fake cryptography Crypto class has to be replaced.

- Cross thread communication has to be switched to network calls.

- TEE functions have to be tested in TEE hardware environment.

- Failure detection mechanism should be implemented.

In best traditions of simulations almost everything is emulated (crypto, network, storage, etc.). The replicas are presented as one or many threads.

Specification:

- Language: C#

- Framework: .Net 4.6.1

- CPU: Intel Core i5-3210M @ 2.50GHz (4 logical cores)

It is a sequential experiment with no delays. The primary node works on a single block. Until the block is added on every active replica the client will not see the approval of a transaction and the consensus on the next block will not start.

| BlockSize | TransactionsPerSecond |

|---|---|

| 100 | 2,382 |

| 1,000 | 28,032 |

| 10,000 | 239,257 |

The conclusion: FastBFT does not care about block size and limitations coming from network and/or storage.

| ActiveReplicasCount | TransactionsPerSecond |

|---|---|

| 4 | 2,382 |

| 8 | 2,054 |

| 12 | 1,680 |

The conclusion: FastBFT decreases transactions per second rate in a linear way.

It is a parallel experiment with no delays ("parallel" branch). The primary node works on a single block until it will be committed to the blockchain. After this the block sync among replica's blockchains and the consensus on a new block starts in parallel.

| BlockSize | TransactionsPerSecond |

|---|---|

| 100 | 10,154 |

| 1,000 | 99,414 |

| 10,000 | 548,693 |

The conclusion: The same as for Experiment 1.A.

| ActiveReplicasCount | TransactionsPerSecond |

|---|---|

| 4 | 10,154 |

| 8 | 6,596 |

| 12 | 6,227 |

The conclusion: The same as for Experiment 1.B.

In my subjective opition, FastBFT based on theoretical basis and artificial tests showed the potential to break 10,000 transactions per second limit. Although, I have some doubts to achieve 100,000 transactions per second threshold without a block size upgrade.