Create, Train, and Deploy a Mental Health (Depression / Suicide Watch) Classification Model as a Service in Vertex AI AutoML with data sourced from a BigQuery dataset.

- A browser, such as Chrome or Firefox.

- A Google Cloud project with billing enabled.

Keep these links handy if you wish to follow along:

- On the project selector page, create a Google Cloud project.

- Enable the BigQuery and Vertex AI APIs.

- Open the Google Cloud Shell.

-

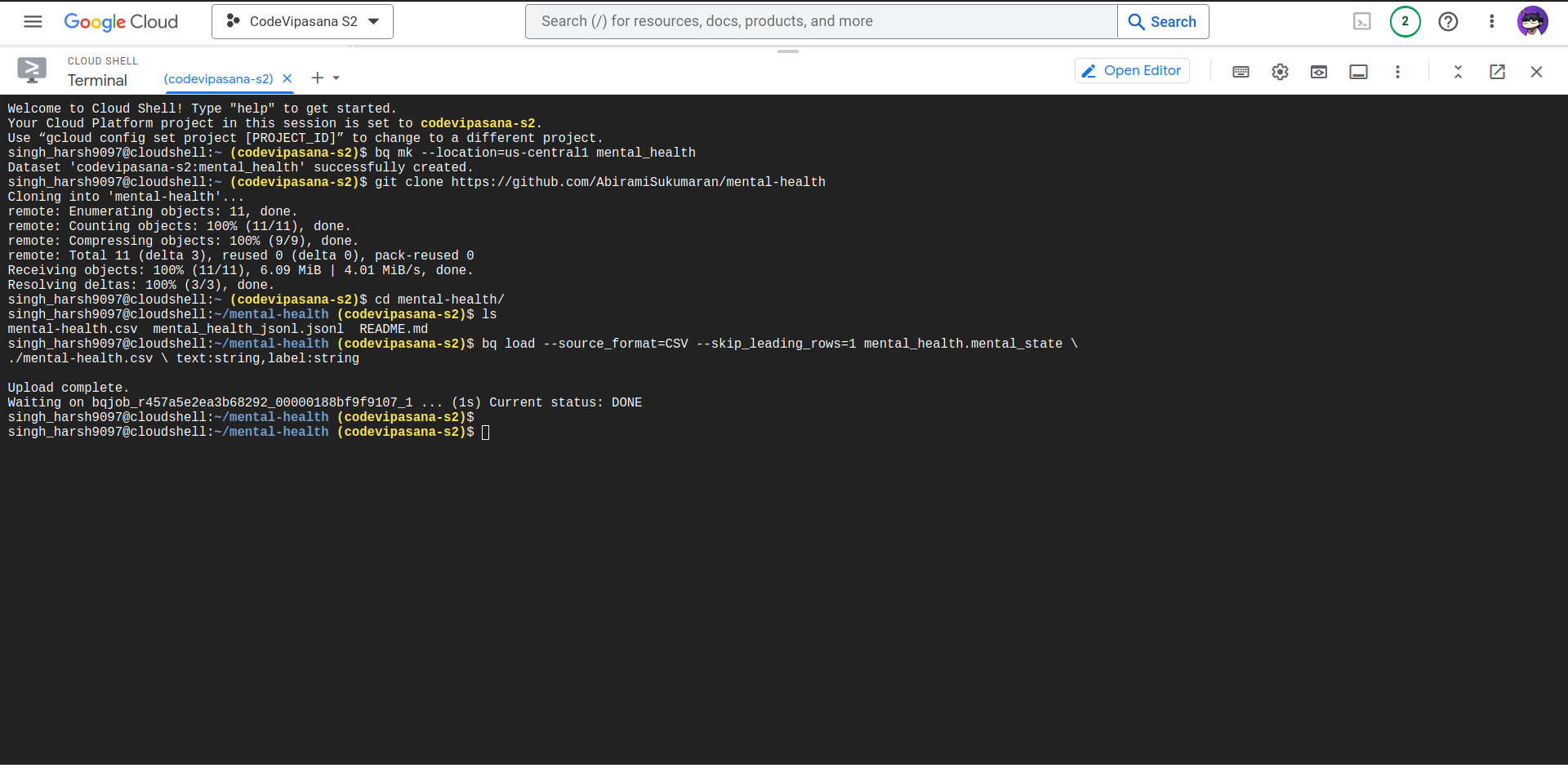

Use the

bq mkcommand to create a dataset called "mental_health":

NOTE: It'smental_healthand notmental-health. Since we cannot put-(hyphen) in the names of BigQuery datasets.bq mk --location=us-central1 mental_health
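The NOTE above follows from BigQuery's dataset naming rule. This minimal sketch checks a candidate name before you run `bq mk` and suggests an underscore version. It assumes the rule is letters, digits and underscores only, with at most 1,024 characters. `is_valid_dataset_name` and `to_dataset_name` are hypothetical helper names and are not part of the `bq` CLI.

```shell
#!/bin/sh
# Hypothetical helpers (not part of the bq CLI). Assumed naming rule:
# letters, digits, and underscores only, 1 to 1024 characters.

# Succeeds (exit status 0) if $1 looks like a valid BigQuery dataset name.
is_valid_dataset_name() {
  case "$1" in
    '' | *[!A-Za-z0-9_]*) return 1 ;;  # empty, or contains a disallowed character
  esac
  [ "${#1}" -le 1024 ]                 # enforce the assumed length limit
}

# Prints $1 with every hyphen replaced by an underscore.
to_dataset_name() {
  printf '%s\n' "$1" | sed 's/-/_/g'
}

# Example: check both spellings mentioned in the NOTE.
for candidate in mental_health mental-health; do
  if is_valid_dataset_name "$candidate"; then
    echo "$candidate: OK"
  else
    echo "$candidate: invalid, try $(to_dataset_name "$candidate")"
  fi
done
```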

- Clone the repository (it contains the data file) and navigate to the project directory:

  `git clone https://github.com/AbiramiSukumaran/mental-health && cd mental-health`

- Use the `bq load` command to load the CSV file into a BigQuery table:

  `bq load --source_format=CSV --skip_leading_rows=1 mental_health.mental_state ./mental-health.csv text:string,label:string`

  The final result should look like:

-

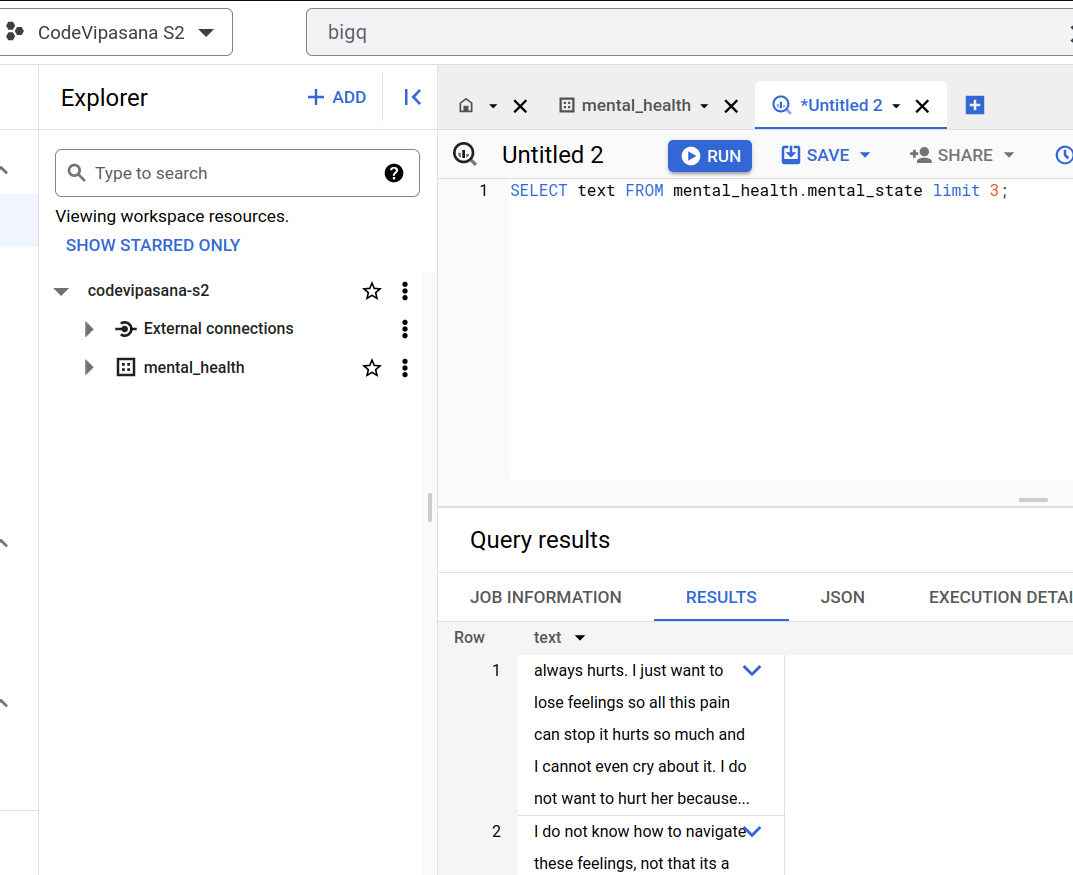

Using the BigQuery web UI, run:

SELECT text FROM mental_health.mental_state limit 3;


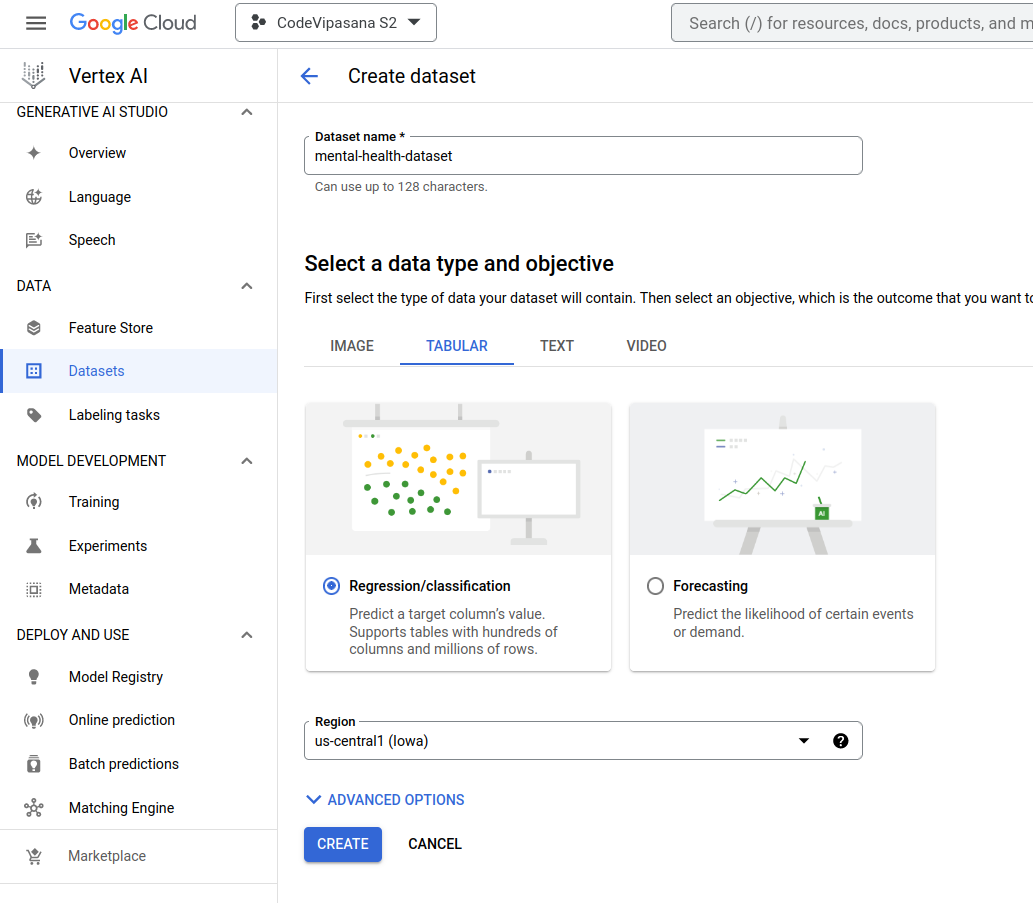
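Before training, it can be worth checking how the rows are spread across labels, because a heavily imbalanced label column can skew a classifier. This sketch assumes the `mental_health.mental_state` table created above and the `bq` CLI's standard-SQL mode. `label_counts_sql` and `show_label_counts` are hypothetical helper names. Only the query text is printed here; running it requires an authenticated `bq` CLI, such as the one in Cloud Shell.

```shell
#!/bin/sh
# Hypothetical helpers: build and run a per-label row count for the table.

# Prints a standard-SQL query that counts rows per label.
# Arg: fully qualified table (defaults to the table created above).
label_counts_sql() {
  _table="${1:-mental_health.mental_state}"
  printf 'SELECT label, COUNT(*) AS n FROM `%s` GROUP BY label ORDER BY n DESC\n' "$_table"
}

# Runs the query with the bq CLI (needs an authenticated session, e.g. Cloud Shell).
show_label_counts() {
  bq query --use_legacy_sql=false "$(label_counts_sql "$1")"
}

# Print the query without running it; in Cloud Shell you could call show_label_counts.
label_counts_sql
```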

- Open Vertex AI, select Datasets, and create a new dataset:

-

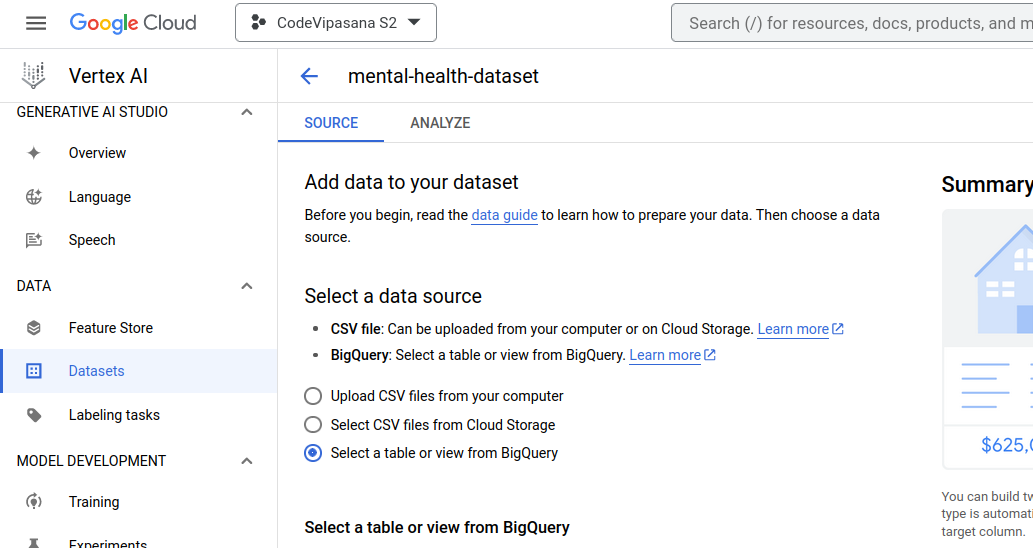

Now, select the third option, i.e., Select a table or view from BigQuery:

-

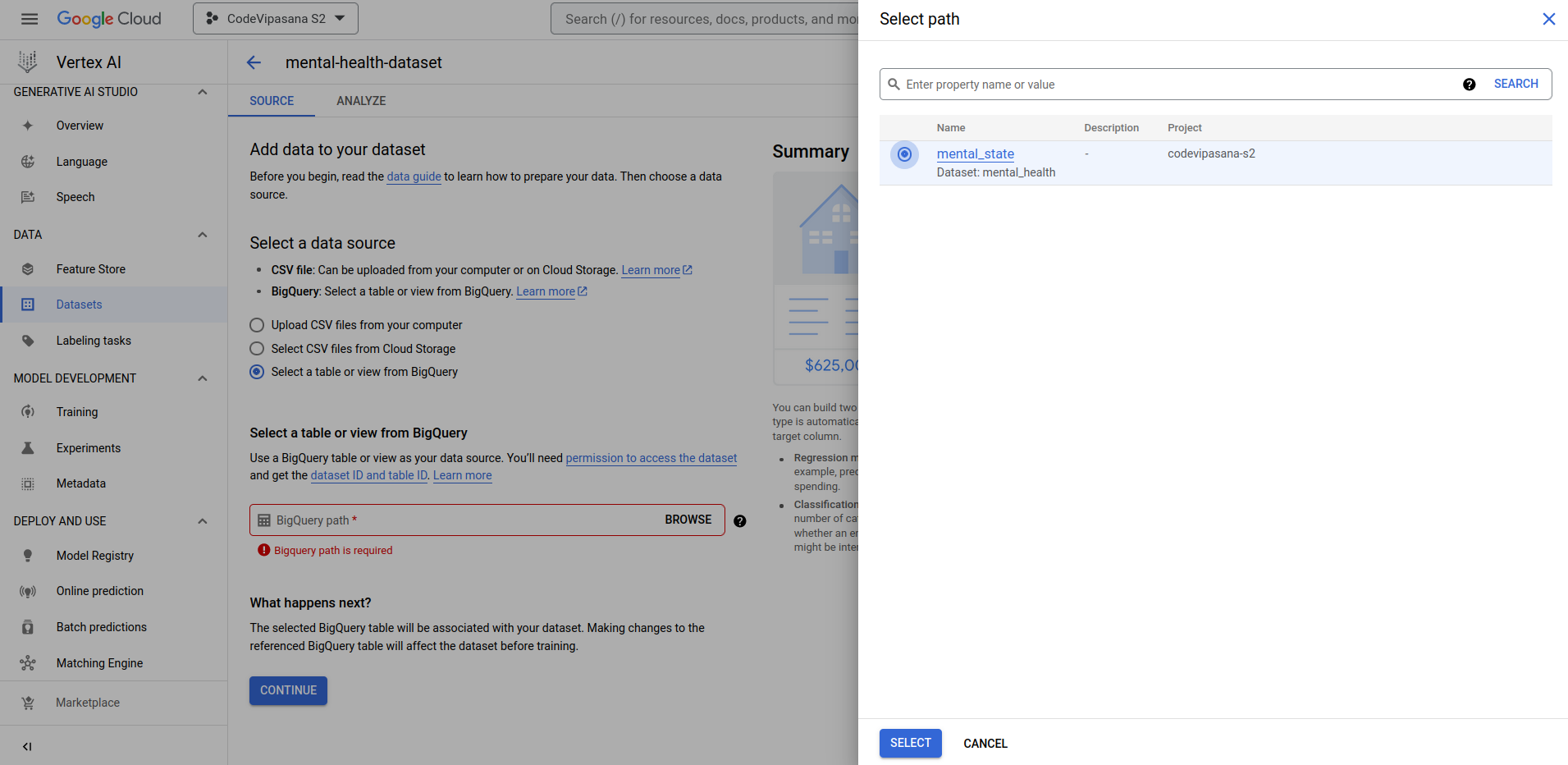

Browse the BigQuery table that we created earlier and select it:

-

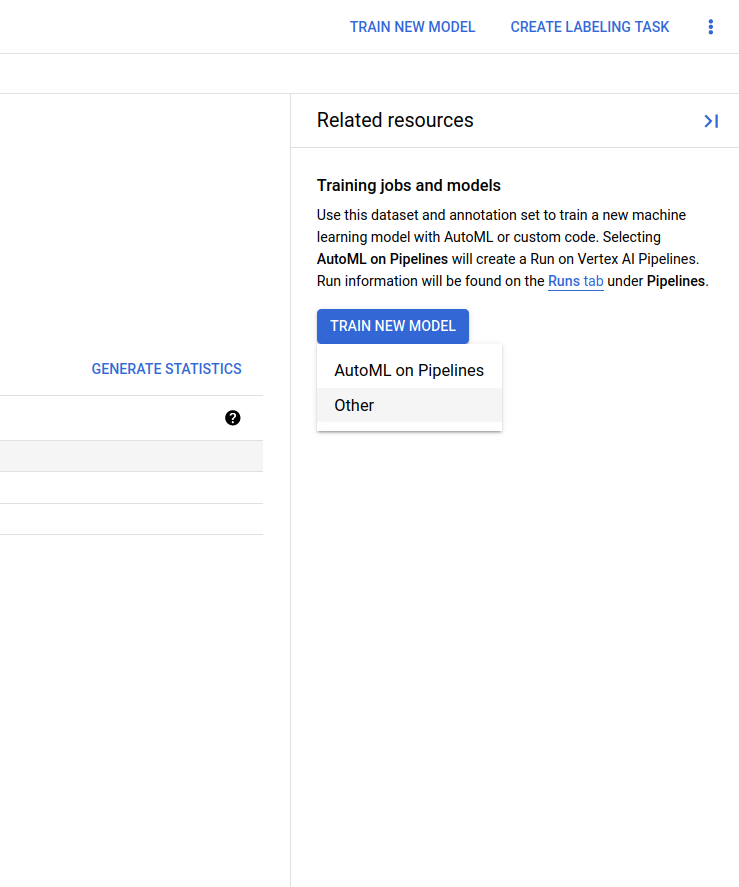

Once the dataset is created, you should see the Analyze page. Click the Train New Model and select "Others":

-

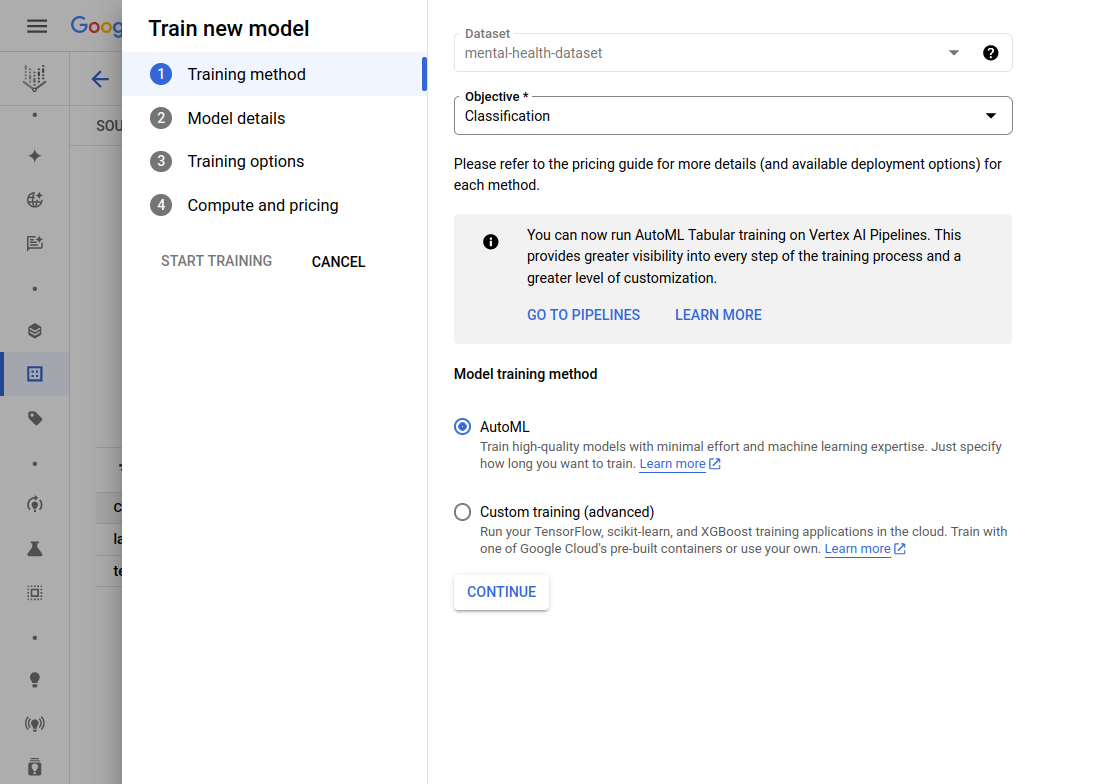

Select Objective as Classification and select AutoML option as Model training method and click continue:

- Give your model a name, select "label" as the target column from the dropdown, and click Continue.

-

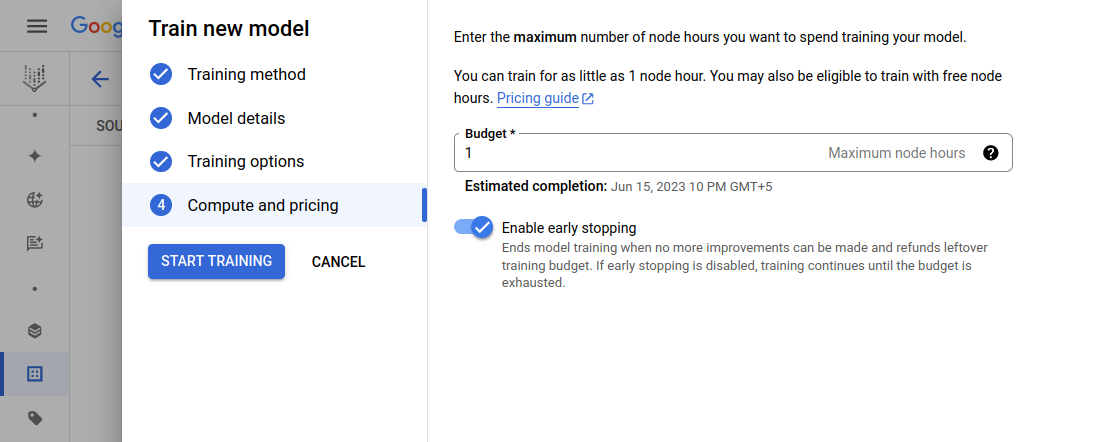

Put the Budget as 1 (i.e., Maximum Node Hours):

- Click Start Training to begin training your new model.

- When training completes, view and evaluate the training results.

- To deploy and test the model behind an API endpoint, open the model's Deploy & Test tab, click Deploy to Endpoint, give the endpoint a name, and click Continue.

- On the next page, under Model settings, scroll down to Explainability options and make sure explainability is enabled.

- Click Deploy once you have finished the deployment configuration.

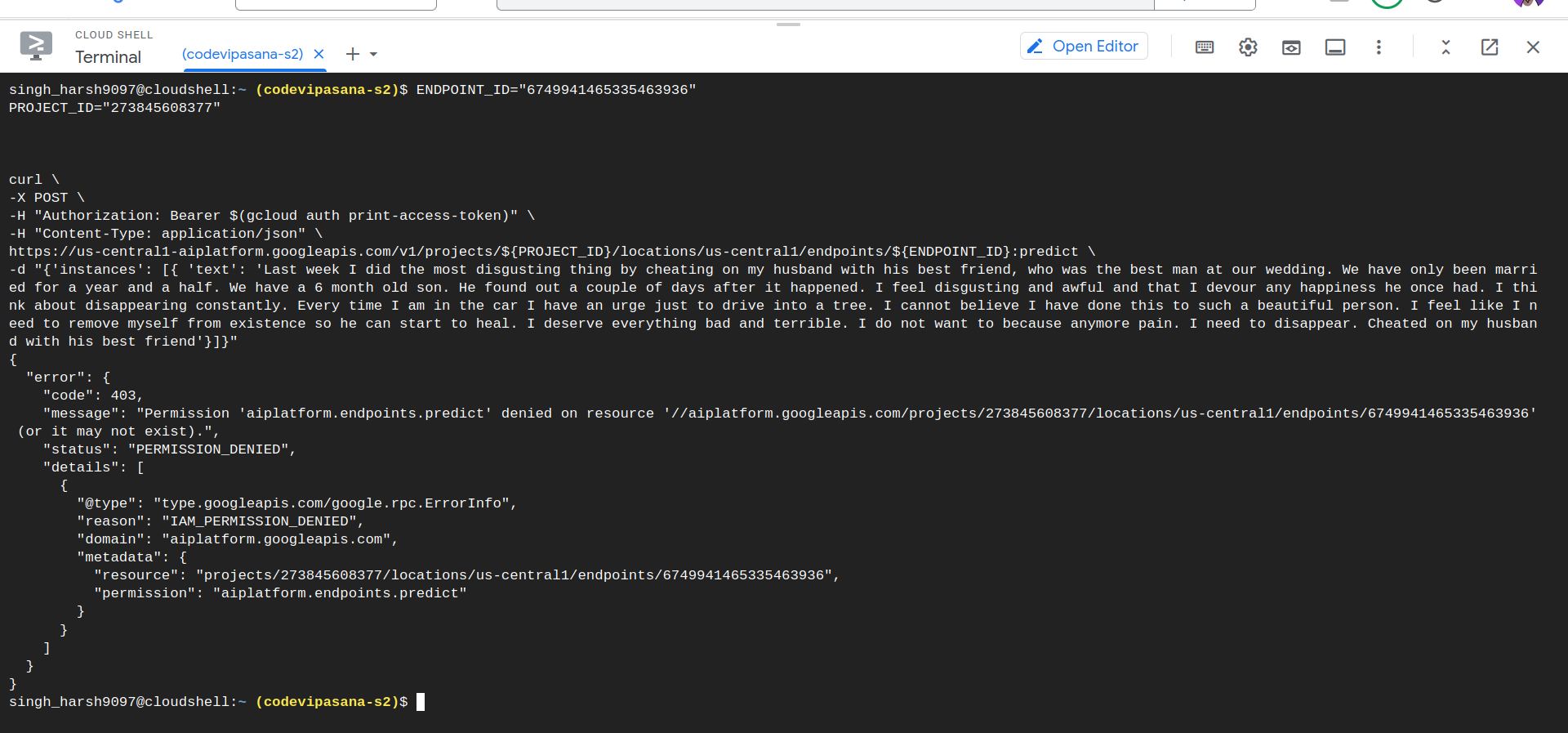
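The prediction request in this step needs the numeric endpoint ID, which the console shows on the endpoint's page. You might instead copy a full endpoint resource name, assumed here to have the form `projects/PROJECT/locations/REGION/endpoints/ENDPOINT_ID`. In that case, this sketch strips everything up to the last slash. `endpoint_id_from_name` is a hypothetical helper, and the resource name in the example is a placeholder. The `gcloud ai endpoints list` command appears only as a comment and is not run.

```shell
#!/bin/sh
# Hypothetical helper: prints the trailing ID segment of an endpoint resource
# name such as projects/PROJECT/locations/REGION/endpoints/ENDPOINT_ID.
endpoint_id_from_name() {
  printf '%s\n' "${1##*/}"   # remove the longest prefix ending in "/"
}

# To list your endpoints from Cloud Shell (not run here):
#   gcloud ai endpoints list --region=us-central1

# Placeholder resource name for illustration only.
endpoint_id_from_name "projects/my-project/locations/us-central1/endpoints/1234567890"
```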

Once the endpoint is ready, send a prediction request with curl. Replace YOUR_ENDPOINT_ID and YOUR_PROJECT_ID with your own values:

    ENDPOINT_ID="YOUR_ENDPOINT_ID"
    PROJECT_ID="YOUR_PROJECT_ID"

    curl \
      -X POST \
      -H "Authorization: Bearer $(gcloud auth print-access-token)" \
      -H "Content-Type: application/json" \
      "https://us-central1-aiplatform.googleapis.com/v1/projects/${PROJECT_ID}/locations/us-central1/endpoints/${ENDPOINT_ID}:predict" \
      -d '{"instances": [{"text": "Last week I did the most disgusting thing by cheating on my husband with his best friend, who was the best man at our wedding. We have only been married for a year and a half. We have a 6 month old son. He found out a couple of days after it happened. I feel disgusting and awful and that I devour any happiness he once had. I think about disappearing constantly. Every time I am in the car I have an urge just to drive into a tree. I cannot believe I have done this to such a beautiful person. I feel like I need to remove myself from existence so he can start to heal. I deserve everything bad and terrible. I do not want to because anymore pain. I need to disappear. Cheated on my husband with his best friend"}]}'

You should see a response listing the labels and their corresponding confidence scores.
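For repeated tests, it can be easier to keep the request body in a file than to inline it. This is a minimal sketch that assumes the same `us-central1` region and a single `text` field per instance, as in the request above. `predict_url`, `write_request` and `send_prediction` are hypothetical helper names. `write_request` escapes only backslashes and double quotes, so it assumes single-line input text.

```shell
#!/bin/sh
# Hypothetical helpers for calling a Vertex AI endpoint's :predict method.

# Prints the regional :predict URL. Args: project ID, endpoint ID, [region].
predict_url() {
  _region="${3:-us-central1}"
  printf 'https://%s-aiplatform.googleapis.com/v1/projects/%s/locations/%s/endpoints/%s:predict\n' \
    "$_region" "$1" "$_region" "$2"
}

# Writes {"instances": [{"text": ...}]} to a file. Args: text, output path.
# Escapes backslashes and double quotes only; assumes the text is a single line.
write_request() {
  _escaped=$(printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"instances": [{"text": "%s"}]}\n' "$_escaped" > "$2"
}

# Sends the request file with an access token from gcloud.
# Args: project ID, endpoint ID, request file path.
send_prediction() {
  curl -X POST \
    -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    -H "Content-Type: application/json" \
    -d @"$3" \
    "$(predict_url "$1" "$2")"
}
```

In Cloud Shell, you might run `write_request "I have been feeling much better this week." request.json` and then `send_prediction "$PROJECT_ID" "$ENDPOINT_ID" request.json`.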

To avoid any unnecessary charges, shut down or delete the project you created above.

In this walkthrough, you learned:

- How to take data from day-to-day life and build a simple ML model on GCP using point-and-click tools.

- How to create a simple classification model.

- How to deploy the model so that other applications can use it through an API.