Make sure you are using an environment with Python 3 available.

pip install awscli boto3

aws configure

Create or retrieve your AWS access key ID and secret access key from this link, then run aws configure as shown below. Press Enter to accept the default region name.

$ aws configure

AWS Access Key ID [****************AAAA]:

AWS Secret Access Key [****************AAAA]:

Default region name [us-west-2]:
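If you want to confirm that the keys were saved, here is a minimal sketch. It assumes the keys ended up in the shared credentials file at ~/.aws/credentials under the default profile, which is where aws configure normally writes them. The function name credentials_configured is just an illustrative helper, not part of any AWS tooling, and it does not look at credentials supplied through environment variables.

```python
import configparser
from pathlib import Path


def credentials_configured(credentials_path=None, profile="default"):
    """Return True if the profile has both an access key ID and a secret key.

    Hypothetical helper: it only inspects the shared credentials file and
    does not contact AWS, so it cannot tell whether the keys are valid.
    """
    path = Path(credentials_path or Path.home() / ".aws" / "credentials")
    parser = configparser.ConfigParser()
    parser.read(path)  # a missing file simply yields no sections
    if not parser.has_section(profile):
        return False
    section = parser[profile]
    has_id = bool(section.get("aws_access_key_id"))
    has_secret = bool(section.get("aws_secret_access_key"))
    return has_id and has_secret


if __name__ == "__main__":
    if credentials_configured():
        print("credentials found")
    else:
        print("no credentials found, run `aws configure` first")
```

This only checks that both values are present; a wrong key will still show up as an error the first time you talk to S3.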

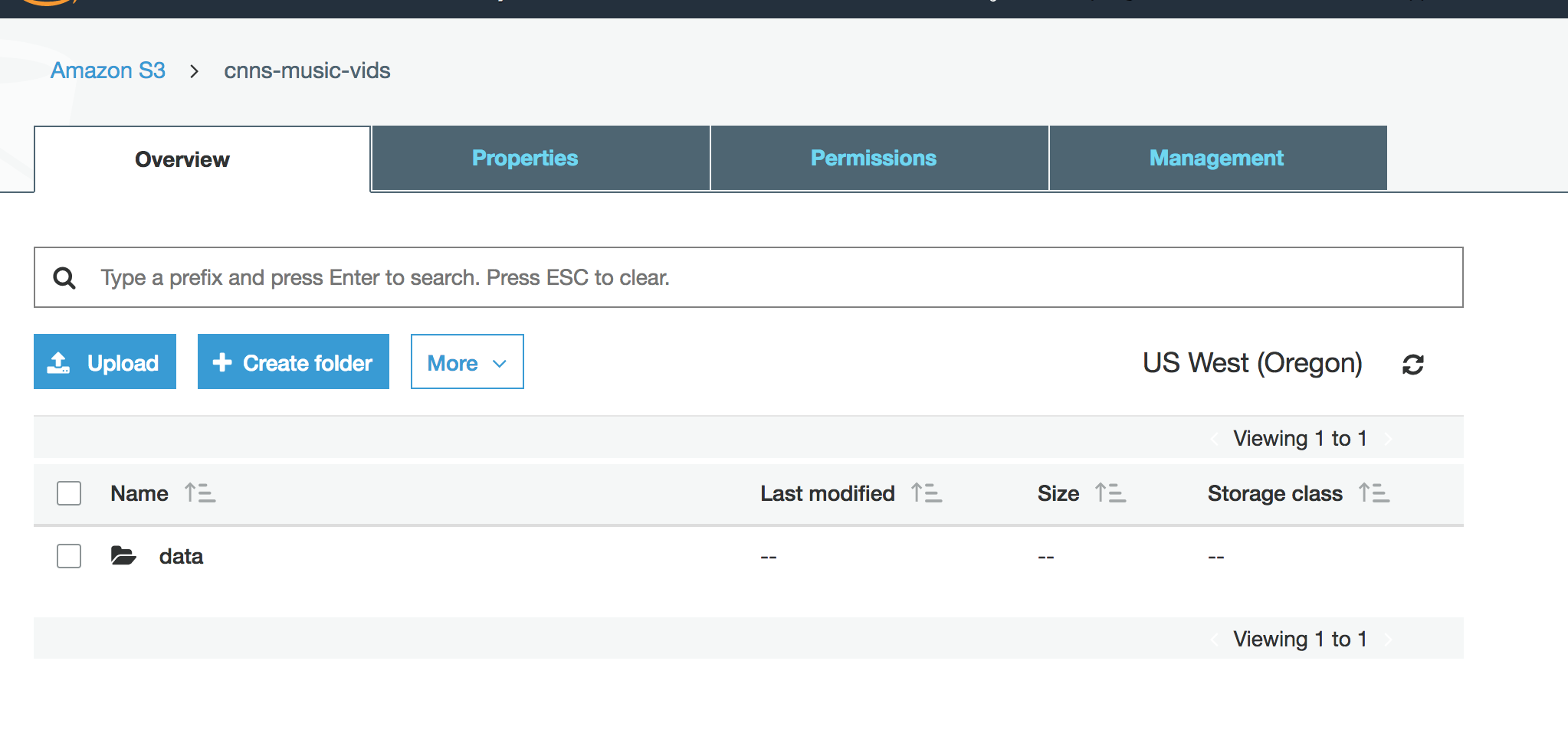

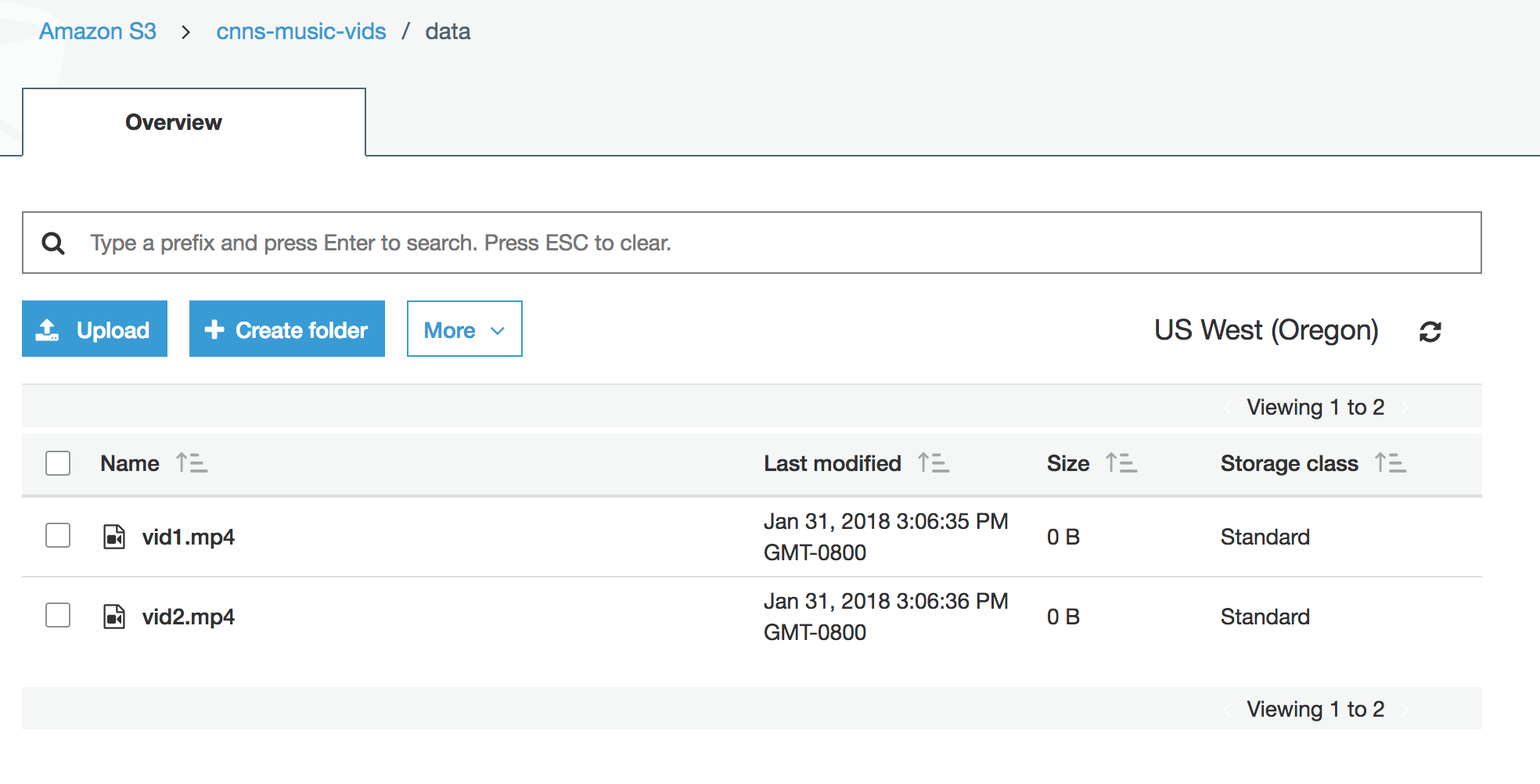

I've provided a sample AWS script called aws_script.py. Edit it to point to a test path (data/), a real bucket name (cnn-music-vids), and an output directory (I'd keep it the same as your test_path). If everything goes well, the output should look something like this:

# edit aws_script.py

$ python aws_script.py

the bucket exists!

uploading directory data/

Searching "data/vid1.mp4" in "cnns-music-vids"

Uploading data/vid1.mp4...

Searching "data/vid2.mp4" in "cnns-music-vids"

Uploading data/vid2.mp4...

done uploading

Then you should see the following two pictures:

Images after running test script on videos
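For reference, the upload flow suggested by the log output above could be sketched roughly like this. This is not the contents of aws_script.py, just a guess at its general shape based on the messages it prints. The helper names (bucket_exists, key_exists, upload_directory, main) are hypothetical; the boto3 calls used are list_buckets, list_objects_v2, and upload_file on an S3 client. Note that list_buckets only sees buckets owned by the configured account.

```python
import os


def bucket_exists(s3, bucket):
    """True if `bucket` is among the buckets owned by the configured account."""
    return any(b["Name"] == bucket for b in s3.list_buckets()["Buckets"])


def key_exists(s3, bucket, key):
    """True if an object with exactly this key is already in the bucket."""
    response = s3.list_objects_v2(Bucket=bucket, Prefix=key)
    return any(obj["Key"] == key for obj in response.get("Contents", []))


def upload_directory(s3, bucket, test_path):
    """Upload every file under test_path that the bucket does not have yet."""
    uploaded = []
    print(f"uploading directory {test_path}")
    for root, _dirs, files in os.walk(test_path):
        for name in sorted(files):
            local_path = os.path.join(root, name)
            key = local_path.replace(os.sep, "/")  # S3 keys use forward slashes
            print(f'Searching "{key}" in "{bucket}"')
            if key_exists(s3, bucket, key):
                continue  # already in the bucket, skip it
            print(f"Uploading {key}...")
            s3.upload_file(local_path, bucket, key)
            uploaded.append(key)
    print("done uploading")
    return uploaded


def main():
    import boto3  # imported here so the helpers above can be used without it

    bucket = "cnn-music-vids"  # bucket name from the text; replace with yours
    test_path = "data/"        # test path from the text
    client = boto3.client("s3")
    if bucket_exists(client, bucket):
        print("the bucket exists!")
        upload_directory(client, bucket, test_path)
```

Calling main() needs the credentials set up with aws configure. The helpers take the client as a parameter, so they can also be tried against a stand-in object without touching a real bucket.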