Resources : http://bit.ly/SeqModelsResources

A gunshot.

"This is it," she thought.

She took a deep breath and prayed.

9 seconds later...

she was World Sprint Champion.

Can't decide sentiment without considering all sentences.

Can't classify activity with just one frame of a video.

Can't generate/classify a second of music without considering the previous seconds.

Traditional neural networks can’t remember.

Recurrent Neural Networks can.

Conventional Neural Networks

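```python
import torch.nn as nn

class NN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        # hidden_size is unused: a feed-forward net has no state to carry over
        self.in2out = nn.Linear(input_size, output_size)
        self.softmax = nn.LogSoftmax(dim=1)

    def forward(self, input):
        # Each input is mapped straight to an output; nothing is remembered
        output = self.in2out(input)
        output = self.softmax(output)
        return output
```

Recurrent Neural Networks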

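```python
import torch.nn as nn

class RNN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        # output_size is unused here: this cell only produces the next hidden state
        self.hid2hid = nn.Linear(hidden_size, hidden_size)
        self.in2hid = nn.Linear(input_size, hidden_size)
        self.tanh = nn.Tanh()

    def forward(self, input, hidden):
        # The new hidden state mixes the current input with the previous hidden state
        hidden = self.tanh(self.in2hid(input) + self.hid2hid(hidden))
        return hidden
```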

The hidden state acts as a summary of the entire sequence seen so far.
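A minimal NumPy sketch (not the PyTorch class itself) of what it means for the hidden state to summarize the sequence: the same tanh update is unrolled over five random inputs, and nudging only the first input still changes the final hidden state. The weights, sizes, and function names are illustrative assumptions.

```python
import numpy as np

def rnn_step(x, h, W_in, W_hh, b):
    # h_t = tanh(W_in x_t + W_hh h_{t-1} + b), the same form as the RNN cell
    return np.tanh(W_in @ x + W_hh @ h + b)

def encode_sequence(xs, W_in, W_hh, b):
    # Unroll the recurrence from a zero state; return only the final hidden state
    h = np.zeros(W_hh.shape[0])
    for x in xs:
        h = rnn_step(x, h, W_in, W_hh, b)
    return h

rng = np.random.default_rng(0)
input_size, hidden_size, T = 3, 4, 5
W_in = rng.normal(size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

xs = rng.normal(size=(T, input_size))
h_final = encode_sequence(xs, W_in, W_hh, b)

# Perturb only the FIRST input and re-run
xs_changed = xs.copy()
xs_changed[0] += 1.0
h_changed = encode_sequence(xs_changed, W_in, W_hh, b)

print(h_final.shape)                        # (4,)
print(np.abs(h_final - h_changed).max())    # nonzero: step 1 still matters at step 5
```

The final hidden state has a fixed size no matter how long the sequence is, which is why it can be handed to a classifier as a sequence summary.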

Types of Sequential Models

1 : One-to-One

Vanilla Neural Network

*Image Classification*

2 : One-to-Many

Sequence output

*Image Captioning*

3 : Many-to-One

Sequence input

*Sentiment Analysis*

4a : Many-to-Many

Sequence input and sequence output

*Encoder-decoder, e.g. translation*

4b : Many-to-Many

Synced sequence input and output

*Wake-word detection*
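To make the difference between many-to-one and synced many-to-many concrete, here is a hedged NumPy sketch: the same recurrence runs over the whole sequence, and only the readout changes. `W_out` is a hypothetical output layer and all sizes are arbitrary.

```python
import numpy as np

def run_rnn(xs, W_in, W_hh):
    # Return the hidden state at every timestep, shape (T, hidden_size)
    h = np.zeros(W_hh.shape[0])
    states = []
    for x in xs:
        h = np.tanh(W_in @ x + W_hh @ h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(1)
T, input_size, hidden_size, n_classes = 6, 3, 8, 2
W_in = rng.normal(size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.3, size=(hidden_size, hidden_size))
W_out = rng.normal(size=(n_classes, hidden_size))   # hypothetical readout layer

xs = rng.normal(size=(T, input_size))
states = run_rnn(xs, W_in, W_hh)

# Many-to-one (e.g. sentiment): one prediction from the last hidden state
many_to_one = W_out @ states[-1]        # shape (n_classes,)

# Synced many-to-many (e.g. wake-word detection): one prediction per timestep
many_to_many = states @ W_out.T         # shape (T, n_classes)

print(many_to_one.shape, many_to_many.shape)   # (2,) (6, 2)
```

The last row of the many-to-many output equals the many-to-one output, since both read the final hidden state through the same layer.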

Long- and Short-Term Dependencies

I grew up in Chennai... I speak X.

Where X is the word we are trying to predict.


speak --> X

- X must be a language.

- Close to X, hence short-term dependency

Chennai --> X

- X is likely a language spoken in Chennai.

- Far from X, hence long-term dependency

- In theory, RNNs are capable of handling “long-term dependencies.”

- In practice, vanilla RNNs struggle to learn them: the gradient signal from distant timesteps tends to vanish during training.
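One common way to see why: in a scalar RNN the chain rule multiplies in one factor `w * (1 - h_t**2)` per timestep, so with `|w| < 1` the gradient reaching early steps shrinks exponentially. This is a toy sketch with made-up values (`w = 0.9`, constant input 0.5), not a full backpropagation-through-time implementation.

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    # Scalar RNN h_t = tanh(w * h_{t-1} + x_t).
    # Returns d h_T / d h_0 = prod_t w * (1 - h_t**2) via the chain rule.
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)   # derivative of this step w.r.t. h_{t-1}
    return grad

for T in (1, 5, 10, 50):
    # Influence of the initial state on h_T shrinks as T grows
    print(T, gradient_through_time(0.9, [0.5] * T))
```

Each factor is below 0.9 here, so after 50 steps the influence of the first step is smaller than `0.9 ** 50`, which is far too weak to learn from.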

-

We need to decide what information to keep or remove at every timestep

-

We need gates

-

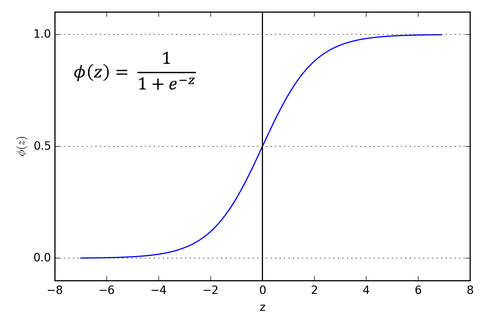

If the gate is a function...

-

Discard information, if gate value is 0

-

Allow information, if gate value is 1

-

pointwise multiplication operation

To decide if X should be allowed through the gate:

gate(X) = W*X + B

But the gate value needs to be between 0 and 1, so apply the sigmoid function to it:

gate(X) = sigmoid(W*X + B)
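The gate formula can be sketched in NumPy as follows, assuming made-up weights chosen so that one entry is pushed toward 1, one toward 0, and one sits at exactly 0.5; the gate is then applied by pointwise multiplication.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gate(X, W, B):
    # gate(X) = sigmoid(W*X + B): every entry lands strictly between 0 and 1
    return sigmoid(W @ X + B)

X = np.array([1.0, -2.0, 0.5])
W = np.array([[ 10.0, 0.0, 0.0],     # hypothetical weights
              [-10.0, 0.0, 0.0],
              [  0.0, 0.0, 0.0]])
B = np.zeros(3)
info = np.array([3.0, 3.0, 3.0])     # information trying to pass through

g = gate(X, W, B)                    # roughly [1.0, 0.0, 0.5]
passed = g * info                    # pointwise multiplication
print(np.round(g, 3), np.round(passed, 3))
```

The first entry passes almost unchanged, the second is almost fully blocked, and the third is halved.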

Each LSTM step takes:

- Input (x_t)
- Hidden state (h_{t-1})
- Cell state (C_{t-1})

- Forget gate (f_t): decide what information to get rid of from the cell state
- Input: hidden state and input
- Output: 1 = keep data, 0 = discard data completely
- For example:
  - To determine the gender of a pronoun, we need to remember the gender of the last subject.
  - So, if we come across a new subject, forget the old gender.
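A minimal sketch of the forget gate, assuming the usual LSTM convention that it reads the concatenation `[h_{t-1}, x_t]`. The two-slot cell state, the toy "new subject" encoding, and the weights are hypothetical, picked so that slot 0 (the stored gender) is erased while slot 1 is kept.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forget_gate(h_prev, x_t, W_f, b_f):
    # f_t = sigmoid(W_f [h_{t-1}, x_t] + b_f): one value in (0, 1) per cell-state slot
    return sigmoid(W_f @ np.concatenate([h_prev, x_t]) + b_f)

# Hypothetical 2-slot cell state: slot 0 = "gender of subject", slot 1 = something else
C_prev = np.array([1.0, 0.7])
h_prev = np.zeros(2)
x_t = np.array([1.0])                  # 1.0 = "a new subject appears" (toy encoding)
W_f = np.array([[0.0, 0.0, -20.0],     # a new subject pushes slot 0 toward "forget"
                [0.0, 0.0,   0.0]])
b_f = np.array([10.0, 10.0])           # by default, keep everything

f_t = forget_gate(h_prev, x_t, W_f, b_f)
C_kept = f_t * C_prev                  # pointwise: the old gender is (almost) erased
print(np.round(f_t, 3), np.round(C_kept, 3))
```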

- Input gate (i_t): decide what new information to store in the cell state
- Input: hidden state and input
- Output: 1 = input is important, 0 = input doesn't matter
- The layer has 2 parts:
  - A sigmoid layer (input gate layer) that decides which values we’ll update
  - A tanh layer that creates a vector of new candidate values, C̃_t

- For example:
  - To determine the gender of a pronoun, we need to remember the gender of the last subject.
  - So, if we come across a new subject, store the new subject's gender.
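A hedged NumPy sketch of the input-gate step, assuming the standard LSTM form where both parts read `[h_{t-1}, x_t]`: a sigmoid decides how much to write and a tanh proposes candidate values C̃_t. The weights are random placeholders and the function name is illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def input_gate_and_candidate(h_prev, x_t, W_i, b_i, W_c, b_c):
    hx = np.concatenate([h_prev, x_t])
    i_t = sigmoid(W_i @ hx + b_i)        # which slots to update, in (0, 1)
    C_tilde = np.tanh(W_c @ hx + b_c)    # candidate values, in (-1, 1)
    return i_t, C_tilde

rng = np.random.default_rng(0)
hidden, n_in = 4, 3
W_i, W_c = rng.normal(size=(2, hidden, hidden + n_in))   # placeholder weights
b_i, b_c = np.zeros(hidden), np.zeros(hidden)

i_t, C_tilde = input_gate_and_candidate(
    np.zeros(hidden), rng.normal(size=n_in), W_i, b_i, W_c, b_c)
update = i_t * C_tilde                   # what actually gets written to the cell state
print(i_t.shape, C_tilde.shape)          # (4,) (4,)
```

Because `i_t` is below 1, the written update is never larger in magnitude than the candidate itself.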

We know:

- How much to update (i_t)
- How much to forget (f_t)

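Next step?

- Update the cell state: scale the old cell state by f_t and add the new candidate values scaled by i_t.
- Determine the output using the hidden state and the cell state.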
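Putting the pieces together, a minimal NumPy sketch of one full LSTM step in the standard formulation: C_t = f_t * C_{t-1} + i_t * C̃_t, then h_t = o_t * tanh(C_t). The output gate o_t is not named in these slides; it, the stacked weight layout, and the random weights are assumptions taken from the usual LSTM definition.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, C_prev, W, b):
    """One LSTM timestep. W maps [h_prev, x_t] to four stacked pre-activations
    (forget, input, candidate, output); b is the matching stacked bias."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f_t = sigmoid(z[0:n])               # how much of the old cell state to keep
    i_t = sigmoid(z[n:2 * n])           # how much of the candidate to write
    C_tilde = np.tanh(z[2 * n:3 * n])   # new candidate values
    o_t = sigmoid(z[3 * n:4 * n])       # output gate (standard LSTM, assumed here)
    C_t = f_t * C_prev + i_t * C_tilde  # update the cell state
    h_t = o_t * np.tanh(C_t)            # output / new hidden state
    return h_t, C_t

rng = np.random.default_rng(0)
hidden, n_in, T = 5, 3, 10
W = rng.normal(scale=0.5, size=(4 * hidden, hidden + n_in))
b = np.zeros(4 * hidden)

h, C = np.zeros(hidden), np.zeros(hidden)
for x_t in rng.normal(size=(T, n_in)):
    h, C = lstm_step(x_t, h, C, W, b)
print(h.shape, C.shape)   # (5,) (5,)
```

Because the cell state is updated additively rather than squashed through tanh at every step, information in C_t can survive many timesteps when f_t stays close to 1.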

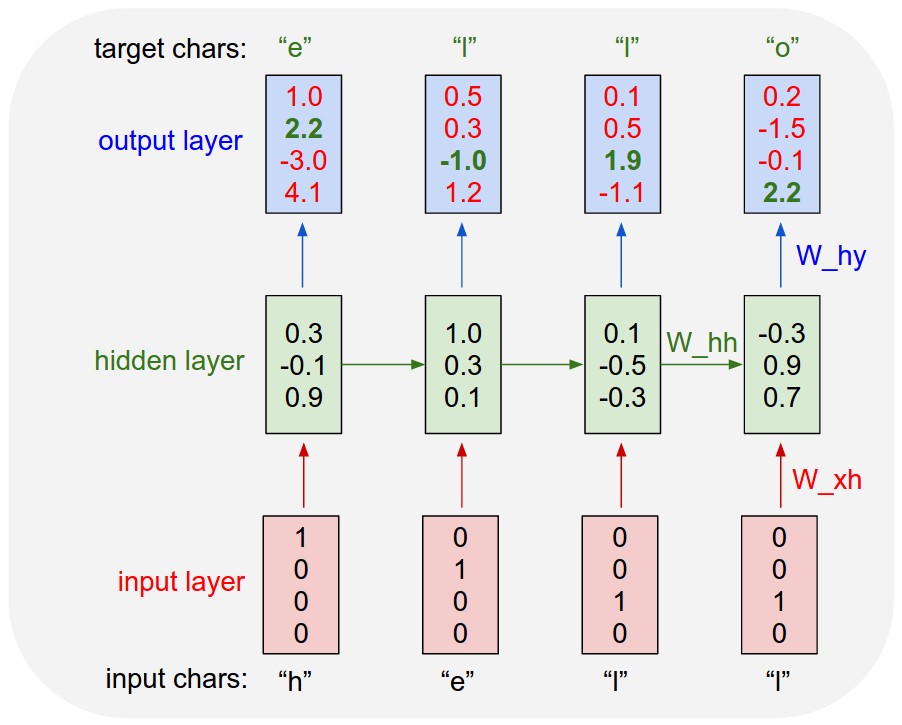

- Encoding characters
  - 27 possible chars (A-Z + \n)
  - Character --> [27 x 1] vector
- Input
  - Sequence of characters
  - Input for each timestep --> [27 x 1] char-vector
- Output
  - Single character for each timestep
  - The character that follows the sequence of input chars
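A sketch of this encoding in NumPy, assuming names are upper-cased and get `"\n"` appended as an end-of-name marker (one plausible reading of the 27-character vocabulary; the linked notebook may prepare its data differently). `one_hot` and `make_training_pair` are hypothetical helper names.

```python
import string
import numpy as np

VOCAB = list(string.ascii_uppercase) + ["\n"]     # 27 chars: A-Z plus newline
CHAR_TO_IDX = {c: i for i, c in enumerate(VOCAB)}

def one_hot(ch):
    # Character --> [27 x 1] vector with a single 1 at the character's index
    v = np.zeros((len(VOCAB), 1))
    v[CHAR_TO_IDX[ch]] = 1.0
    return v

def make_training_pair(name):
    # Input: the name's chars; target at each timestep: the char that follows.
    # The trailing "\n" marks the end of the name.
    seq = name.upper() + "\n"
    inputs = [one_hot(c) for c in seq[:-1]]
    targets = list(seq[1:])
    return inputs, targets

inputs, targets = make_training_pair("Brad")
print(len(inputs), inputs[0].shape)   # 4 (27, 1)
print(targets)                        # ['R', 'A', 'D', '\n']
```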

Colab Notebook : bit.ly/ColabCharLSTM

- Character-Level LSTM (Keras) to generate names

- Bonus : Using the LSTM to join names

- Brad + Angelina = Brangelina

- Char + Lizard = Charizard

- Britain + Exit = Brexit
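Name generation typically works one character at a time: sample from the model's predicted distribution, append the character, and stop at `"\n"`. The sketch below is hedged: `predict_next` is a hypothetical stand-in for the trained Keras LSTM's softmax output, and `toy_predict` exists only to make the loop runnable.

```python
import random
import string

VOCAB = list(string.ascii_uppercase) + ["\n"]

def generate_name(predict_next, seed="", max_len=20, rng=None):
    """Sample characters until the model emits "\n" (or max_len is reached).
    predict_next(prefix) -> 27 probabilities over VOCAB (hypothetical interface)."""
    rng = rng or random.Random(0)
    name = seed
    for _ in range(max_len):
        probs = predict_next(name)
        ch = rng.choices(VOCAB, weights=probs, k=1)[0]
        if ch == "\n":          # end-of-name marker
            break
        name += ch
    return name

def toy_predict(prefix):
    # Deterministic toy "model" that always spells PIKA, then ends the name
    target = "PIKA\n"
    nxt = target[len(prefix)] if len(prefix) < len(target) else "\n"
    return [1.0 if c == nxt else 0.0 for c in VOCAB]

print(generate_name(toy_predict))   # PIKA
```

With a real model, the probabilities are spread over many characters, so repeated calls with different random seeds produce different names.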
