I am writing this to express my thoughts on containers in general. I have briefly stated my surface-thoughts on them to a few people, and because it is difficult to articulate all of my thoughts at once to someone, I thought I would write a blog-post / article thing. First of all, all the opinions expressed here are mine and mine alone. There is a good chance nobody else in the world has these same thoughts, so I am alone. I'm fine with that though, as it makes me unique.

Yes. Full stop. They are. I don't care if you say they are "small" virtual machines. They are, nonetheless, virtual machines. From Wikipedia, Virtual Machine:

In computing, a virtual machine (VM) is the virtualization/emulation of a computer system. Virtual machines are based on computer architectures and provide functionality of a physical computer. Their implementations may involve specialized hardware, software, or a combination.

The fact that you are using Docker or Kubernetes (or both) to spin up an instance of Linux that is running on another instance of Linux means that you have a VM in between so your Linux container thinks it is running on actual hardware. Claiming otherwise is foolish, and a misunderstanding of how this technology works.

I will agree that it is (hopefully) not virtualizing a whole processor in order to get this done. That is possible with something like QEMU if you want to run a bunch of ARM containers on your x64 processor. Modern x64 (and probably ARM) processors have a number of cool features so you can do virtualization with as little overhead as possible. This is great, and I applaud the efforts at Intel, AMD and across the field for adding these improvements. Unfortunately, it only reduces the overhead for virtualization, it does not remove it.

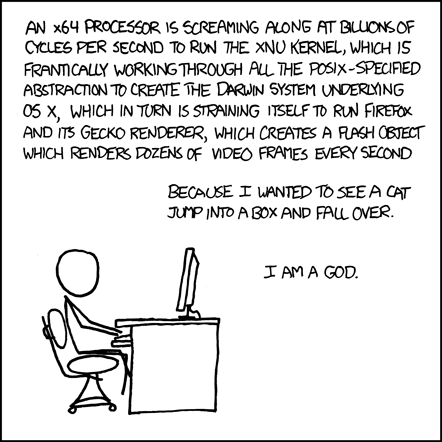

The end result is that you have added a layer of abstraction to your system. This can make one feel very powerful indeed:

With VM's, you need to add another set of layers, like this:

My Gimp skills aren't that great, but fancy images aren't what this is about. It's about adding layers that don't really need to be there. So that prompts the question:

There are a number of answers to this question. Some of them are good reasons to virtualize, some not so much. Here's a short list:

- Need to run old programs on new hardware

- Test a prototype system for different hardware

- Sandboxing / Isolation

- Unified development and deployment system

Of these few, only sandboxing and running old systems on new hardware are good reasons for fleets of VM's. And even then, if you are running multiple different VM's, each sandboxed from each other, you're doing it wrong. Let me explain.

If you are running old software on new hardware then virtualization might be a great way to go. Getting a PDP-11 VM running COBOL so your bank doesn't stop processing transactions is great. Getting your COBOL running on a modern system is better. Converting your COBOL to JavaScript is 💩. It works and is performant, why add layers of 💩 so you can hire 20-year-olds who don't know how to make systems efficient, or code left-pad? But that's another rant entirely...

If you're designing an embedded system, and want to test, it is probably faster to spin up an instance of QEMU running your software on the hardware you are targeting. This is wonderful, and awesome and golden.

If you want to run thousands of conflicting programs on the same hardware, and give them all "full access" to your machine, this is okay. Business A can't interfere with Business B because they are running on "different" machines.

Having a unified development environment sounds great. And honestly, it kind of is. Unfortunately, using a VM to facilitate this is like bringing your entire kitchen with you everywhere you go so you can make PB&J sandwiches the way you like them wherever you are. Granted, maybe you like special kinds of bread, peanut butter and jelly. That's fine, you should be able to find them at most any random grocery store. If not, that's 3 ingredients.

You don't need to bring all of your utensils, bowls, dishes, pots, pans, dishwasher, sink, drying rack, stove, oven, spice rack, cabinets, refrigerator (including milk, eggs, ice cream, ice, last night's dinner, soda, lettuce, cucumbers, pickles, cheese, lunchmeats, apples, strawberries, butter, etc.) and pantry (including cooking oil, canned beans, canned soups, other canned goods, pasta, chips, cookies, etc.) to work so you can make a PB&J sandwich.

To make your PB&J when at work (or wherever) you only need a plate, a knife, and your ingredients. The plate and knife are interchangeable with ones you may have had at home. The ingredients may or may not be. This is an advantage of having systems that aren't dependent on one particular setting. While you may like your knives better because they are blunt-tipped and thus better at scraping the bottom of the container, as opposed to pointy-tipped (this is a real thing I've recently come across) they can both do the same job.

This is not a problem of the kitchen, but of the system that is using the kitchen. Sometimes, the kitchen-using-system trips itself up. This is known as Dependency Hell; when running apps, it might become DLL Hell. Both of these are solvable. In fact, the second has been a solved problem ever since games were commercially available on floppies: ship everything needed, and don't rely on the user to have anything but the bare minimum requirements to run the software. (The bare minimum varies, but usually reduces to either A: the hardware needed to run the game, e.g. a Tandy 1000, Atari 400, Commodore 64, etc., or B: the hardware needed plus the OS needed to run the game, where a fresh install will do, e.g. DOS or Windows. More than either of these isn't "bare minimum".)

Dependency hell is solvable, but most programming languages rely on some form of Semantic Versioning to get the "right" dependencies. Unfortunately, semver is broken. Google "semver is broken" to find many articles and posts describing this. There is a solution I have for this, but few people will like it.

If Business A has 5 services, and thus makes 5 VM's, they're running Linux (or OSX or Windows) 5 times, each to run 1 program. This isn't too bad, but imagine 1,000 instances of Linux, each running 1 program... Why not run 1 instance of Linux with 1,000 instances of your program? Modern multitasking operating systems are great at this. In fact, Unix was designed to allow multiple processes or users to run tasks at the same time, on a single processor.
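
To make the "1 instance of Linux, many instances of your program" idea concrete, here is a minimal sketch. `PROGRAM` and `run_instances` are names I made up for illustration, and the "program" is just a one-liner that prints its own process ID; a real service would obviously do more.

```python
import subprocess
import sys

# Hypothetical stand-in for "your program": prints its own process ID and exits.
PROGRAM = "import os; print(os.getpid())"


def run_instances(count):
    """Start `count` copies of PROGRAM under the one host OS and collect their PIDs."""
    # Launch every copy first so they all exist side by side as ordinary processes.
    children = [
        subprocess.Popen(
            [sys.executable, "-c", PROGRAM],
            stdout=subprocess.PIPE,
            text=True,
        )
        for _ in range(count)
    ]
    pids = []
    for child in children:
        out, _ = child.communicate()  # wait for the child and read what it printed
        pids.append(int(out.strip()))
    return pids


if __name__ == "__main__":
    print(run_instances(5))
```

Each copy gets its own process ID and its own address space from the kernel, with no guest OS in between.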

If you're not going to run more than one process on a given computer, you don't need to run a Linux VM. Get your program to run on bare metal and save yourself (and your customers) millions (possibly billions) of processor cycles per second. Ship that up to Goosoft AWS Cloud and save yourself money. On that note...

Recently I saw an article published on Energy Efficiency across Programming Languages and it got me thinking. Most people don't think about this, and many people who drive eco-friendly cars and have solar turbines drink overpriced lattes and use programming languages that are atrocious in their energy consumption (see pg 11 of the linked article for a quick overview). Since you're virtualizing a whole new Linux OS while running your app, you should be concerned with not just the energy usage of the code you wrote, but the VM you're running it in. I just found this Docker Activity repo on GitHub that tries to measure it... though again, it is just one repo and most people probably don't care.

And you should care! Not just because you're killing the planet if you don't care, you dirty coal-burning diesel-chugging tree killer! But because this has real effects on the software you use day-to-day! I know this because you have a smartphone in your pocket that uses VM's on it! And oh boy... it is the most pervasive container in the world!

If you use Android, you're using the JVM. Each time you run an app, you're running a new VM that contains your app and does all the app things. If you're lucky it's pre-compiled to bytecode, so your phone doesn't have to go from Java->JVM Bytecode each time you want to check the weather. Unfortunately you still need to go from JVM Bytecode->ARM machine code. Every. Single. Time. It does have a fancy JIT so it compiles the code "Just In-Time" to take advantage of some speed for repeated operations, but AFAIK, once you close the app and re-open it, the JVM needs to recompile (re-JIT?) all the stuff you JIT-ed last time.

"But I use an iFone, all my apps are compiled from Swoosh to MI6 machine code from the developer!" Okay, sure. But do you use the internet? If you do you're using another VM, and so are the Android people, and so is everybody else using the most containerized container that contains content: A Web Browser using JavaScript. There is no precompiled JS that you can download and just run. WebAssembly is a thing, but it's a distant thing. Very few sites use it. So not only are you downloading JavaScript files to your device to have it parse the JS to JS bytecode (if the given VM uses it, it may or may not and is not standard) but then running it on your device. And this happens each time you visit any web page. Each time you are re-downloading and recompiling the same JavaScript to your device so you can check the weather (or whatever).

If your browser is smart enough, it may cache this so you don't have to re-download, and may even cache the compilation of JS, but I have very little faith that this is actually happening.

Eventually your device talks back to the server, and it is probably talking to a Node.js instance in a container on a VM running at Amazesoft Blue Smoke that may-or-may-not be dynamically compiling the JS. In fact, it probably has to interpret the JavaScript in the VM each time you run a new instance of the service. So if Business A is running 1,000 containers running their app, they're compiling the app 1,000 times, each time to exactly the same thing. Just so they can converse with their customers. This is also true if they're using Python, Ruby, PHP, Perl or most other dynamic languages.

How many times per day do containers get spun up? Hundreds? Thousands?? Millions??? Each time you are compiling your app in real-time, costing money and time. How much time? Seconds? Milliseconds? If it takes 50ms to boot your containerized app, and you're doing it a million times a day, your app is spending 13.9 hours doing nothing but getting ready per day. Sure this is spread out amongst all your customers, but how much does this cost you on Microzon Web Vapor? And is it only 50ms? Is it 150ms? 250ms? A quick Google suggests 0.5s to 1.2s boot time is normal, taking the average of 0.85s (850ms) we have:

0.85 seconds * 1,000,000 VM's = 850,000 seconds = 9.83796296 days

...10 days of booting...
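
If you want to plug in your own numbers, the arithmetic above fits in a few lines. This is just a sketch; `total_boot_seconds` is a helper name I made up, and the boot times are the same guesses used above, not measurements.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 86400


def total_boot_seconds(boot_seconds, boots_per_day):
    """Total time spent booting per day, summed over every container start."""
    return boot_seconds * boots_per_day


# The two cases from the text: 50 ms and 0.85 s, a million boots per day each.
fast = total_boot_seconds(0.050, 1_000_000)
typical = total_boot_seconds(0.85, 1_000_000)

print(f"{fast / SECONDS_PER_HOUR:.1f} hours")    # 13.9 hours
print(f"{typical / SECONDS_PER_DAY:.2f} days")   # 9.84 days
```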

I don't want to be all poo-poo on containers. They have some cool uses, and I've mentioned a few briefly above.

Honestly, being able to have your code be run by many people all over the world by shipping a single binary to many locations and thus getting better locality is pretty cool. Mobile games or PvP games benefit from this because you can connect to a server that has a low ping for you, and enables you to play on more-even footing.

Having the ability to "abstract away" servers is also rather nice. Fixing, upgrading, and generally maintaining servers is a pain, and if you can offload that to another business it can be a great boon to you. Services like Heroku which were once all-the-rage, allowing someone to code up an app, and push their git repository to them and see it live is really nice! It makes the development cycle really small and allows tiny businesses or even single developers to get shit done quickly. This doesn't scale as well as people would like, but it helps when getting started.

There are probably other areas that are useful that I haven't mentioned. But on the whole, how I see containers being used is just 💩.

Simply being a crotchety greybeard on the internet is easy. It's more difficult to come up with solutions. How much more difficult? Well, it depends. You can come up with suggestions, or do that and implement them. Implementing stuff is hard. Getting people to use your suggestion, is hard. Getting people to use your suggested implementation is very hard. There are some things I'm working on to make my life as a developer more enjoyable. Are people going to use my solutions? Probably not. Especially since I need to get over the Analysis paralysis to really start on some of my ideas.

This one honestly has an easy fix. It's so simple, yet most people who get this far and haven't table-flipped yet will almost certainly do so now. Here is the answer:

To ensure a uniform development environment for all of the devs, you need to check-in all of the dependencies of your code. This includes things that may already be installed on every system. The fact that it may be installed also means it may not be installed, thus you need to ensure the recipient has it by checking it in to whatever source control you have. Be that Git, Mercurial, or the `src` directory on your drive where you threw all the code so you can zip it and email it to your friends.

This does 2 things actually. Both of which are essential. First of all, it drives up the cost of adding "just another little library", because now you have to download the entire source tree of that library, and check it into your repo. This means - for instance - if you are doing machine learning, and using TensorFlow, you need to have the whole system installed as a local dependency in your app. For each machine learning app that you have you need to do this. Each and every one. Yes. All of them. The directory structure of your app would look something like this:

    \app
        readme.md
        \my_src
            detect_face.py
            detect_dog.py
            detect_detection.py
        \libs
            \TensorFlow
            \OpenCL
            \OpenCV
            \OpenOpening
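
For a Python app laid out like this, one way to make the code prefer the checked-in copies is to put `libs` at the front of the import path before importing anything else. This is a minimal sketch, not a packaging recommendation: `use_vendored_libs` is a name I made up, and it assumes the vendored libraries are importable straight from that directory (a library with native build steps, which TensorFlow has, needs more work than this).

```python
import sys
from pathlib import Path


def use_vendored_libs(app_root):
    """Make imports resolve against <app_root>/libs before anything installed system-wide."""
    libs_dir = Path(app_root) / "libs"
    if not libs_dir.is_dir():
        raise FileNotFoundError(f"expected vendored libraries in {libs_dir}")
    libs_path = str(libs_dir)
    if libs_path not in sys.path:
        # Index 0 means the checked-in copy wins over any system-wide install.
        sys.path.insert(0, libs_path)
    return libs_dir
```

A script such as `detect_face.py` would call `use_vendored_libs(Path(__file__).resolve().parent.parent)` as its first step, so every later import sees the checked-in versions first.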

If you want to get real pedantic about this (which I would recommend if you're running a business), add your language's standard lib here also. Who knows when Python is going to change how sort() works? What if one day it sorts in descending order instead of ascending? Would that be a breaking change? Probably, but they already made breaking changes and now the world is stuck with 2 incompatible versions of Python.

"But then I don't get all the nice bug-fixes that the upstream devs made to the software!" Oh, but you do! You just need to update it manually. And you should do that, after all, it's your code. I mean, it really is. If you're using open source software to build your app, all that code is now your responsibility. All of it. Your code. If it's not, you're saying it is someone else's code, and thus their responsibility to fix it. So are you paying them to fix it? Are you making PR's and helping to give back to the developer? Probably not. Relying on free code and the niceness therein is all well and good if you're not making a profit off of it. But once you're a business, and making business dollars, and shipping code (even if it's code to servers you own) it is now your code that you are responsible for. If libX has a security flaw it is your fault for using it, not the maintainers of libX for not having the time / energy / money to fix their own 💩 code. Relying on them means you are relying on - effectively - an unpaid intern to deliver business-critical infrastructure.

Honestly, this is a problem even in Linux distros. Debian ships with old versions of many libraries, and the default is to use shared libraries. Why? Because dumb reasons I don't want to get into right now. Compiling the libraries right into your executable, or shipping the *.dll's yourself are much better options. Doing this on a system means that your toy image viewer can use the latest libPNG, while the version of Gimp you downloaded years ago is still using the same version it works with, and the AAA game you're running is using a different version to display all the loot in the 💩 boxes you just got.

Otherwise, you're relying on the consumer of your app to have exactly what you need installed on their system. Unfortunately, in this situation, if your app fails because the user doesn't have the latest libPNG installed, they're not getting on the Tweeterverse to tell their followers how dumb they are because they're using libPNG 1.2 and not the required libPNG 1.7 your app needs...

Running a bunch of services, all isolated from each other, is really easy. They're called multitasking operating systems. They've been around since the 1970's or so. You're using one right now to read this document. It's not magic. If you want them isolated so they don't use more memory / storage / processing than you want them to, well, there are policies you can put in place. Google "setting memory limits on process linux". If doing that is too hard for you then using Docker to do the same is also too hard for you. I mean, you already have Linux installed, who knows if Docker will install with the correct version and then you're running a VM with Linux inside Linux so you can do what Linux already can do natively... 😒
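
As one example of such a policy, here is a minimal sketch that caps a child process's address space using Python's standard `resource` module, which wraps the POSIX `setrlimit` call. It assumes Linux; `run_with_memory_limit` is a name I made up, and the 1 GiB cap is an arbitrary number for illustration.

```python
import resource
import subprocess
import sys


def run_with_memory_limit(argv, max_bytes):
    """Run argv as a child process whose address space is capped at max_bytes."""

    def apply_limit():
        # Runs in the child after fork and before exec, so only the child is limited.
        resource.setrlimit(resource.RLIMIT_AS, (max_bytes, max_bytes))

    return subprocess.run(argv, preexec_fn=apply_limit, capture_output=True, text=True)


if __name__ == "__main__":
    one_gib = 1 << 30
    # The child simply reports the limit it was started with.
    child_code = "import resource; print(resource.getrlimit(resource.RLIMIT_AS)[0])"
    result = run_with_memory_limit([sys.executable, "-c", child_code], one_gib)
    print(result.stdout.strip())
```

A child that tries to grow past the cap gets allocation failures from the kernel instead of eating the whole machine. Limits on CPU time and file size go through the same call with different `RLIMIT_*` constants.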

That's the case if you don't have control over the language the app you need to run is written in, such as executables for various tasks. If you do have control over the implementation language (like when you're building your own app) then there is another simple answer for near-infinite scalability:

Make one Linux VM you can ship to Ammonia-Goggles and install Erlang on it. Run all of your services on that and it will magically handle hundreds of thousands or millions of requests, fail nicely, and you never have to turn it off to upgrade it. MAGIC!

Honestly people will hate this idea. Lots of people will. Erlang is cool technology but a weird language. With that being said, you can design systems in any language that do what Erlang does. Granted, it won't have 35+ years of bugfixes and enhancements, but you can do all this. I mean, from looking at the Erlang/OTP GitHub Repo it looks like they're probably using C to make the BEAM, Erlang's main VM implementation. And since both C and Erlang are Lambda Complete, any other language that can implement Gödel's General recursive functions can do the same. Joe Armstrong gave a talk and describes how you can achieve this here: https://www.youtube.com/watch?v=cNICGEwmXLU (his 6 rules for designing systems like Erlang start here: https://youtu.be/cNICGEwmXLU?t=1769)

Containers are generally cool, but so is fast food. Too much fast food is bad, the same with containers in my opinion. Need quick calories? Fine, have a double McWhopper with a Frosty and curly fries! Don't eat that every day as your main meal or you'll get obese and have other health issues. Containers and Virtual Machines in general are good and useful. But there is such a thing as too much of a good thing.

Does this mean I will never use containers? No. I will use any system that I need to in order to provide for my family, even if I don't particularly like how that system works. I use a gas vehicle to drive to and from work. Would I rather have an all electric vehicle and cut my emissions? Yes! Would I rather commute on a train and cut my emissions further? You bet! But the first one would require that I both have the money for a new vehicle, and am in need of a new vehicle (as trading in a perfectly good car for a new electric does not offset the emissions of building the new car). And the second would require millions or billions of dollars of infrastructure to be implemented in my area. The second is preferable, the first is more probable.

Unfortunately this may prevent me from getting work. But just like declaring you voted for Cthulhu for president might prevent you from being hired, that is the same as taking a stand against technology you don't like. It doesn't really prevent you from doing the work, it just means you don't particularly like it. And as long as any work offered isn't morally wrong, I don't see a reason why anyone who is able shouldn't do it.