Assumptions:

- We have a pre-trained network and want to perform transfer learning with it.
- Let's say we keep the first 5 layers of the pre-trained network and add our own layers on top of them.
- The output of layer 5 was given the name "layer5_out" when the network was built.
- We can perform transfer learning on this in 2 ways:
    1. Initialize the first 5 layers with the weights of the pre-trained network and freeze them during training.
    2. Initialize the first 5 layers with the weights of the pre-trained network and train them, i.e. update them during training.

Restore the network and get the tensor for the output of the 5th layer. We are assuming the model was saved with the `tf.saved_model` API, i.e. with `tf.saved_model.builder.SavedModelBuilder`, so that it can be loaded with `tf.saved_model.loader.load`.

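```python
import tensorflow as tf  # TensorFlow 1.x API

sess = tf.Session()
# `tags` and `export_directory` must match the ones used when saving the model
tf.saved_model.loader.load(sess, tags, export_directory)
graph = tf.get_default_graph()
# tensor names have the form "<op_name>:<output_index>", hence the ":0"
layer5_out = graph.get_tensor_by_name("layer5_out:0")
```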

When building the rest of the graph, call `tf.stop_gradient` on the output of the layer past which gradients should not be backpropagated. In our network that is layer 5.

```python
layer5_out = tf.stop_gradient(layer5_out)
```

Skip this if you want to train the layers of the pre-trained network as well.
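The effect of `tf.stop_gradient` can be illustrated with a framework-free toy (plain Python, not TensorFlow): a two-"layer" scalar model `y_hat = w2 * (w1 * x)` trained by gradient descent on a squared error. The hypothetical `freeze_first` flag plays the role of `stop_gradient`: no gradient reaches `w1`, so it keeps its "pre-trained" value while `w2` is still updated. The model, data and learning rate are made up for illustration.

```python
def train(w1, w2, data, lr=0.01, steps=100, freeze_first=True):
    """Gradient descent on the toy model y_hat = w2 * (w1 * x).

    freeze_first=True mimics tf.stop_gradient on the first layer's output:
    no gradient flows back to w1, so it keeps its initial (pre-trained) value.
    """
    for _ in range(steps):
        grad_w1 = 0.0
        grad_w2 = 0.0
        for x, y in data:
            h = w1 * x        # output of the "pre-trained" layer
            y_hat = w2 * h    # output of the new layer
            err = y_hat - y   # derivative of 0.5 * (y_hat - y)**2 w.r.t. y_hat
            grad_w2 += err * h
            if not freeze_first:
                grad_w1 += err * w2 * x  # chain rule back through the new layer
        # both gradients were computed with the old weights, then applied together
        w2 -= lr * grad_w2 / len(data)
        w1 -= lr * grad_w1 / len(data)
    return w1, w2


data = [(1.0, 6.0), (2.0, 12.0), (3.0, 18.0)]  # targets follow y = 6x
frozen = train(w1=2.0, w2=1.0, data=data, freeze_first=True)
finetuned = train(w1=2.0, w2=1.0, data=data, freeze_first=False)
print(frozen, finetuned)
```

With `freeze_first=True` only `w2` moves (towards 3, so that `2 * w2` fits `y = 6x`); with `freeze_first=False` both weights change, which corresponds to the second way of performing transfer learning.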

```python
with tf.variable_scope("trainable_section"):
    layer6_out = tf.layers.conv2d(layer5_out, ....)
    # add more layers as required

# get the variables declared in the scope "trainable_section"
trainable_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, "trainable_section")
```

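Keep this step even if you want to train the layers of the pre-trained network as well: the new layers still have to be built, and `trainable_vars` is needed to initialize them.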
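Note that the `scope` argument of `tf.get_collection` is treated as a regular expression matched against the start of each variable name (TensorFlow filters with `re.match`), not as an exact scope name. The plain-Python sketch below reproduces only that filtering on a hypothetical list of variable names, to show which variables end up in `trainable_vars`. It also shows a pitfall: a sibling scope such as `trainable_section_2` (hypothetical here) matches too, while a trailing slash restricts the match to the intended scope.

```python
import re


def filter_by_scope(variable_names, scope):
    """Mimic the name filtering of tf.get_collection(key, scope):
    keep names for which re.match(scope, name) succeeds (a prefix match)."""
    pattern = re.compile(scope)
    return [name for name in variable_names if pattern.match(name)]


# Hypothetical variable names: restored layers plus newly added layers.
names = [
    "layer1/kernel:0",
    "layer5/kernel:0",
    "trainable_section/conv2d/kernel:0",
    "trainable_section/conv2d/bias:0",
    "trainable_section_2/dense/kernel:0",
]

print(filter_by_scope(names, "trainable_section"))   # also picks up trainable_section_2
print(filter_by_scope(names, "trainable_section/"))  # only the intended scope
```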

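```python
# `loss` and `optimizer` are defined for your task; var_list restricts updates to the new layers
train_op = optimizer.minimize(loss, var_list=trainable_vars)
```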

Skip this if you want to train the layers of the pre-trained network as well.

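```python
# initialize only the new variables; tf.global_variables_initializer()
# would overwrite the weights restored from the pre-trained model
trainable_variable_initializers = [var.initializer for var in trainable_vars]
sess.run(trainable_variable_initializers)
```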

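This step is required for both ways of performing transfer learning (see the assumptions above). If your optimizer creates variables of its own (for example, the moment estimates of `tf.train.AdamOptimizer`), they are not in `trainable_vars` and must be initialized as well.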

Hi,

I have implemented Tiny YOLO with TensorFlow and saved the model trained for 80 classes. After reloading the model, I want to change my last convolutional layer to have 40 filters. How can I do it?

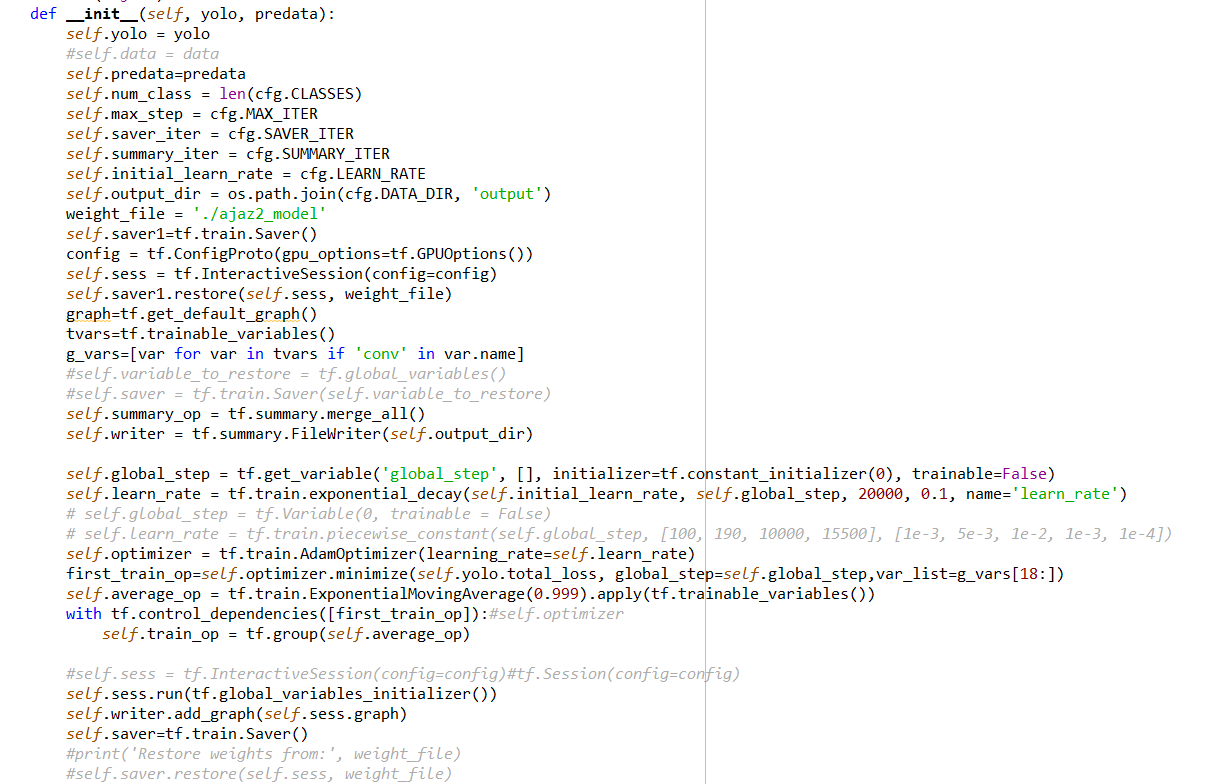

Here is my initializer code:

And here are the names of the variables in the last layers:

```
[<tf.Variable 'conv6_1/kernel:0' shape=(3, 3, 512, 1024) dtype=float32_ref>,
 <tf.Variable 'conv6_1_bn/gamma:0' shape=(1024,) dtype=float32_ref>,
 <tf.Variable 'conv6_1_bn/beta:0' shape=(1024,) dtype=float32_ref>,
 <tf.Variable 'conv6_3/kernel:0' shape=(3, 3, 1024, 1024) dtype=float32_ref>,
 <tf.Variable 'conv6_3_bn/gamma:0' shape=(1024,) dtype=float32_ref>,
 <tf.Variable 'conv6_3_bn/beta:0' shape=(1024,) dtype=float32_ref>,
 <tf.Variable 'conv_dec/kernel:0' shape=(1, 1, 1024, 40) dtype=float32_ref>,
 <tf.Variable 'conv_dec/bias:0' shape=(40,) dtype=float32_ref>]
```
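One possible approach, assuming the 80-class model was saved as a checkpoint with `tf.train.Saver`, is to restore every variable except the ones whose shape changed (here `conv_dec/kernel` and `conv_dec/bias`) and initialize those freshly, in the same spirit as the answer above. The plain-Python sketch below shows only the name-based split, using the names listed above; the helper `split_for_restore` and the `"conv_dec/"` prefix are illustrative assumptions. In TensorFlow 1.x the variables in the first list could then be passed as `var_list` to `tf.train.Saver` for restoring. If the layers use batch normalization with moving statistics, build the name list from all global variables rather than only trainable ones, so that the moving averages are restored too.

```python
def split_for_restore(variable_names, reinit_prefixes):
    """Split variable names into (restore, reinitialize) lists.

    Names starting with any prefix in `reinit_prefixes` belong to layers whose
    shape changed, so they cannot be loaded from the old checkpoint.
    """
    restore, reinit = [], []
    for name in variable_names:
        if name.startswith(tuple(reinit_prefixes)):
            reinit.append(name)
        else:
            restore.append(name)
    return restore, reinit


names = [
    "conv6_1/kernel:0",
    "conv6_1_bn/gamma:0",
    "conv6_1_bn/beta:0",
    "conv6_3/kernel:0",
    "conv6_3_bn/gamma:0",
    "conv6_3_bn/beta:0",
    "conv_dec/kernel:0",
    "conv_dec/bias:0",
]
# the trailing slash avoids accidentally matching e.g. a "conv_dec2" scope
restore, reinit = split_for_restore(names, ["conv_dec/"])
print(reinit)
```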