Last active: March 30, 2021 08:52


Gist: taking/10ed66f778f65573ff58f43f008e6161

From installing Kubernetes to configuring an Istio service mesh with Helm


#!/bin/bash
RED=$(tput setaf 1)
GREEN=$(tput setaf 2)
NC=$(tput sgr0)

# Check permission: this script must run as root
if [ "$EUID" -ne 0 ]; then
  echo "${RED}Please run as root${NC}"
  exit 1
fi

############ Language change ###############
echo "${RED}LANGUAGE CHANGE${NC}"
localedef -c -i ko_KR -f UTF-8 ko_KR.utf8
localectl set-locale LANG=ko_KR.utf8

############### Timezone ###################
echo "${RED}TIMEZONE CHANGE${NC}"
timedatectl set-timezone Asia/Seoul
echo '[Timezone] Change Success'

############ Hostname change ###############
echo "${RED}HOSTNAME CHANGE${NC}"
read -p "New hostname (e.g. k8s-worker): " uhost
hostnamectl set-hostname "$uhost"
echo '[Hostname] Change Success'

############ Mirror change ###############
echo "${RED}APT MIRROR CHANGE${NC}"
sed -i 's/nova.clouds.archive.ubuntu.com/mirror.kakao.com/g' /etc/apt/sources.list
echo '[Mirror] Change Success'

############ Initial update ###############
apt-get update -y
apt-get install vim apt-transport-https gnupg2 curl -y

############ Docker install ###############
echo "${RED}DOCKER INSTALL${NC}"
apt-get install docker.io -y
systemctl enable --now docker
docker --version
echo '[Docker] Success'

############ K8s & Helm install ###############
echo "${RED}K8S & HELM INSTALL${NC}"
# Note: the legacy apt.kubernetes.io repository has since been deprecated and frozen; newer releases are published at pkgs.k8s.io
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
curl https://helm.baltorepo.com/organization/signing.asc | sudo apt-key add -
echo "deb https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list
apt-get update -y
apt-get install kubelet kubeadm kubectl helm -y
# Pin the versions so a routine apt upgrade does not move the cluster components
apt-mark hold kubelet kubeadm kubectl
echo '[k8s, Helm] Success'

############ Swap off ###############
echo "${RED}SWAP OFF${NC}"
# Turn swap off now, and comment out /swap.img in /etc/fstab (backup: /etc/fstab.bak) so it stays off after a reboot
swapoff -a && sudo sed -i.bak 's/\/swap\.img/#\/swap\.img/g' /etc/fstab
echo '[Swap Off] Success'
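The sed above only matches a swap file named /swap.img (the Ubuntu default). A slightly more general sketch, assuming swap entries can be recognized by the third fstab field (the filesystem type) being `swap`, comments out every active swap line instead; the function name `disable_swap_entries` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out every uncommented fstab entry whose
# filesystem type (3rd field) is "swap". Keeps a .bak copy like the script above.
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "${fstab}.bak" || return 1
  # Skip lines that are already comments; prefix "#" to swap entries; print the rest unchanged
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "${fstab}.bak" > "$fstab"
}
```

Run `swapoff -a` first, as the script does; editing fstab only affects the next boot.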

############ Kernel network settings ###############
echo "${RED}SET IP FORWARDING${NC}"
sysctl -w net.ipv4.ip_forward=1
echo "${RED}SET NETWORK CONFIGURATION${NC}"
# Load br_netfilter now and on every boot so bridged pod traffic passes through iptables
modprobe br_netfilter
echo br_netfilter > /etc/modules-load.d/k8s.conf
# Persist the settings; `sysctl --system` reloads every file under /etc/sysctl.d
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
sysctl --system
echo '[Kernel Network Edit] Success'
systemctl daemon-reload
systemctl restart kubelet
systemctl enable kubelet
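kubeadm's preflight checks expect the three kernel settings above to be 1. A small read-only sketch that reports whether they are active: each sysctl key maps to a file under /proc/sys (dots become slashes). The function name and its optional root-directory argument are hypothetical; the argument only exists so the check can be pointed at a test tree.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify the settings written to /etc/sysctl.d/k8s.conf.
# Returns non-zero if any value is not 1 (or the key does not exist yet).
check_k8s_sysctl() {
  local root="${1:-}" key file value status=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
    file="${root}/proc/sys/$(printf '%s' "$key" | tr . /)"
    value="$(cat "$file" 2>/dev/null || true)"
    if [ "$value" = "1" ]; then
      echo "OK   $key = 1"
    else
      # The net.bridge.* keys are missing until br_netfilter is loaded
      echo "FAIL $key = ${value:-missing}"
      status=1
    fi
  done
  return "$status"
}
```

On a node, `check_k8s_sysctl` with no argument reads the live /proc/sys values.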

########### Docker daemon config ##############
echo "${RED}DOCKER DAEMON EDIT${NC}"
# Use the systemd cgroup driver (recommended for kubeadm clusters) and cap container log size
cat <<EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
mkdir -p /etc/systemd/system/docker.service.d
systemctl daemon-reload
systemctl restart docker

############ Firewall rules with UFW ###############
echo "${RED}UFW RULES UPDATED${NC}"
ufw allow ssh
ufw allow 6443    # kube-apiserver
ufw allow 2379    # etcd client API
ufw allow 2380    # etcd peer API
ufw allow 10250   # kubelet API
ufw allow 10251   # kube-scheduler
ufw allow 10252   # kube-controller-manager
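The same rules can be kept in one list so each port stays next to the component that uses it. A sketch, assuming the control-plane port set used above; the array, the function name, and the `DRY_RUN=1` switch (which prints the ufw commands instead of running them) are all hypothetical.

```bash
#!/usr/bin/env bash
# Control-plane ports opened by the script above, as "port:component" pairs.
K8S_CONTROL_PLANE_PORTS=(
  "6443:kube-apiserver"
  "2379:etcd-client"
  "2380:etcd-peer"
  "10250:kubelet"
  "10251:kube-scheduler"
  "10252:kube-controller-manager"
)

# Hypothetical helper: allow SSH plus every port above; DRY_RUN=1 only prints.
allow_k8s_ports() {
  local run="" entry
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  $run ufw allow ssh
  for entry in "${K8S_CONTROL_PLANE_PORTS[@]}"; do
    # ${entry%%:*} drops the ":component" suffix, leaving the port number
    $run ufw allow "${entry%%:*}"
  done
}
```

Preview with `DRY_RUN=1 allow_k8s_ports`, then run it as root without DRY_RUN.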

############ Init and network setup ###############
echo "${RED}K8S INSTALLING${NC}"
my_vm_internal_ip="$(hostname -I | awk '{print $1}')"
# 10.244.0.0/16 is the default Network in kube-flannel.yml
flannel_cidr="10.244.0.0/16"
echo '#### K8s Init ####'
echo '[Kubernetes Init Select]'
echo 'Network Add-on is [Flannel]'
echo 'Flannel Applying...'
kubeadm init --pod-network-cidr="${flannel_cidr}" --apiserver-advertise-address="${my_vm_internal_ip}"
echo ' '
echo '######## Copy the join command (token) above and run it on the other cluster nodes. ############'
echo ' '
mkdir -p "$HOME/.kube"
cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
chown "$(id -u):$(id -g)" "$HOME/.kube/config"
#export KUBECONFIG=/etc/kubernetes/admin.conf
echo "${RED}NETWORK - FLANNEL INSTALLING${NC}"
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
echo '[flannel] Success'

########### Example domains ###############
# Map this node's internal IP to its new hostname
cat <<EOF >> /etc/hosts
${my_vm_internal_ip} ${uhost}
EOF

########### Optional #############
# Allow regular pods to be scheduled on the master node (e.g. single-node clusters)
# kubectl taint nodes --all node-role.kubernetes.io/master-

Other: Calico setup (instead of Flannel)

echo 'Network Add-on is [Calico]'

echo 'Calico Applying...'

calico_pod_cidr="10.240.0.0/16"

calico_network_cidr="10.110.0.0/16"

kubeadm init --pod-network-cidr=${calico_pod_cidr} --service-cidr=${calico_network_cidr} --apiserver-advertise-address=${my_vm_internal_ip} &&

mkdir -p $HOME/.kube &&

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config &&

chown $(id -u):$(id -g) $HOME/.kube/config

kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

curl -O -L https://github.com/projectcalico/calicoctl/releases/download/v3.18.1/calicoctl

chmod +x calicoctl

mv calicoctl /usr/local/bin
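tigera-operator.yaml installs only the operator; Calico itself is created from an `Installation` resource, which Calico's quick-start applies as custom-resources.yaml. That sample's IP pool is 192.168.0.0/16, so with the custom `calico_pod_cidr` above the pool should be changed to match. A minimal sketch, assuming the `operator.tigera.io/v1` fields from Calico's sample custom resources (blockSize, cidr, encapsulation, natOutgoing, nodeSelector); the generator function is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a Calico operator Installation whose IP pool
# matches the pod CIDR passed to `kubeadm init --pod-network-cidr`.
calico_installation() {
  local pod_cidr="$1"
  cat <<EOF
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  calicoNetwork:
    ipPools:
    - blockSize: 26
      cidr: ${pod_cidr}
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
EOF
}
```

Usage: `calico_installation "${calico_pod_cidr}" | kubectl create -f -`. The pool is set at install time, so it is easier to get right before the first apply than to change afterwards.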

To reset Kubernetes:

kubeadm reset -f

rm -rf /etc/cni /etc/kubernetes /var/lib/dockershim /var/lib/etcd /var/lib/kubelet /var/run/kubernetes ~/.kube/

When using the Flannel network:

kubeadm reset -f

rm -rf /etc/cni /etc/kubernetes /var/lib/dockershim /var/lib/etcd /var/lib/kubelet /var/run/kubernetes ~/.kube/ &&

sudo ip link del cni0 &&

sudo ip link del flannel.1

When using Flannel + Submariner:

kubeadm reset -f

rm -rf /etc/cni /etc/kubernetes /var/lib/dockershim /var/lib/etcd /var/lib/kubelet /var/run/kubernetes ~/.kube/ &&

sudo ip link del cni0 &&

sudo ip link del flannel.1 &&

sudo ip link del vx-submariner
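The three reset variants differ only in which CNI interfaces are removed. `ip link del` fails when an interface does not exist (for example on a node where Flannel never started), which stops the `&&` chain. A sketch that merges the variants and skips missing interfaces; the function name, its `none|flannel|submariner` argument, and the `DRY_RUN=1` preview switch are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reset_node [none|flannel|submariner]
# DRY_RUN=1 prints the commands instead of running them.
reset_node() {
  local cni="${1:-none}" run="" iface
  local -a ifaces=()

  # Validate the argument before touching anything
  case "$cni" in
    none) ;;
    flannel) ifaces=(cni0 flannel.1) ;;
    submariner) ifaces=(cni0 flannel.1 vx-submariner) ;;
    *) echo "unknown CNI: $cni" >&2; return 1 ;;
  esac
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"

  $run kubeadm reset -f
  $run rm -rf /etc/cni /etc/kubernetes /var/lib/dockershim /var/lib/etcd \
    /var/lib/kubelet /var/run/kubernetes "$HOME/.kube/"

  for iface in "${ifaces[@]}"; do
    # Only delete interfaces that actually exist
    if ip link show dev "$iface" >/dev/null 2>&1; then
      $run ip link del "$iface"
    fi
  done
}
```

Preview with `DRY_RUN=1 reset_node submariner`, then run it as root without DRY_RUN.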

To test kubeadm init without changing the node, pass the --dry-run option; kubeadm then only prints what it would do.

Installing Istio (with Helm)

Description

- For use in a multi-cluster environment

- For service mesh features such as monitoring metrics collected through Istio's Envoy proxies

-> See https://gist.github.com/taking/d24a199b923e6b17fd12ee9e458d791e

Kubernetes multi-cluster setup

- Sets up a multi-cluster environment when there are two K8s clusters, as follows:

- (Cluster1) k8s-master, k8s-worker

- (Cluster2) k8s-master, k8s-worker

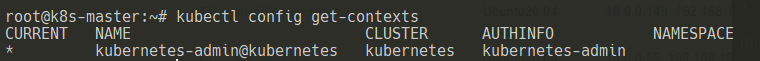

(Cluster1) Check the current contexts

kubectl config get-contexts

(Cluster1) Rename the default context

- Config file path

vim ~/.kube/config

# Run on all clusters

_hostname="$(hostname)"

kubectl label nodes ${_hostname} submariner.io/gateway=true --overwrite

kubectl taint nodes --all node-role.kubernetes.io/master-

kubectl get configmaps -n kube-system kubeadm-config -o yaml | sed "s/ clusterName: kubernetes/ clusterName: ${_hostname}/g" | kubectl replace -f - &&

kubectl config set-context kubernetes-admin@kubernetes --cluster=${_hostname} &&

kubectl config set-context kubernetes-admin@kubernetes --user=${_hostname} &&

kubectl config rename-context kubernetes-admin@kubernetes ${_hostname} &&

sed -i "s/ name: kubernetes\$/ name: ${_hostname}/" ~/.kube/config &&

sed -i "s/- name: kubernetes-admin\$/- name: ${_hostname}/" ~/.kube/config &&

kubectl get nodes
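Why the two sed patterns are anchored with `$`: without it, `s/ name: kubernetes/…/` also matches inside `- name: kubernetes-admin` and turns the user into `<hostname>-admin`, so the second sed no longer matches and the context's `user:` points at a user that does not exist. A sketch of just the file rename as a reusable function, assuming kubeadm's default entry names (`kubernetes`, `kubernetes-admin`); the function name is hypothetical, and the kubectl set-context/rename-context steps above are still needed for the context entry.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rename kubeadm's default cluster ("kubernetes") and
# user ("kubernetes-admin") entries in a kubeconfig file to NEW_NAME.
# The trailing $ stops " name: kubernetes" from also matching "- name: kubernetes-admin".
rename_kubeconfig_entries() {
  local file="$1" new_name="$2"
  sed -i \
    -e "s/ name: kubernetes\$/ name: ${new_name}/" \
    -e "s/- name: kubernetes-admin\$/- name: ${new_name}/" \
    "$file"
}
```

Usage: `rename_kubeconfig_entries ~/.kube/config "$(hostname)"`.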

- Repeat the same steps on each cluster

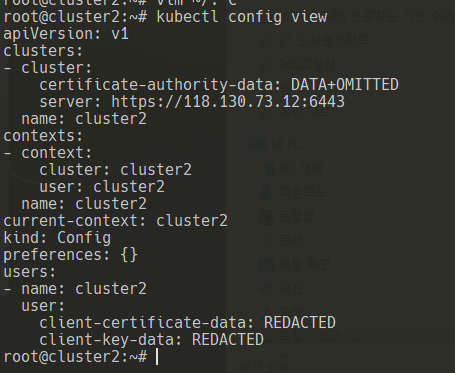

Copy cluster3's kubeconfig file to cluster2 and merge the two (./config1 and ./config2 below are the two clusters' kubeconfig files)

cp ~/.kube/config ~/.kube/config-backup

KUBECONFIG=./config1:./config2 kubectl config view --flatten > ~/.kube/merged_kubeconfig &&

mv ~/.kube/merged_kubeconfig ~/.kube/config

kubectl config view

Verify

kubectl get ns --context=cluster2

kubectl get ns --context=cluster3

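Notes

o Clusters

- As the name says, this is information about the Kubernetes clusters

- In this example there are two: a Kubernetes cluster installed on my PC and one installed on GCP

- The clusters were renamed to local-cluster and gcp-cluster so they are easy to tell apart

- A newly created cluster gets a somewhat cryptic name, so it is worth renaming it to something recognizable, as above

o Users

- Information about the users that access the clusters

- The required fields differ from environment to environment

- These were likewise renamed to local-user and gcp-user for readability

o Contexts

- A context is a combination of a cluster and a user

- Its cluster field references the name of a cluster defined above, and its user field references the name of a user defined above

- So local-context is one set meaning "access local-cluster with the local-user credentials"

o current-context

- Specifies the context currently in use

- Because it is set to local-context, kubectl commands entered in the terminal manage the local Kubernetes cluster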
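The structure described in these notes can be sketched as a kubeconfig skeleton. The names local-cluster, gcp-cluster, local-user, gcp-user, and local-context come from the notes; the server addresses, the `gcp-context` name, and the empty `user: {}` credentials are placeholders, since real values depend on each environment. The writer function is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a skeleton kubeconfig showing how clusters,
# users, contexts and current-context fit together. Servers are placeholders.
write_sample_kubeconfig() {
  local out="$1"
  cat > "$out" <<'EOF'
apiVersion: v1
kind: Config
clusters:
- name: local-cluster
  cluster:
    server: https://127.0.0.1:6443
- name: gcp-cluster
  cluster:
    server: https://203.0.113.10
users:
- name: local-user
  user: {}
- name: gcp-user
  user: {}
contexts:
- name: local-context          # local-user -> local-cluster
  context:
    cluster: local-cluster
    user: local-user
- name: gcp-context
  context:
    cluster: gcp-cluster
    user: gcp-user
current-context: local-context # kubectl targets local-cluster by default
EOF
}
```

`KUBECONFIG=/tmp/sample-kubeconfig kubectl config get-contexts` lists both contexts with local-context marked as current, without contacting any server.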

Connecting from the master node to a worker node

Copy the key from the local machine to the master node

scp -i taking.pem taking.pem ubuntu@192.168.150.180:/home/ubuntu/

Set up the SSH key under the ubuntu account

mv taking.pem ~/.ssh

chmod 600 ~/.ssh/taking.pem

chmod 700 ~/.ssh/

ssh -i ~/.ssh/taking.pem ubuntu@10.0.0.62
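To avoid passing `-i` on every connection, the key can be registered in ~/.ssh/config under a host alias. A sketch that appends a `Host` block to the given config file; the function name and the alias `k8s-worker` in the usage line are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an SSH Host alias so `ssh <name>` uses the key.
add_ssh_host() {
  local config="$1" name="$2" host="$3" user="$4" key="$5"
  mkdir -p "$(dirname "$config")" && chmod 700 "$(dirname "$config")"
  cat >> "$config" <<EOF
Host ${name}
    HostName ${host}
    User ${user}
    IdentityFile ${key}
EOF
  # ssh refuses config files that other users can write to
  chmod 600 "$config"
}
```

Usage: `add_ssh_host ~/.ssh/config k8s-worker 10.0.0.62 ubuntu ~/.ssh/taking.pem`, after which `ssh k8s-worker` connects with the key.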

Installing Metrics-Server

- OO

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

apt install jq -y

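Resetting Docker (stop and remove all containers, then delete all images)

docker ps -aq | xargs -r docker stop
docker ps -aq | xargs -r docker rm
docker images -q | sort -u | xargs -r docker rmi -f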

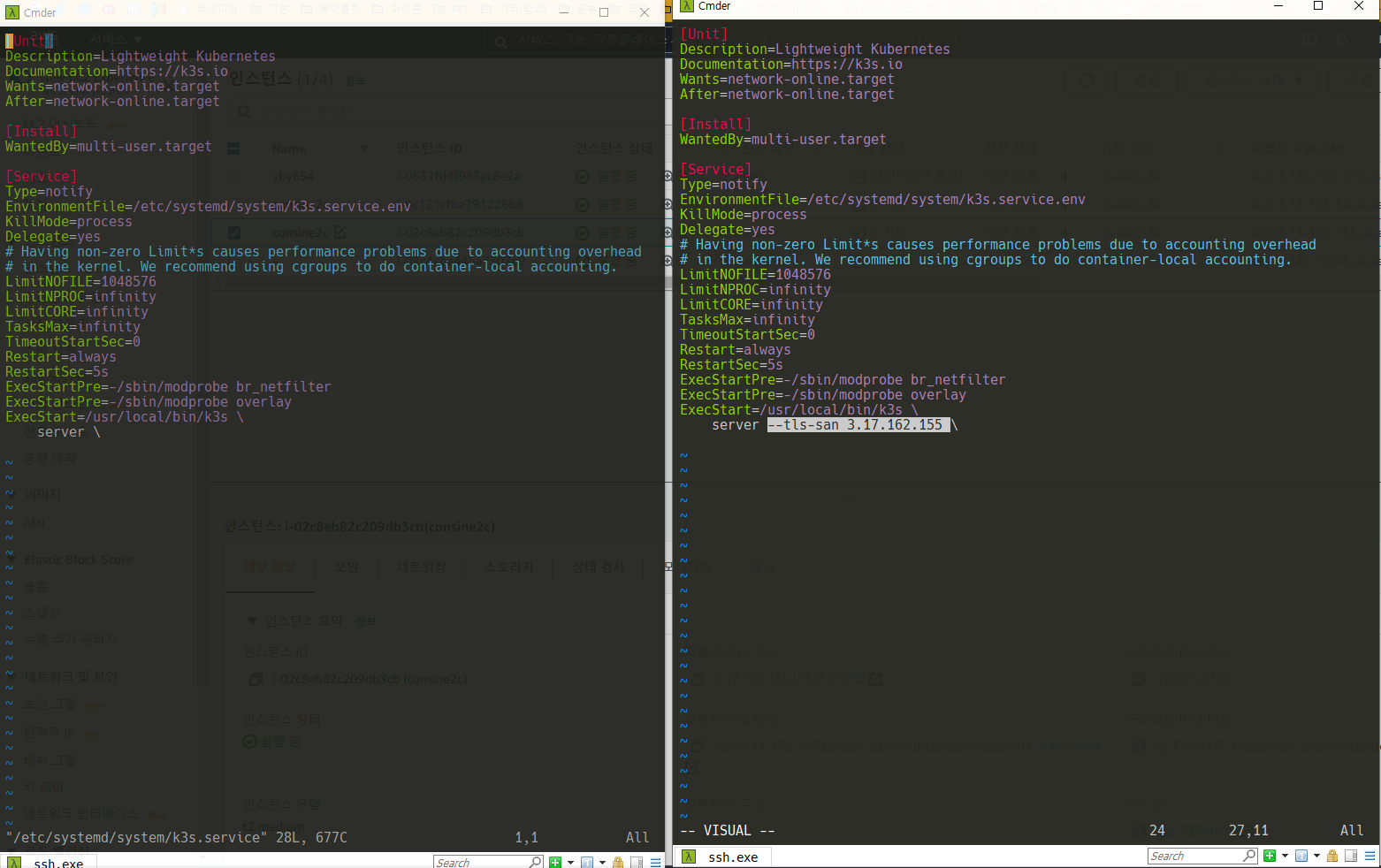

k3s

#!/bin/bash
apt update
apt upgrade -y
cluster_cidr="172.20.0.0/16"   # pod network
service_cidr="172.24.0.0/16"   # service network
cluster_dns="172.24.0.10"      # CoreDNS ClusterIP: must be a single IP inside service_cidr
curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="server --cluster-cidr ${cluster_cidr} --service-cidr ${service_cidr} --cluster-dns ${cluster_dns} --disable servicelb --disable metrics-server --disable local-storage" sh -
kubectl get nodes
echo 'config file path = /etc/rancher/k3s/k3s.yaml'

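- The k3s config file is /etc/rancher/k3s/k3s.yaml. To access the cluster from outside, change 127.0.0.1 in the file's server field to the node's public IP.

- Add a node with: curl -sfL https://get.k3s.io | K3S_URL=https://serverip:6443 K3S_TOKEN=mytoken sh - (the token is stored on the server in /var/lib/rancher/k3s/server/node-token)

- Because servicelb was excluded at install time, install MetalLB if you need LoadBalancer services.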
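Instead of editing k3s.yaml in place, a copy can be made for use from another machine. A sketch, assuming the API server uses the default https://127.0.0.1:6443 entry that k3s writes; the function name and argument order are hypothetical. If the IP is not one of the node's own addresses (for example a NAT'd public IP), the server certificate may also need it, which k3s's `--tls-san` option provides.

```bash
#!/usr/bin/env bash
# Hypothetical helper: export_k3s_kubeconfig IP DEST [SRC]
# Copies a k3s kubeconfig, pointing its server at IP instead of 127.0.0.1.
export_k3s_kubeconfig() {
  local ip="$1" dest="$2" src="${3:-/etc/rancher/k3s/k3s.yaml}"
  # Dots are escaped so only the literal 127.0.0.1 address is replaced
  sed "s#https://127\.0\.0\.1:6443#https://${ip}:6443#" "$src" > "$dest" &&
    chmod 600 "$dest"
}
```

The original file stays untouched, so the local `kubectl` on the server keeps working.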

kubectl config set-context default --cluster='cluster-1' &&

kubectl config set-context default --user='cluster-1' &&

kubectl config rename-context default cluster-1 &&

sed -i 's/ name: default/ name: cluster-1/g' /etc/rancher/k3s/k3s.yaml &&

sed -i 's/- name: default/- name: cluster-1/g' /etc/rancher/k3s/k3s.yaml &&

kubectl get nodes

kubectl -n kube-system create serviceaccount cluster-1

kubectl create clusterrolebinding cluster-1 \
  --clusterrole=cluster-admin \
  --serviceaccount=kube-system:cluster-1

systemctl daemon-reload

systemctl restart k3s

MetalLB

- Mentioned briefly above; repeated here so the steps can be followed in order

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/namespace.yaml

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/metallb.yaml

kubectl create secret generic -n metallb-system memberlist \
  --from-literal=secretkey="$(openssl rand -base64 128)"

- Change the addresses entry below to your cluster's IP range before applying

cat <<EOF > ~/metallb-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.20.0.0/16
EOF

kubectl apply -f ~/metallb-configmap.yaml

- If you can be assigned public IPs, the pool can be given as a start-end range like this:

      addresses:
      - 192.168.100.100-192.168.100.250
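If kube-proxy runs in IPVS mode, MetalLB's documentation calls for enabling strictARP in the kube-proxy ConfigMap, and it does so by piping the ConfigMap through sed. The sketch below wraps that filter in a hypothetical function so the change can be previewed with `kubectl diff` before it is applied.

```bash
#!/usr/bin/env bash
# Flip strictARP from false to true in a kube-proxy ConfigMap read from stdin.
enable_strict_arp() {
  sed -e 's/strictARP: false/strictARP: true/'
}

# Usage on a cluster (requires kubectl access):
#   kubectl get configmap kube-proxy -n kube-system -o yaml \
#     | enable_strict_arp | kubectl diff -f - -n kube-system    # preview
#   kubectl get configmap kube-proxy -n kube-system -o yaml \
#     | enable_strict_arp | kubectl apply -f - -n kube-system   # apply
```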


Installing MetalLB

Description

When using IPVS

Applying the MetalLB configmap

Installing the Hello Kubernetes sample

Connect: IP:8181