Velero Installation with Helm

Prerequisites: Kubernetes 1.30+, Helm 3.15.0+

MinIO (S3-compatible storage for the backups)

```bash
bucketName=velero
accessKey=taking-access-key
secretKey=taking-secret-key
```
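The keys above read like sample values. If you would rather not reuse them, here is a minimal sketch that generates random hex keys from `/dev/urandom` with `od`. The `rand_hex` helper is hypothetical, and the lengths (8 and 32 hex characters) are arbitrary choices, not MinIO requirements.

```bash
#!/usr/bin/env bash
# rand_hex BYTES: print BYTES random bytes from /dev/urandom as lowercase hex
# (two characters per byte). od reads exactly BYTES bytes, so no pipe is cut short.
rand_hex() {
  od -v -An -N"${1:-16}" -tx1 /dev/urandom | tr -d ' \n'
}

bucketName=velero
accessKey="velero-$(rand_hex 4)"   # "velero-" + 8 hex characters
secretKey="$(rand_hex 16)"         # 32 hex characters
echo "accessKey=${accessKey}"      # the secret is deliberately not printed
```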

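```bash
helm repo add minio https://charts.min.io/
helm repo update minio

# Single-node MinIO with one bucket and one read/write user for Velero.
# - replicas only takes effect in distributed mode, so it is not set here.
# - Extra server env vars such as MINIO_REGION go under the chart's environment map.
# - Keys containing [0] are quoted so shells like zsh don't treat them as globs.
# - The memory request is lowered from the chart's default.
helm install minio minio/minio \
  --create-namespace \
  --namespace minio-system \
  --set mode=standalone \
  --set persistence.size=10Gi \
  --set environment.MINIO_REGION=us-east-1 \
  --set "buckets[0].name=${bucketName}" \
  --set "buckets[0].policy=none" \
  --set "buckets[0].purge=false" \
  --set "users[0].accessKey=${accessKey}" \
  --set "users[0].secretKey=${secretKey}" \
  --set "users[0].policy=readwrite" \
  --set resources.requests.memory=10Gi
```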
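The same MinIO settings can also live in a values file, which sidesteps shell quoting of the `buckets[0]`-style keys. This is a sketch under two assumptions: the chart keys are the ones the `--set` flags above use, and extra server environment variables such as `MINIO_REGION` go under the chart's `environment` map. `write_minio_values` and `minio-values.yaml` are hypothetical names, and `replicas` is left out because it only matters in distributed mode.

```bash
#!/usr/bin/env bash
# write_minio_values [FILE]: render the MinIO chart values into FILE.
# Reads bucketName, accessKey and secretKey from the current shell.
write_minio_values() {
  local out=${1:-minio-values.yaml}
  cat <<EOF > "$out"
mode: standalone
persistence:
  size: 10Gi
environment:
  MINIO_REGION: us-east-1
buckets:
  - name: ${bucketName}
    policy: none
    purge: false
users:
  - accessKey: ${accessKey}
    secretKey: ${secretKey}
    policy: readwrite
resources:
  requests:
    memory: 10Gi
EOF
}

bucketName=velero
accessKey=taking-access-key
secretKey=taking-secret-key
write_minio_values minio-values.yaml

# Then install with:
#   helm install minio minio/minio --create-namespace \
#     --namespace minio-system -f minio-values.yaml
```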

Velero

```bash
helm repo add vmware-tanzu https://vmware-tanzu.github.io/helm-charts/
helm repo update vmware-tanzu
```

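velero-values.yaml (Local: MinIO running in the same cluster)

```bash
cat <<EOF > velero-values.yaml
initContainers:
  # The AWS plugin version must be compatible with the Velero server version
  # (see the plugin's compatibility matrix).
  - name: velero-plugin-for-aws
    image: velero/velero-plugin-for-aws:v1.10.1
    imagePullPolicy: IfNotPresent
    volumeMounts:
      - mountPath: /target
        name: plugins

configuration:
  # Back up pod volumes with file-system backup (node-agent) by default
  defaultVolumesToFsBackup: true
  backupStorageLocation:
    - name: minio
      provider: aws
      bucket: velero            # must match bucketName
      accessMode: ReadWrite
      default: true
      config:
        region: us-east-1
        s3ForcePathStyle: true
        s3Url: http://minio.minio-system.svc.cluster.local:9000
        # publicUrl is used for CLI download links (e.g. velero backup logs);
        # a cluster-internal name only works where that name resolves.
        publicUrl: http://minio.minio-system.svc.cluster.local:9000
  volumeSnapshotLocation:
    - name: minio
      provider: aws
      config:
        region: us-east-1

credentials:
  useSecret: true
  secretContents:
    # Must match users[0].accessKey / users[0].secretKey from the MinIO install
    cloud: |
      [default]
      aws_access_key_id = {your-minio-access-key}
      aws_secret_access_key = {your-minio-secret-key}

deployNodeAgent: true
EOF
```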

velero-values.yaml (External: MinIO outside the cluster at 192.168.0.101)

```bash
cat <<EOF > velero-values.yaml
initContainers:
  - name: velero-plugin-for-aws
    image: velero/velero-plugin-for-aws:v1.10.1
    imagePullPolicy: IfNotPresent
    volumeMounts:
      - mountPath: /target
        name: plugins

configuration:
  defaultVolumesToFsBackup: true
  backupStorageLocation:
    - name: minio
      provider: aws
      bucket: velero
      accessMode: ReadWrite
      default: true
      config:
        region: us-east-1
        s3ForcePathStyle: true
        # This address has to be reachable from the Velero pods (s3Url)
        # and from wherever the CLI runs (publicUrl).
        s3Url: http://192.168.0.101:9000
        publicUrl: http://192.168.0.101:9000
  volumeSnapshotLocation:
    - name: minio
      provider: aws
      config:
        region: us-east-1

credentials:
  useSecret: true
  secretContents:
    # Must match the MinIO user's access key and secret key
    cloud: |
      [default]
      aws_access_key_id = {your-minio-access-key}
      aws_secret_access_key = {your-minio-secret-key}

deployNodeAgent: true
EOF
```
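The Local and External files above differ only in `s3Url` and `publicUrl`. Here is a minimal sketch that renders either variant from one function, assuming the same chart keys. `write_velero_values` and its argument order are hypothetical; the keys are passed in so that the `cloud` credentials match the MinIO user.

```bash
#!/usr/bin/env bash
# write_velero_values S3_URL ACCESS_KEY SECRET_KEY [FILE]
# Renders Velero chart values for a MinIO backup location reachable at S3_URL.
write_velero_values() {
  local s3_url=$1 access_key=$2 secret_key=$3 out=${4:-velero-values.yaml}
  cat <<EOF > "$out"
initContainers:
  - name: velero-plugin-for-aws
    image: velero/velero-plugin-for-aws:v1.10.1
    imagePullPolicy: IfNotPresent
    volumeMounts:
      - mountPath: /target
        name: plugins
configuration:
  defaultVolumesToFsBackup: true
  backupStorageLocation:
    - name: minio
      provider: aws
      bucket: velero
      accessMode: ReadWrite
      default: true
      config:
        region: us-east-1
        s3ForcePathStyle: true
        s3Url: ${s3_url}
        publicUrl: ${s3_url}
  volumeSnapshotLocation:
    - name: minio
      provider: aws
      config:
        region: us-east-1
credentials:
  useSecret: true
  secretContents:
    cloud: |
      [default]
      aws_access_key_id = ${access_key}
      aws_secret_access_key = ${secret_key}
deployNodeAgent: true
EOF
}

# Local: MinIO in the same cluster
write_velero_values http://minio.minio-system.svc.cluster.local:9000 \
  taking-access-key taking-secret-key velero-values.yaml

# External: MinIO at 192.168.0.101
#   write_velero_values http://192.168.0.101:9000 taking-access-key taking-secret-key
```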

```bash
helm upgrade my-velero vmware-tanzu/velero \
  --install \
  --create-namespace \
  --namespace velero \
  -f velero-values.yaml
```

Velero CLI

```bash
# Latest release tag, taken from GitHub's redirect for /releases/latest.
# Ideally the CLI version matches the server version deployed by the chart.
VERSION=$(basename "$(curl -s -o /dev/null -w '%{redirect_url}' https://github.com/vmware-tanzu/velero/releases/latest)")
UNAME=$(uname | sed 's/^Darwin$/darwin/; s/^Linux$/linux/')
ARCH=$(uname -m | sed 's/^aarch64$/arm64/; s/^x86_64$/amd64/')

wget "https://github.com/vmware-tanzu/velero/releases/download/${VERSION}/velero-${VERSION}-${UNAME}-${ARCH}.tar.gz"
tar xvzf "velero-${VERSION}-${UNAME}-${ARCH}.tar.gz"
sudo mv "velero-${VERSION}-${UNAME}-${ARCH}/velero" /usr/local/bin/
rm -rf "./velero-${VERSION}-${UNAME}-${ARCH}" "./velero-${VERSION}-${UNAME}-${ARCH}.tar.gz"

velero version
```
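The `sed` calls above translate `uname` output into the OS and architecture names used in the release file names. The same mapping as a small function that only builds the download URL (no network access); `velero_asset_url` is a hypothetical helper and `v1.14.1` is just an example tag.

```bash
#!/usr/bin/env bash
# velero_asset_url VERSION OS ARCH
# OS and ARCH are raw `uname` / `uname -m` values; they are mapped like above.
velero_asset_url() {
  local version=$1 os arch
  os=$(printf '%s' "$2" | sed 's/^Darwin$/darwin/; s/^Linux$/linux/')
  arch=$(printf '%s' "$3" | sed 's/^aarch64$/arm64/; s/^x86_64$/amd64/')
  printf 'https://github.com/vmware-tanzu/velero/releases/download/%s/velero-%s-%s-%s.tar.gz\n' \
    "$version" "$version" "$os" "$arch"
}

velero_asset_url v1.14.1 Linux x86_64
# → https://github.com/vmware-tanzu/velero/releases/download/v1.14.1/velero-v1.14.1-linux-amd64.tar.gz
velero_asset_url v1.14.1 Darwin arm64
# → https://github.com/vmware-tanzu/velero/releases/download/v1.14.1/velero-v1.14.1-darwin-arm64.tar.gz
```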

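Set the default backup location and check the server (setting the default is optional here, since the values file already marks minio as `default: true`)

```bash
velero backup-location set minio --default
velero backup-location get

# Velero server logs
kubectl logs deploy/my-velero -n velero
```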
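The server log uses logrus-style `key=value` lines, so warnings and errors can be picked out by their `level=` field. A minimal sketch; the `velero_log_problems` helper is hypothetical, and the sample lines are made up for illustration.

```bash
#!/usr/bin/env bash
# velero_log_problems: read Velero server logs on stdin and print only
# warning/error/fatal lines. grep exits 1 when nothing matches, which is
# treated as success; real grep errors (exit 2) still fail.
velero_log_problems() {
  grep -E 'level=(warning|error|fatal)' || [ $? -eq 1 ]
}

# Made-up sample input
printf '%s\n' \
  'time="2024-01-01T00:00:00Z" level=info msg="sample info line"' \
  'time="2024-01-01T00:00:01Z" level=error msg="sample error line"' |
  velero_log_problems

# Against the live server:
#   kubectl logs deploy/my-velero -n velero | velero_log_problems
```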

Check the BackupStorageLocation (its phase should be Available)

```bash
kubectl get backupstoragelocations -n velero
```
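Rather than re-running `kubectl get` until the location is ready, here is a sketch that polls `.status.phase` until it reads `Available`. It assumes the location is named `minio` as in the values file and that `kubectl` points at the cluster. `bsl_phase` and `wait_for_bsl` are hypothetical helpers; `bsl_phase` is kept separate so it can be stubbed out to test the loop without a cluster.

```bash
#!/usr/bin/env bash
# bsl_phase NAME: print .status.phase of a BackupStorageLocation in the velero namespace.
bsl_phase() {
  kubectl get backupstoragelocations "$1" -n velero -o jsonpath='{.status.phase}'
}

# wait_for_bsl NAME [TRIES] [INTERVAL_SECONDS]
# Returns 0 as soon as the phase is "Available", 1 after TRIES attempts.
wait_for_bsl() {
  local name=$1 tries=${2:-30} interval=${3:-5} phase="" i
  for ((i = 1; i <= tries; i++)); do
    phase=$(bsl_phase "$name" 2>/dev/null) || phase=""
    if [ "$phase" = "Available" ]; then
      echo "$name: Available"
      return 0
    fi
    sleep "$interval"
  done
  echo "$name: not Available after $tries tries (last phase: ${phase:-unknown})" >&2
  return 1
}

# Usage: wait_for_bsl minio
```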

```bash
# --storage-location takes the BackupStorageLocation name from the values file (minio)
velero backup create nfs-server \
  --include-namespaces nfs-server \
  --storage-location minio
```

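```bash
# Same namespace, explicitly using file-system backup for pod volumes,
# and wait until the backup finishes
velero backup create nfs-server-backup \
  --include-namespaces nfs-server \
  --default-volumes-to-fs-backup \
  --wait
```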
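Backup names have to be unique, so running the `nfs-server-backup` command a second time fails once that backup exists. A sketch that appends a UTC timestamp instead; `backup_name` is a hypothetical helper, and the `YYYYMMDD-HHMMSS` format is an arbitrary choice that keeps the name lowercase and DNS-safe.

```bash
#!/usr/bin/env bash
# backup_name PREFIX: print PREFIX-YYYYMMDD-HHMMSS using the current UTC time.
backup_name() {
  printf '%s-%s\n' "$1" "$(date -u +%Y%m%d-%H%M%S)"
}

name=$(backup_name nfs-server)
echo "$name"

# Usage:
#   velero backup create "$(backup_name nfs-server)" \
#     --include-namespaces nfs-server --default-volumes-to-fs-backup --wait
```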

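```bash
# Restore from the backup above. By default Velero does not overwrite
# resources that already exist in the cluster.
velero restore create \
  --from-backup nfs-server-backup
```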

```bash
# Back up the nfs-server namespace every 10 minutes
velero schedule create nfs-server --include-namespaces nfs-server --schedule="*/10 * * * *"
```
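`*/10 * * * *` fires every 10 minutes, and backups created by a schedule expire after Velero's default TTL of 720h (30 days) unless `--ttl` is set, so roughly TTL divided by the interval backups exist at any one time. A sketch of that estimate; `retained_backups` is a hypothetical helper and the `--ttl 24h` value is only an example.

```bash
#!/usr/bin/env bash
# retained_backups INTERVAL_MINUTES TTL_HOURS
# Approximate number of scheduled backups alive at once (integer division).
retained_backups() {
  local interval_min=$1 ttl_hours=$2
  echo $(( ttl_hours * 60 / interval_min ))
}

retained_backups 10 720   # default TTL: 4320 backups
retained_backups 10 24    # with --ttl 24h: 144 backups

# e.g. velero schedule create nfs-server --include-namespaces nfs-server \
#        --schedule="*/10 * * * *" --ttl 24h
```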

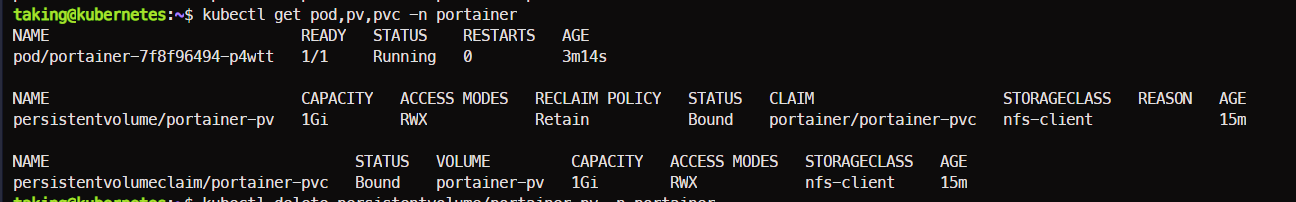

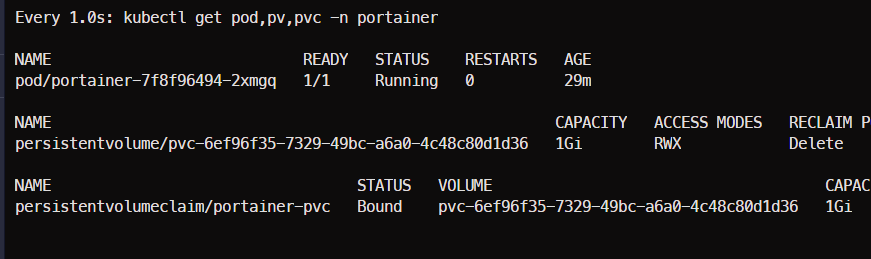

Everything is clean and works well, but... on restore, the PV and PVC are created with new UUIDs, so the existing PVC can no longer be found.

I'm going to try switching to Kasten K10 instead. :(