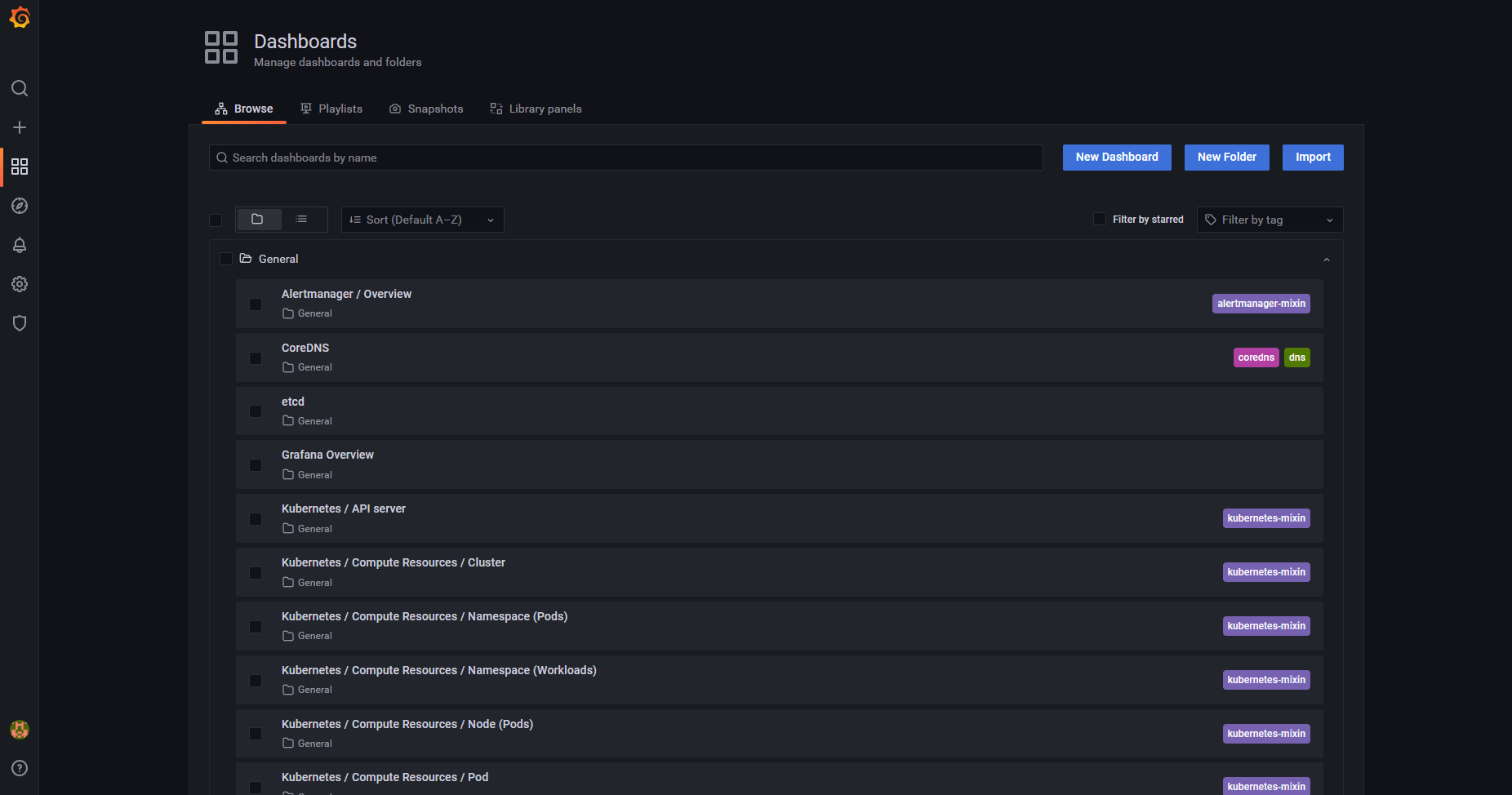

Prometheus-operator installation with Helm

Installs Prometheus, Grafana, kube-state-metrics, and prometheus-node-exporter on Kubernetes using the kube-prometheus-stack Helm chart.

Prerequisites

Kubernetes 1.19+

Helm 3.2.0+

Persistent storage with read-write (RW) access

A Kubernetes StorageClass for dynamic provisioning
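The version minimums above can be checked with a small script before installing. This is a minimal sketch, not part of Helm or kubectl: `version_ge` and `check_min_version` are hypothetical helper names, and the comparison assumes a `sort` that supports `-V` (version sort), as GNU coreutils does.

```shell
# Sketch: compare a detected version against a required minimum.
# version_ge and check_min_version are hypothetical helpers (assumption).
# Assumes `sort -V` (version sort) is available, e.g. GNU coreutils.

version_ge() {
  # Succeeds when $1 >= $2. A leading "v" (as in v3.12.1) is stripped first.
  [ "$(printf '%s\n%s\n' "${2#v}" "${1#v}" | sort -V | head -n 1)" = "${2#v}" ]
}

check_min_version() {
  # $1 = tool name, $2 = detected version, $3 = required minimum
  if version_ge "$2" "$3"; then
    echo "$1 $2: OK (>= $3)"
  else
    echo "$1 $2: too old (need >= $3)"
    return 1
  fi
}

# Example call (run manually; the version string is supplied by you):
#   check_min_version helm "$(helm version --template '{{.Version}}')" 3.2.0
```

The detected version is passed in as an argument so the helper itself does not depend on any particular CLI output format.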

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

cat <<EOF > values-override.yaml
---
prometheus:
  prometheusSpec:
    scrapeInterval: 5s
    externalLabels:
      cluster: "crio-k8s-1.25"
    replicas: 1
grafana:
  adminPassword: prom-operator
EOF

helm install prometheus-stack prometheus-community/kube-prometheus-stack \
  --create-namespace \
  --namespace monitoring \
  -f values-override.yaml

kubectl patch svc prometheus-stack-grafana -n monitoring --type='json' -p '[{"op":"replace","path":"/spec/type","value":"NodePort"},{"op":"replace","path":"/spec/ports/0/nodePort","value":32071}]'

kubectl patch svc prometheus-stack-kube-prom-prometheus -n monitoring --type='json' -p '[{"op":"replace","path":"/spec/type","value":"NodePort"},{"op":"replace","path":"/spec/ports/0/nodePort","value":30097}]'
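Both patch commands use the same JSON Patch document and differ only in the port number. As a sketch, the document could be generated by a small function that also rejects ports outside the default Kubernetes NodePort range (30000-32767). `nodeport_patch` is a hypothetical helper name, and the range check assumes the cluster has not changed `--service-node-port-range`.

```shell
# Sketch: build the JSON Patch used to switch a Service to NodePort.
# nodeport_patch is a hypothetical helper (assumption), not a kubectl feature.
# Assumes the default NodePort range of 30000-32767.

nodeport_patch() {
  port="$1"
  # Reject empty or non-numeric input.
  case "$port" in
    ''|*[!0-9]*)
      echo "nodePort must be a number: $port" >&2
      return 1
      ;;
  esac
  # Reject ports the API server would refuse with default settings.
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "nodePort $port is outside the default 30000-32767 range" >&2
    return 1
  fi
  printf '[{"op":"replace","path":"/spec/type","value":"NodePort"},{"op":"replace","path":"/spec/ports/0/nodePort","value":%s}]\n' "$port"
}

# Example call (run manually against the cluster):
#   kubectl patch svc prometheus-stack-grafana -n monitoring --type='json' -p "$(nodeport_patch 32071)"
```

Validating the port locally gives a clearer error than waiting for the API server to reject the patch.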

instance_public_ip="$(curl ifconfig.me --silent)"

echo "http://$instance_public_ip:32071"

echo "ID: admin"

echo "PW: " $(kubectl get secret --namespace monitoring prometheus-stack-grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo)
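The password lookup above relies on the fact that values under `.data` in a Kubernetes Secret are base64-encoded. The sketch below separates that decoding step from the cluster call so it can be tried offline. `print_grafana_login` is a hypothetical helper name, and the `http://` scheme assumes Grafana is served without TLS on the NodePort.

```shell
# Sketch: print the Grafana login details from already-fetched values.
# print_grafana_login is a hypothetical helper (assumption).
# Assumes plain HTTP on the NodePort (no TLS configured for Grafana).

print_grafana_login() {
  # $1 = host or IP, $2 = NodePort, $3 = base64-encoded admin password
  echo "http://$1:$2"
  echo "ID: admin"
  echo "PW: $(printf '%s' "$3" | base64 --decode)"
}

# Example call (run manually against the cluster):
#   print_grafana_login "$(curl ifconfig.me --silent)" 32071 \
#     "$(kubectl get secret --namespace monitoring prometheus-stack-grafana -o jsonpath='{.data.admin-password}')"
```

Passing the encoded value as an argument keeps the function free of cluster access, so the decoding can be checked with any sample string.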

(Optional) Thanos

Helm chart

Prerequisites

helm update

create namespace

create thanos-sidecar-secret (objectstore-config)

helm update

Install

values-override.yaml

Install

update