- Create a new Google Colab notebook

- Open the CERTEM-demo Google Colab notebook at https://colab.research.google.com/drive/1e9L4Edbm3_X4V_xH7EFkxsmT3wE4Qwca?usp=sharing

- Copy each cell from CERTEM-demo.ipynb into your newly created notebook, keeping the same order

- Run all cells (Runtime -> Run all)

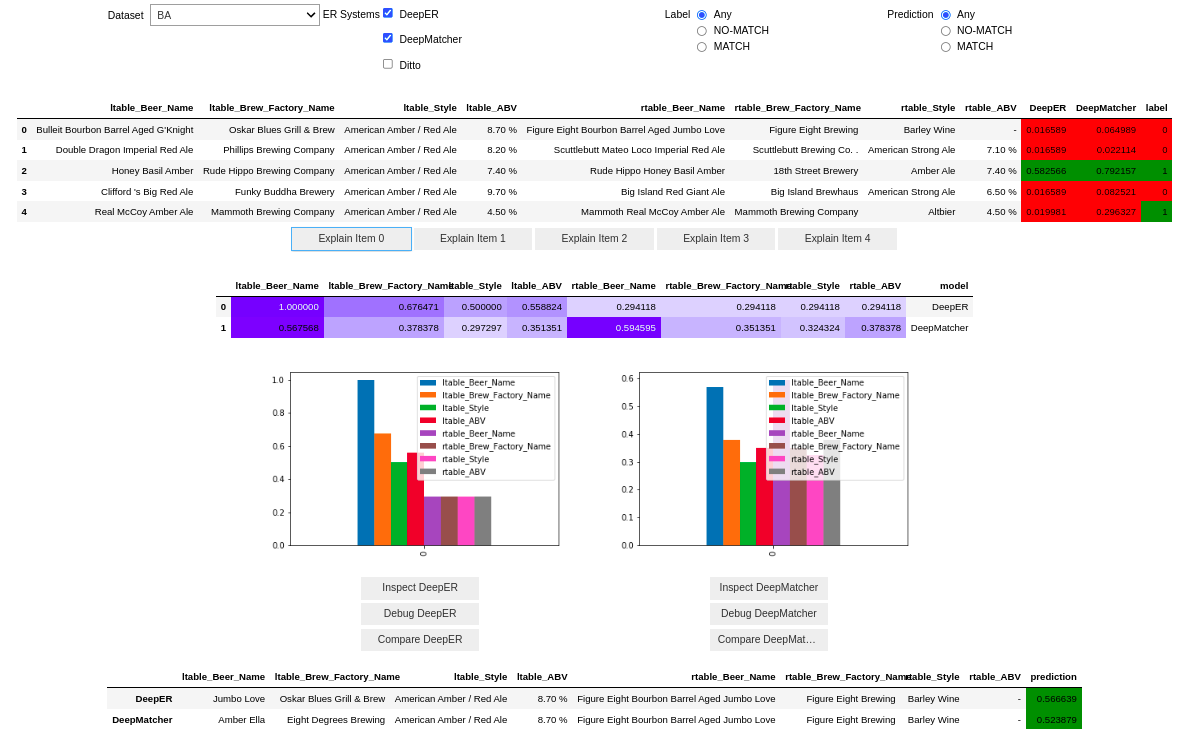

- Once all cells are loaded you should see something like

- Select one of the datasets from the dropdown menu, either BA or AB (avoid IA, which has visualization issues)

- Select one of the existing models by checking one of the boxes: DeepER, DeepMatcher, or Ditto.

- Choose the index of the table row you want explained (each row contains a pair of records together with its label and the prediction of the selected model)

- Click Explain Item $Index (e.g., Explain Item 0) to obtain an explanation for the chosen pair of records

- Click Compare $Model (e.g., Compare DeepMatcher) to compare the explanations that different explanation algorithms generate for the same pair of records

- You will see a table with different saliency explanations for the same pair of records

  - A darker blue background in a cell corresponds to a larger value, meaning higher feature "importance"

  - Some explanations may contain 0.000000 or nan values; this can happen with numbers lower than 1e-7.

- Select the checkbox associated with the row containing the saliency that seems correct or that, at least, helps you understand the prediction (e.g., if the saliency in row 0 is the best one, select the checkbox named Sys 0).

- If none of the displayed saliencies satisfies you, or if you feel something is missing, enter text in the User Defined Saliency field and select the associated checkbox.

- Once you are satisfied with your selection, click the Record button.

- Now click the Counterfactuals tab; you will see one or more counterfactual explanations.

- Select the checkbox associated with the counterfactual explanation that seems correct or that, at least, helps you understand the behavior of the system.

- Once you are satisfied with your selection, click the Record button.

- Repeat steps 6-17 (from selecting a dataset through clicking Record in the Counterfactuals tab) 10 times.

- Open the Files tab on the left side of the Google Colab page

- Locate the /root/certem/us.csv file and download it.

- Upload the us.csv file by 18:00 CET on January 21st, 2023, using the following online form: https://forms.office.com/r/PYP0ncXYqc
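The 0.000000 entries mentioned in the steps above are most likely a display effect: fixed-point formatting with six decimals rounds very small scores to zero. The sketch below assumes that the explanation table prints scores with Python's default `f` formatting (six digits after the decimal point), which matches the 0.000000 shown in the table but is not confirmed by the demo; the helper names `format_saliency` and `is_displayed_as_zero` are hypothetical.

```python
import math


def format_saliency(value: float) -> str:
    """Render a saliency score with six decimals (assumed table format)."""
    # The "f" presentation type defaults to six digits after the decimal point,
    # so very small magnitudes are rounded to 0.000000 when printed.
    return f"{value:f}"


def is_displayed_as_zero(value: float) -> bool:
    """True when a finite, nonzero score still prints as 0.000000 (or -0.000000)."""
    return math.isfinite(value) and value != 0.0 and float(format_saliency(value)) == 0.0


print(format_saliency(0.25))          # 0.250000
print(format_saliency(5e-8))          # 0.000000
print(format_saliency(float("nan")))  # nan
print(is_displayed_as_zero(5e-8))     # True
```

Under this assumption, any nonzero score smaller than about 5e-7 in magnitude, which includes the values below 1e-7 mentioned above, shows up as 0.000000, while a nan score is printed verbatim as `nan`.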
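As an alternative to downloading us.csv from the Files tab, the file can also be downloaded from a code cell. This is a minimal sketch using Colab's `google.colab.files.download`; the helper name `download_user_study_results` is hypothetical, and the path is the one given in the steps above.

```python
import os

# Path of the results file, as given in the steps above.
US_CSV_PATH = "/root/certem/us.csv"


def download_user_study_results(path: str = US_CSV_PATH) -> bool:
    """Ask the browser to download the results file from a Colab runtime.

    Returns True if the download was requested, False when not running in Colab.
    Raises FileNotFoundError if the file does not exist.
    """
    if not os.path.isfile(path):
        # Assumption: the file may not exist yet if nothing has been recorded.
        raise FileNotFoundError(f"{path} not found: record at least one selection first")
    try:
        from google.colab import files  # only importable inside Google Colab
    except ImportError:
        return False
    files.download(path)
    return True
```

Running `download_user_study_results()` in a new cell after the last Record click should make the browser save us.csv, which can then be uploaded through the form.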

Last active: January 12, 2023 15:27
