Pic credits : Github

Welcome back peeps. We are now starting System Design Series ( over weekends) where we will cover how to design large ( and great) systems, the techniques, tip/tricks that you can refer to in order to scale these systems. As a senior software engineer it’s expected that you know not just the breadth but also depth of the system design concepts.

2. Horizontal and vertical scaling

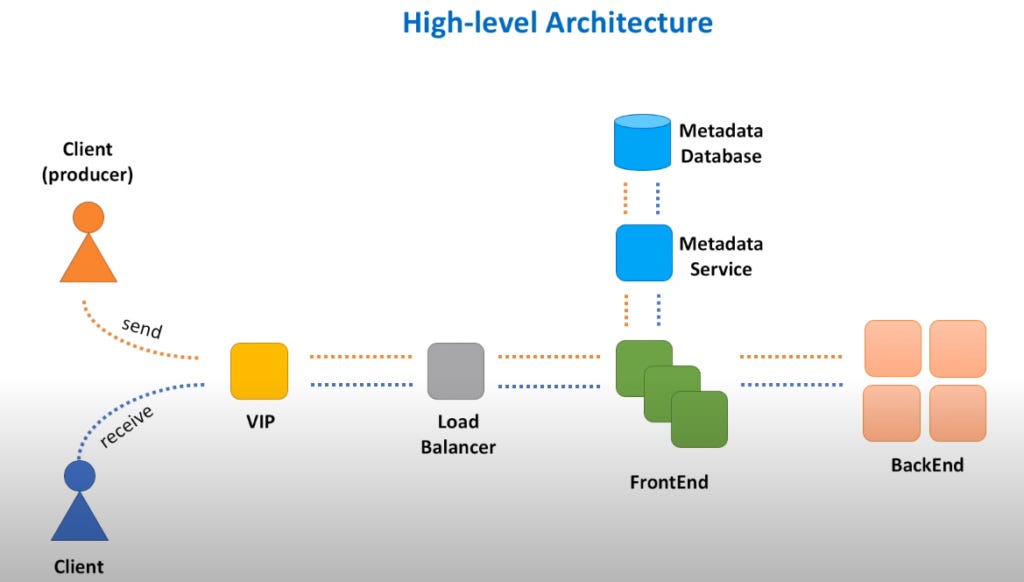

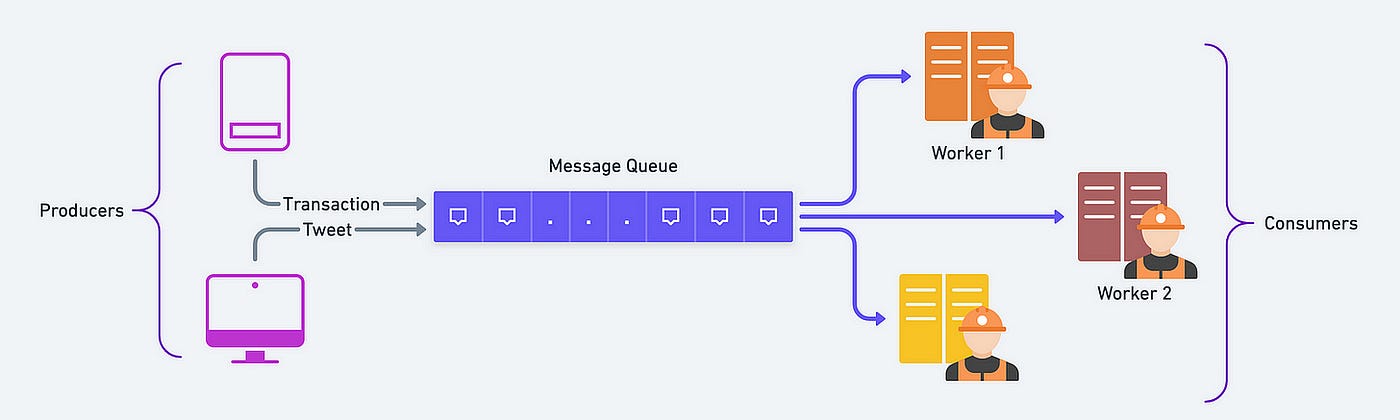

3. Load balancing and Message queues

6. Networking, How Browsers work, Content Network Delivery ( CDN)

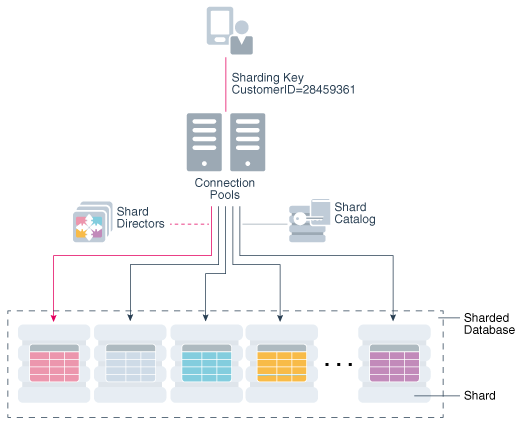

7. Database Sharding, CAP Theorem, Database schema Design

8. Concurrency, API, Components + OOP + Abstraction

9. Estimation and Planning, Performance

In layman’s language, system design is about —

Architecture + Data + Applications

Architecture means how are you going to put the different functioning blocks of a system together and make them seamlessly work with each other after taking into account all the nodal points where a sub-system can fail/stop working.

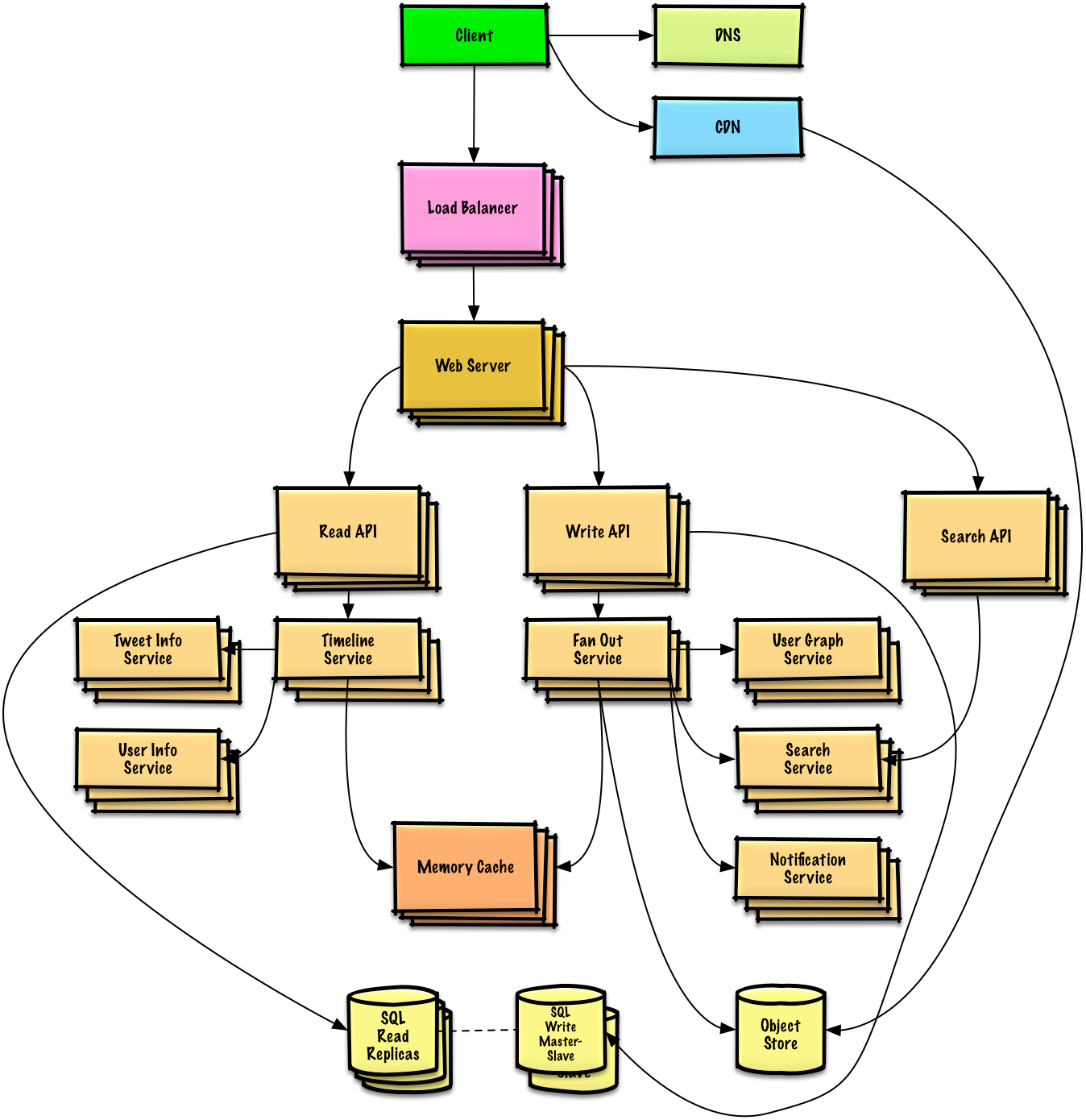

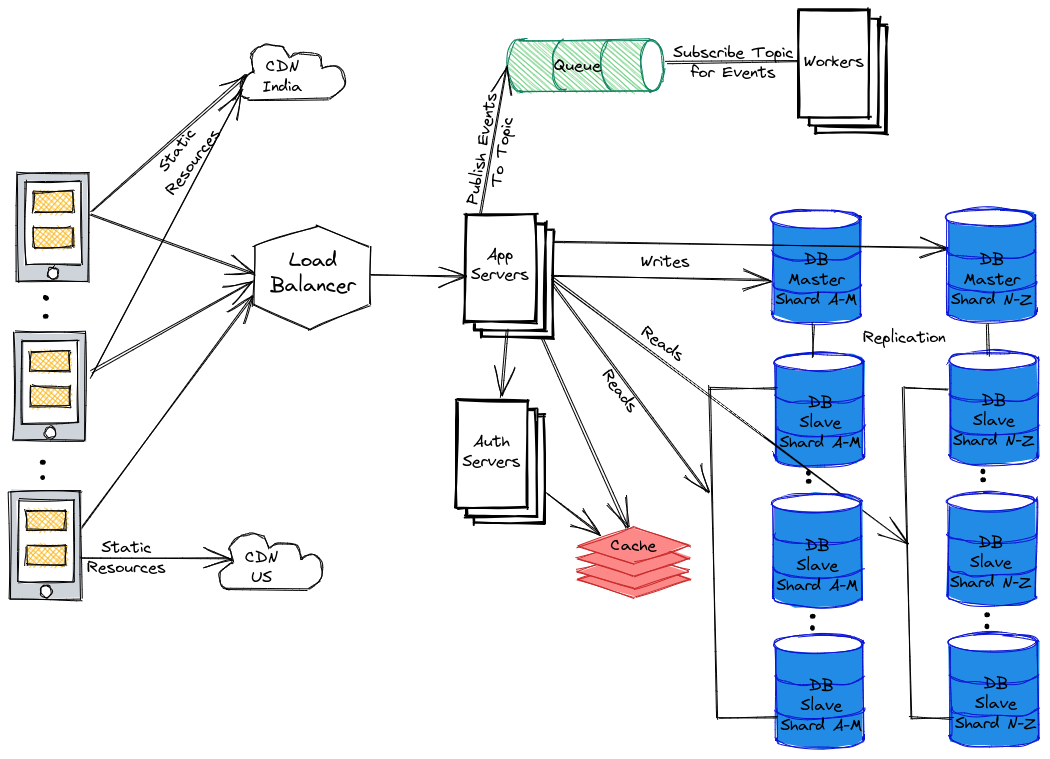

One such architecture example is shown below —

Pic credits : twtr

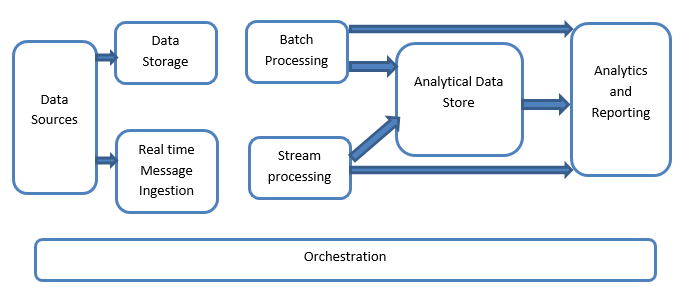

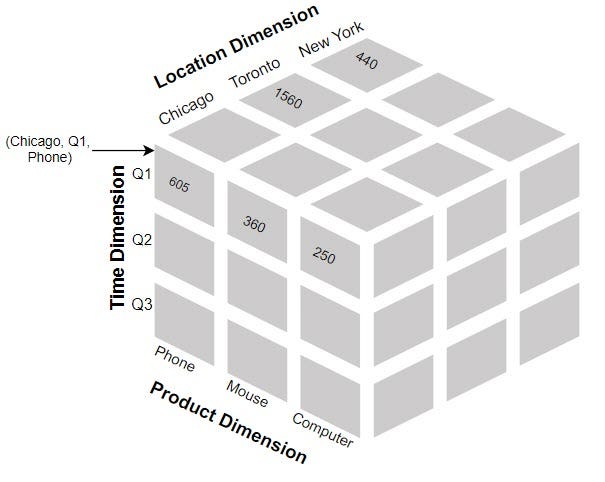

Data means what is the input, what are the data processing blocks, how to store petabytes of data and most importantly how to process it and give the desired/required output.

Pic credits : researchgate

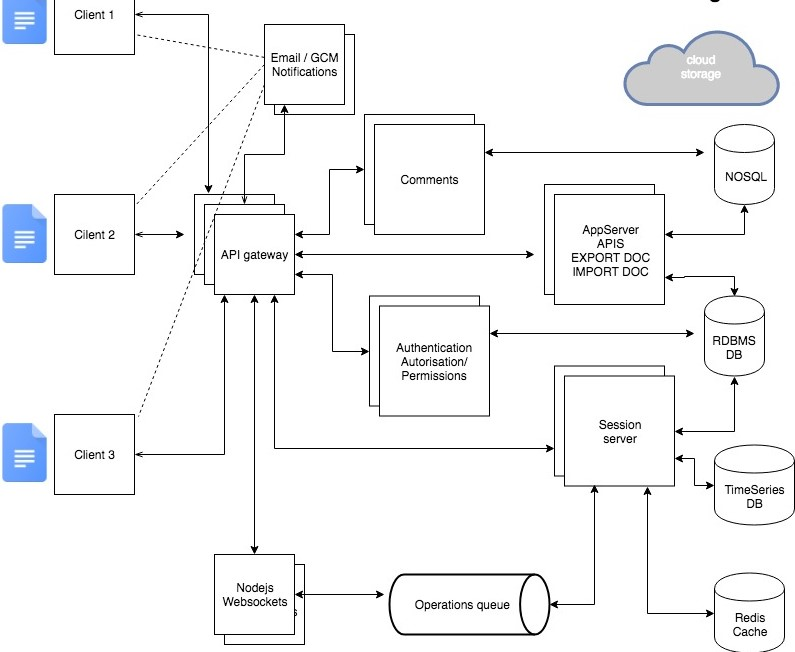

Applications means once the data is processed and output is ready how are the different applications attached to that large back end system will utilize that data.

Pic credits : ResearchGate

All this is prepared in the form of a large pipeline ( some/most part of it is automated based on the requirements)

Again, designing larger system that can serve zillions of people have 4 things in common —

- Reliability : It’s measured in terms of robustness, failures and effect analysis of the sub systems of the larger system.

- Scalability : It’s measured in terms of resources allocation, utilization, serviceability and availability.

- Availability : It’s measured by the percentage of time in a given period that a system is available to perform the assigned task without any failure(s).

- Efficiency : It’s measured in terms of reusability of components, simplicity and productivity.

And all of it bottles down to three things — Cost, Speed and Failures.

Now let’s talk about the most important concepts ( technically you should know) in System Design

2. Proxies

3. Caching

4. CAP theorem

6. Replication

7. Sharding and Data Partitioning

10. Storage, Availability and Hashing

11. API

- Horizontal and vertical scaling: Imagine you have a toy shop and you have a limited number of toys. If you want to make room for more toys, you can either stack them up vertically (vertical scaling) or you can spread them out horizontally (horizontal scaling). The same goes for computer systems, if you want to make it work faster, you can either add more power to it (vertical scaling) or you can add more computers to it (horizontal scaling).

- Load balancing and Message queues: Imagine you have a bunch of friends who want to play a game together, but you only have one game. To make it fair, you take turns playing the game. This is like load balancing in a computer system, it makes sure that the work is evenly distributed so it runs smoothly. Message queues are like a line or queue where you and your friends wait for your turn to play the game. In a computer system, message queues are used to store messages or tasks until they can be processed.

- High level design and low level design: Imagine you and your friends want to build a treehouse. The high level design is like the big picture plan of what the treehouse will look like and what it will have. The low level design is like the details of how each part of the treehouse will be built. The same goes for computer systems, the high level design is the big picture plan, and the low level design is the details of how it will be built.

- Consistent Hashing, Monolithic and Microservices architecture: Imagine you have a toy box and you want to keep all your toys organized. You can either keep all your toys in one big box (monolithic) or you can keep them in many small boxes (microservices). Consistent hashing is like labeling each box so you know what toys are in it. In a computer system, consistent hashing helps with organizing data in a distributed system.

- Caching, Indexing, Proxies: Imagine you have a big library with many books. To find a book quickly, you can either make a list of all the books (indexing) or you can keep a copy of the book you often look for in a nearby shelf (caching). A proxy is like a helper who can get the book for you if you can’t find it. In a computer system, caching, indexing and proxies are ways to make information easier to find and faster to access.

- Networking, How Browsers work, Content Network Delivery (CDN): Imagine you want to send a message to your friend who lives far away. To send the message, you can either go to your friend’s house (not efficient) or you can send the message through a network of people (more efficient). Browsers are like a messenger who helps you find websites on the internet and CDN is like a big network of messengers who can deliver the website to you faster.

- Database Sharding, CAP Theorem, Database schema Design: Imagine you have a big notebook with many pages and you want to keep your notes organized. You can either keep all your notes in one big notebook (not efficient) or you can divide your notes into many small notebooks (database sharding). The CAP theorem is like a rule that helps you decide what kind of notebook you want to use. The database schema design is like how you want to organize your notes in each notebook.

- Concurrency, API, Components + OOP + Abstraction: Imagine you and your friends want to play a game together. You can either play the game one by one (not efficient) or you can play the game at the same.

- Networks & Protocols: Imagine you and your friends want to send secret messages to each other. To make sure the messages are safe, you can use a secret code (protocol) and send the messages through a network of friends (network). In a computer system, networks and protocols are used to send information safely and efficiently.

- Latency & Throughput: Imagine you and your friends are playing a game where you have to pass a ball to each other as fast as you can. Latency is like the time it takes for the ball to get from one friend to another, and throughput is like how many balls can be passed in a certain amount of time. In a computer system, latency and throughput are important for measuring the speed and efficiency of information transfer.

- Storage, Availability, and Hashing: Imagine you have a toy box and you want to keep your toys safe and organized. To keep your toys safe, you can lock the toy box (storage) and make sure it’s always available to you (availability). To keep your toys organized, you can label each toy with a special code (hashing). In a computer system, storage, availability, and hashing are important for keeping information safe and organized.

- Start with requirements: Clearly understand the requirements and constraints of the system before starting the design process.

- Scalability: Design the system with scalability in mind from the beginning to ensure it can handle increasing workloads over time.

- Modularity: Design the system as a set of modular components that can be developed, tested, and maintained independently.

- Abstraction: Use abstraction to separate the implementation details from the interfaces, making the system easier to understand and maintain.

- Performance: Consider performance trade-offs when making design decisions, such as using caching or load balancing to improve response time.

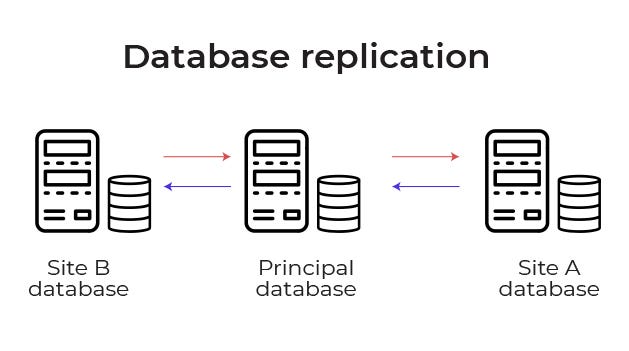

- Availability: Design the system for high availability by using redundancy and failover mechanisms, such as load balancers or database replication.

- Security: Consider security risks and implement appropriate security measures, such as encryption, authentication, and authorization.

- Monitoring: Implement monitoring and logging to keep track of system performance and detect and diagnose issues.

- Testability: Design the system for testability, including unit tests, integration tests, and end-to-end tests.

- Document: Document the design decisions, assumptions, and trade-offs to ensure that others can understand and maintain the system.

As we proceed further in this System Design series, we will explore above mentioned — 12 most important concepts and how the large systems are build around these concepts.

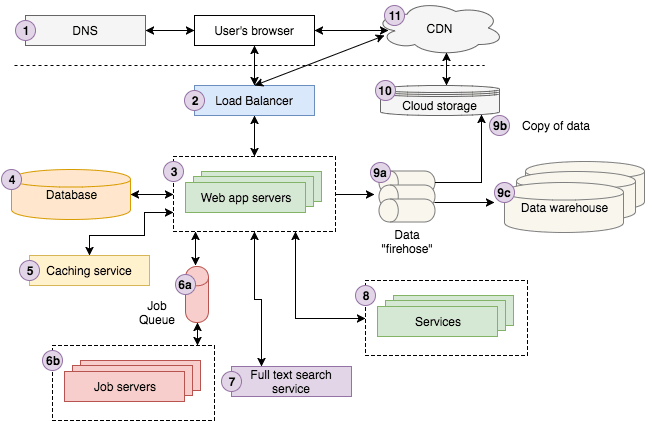

The first step to proceed with any system design problem is to use diagrams , connections, bullet points and make a checklist as shown in the diagram below —

Pic credits : workattech

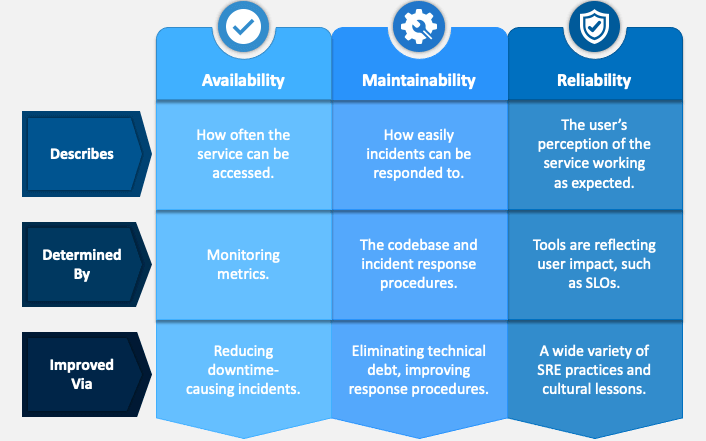

A system should be —

- Reliable

- Scalable

- Maintainable

Reliability means the system should be fault tolerant and working when faults/error happen.

Scalability means should should be able to cater to the growing users, traffic and data

Maintainability means even after adding new functionalities and features as per the new requirements, the system and the existing code should work.

Pic credits : sketchbubble

The Read API in system design is responsible for retrieving data from the system’s database and returning it to the requesting client.

This API is critical for any system that needs to provide access to its data.

Read API can be implemented using the following steps:

- Accepting input: The Read API should accept input from the client about what data is being requested. This input can be in the form of parameters, such as IDs or filters.

- Retrieving data: Once the input is received, the Read API should query the system’s database to retrieve the requested data.

- Formatting data: The Read API should format the data in a way that is easy for the client to understand. This may include converting the data to a specific format, such as JSON or XML.

- Returning data: Finally, the Read API should return the formatted data to the client.

Implementation of a Read API using Python and the Flask web framework:

from flask import Flask, request, jsonify

import sqlite3

app = Flask(__name__)

@app.route('/users')

def get_users():

# get the query parameters

name = request.args.get('name')

age = request.args.get('age')

# connect to the database

conn = sqlite3.connect('users.db')

cursor = conn.cursor()

# build the SQL query based on the query parameters

query = "SELECT * FROM users"

if name:

query += f" WHERE name = '{name}'"

if age:

query += f" AND age = {age}"

# execute the query and fetch the data

cursor.execute(query)

data = cursor.fetchall()

# format the data as a list of dictionaries

users = []

for row in data:

user = {'id': row[0], 'name': row[1], 'age': row[2]}

users.append(user)

# close the database connection

conn.close()

# return the formatted data as JSON

return jsonify(users)In this implementation, the API accepts query parameters for filtering the users by name and age. It then builds an SQL query based on these parameters and retrieves the data from a SQLite database. The data is then formatted as a list of dictionaries and returned to the client as JSON.

A Write API in system design refers to an API that allows clients to modify or update data in the system. It is often used in systems that require a level of data persistence or require data to be updated in real-time.

In order to implement a Write API, you will need to define the endpoints for the API, the data model for the objects being modified, and the logic for handling incoming requests.

Implementation of a Write API in Python using the Flask framework:

from flask import Flask, request, jsonify

app = Flask(__name__)

# Define the data model for a user

class User:

def __init__(self, id, name, email):

self.id = id

self.name = name

self.email = email

users = []

# Define the API endpoint for adding a new user

@app.route('/users', methods=['POST'])

def add_user():

user_data = request.get_json()

user = User(len(users) + 1, user_data['name'], user_data['email'])

users.append(user)

return jsonify({'success': True})# Define the API endpoint for updating an existing user

@app.route('/users/<int:user_id>', methods=['PUT'])

def update_user(user_id):

user_data = request.get_json()

user = next((u for u in users if u.id == user_id), None)

if not user:

return jsonify({'success': False, 'message': 'User not found'})

user.name = user_data.get('name', user.name)

user.email = user_data.get('email', user.email)

return jsonify({'success': True})if __name__ == '__main__':

app.run(debug=True)In this implementation, we define a data model for a user object with an id, name, and email. We also define two API endpoints, one for adding a new user and one for updating an existing user. When a POST request is made to /users, we create a new user object with the data provided in the request, add it to the list of users, and return a JSON response indicating success. When a PUT request is made to_ /users/<user_id>, we look for the user with the specified id in the list of users. If the user is found, we update its name and email properties with the data provided in the request and return a JSON response indicating success. If the user is not found, we return a JSON response indicating failure with a message explaining that the user was not found._

The Search API is a crucial component of many systems, as it allows users to find information quickly and efficiently.

When designing a search API, it’s important to consider the types of queries that users will be performing, as well as the data structures and algorithms that will be used to retrieve results.

Implementation of how a Search API can be implemented using Python:

import json

from typing import List

# assume that the data is already loaded into a list of dictionaries

data = [

{

"id": 1,

"title": "Lorem ipsum",

"description": "Lorem ipsum dolor sit amet, consectetur adipiscing elit.",

"tags": ["lorem", "ipsum", "dolor"]

},

{

"id": 2,

"title": "Dolor sit amet",

"description": "Dolor sit amet, consectetur adipiscing elit.",

"tags": ["dolor", "sit", "amet"]

},

{

"id": 3,

"title": "Consectetur adipiscing elit",

"description": "Praesent et massa vel ante commodo commodo a eu massa.",

"tags": ["consectetur", "adipiscing", "elit"]

}

]def search(query: str) -> List[dict]:

"""

Search for documents that match the given query

"""

results = []

for document in data:

if query.lower() in document['title'].lower() or query.lower() in document['description'].lower() or query.lower() in [tag.lower() for tag in document['tags']]:

results.append(document)

return results# example usage

results = search("Lorem")

print(json.dumps(results, indent=2))In this implementation, the search API takes a query string as input and returns a list of documents that match the query. The search is performed by iterating through each document in the data list and checking if the query string is present in the document’s title, description, or tags.

A User Info Service is a system component that is responsible for storing and retrieving user information in a system.

It is often a critical component of a larger system because many applications and services rely on user data to function properly.

In a system, the User Info Service would be responsible for managing the following tasks:

- Creating new user accounts

- Authenticating users and validating their credentials

- Retrieving user profile information (e.g. name, email address, profile picture, etc.)

- Updating user profile information

- Deleting user accounts

Implementation of a User Info Service in Python:

class UserInfoService:

def __init__(self):

self.users = {}

def create_user(self, username, password, email):

if username in self.users:

raise Exception('User already exists')

self.users[username] = {

'password': password,

'email': email,

'profile': {}

}

def authenticate_user(self, username, password):

if username not in self.users:

return False

return self.users[username]['password'] == password

def get_user_profile(self, username):

if username not in self.users:

return None

return self.users[username]['profile']

def update_user_profile(self, username, profile):

if username not in self.users:

return False

self.users[username]['profile'] = profile

return True

def delete_user(self, username):

if username not in self.users:

return False

del self.users[username]

return TrueIn this implementation, the User Info Service is implemented as a class with methods for each of the tasks described above. The service stores user information in a dictionary, where the keys are usernames and the values are dictionaries containing the user’s password, email, and profile information. The create_user method adds a new user to the dictionary, but first checks to make sure that the username isn't already taken. The authenticate_user method checks whether a given username and password combination is valid. The get_user_profile method retrieves a user's profile information, and the update_user_profile method updates it. Finally, the delete_user method removes a user from the dictionary.

A user graph service is a component in a system design that manages and maintains the relationships between users in a network. It is responsible for storing and updating information about user connections, such as friend lists, followers, or other types of relationships.

This service is commonly used in social networks, online marketplaces, and other systems that require social connections between users.

Implementation of a user graph service using Python and the Flask framework:

from flask import Flask, jsonify, request

from flask_sqlalchemy import SQLAlchemy

app = Flask(__name__)

app.config['SQLALCHEMY_DATABASE_URI'] = 'sqlite:///users.db'

db = SQLAlchemy(app)class User(db.Model):

id = db.Column(db.Integer, primary_key=True)

name = db.Column(db.String(50))

friends = db.relationship('User', secondary='friendships',

primaryjoin='User.id==friendships.c.user_id',

secondaryjoin='User.id==friendships.c.friend_id',

backref=db.backref('friend_of', lazy='dynamic'))friendships = db.Table('friendships',

db.Column('user_id', db.Integer, db.ForeignKey('user.id')),

db.Column('friend_id', db.Integer, db.ForeignKey('user.id'))

)@app.route('/users', methods=['GET'])

def get_users():

users = User.query.all()

return jsonify([user.name for user in users])@app.route('/users/<int:user_id>', methods=['GET'])

def get_user(user_id):

user = User.query.get(user_id)

if user is None:

return jsonify({'error': 'User not found'})

return jsonify({'name': user.name, 'friends': [friend.name for friend in user.friends]})@app.route('/users', methods=['POST'])

def create_user():

name = request.json.get('name')

user = User(name=name)

db.session.add(user)

db.session.commit()

return jsonify({'id': user.id})@app.route('/users/<int:user_id>/friends', methods=['POST'])

def add_friend(user_id):

friend_id = request.json.get('friend_id')

user = User.query.get(user_id)

friend = User.query.get(friend_id)

if user is None or friend is None:

return jsonify({'error': 'User or friend not found'})

if friend in user.friends:

return jsonify({'error': 'Already friends'})

user.friends.append(friend)

db.session.commit()

return jsonify({'message': 'Friend added'})if __name__ == '__main__':

db.create_all()

app.run()In this implementation, we use the Flask framework to create a web API for managing users and their connections. We define a User class using SQLAlchemy to represent a user in the system. The User class has a friends relationship that points to other users who are friends of this user. We also define a friendships table that stores the many-to-many relationship between users. This table is used to implement the friends relationship of the User class. The API provides several endpoints for managing users and their connections. The GET /users endpoint returns a list of all users in the system. The GET /users/{id} endpoint returns information about a specific user, including their name and friend list. The POST /users endpoint creates a new user in the system. Finally, the POST /users/{id}/friends endpoint adds a new friend to a user's friend list.

A timeline service in system design is responsible for managing and presenting a chronological list of events or activities.

It can be used in social media platforms, news portals, or other applications where time-based information needs to be presented to users.

Implementation of a timeline service can be done using the following steps:

- Data model: Define the data model for the timeline. This includes the events or activities to be displayed, along with any associated metadata such as timestamps and user IDs.

- Database schema: Create a database schema to store the data model. This can be a relational database such as MySQL or PostgreSQL, or a NoSQL database such as MongoDB or Cassandra.

- API endpoints: Define the API endpoints for the timeline service. This includes endpoints for creating, updating, and deleting events, as well as endpoints for retrieving the events in chronological order.

- Event processing: Define the logic for processing events. This includes verifying user permissions, validating input data, and performing any necessary transformations on the data.

- Presentation: Define the logic for presenting the events to users. This includes sorting the events in chronological order, applying any filters or search queries, and formatting the data for display.

Implementation of a timeline service using Python and the Flask web framework:

from flask import Flask, request, jsonify

import sqlite3

app = Flask(__name__)@app.route('/timeline')

def get_timeline():

# get the query parameters

user_id = request.args.get('user_id')

start_time = request.args.get('start_time')

end_time = request.args.get('end_time') # connect to the database

conn = sqlite3.connect('timeline.db')

cursor = conn.cursor() # build the SQL query based on the query parameters

query = "SELECT * FROM events"

if user_id:

query += f" WHERE user_id = {user_id}"

if start_time and end_time:

query += f" AND timestamp BETWEEN {start_time} AND {end_time}"

elif start_time:

query += f" AND timestamp >= {start_time}"

elif end_time:

query += f" AND timestamp <= {end_time}"

query += " ORDER BY timestamp ASC" # execute the query and fetch the data

cursor.execute(query)

data = cursor.fetchall() # format the data as a list of dictionaries

events = []

for row in data:

event = {'id': row[0], 'user_id': row[1], 'timestamp': row[2], 'data': row[3]}

events.append(event) # close the database connection

conn.close() # return the formatted data as JSON

return jsonify(events)@app.route('/event', methods=['POST'])

def create_event():

# get the request data

data = request.get_json() # validate the data

if 'user_id' not in data or 'timestamp' not in data or 'data' not in data:

return jsonify({'error': 'Invalid data'}), 400 # insert the data into the database

conn = sqlite3.connect('timeline.db')

cursor = conn.cursor()

query = "INSERT INTO events (user_id, timestamp, data) VALUES (?, ?, ?)"

cursor.execute(query, (data['user_id'], data['timestamp'], data['data']))

conn.commit()

event_id = cursor.lastrowid

conn.close() # return the ID of the created event

return jsonify({'id': event_id}), 201In this implementation, the timeline service provides two endpoints. The get_timeline() endpoint retrieves events from the database based on query parameters for user ID.

A notification service in system design is a mechanism that allows a system to send notifications to users or other systems about events or changes in the system.

It is often used in systems that require real-time updates or event-driven architectures. In order to implement a notification service, you will need to define the message format, the delivery mechanism, and the logic for handling incoming notifications.

Implementation of a notification service in Python using the Flask framework and the Redis Pub/Sub messaging system:

from flask import Flask, request

import redis

app = Flask(__name__)

redis_client = redis.Redis(host='localhost', port=6379)# Define the API endpoint for sending notifications

@app.route('/notifications', methods=['POST'])

def send_notification():

notification_data = request.get_json()

channel = notification_data['channel']

message = notification_data['message']

redis_client.publish(channel, message)

return 'Notification sent'# Define the function for handling incoming notifications

def handle_notification(channel, message):

print(f'Received message on channel {channel}: {message}')if __name__ == '__main__':

# Subscribe to the 'notifications' channel and start listening for messages

pubsub = redis_client.pubsub()

pubsub.subscribe('notifications')

for message in pubsub.listen():

handle_notification(message['channel'], message['data'])In this implementation, we define an API endpoint for sending notifications with a channel and message payload. When a POST request is made to /notifications, we publish the message to the Redis Pub/Sub system using the specified channel. We also define a function for handling incoming notifications. This function simply prints the channel and message payload to the console, but in a real system, you would likely use this function to trigger some kind of action or update in the system. Finally, we subscribe to the ‘notifications’ channel using the Redis pubsub() method and start listening for messages. When a message is received, the handle_notification() function is called with the channel and message payload as arguments._

A full text search service in system design is a mechanism that allows a system to search for and retrieve documents or other data based on keyword or phrase searches.

It is often used in systems that have large amounts of unstructured or semi-structured data, such as text documents or web pages. In order to implement a full text search service, you will need to define the data model and indexing strategy, the search API, and the logic for querying and retrieving results.

Implementation of a full text search service in Python using the Flask framework and the Elasticsearch search engine:

from flask import Flask, request, jsonify

from elasticsearch import Elasticsearch

app = Flask(__name__)

es = Elasticsearch()# Define the data model for a document

class Document:

def __init__(self, id, title, content):

self.id = id

self.title = title

self.content = content# Define the function for indexing documents

def index_document(document):

es.index(index='documents', body={

'id': document.id,

'title': document.title,

'content': document.content

})# Define the API endpoint for searching documents

@app.route('/search', methods=['GET'])

def search_documents():

query = request.args.get('q', '')

results = es.search(index='documents', body={

'query': {

'multi_match': {

'query': query,

'fields': ['title', 'content']

}

}

})['hits']['hits']

documents = [Document(r['_source']['id'], r['_source']['title'], r['_source']['content']) for r in results]

return jsonify({'documents': [doc.__dict__ for doc in documents]})if __name__ == '__main__':

# Index some example documents

documents = [

Document(1, 'Lorem Ipsum', 'Lorem ipsum dolor sit amet, consectetur adipiscing elit.'),

Document(2, 'Python Tutorial', 'Learn Python programming with this tutorial.'),

Document(3, 'Flask Web Framework', 'Build web applications with Flask.')

]

for doc in documents:

index_document(doc)

app.run(debug=True)In this implementation, we define a data model for a document object with an id, title, and content. We also define a function for indexing documents in the Elasticsearch search engine. When a GET request is made to /search?q=, we use the Elasticsearch search API to search for documents containing the specified query in either the title or content fields. We then construct a list of Document objects from the search results and return them as a JSON response. Finally, we index some example documents in the Elasticsearch index when the application starts up._

Caching is a technique used to store frequently accessed data in a temporary storage area so that it can be retrieved faster when required. It is commonly used in system design to improve performance and reduce latency.

How Caching Works:

Caching works by storing data in a fast-access storage area that is close to the data consumer. When data is requested, the system checks the cache first to see if the data is already available. If the data is available in the cache, it is retrieved and returned to the consumer. If the data is not available in the cache, the system retrieves the data from the original source and stores it in the cache for future use.

Implementing a Caching Service with Python:

In Python, we can implement a caching service using the built-in dict data type.

Implementation of a caching service that stores the result of a function call using a dictionary:

cache = {}

def cached_func(func):

def wrapper(*args):

if args in cache:

return cache[args]

else:

result = func(*args)

cache[args] = result

return result

return wrapperIn this implementation, cache is a dictionary that will be used to store the results of function calls. The cached_func decorator takes a function as an argument and returns a new function that caches the results of the original function. The new function, wrapper, first checks the cache to see if the result of the function call has already been computed. If the result is available in the cache, it is returned immediately. If the result is not in the cache, the original function is called with the arguments provided, and the result is stored in the cache before being returned._

To use the caching service, we simply decorate the function we want to cache with the cached_func decorator:

@cached_func

def fibonacci(n):

if n <= 1:

return n

else:

return fibonacci(n-1) + fibonacci(n-2)In this implementation, the fibonacci function will be cached, so that subsequent calls to fibonacci(n) with the same value of n will return the cached result instead of computing the result again. Caching can greatly improve the performance of a system by reducing the time needed to compute frequently accessed data. However, it’s important to be aware of the limitations of caching, such as the increased memory usage and the need to keep the cache consistent with the original data source.

It’s the process of distributing requests or messages from a single source to multiple destinations.

This is a common pattern in distributed systems, where a service or application needs to send information to multiple downstream systems.

One way to implement a fan out service is to use a message queue. The service would receive requests or messages from the source system, and then publish those messages to a message queue. Each downstream system would then subscribe to the message queue and receive a copy of the message.

Implementation of a fan out service using Python and RabbitMQ as the message queue:

import pika

# Connect to RabbitMQ

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()# Create the message queue

channel.exchange_declare(exchange='fanout_exchange', exchange_type='fanout')# Define the message to be sent

message = 'Hello, world!'# Publish the message to the message queue

channel.basic_publish(exchange='fanout_exchange', routing_key='', body=message)# Close the connection

connection.close()In this implementation, the service connects to a RabbitMQ instance running on localhost. It then declares a fanout exchange, which is a type of exchange that broadcasts messages to all bound queues. The service then creates a message to be sent and publishes it to the exchange with an empty routing key, indicating that the message should be sent to all queues bound to the exchange.

To receive the messages, downstream systems would need to create a queue and bind it to the fanout exchange.

Here’s an implementation of a downstream system using Python and RabbitMQ:

import pika

# Connect to RabbitMQ

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()# Create a queue and bind it to the fanout exchange

result = channel.queue_declare(queue='', exclusive=True)

queue_name = result.method.queue

channel.queue_bind(exchange='fanout_exchange', queue=queue_name)# Define a callback function to handle received messages

def callback(ch, method, properties, body):

print(f'Received message: {body}')# Start consuming messages from the queue

channel.basic_consume(queue=queue_name, on_message_callback=callback, auto_ack=True)

channel.start_consuming()In this implementation, the downstream system connects to the same RabbitMQ instance as the fan out service. It then declares a queue with an empty name, which creates a unique queue name that is only accessible to the current connection. The downstream system then binds the queue to the fanout exchange created by the fan out service. The system defines a callback function to handle received messages, which simply prints the message body to the console. Finally, the system starts consuming messages from the queue using the basic_consume method, passing the callback function as an argument.

A memory cache is a system component that stores frequently accessed data in memory for fast access. It can be used to improve the performance of a system by reducing the number of expensive database queries or other resource-intensive operations.

In a system, the memory cache would be responsible for managing the following tasks:

- Storing and retrieving data from memory

- Expiring or invalidating cached data after a certain amount of time or under certain conditions

- Maintaining cache consistency and preventing data corruption

- Managing cache capacity to avoid running out of memory

Implementation of a simple memory cache in Python:

class MemoryCache:

def __init__(self, capacity=1000, default_ttl=60):

self.cache = {}

self.capacity = capacity

self.default_ttl = default_ttl

def get(self, key):

if key not in self.cache:

return None

value, expiration = self.cache[key]

if expiration is not None and expiration < time.time():

del self.cache[key]

return None

return value

def set(self, key, value, ttl=None):

if len(self.cache) >= self.capacity:

self.evict()

if ttl is None:

ttl = self.default_ttl

expiration = time.time() + ttl

self.cache[key] = (value, expiration)

def delete(self, key):

if key in self.cache:

del self.cache[key]

def evict(self):

now = time.time()

expired_keys = [k for k, (v, e) in self.cache.items() if e is not None and e < now]

for key in expired_keys:

del self.cache[key]

def clear(self):

self.cache.clear()In this implementation, the MemoryCache class uses a dictionary to store key-value pairs, where the keys are the data being cached and the values are tuples containing the cached value and its expiration time. The get method retrieves a value from the cache, checking its expiration time to ensure it hasn't expired. The set method adds a new key-value pair to the cache, setting an optional time-to-live (TTL) value to determine how long the value should be cached for. If the cache is at capacity, the evict method is called to remove expired keys and make room for new ones. The delete method removes a key-value pair from the cache, and the clear method removes all key-value pairs from the cache.

DNS (Domain Name System) is a system used to translate human-readable domain names into IP addresses. It acts as a directory for the internet, allowing users to easily access websites and other internet services without needing to remember IP addresses.

In a system, a DNS server would be responsible for managing the following tasks:

- Resolving domain names into IP addresses

- Caching resolved IP addresses to improve performance

- Load balancing between multiple IP addresses for a given domain name

- Handling domain name registration and management

Implementation of a simple DNS resolver in Python:

import socket

class DNSResolver:

def __init__(self, ttl=60):

self.cache = {}

self.ttl = ttl

def resolve(self, domain):

if domain in self.cache:

ip, expiration = self.cache[domain]

if expiration > time.time():

return ip

ips = socket.getaddrinfo(domain, None)

if not ips:

return None

ip = ips[0][4][0]

self.cache[domain] = (ip, time.time() + self.ttl)

return ip

def clear_cache(self):

self.cache.clear()In this implementation, the DNSResolver class uses the socket module to resolve domain names into IP addresses. The resolve method first checks the cache to see if the requested domain name has already been resolved and cached. If the cached IP address has not expired, it is returned immediately. Otherwise, the getaddrinfo function is called to resolve the domain name into one or more IP addresses. If no IP addresses are returned, None is returned. Otherwise, the first IP address in the list is returned and cached with an expiration time based on the TTL value specified in the constructor. The clear_cache method removes all cached IP addresses from the cache.

Real-time message ingestion is the process of capturing and processing messages as they are generated in real-time.

This is a common requirement for many systems that handle real-time data, such as chat applications, social media platforms, and IoT systems.

In a system, real-time message ingestion would be responsible for managing the following tasks:

- Capturing messages as they are generated

- Storing messages in a way that allows for efficient retrieval and querying

- Processing messages in real-time to perform actions such as notifications or real-time analytics

- Scaling to handle high message volume and ensure high availability

Implementation of a simple real-time message ingestion system in Python using the Flask web framework and Redis as a message broker:

from flask import Flask, request

from redis import Redis

import json

app = Flask(__name__)

redis = Redis(host='localhost', port=6379)@app.route('/message', methods=['POST'])

def add_message():

message = json.loads(request.data)

redis.publish('messages', json.dumps(message))

return 'Message received'if __name__ == '__main__':

app.run(debug=True)In this implementation, the Flask web framework is used to create an HTTP endpoint at /message that accepts JSON-formatted messages via a POST request. The add_message function deserializes the JSON payload, and then publishes the message to a Redis message broker using the publish method. This allows the message to be distributed to any number of subscribers in real-time.

Subscribers can then use Redis’ subscribe method to listen for messages on the 'messages' channel:

from redis import Redis

import json

redis = Redis(host='localhost', port=6379)

pubsub = redis.pubsub()

pubsub.subscribe('messages')for message in pubsub.listen():

if message['type'] == 'message':

data = json.loads(message['data'])

# Do something with the message dataIn this implementation, the pubsub object is created to listen for messages on the 'messages' channel. The listen method blocks until a message is received, and then returns a dictionary with the message type and data. If the message type is 'message', the message data is deserialized from JSON and can then be used for further processing.

A load balancer is a system component that distributes incoming network traffic across multiple servers to improve performance, reliability, and availability.

Load balancers can be used to handle a large number of requests, prevent overload on individual servers, and ensure that traffic is distributed evenly among servers.

In a system, a load balancer would be responsible for managing the following tasks:

- Monitoring server health and availability

- Distributing traffic across available servers

- Managing session persistence (i.e. ensuring that subsequent requests from a client are routed to the same server)

- Handling SSL termination (i.e. decrypting encrypted traffic and forwarding it to the appropriate server)

Implementation of a simple load balancer in Python:

import random

class LoadBalancer:

def __init__(self, servers):

self.servers = servers

self.num_servers = len(servers)

self.server_statuses = [True] * self.num_servers

def choose_server(self):

available_servers = [i for i in range(self.num_servers) if self.server_statuses[i]]

if not available_servers:

return None

return random.choice(available_servers)

def handle_request(self, request):

server_index = self.choose_server()

if server_index is None:

raise Exception("No available servers")

server = self.servers[server_index]

return server.handle_request(request)

def set_server_status(self, server_index, status):

self.server_statuses[server_index] = statusIn this implementation, the LoadBalancer class takes a list of Server objects in its constructor. The choose_server method randomly selects an available server from the list, based on the current server statuses. The handle_request method uses the choose_server method to select a server, and then forwards the request to that server's handle_request method. If no available server is found, an exception is raised. The set_server_status method is used to set the status of a server to either available or unavailable.

A Content Delivery Network (CDN) is a distributed network of servers that helps to deliver content faster and more efficiently to end-users by caching content closer to the user’s location.

A CDN can be used in a system design to improve website performance and reliability by reducing latency, improving website availability and scalability, and reducing bandwidth costs.

Implementation of CDN using Amazon CloudFront, one of the popular CDN services available:

- Sign up for an AWS account and create a new CloudFront distribution.

aws cloudfront create-distribution \

--origin-domain-name example.com \

--default-root-object index.html \

--enabled

- Set up your origin server(s) by creating an Amazon S3 bucket or an EC2 instance as your origin server.

- Create a new DNS record for your distribution using your preferred DNS provider (e.g., Amazon Route 53) and point it to your CloudFront distribution.

- Test your CDN by accessing your website using the new DNS record. You should see improved performance and faster load times.

In summary, implementing a CDN in a system design can greatly improve website performance and reliability by caching content closer to end-users. It can also reduce latency, improve website availability and scalability, and reduce bandwidth costs.

A job server in system design is responsible for managing and executing background jobs or tasks asynchronously. It can be used in various applications such as web scraping, data processing, or batch processing.

The implementation of a job server can be done using the following steps:

- Job queue: Define a job queue to store the jobs to be executed. This can be a message queue such as RabbitMQ, a database table, or a simple in-memory data structure such as a list.

- Job producer: Define a job producer to add jobs to the job queue. This can be a user interface, a REST API endpoint, or any other application logic that generates jobs.

- Job consumer: Define a job consumer to fetch jobs from the job queue and execute them. This can be a worker process that runs continuously in the background, or a scheduler that runs periodically to check for new jobs.

- Job executor: Define the logic for executing the jobs. This includes processing input data, performing the necessary calculations or operations, and generating output data.

Implementation of a job server using Python and the Flask web framework:

from flask import Flask, request, jsonify

from queue import Queue

import threading

app = Flask(__name__)

job_queue = Queue()def worker():

while True:

job = job_queue.get()

# execute the job logic here

print(f"Executing job {job['id']} with data {job['data']}")

job_queue.task_done()@app.route('/job', methods=['POST'])

def create_job():

# get the request data

data = request.get_json() # validate the data

if 'data' not in data:

return jsonify({'error': 'Invalid data'}), 400 # add the job to the job queue

job_id = len(job_queue) + 1

job = {'id': job_id, 'data': data['data']}

job_queue.put(job) # return the ID of the created job

return jsonify({'id': job_id}), 201if __name__ == '__main__':

# start the worker threads

for i in range(4):

t = threading.Thread(target=worker)

t.daemon = True

t.start() # start the Flask app

app.run(debug=True)In this implementation, the job server provides a single endpoint create_job() that accepts job data as input and adds it to the job queue. The worker() function runs continuously in the background, fetching jobs from the queue and executing them. To start the worker threads, the name == 'main'_ block creates four worker threads and starts them in daemon mode. This ensures that the threads will exit gracefully when the main thread terminates. When a job is added to the job queue, it is assigned an ID and a dictionary object with the job data. The worker thread fetches the job from the queue, extracts the job data, and executes the job logic. In this example, the job logic is simply printing the job ID and data to the console, but it could be any arbitrary code for processing the job data. Finally, the worker thread calls task_done() to signal that the job has been completed.

Workers in system design refer to background processes that perform tasks asynchronously and independently of the main system flow.

They are often used to perform tasks that are time-consuming or resource-intensive, such as processing large files, sending emails, or performing data analysis.

Implementation of a worker system in Python using the Celery distributed task queue:

from celery import Celery

import time

app = Celery('tasks', broker='pyamqp://guest@localhost//')# Define a task that takes some time to complete

@app.task

def long_task(duration):

time.sleep(duration)

return f'Task completed after {duration} seconds'if __name__ == '__main__':

# Queue up some tasks

result1 = long_task.delay(5)

result2 = long_task.delay(10)

result3 = long_task.delay(15) # Check the status of the tasks

print(f'Task 1 status: {result1.status()}')

print(f'Task 2 status: {result2.status()}')

print(f'Task 3 status: {result3.status()}') # Wait for the tasks to complete and retrieve the results

print(result1.get())

print(result2.get())

print(result3.get())In this implementation, we define a Celery app and a task that takes a specified number of seconds to complete using the time.sleep() function. We also define a main function that queues up some example tasks and checks their status before waiting for them to complete and retrieving the results. When the application runs, the long_task() function is executed asynchronously and independently of the main system flow. We can queue up multiple tasks and check their status using the result.status() method. We can also retrieve the results of the completed tasks using the result.get() method.

An application server in system design is a server that provides a platform for running and managing applications, often in a distributed or scalable environment.

It typically includes features such as load balancing, caching, and session management, and is often used to deploy web applications or APIs.

Implementation of an application server in Python using the Flask web framework and the Gunicorn WSGI HTTP server:

from flask import Flask, jsonify

import os

app = Flask(__name__)# Define an API endpoint

@app.route('/api', methods=['GET'])

def hello():

return jsonify({'message': 'Hello, world!'})if __name__ == '__main__':

# Get the port number from the environment variable, or use a default value

port = int(os.environ.get('PORT', 5000))

# Run the application using the Gunicorn WSGI HTTP server

app.run(host='0.0.0.0', port=port)In this implementation, we define a Flask app with a single API endpoint that returns a JSON response. We then use the Gunicorn HTTP server to run the app on a specified port. We also get the port number from an environment variable, which allows us to easily configure the port when deploying the app.

To run this application, you would first need to install Flask and Gunicorn. You can do this using pip:

pip install flask gunicorn

Once you have installed the necessary packages, you can run the application using the following command:

Copy code

gunicorn app:app

This command tells Gunicorn to run the app module (which contains our Flask app) and the app variable (which is the name of our Flask app). Gunicorn will start up multiple worker processes to handle incoming requests, and will handle load balancing and other features automatically.

Queues are an important part of many system designs, allowing you to process tasks and messages in a distributed or asynchronous manner.

In this , we’ll implement a simple queue using the Python queue module.

import queue

import time

import threading

# Define a function that will process items from the queue

def process_queue(q):

while True:

item = q.get()

print(f'Processing item {item}...')

time.sleep(1)

q.task_done()# Create a queue and start the worker threads

q = queue.Queue()

for i in range(5):

t = threading.Thread(target=process_queue, args=(q,))

t.daemon = True

t.start()# Add some items to the queue

for i in range(10):

q.put(i)# Wait for the queue to be processed

q.join()

print('All items processed')In this implementation, we define a process_queue() function that will process items from the queue. We then create a queue.Queue() object and start five worker threads that will call process_queue() to process items from the queue. We then add ten items to the queue using q.put(). The worker threads will automatically process these items in a first-in, first-out (FIFO) order. Finally, we call_ q.join() to wait for all of the items to be processed. This function blocks until all items in the queue have been processed and marked as done using q.task_done()._

Object storage is a method of storing and retrieving unstructured data in the form of objects. It is often used to store large amounts of data, such as media files, and can be accessed over a network using APIs.

How an object store can be implemented using Python:

import os

import hashlib

class ObjectStore:

def __init__(self, storage_directory):

self.storage_directory = storage_directory def put(self, data):

"""

Store an object in the object store

"""

# generate a unique filename for the object

filename = hashlib.md5(data).hexdigest() # write the object data to a file

with open(os.path.join(self.storage_directory, filename), 'wb') as f:

f.write(data) return filename def get(self, filename):

"""

Retrieve an object from the object store

"""

# read the object data from a file

with open(os.path.join(self.storage_directory, filename), 'rb') as f:

data = f.read() return data# example usage

object_store = ObjectStore('/path/to/storage/directory')

data = b'This is some test data'

filename = object_store.put(data)

retrieved_data = object_store.get(filename)

print(retrieved_data)In this implementation, the object store is implemented as a Python class with two methods: put and get. The_ put method takes an object as input, generates a unique filename for the object using a hash function, and writes the object data to a file in the specified storage directory. The method returns the generated filename, which can be used to retrieve the object later. The get method takes a filename as input and reads the corresponding object data from a file in the storage directory. The method returns the object data as bytes.

Authentication is the process of verifying the identity of a user, typically by requiring them to provide credentials such as a username and password.

It is a crucial component of many systems to ensure that only authorized users can access protected resources.

How authentication can be implemented in Python using the Flask web framework:

from flask import Flask, request, jsonify

app = Flask(__name__)# mock user data for demonstration purposes

users = {

"alice": {

"password": "password123",

"email": "alice@example.com"

},

"bob": {

"password": "password456",

"email": "bob@example.com"

}

}# authentication endpoint

@app.route('/authenticate', methods=['POST'])

def authenticate():

username = request.json.get('username')

password = request.json.get('password') if username in users and password == users[username]['password']:

# authentication successful

return jsonify({'success': True, 'email': users[username]['email']})

else:

# authentication failed

return jsonify({'success': False, 'message': 'Invalid username or password'}), 401# example usage

response = app.test_client().post('/authenticate', json={'username': 'alice', 'password': 'password123'})

print(response.get_json())In this implementation, the authentication process is implemented as a Flask endpoint that accepts a username and password as input in a JSON payload. The endpoint checks the provided credentials against a mock user database, and if they are valid, returns a JSON response indicating success along with the user’s email address. If the credentials are invalid, the endpoint returns a JSON response indicating failure along with an error message and a 401 HTTP status code to indicate unauthorized access. In a real-world system, authentication would likely involve more sophisticated mechanisms such as encryption, multi-factor authentication, and user management.

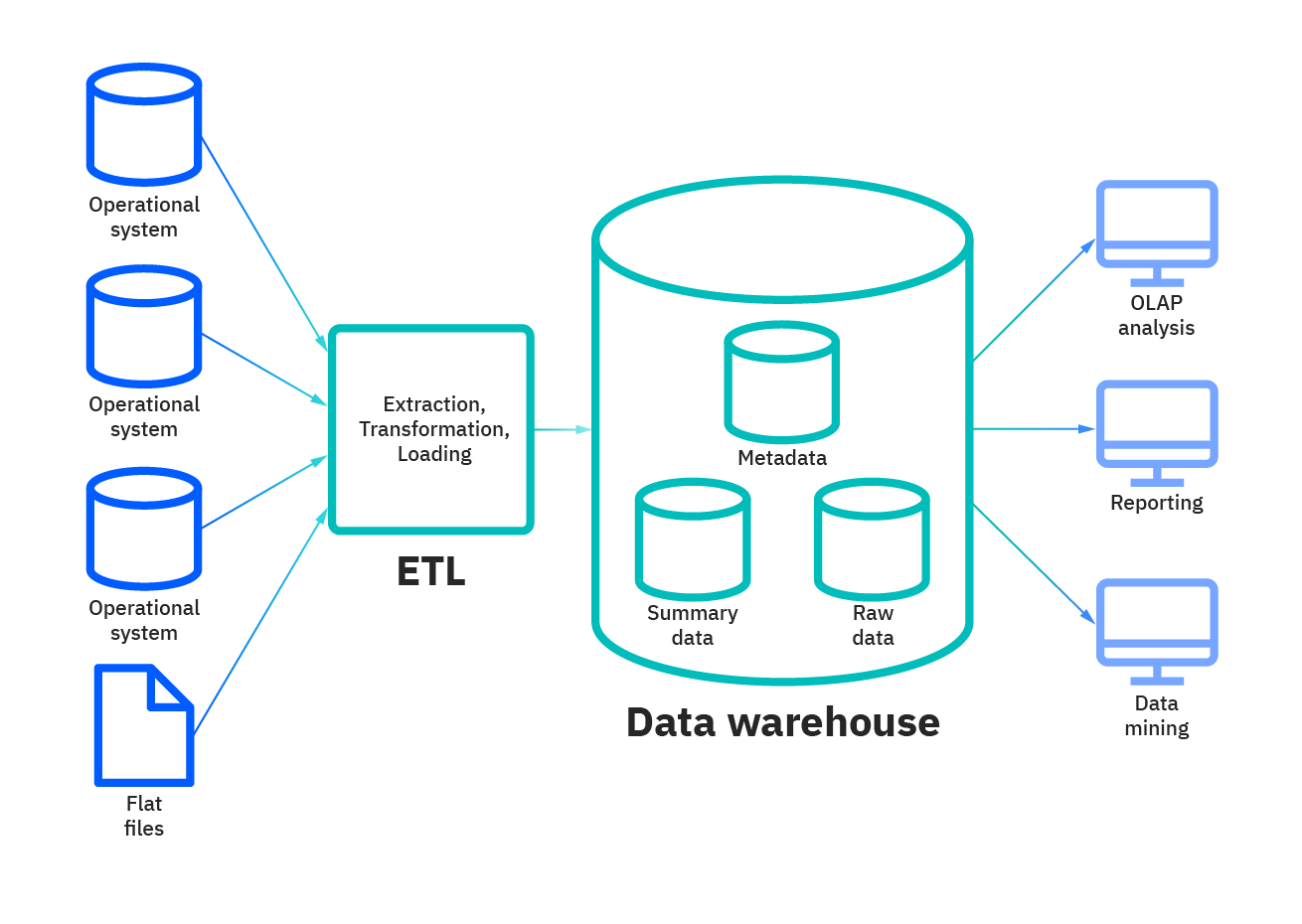

Batch processing is a method of processing a large number of input items as a single batch, typically for efficiency reasons.

It is often used in systems that need to process large amounts of data, such as data pipelines or ETL (Extract, Transform, Load) processes.

How batch processing can be implemented in Python using the multiprocessing module:

import multiprocessing

def process_batch(batch):

"""

Process a single batch of input items

"""

# process the input items in the batch

result = []

for item in batch:

result.append(item.upper()) return resultdef batch_process(input_data, batch_size=10):

"""

Process the input data in batches

"""

# split the input data into batches

batches = [input_data[i:i+batch_size] for i in range(0, len(input_data), batch_size)] # process each batch using multiprocessing

with multiprocessing.Pool() as pool:

results = pool.map(process_batch, batches) # flatten the results and return them

return [item for batch_result in results for item in batch_result]# example usage

input_data = ['apple', 'banana', 'cherry', 'date', 'elderberry', 'fig', 'grape', 'honeydew', 'kiwi', 'lemon']

output_data = batch_process(input_data, batch_size=3)

print(output_data)In this implementation, the batch_process function takes a list of input items and a batch size as input, and splits the input items into batches of the specified size. It then uses the multiprocessing module to process each batch in parallel using the process_batch function, which simply applies a transformation to each item in the batch. Finally, the results from each batch are combined into a single output list and returned.

Cloud storage is a method of storing data in a remote server or a network of servers that are accessible over the internet.

It is commonly used in systems that require scalable, flexible, and reliable storage solutions.

How cloud storage can be implemented in Python using the boto3 library to interact with Amazon S3:

import boto3

# create an S3 client

s3 = boto3.client('s3')# create a new bucket

bucket_name = 'my-bucket'

s3.create_bucket(Bucket=bucket_name)# upload a file to the bucket

file_name = 'example.txt'

with open(file_name, 'rb') as f:

s3.upload_fileobj(f, bucket_name, file_name)# download a file from the bucket

with open('downloaded.txt', 'wb') as f:

s3.download_fileobj(bucket_name, file_name, f)# list the contents of the bucket

response = s3.list_objects_v2(Bucket=bucket_name)

for item in response['Contents']:

print(item['Key'])In this implementation, the boto3 library is used to create an S3 client that can interact with Amazon S3. A new bucket is created using the create_bucket method, and a file is uploaded to the bucket using the upload_fileobj method. The file is then downloaded from the bucket using the download_fileobj method, and the contents of the bucket are listed using the list_objects_v2 method.

Stream processing is a critical component of many modern system designs, allowing applications to process large volumes of data in real-time. In stream processing, data is processed as it is generated, allowing for faster analysis and insights.

Implementation of stream processing using Python and Apache Kafka:

- Set up Apache Kafka using the Confluent platform, which provides a Python client for interacting with Kafka:

from confluent_kafka import Producer, Consumer

conf = {

'bootstrap.servers': 'my-kafka-broker:9092',

'client.id': 'my-client-id'

}producer = Producer(conf)

consumer = Consumer({

'bootstrap.servers': 'my-kafka-broker:9092',

'group.id': 'my-consumer-group',

'auto.offset.reset': 'earliest'

})- Create a Kafka topic to store the incoming stream of data:

producer.produce('my-topic', key='key', value='value')

- Set up a Kafka consumer to process the incoming stream of data:

consumer.subscribe(['my-topic'])

while True:

msg = consumer.poll(1.0) if msg is None:

continue if msg.error():

print(f"Consumer error: {msg.error()}")

continue print(f"Received message: {msg.value().decode('utf-8')}")- Process the incoming stream of data using Python’s data processing libraries like pandas, numpy, or scikit-learn:

import pandas as pd

df = pd.read_json(msg.value())

# perform data processing on the DataFrame

* Write the processed data to an output stream or database:

import sqlite3

conn = sqlite3.connect('my-db')

c = conn.cursor()# create table if it doesn't exist

c.execute('''CREATE TABLE IF NOT EXISTS my_table

(column1 text, column2 text, column3 text)''')# insert data into table

c.execute(f"INSERT INTO my_table VALUES (?, ?, ?)", (val1, val2, val3))

conn.commit()# close database connection

conn.close()In summary, stream processing using Python and Apache Kafka is a powerful tool for system design, allowing for the real-time processing of large volumes of data. The above code shows how to set up a Kafka producer to send data to a topic, a consumer to read data from the topic, process the data using data processing libraries, and write the results to an output stream or database.

Master-Slave is a common pattern used in system design to improve the scalability, performance, and availability of applications.

In this pattern, there is one master node that handles write requests and one or more slave nodes that handle read requests.

Here’s an implementation of the Master-Slave pattern using Python and Redis:

- Set up Redis using the redis-py library:

import redis

# Connect to Redis as the master node

master = redis.Redis(host='my-master-redis-host', port=6379)# Connect to Redis as the slave node(s)

slave1 = redis.Redis(host='my-slave-redis-host-1', port=6379)

slave2 = redis.Redis(host='my-slave-redis-host-2', port=6379)

* Write data to the master node using the `set()` method:

master.set('my-key', 'my-value')

* Read data from the slave node(s) using the `get()` method:

# read from slave1

value = slave1.get('my-key')

# if value is None, try reading from slave2

if value is None:

value = slave2.get('my-key')

* Configure Redis to replicate data from the master to the slave nodes:

# Set up Redis as a master-slave replication system

master_config = {

'slaveof': None # master has no slave

}

slave1_config = {

'slaveof': ('my-master-redis-host', 6379)

}slave2_config = {

'slaveof': ('my-master-redis-host', 6379)

}# Configure the Redis instances

master.config_set(**master_config)

slave1.config_set(**slave1_config)

slave2.config_set(**slave2_config)In summary, implementing the Master-Slave pattern using Python and Redis is a powerful tool for system design, allowing for the improved scalability, performance, and availability of applications. The above code shows how to set up Redis as a master-slave replication system, write data to the master node, and read data from the slave node(s).

Authentication servers are a critical component of many modern system designs, allowing applications to authenticate users and manage access control. In this pattern, there is a separate server dedicated to handling authentication requests and managing user credentials.

Here’s an implementation of an authentication server using Python and Flask:

- Set up a Flask server to handle authentication requests:

from flask import Flask, request, jsonify

app = Flask(__name__)@app.route('/login', methods=['POST'])

def login():

# Authenticate the user

username = request.json.get('username')

password = request.json.get('password')

# Check the username and password against the database

if authenticate_user(username, password):

# If the user is authenticated, generate a token

token = generate_token(username)

return jsonify({'token': token})

else:

return jsonify({'error': 'Invalid username or password'})- Set up a user database to store user credentials:

import sqlite3

def create_user_table():

conn = sqlite3.connect('users.db')

c = conn.cursor()

c.execute('''CREATE TABLE IF NOT EXISTS users

(username text, password text)''')

conn.commit()

conn.close()def add_user(username, password):

conn = sqlite3.connect('users.db')

c = conn.cursor()

c.execute(f"INSERT INTO users VALUES (?, ?)", (username, password))

conn.commit()

conn.close()def authenticate_user(username, password):

conn = sqlite3.connect('users.db')

c = conn.cursor()

c.execute(f"SELECT * FROM users WHERE username = '{username}' AND password = '{password}'")

result = c.fetchone()

conn.close()

return result is not None- Set up a token generation method to generate and validate authentication tokens:

import jwt

JWT_SECRET_KEY = 'my-secret-key'def generate_token(username):

payload = {'username': username}

return jwt.encode(payload, JWT_SECRET_KEY, algorithm='HS256')def validate_token(token):

try:

decoded_payload = jwt.decode(token, JWT_SECRET_KEY, algorithms=['HS256'])

return decoded_payload.get('username')

except jwt.exceptions.DecodeError:

return None- Set up an access control mechanism to check user authentication:

from functools import wraps

def auth_required(f):

@wraps(f)

def decorated_function(*args, **kwargs):

token = request.headers.get('Authorization')

if token is None:

return jsonify({'error': 'Authentication required'}), 401 username = validate_token(token)

if username is None:

return jsonify({'error': 'Invalid token'}), 401 return f(*args, **kwargs) return decorated_functionIn summary, implementing an authentication server using Python and Flask is a powerful tool for system design, allowing for the authentication and access control of users in a scalable and secure manner. The above code shows how to set up a Flask server to handle authentication requests, set up a user database to store user credentials, generate and validate authentication tokens, and set up an access control mechanism to check user authentication.

SQL Read replicas are an important tool for system design that can help improve the performance, scalability, and availability of databases. A read replica is a copy of a database that can be used to offload read requests from the primary database, reducing its load and improving its performance.

Here is an implementation of SQL Read replicas using Python and Amazon Web Services (AWS):

- Create an AWS account and configure the AWS credentials using the Boto3 library:

import boto3

session = boto3.Session(

aws_access_key_id='YOUR_ACCESS_KEY',

aws_secret_access_key='YOUR_SECRET_KEY',

region_name='YOUR_REGION'

)- Connect to the primary database using the SQL Alchemy library:

from sqlalchemy import create_engine

primary_db_uri = 'postgresql://user:password@primary-db-host:port/primary_db_name'

primary_engine = create_engine(primary_db_uri)- Create a read replica using the AWS RDS service and the Boto3 library:

client = session.client('rds')

response = client.create_db_instance_read_replica(

DBInstanceIdentifier='my-read-replica',

SourceDBInstanceIdentifier='my-primary-db',

DBInstanceClass='db.t2.micro',

AvailabilityZone='us-west-2a',

PubliclyAccessible=False,

Tags=[

{

'Key': 'Name',

'Value': 'my-read-replica'

}

]

)read_replica_endpoint = response['DBInstance']['Endpoint']['Address']- Connect to the read replica database using the SQL Alchemy library:

read_replica_uri = f"postgresql://user:password@{read_replica_endpoint}:port/read_replica_db_name"

read_replica_engine = create_engine(read_replica_uri)- Offload read requests to the read replica using SQL Alchemy’s

create_session()method:

from sqlalchemy.orm import sessionmaker

Session = sessionmaker(bind=read_replica_engine)# create a session using the read replica engine

session = Session()# perform read requests using the session

result = session.execute('SELECT * FROM my_table')In summary, implementing SQL Read replicas using Python and AWS is a straightforward process. The above code shows how to create a read replica database using the Boto3 library, connect to it using the SQL Alchemy library, and offload read requests to it using a session. This can help improve the performance, scalability, and availability of databases in system design.

Load and load estimation are important concepts in system design, as they help engineers determine the resources and capacity required to handle the anticipated workload for a system.

- Load: Load refers to the amount of work or resource utilization that a system is handling at a given time. It can be measured in terms of the number of requests per second, CPU utilization, memory usage, disk I/O, network traffic, or any other relevant metric.

- Load Estimation: Load estimation is the process of predicting the future load on a system. This involves analyzing the patterns of resource utilization, user behavior, and business requirements to determine the expected workload. Load estimation helps engineers to design a system that can handle the anticipated load and avoid overloading or underutilization.

Implementation of load and load estimation in system design using Python:

import psutil

import time

# Define the function to calculate system load

def get_system_load():

# Get the current system load

load = psutil.cpu_percent() # Wait for 1 second to get a more accurate reading

time.sleep(1) # Calculate the average system load over the past 1 second

load = psutil.cpu_percent() return load# Define the function to estimate future system load

def estimate_load():

# Get the current system load

current_load = get_system_load() # Predict the future system load based on historical data

predicted_load = current_load * 2 return predicted_loadIn this implementation, we use the psutil library to get the current CPU load of the system. We define a function called get_system_load() which calculates the average system load over the past 1 second. We use a time.sleep(1) call to wait for 1 second before calculating the load, in order to get a more accurate reading.

We then define a function called estimate_load() which calls get_system_load() to get the current system load, and then predicts the future system load based on historical data. In this example, we simply double the current system load to estimate the future load, but in a real system design, more complex algorithms and historical data could be used to make more accurate predictions.

To estimate the performance of the system you have designed, there are many metrics. In this we will cover most important three —

- Throughput — Its measured by no of jobs processed/ second

- Response time — Its measured by the time between sending request and getting a response

- Latency — Its measured by the time it takes for a request waiting in the queue to be completed.

Throughput: Throughput is a measure of the rate at which a system can process requests.

Implementation of throughput in system design using Python:

import time

# Define the function to measure throughput

def measure_throughput(num_requests):

start_time = time.time() # Perform the requests

for i in range(num_requests):

perform_request() end_time = time.time()

elapsed_time = end_time - start_time # Calculate the throughput

throughput = num_requests / elapsed_time return throughputIn this implementation, we define a function called measure_throughput() which takes the number of requests as an argument, and measures the time it takes to perform the requests using the time library. We then calculate the throughput by dividing the number of requests by the elapsed time.

Response time: Response time is a measure of the time it takes for a system to respond to a request.

Implementation of response time in system design using Python:

import time

# Define the function to measure response time

def measure_response_time():

start_time = time.time() # Perform the request

response = perform_request() end_time = time.time()

elapsed_time = end_time - start_time # Log the response time

log_response_time(elapsed_time) return responseIn this example, we define a function called measure_response_time() which measures the time it takes to perform a single request using the time library. We then log the response time for debugging and performance analysis purposes.

Latency: Latency is a measure of the time it takes for a request to be processed by a system.

Implementation of latency in system design using Python:

import time

# Define the function to measure latency

def measure_latency(num_requests):

start_time = time.time() # Perform the requests

for i in range(num_requests):

start_request_time = time.time()

perform_request()

end_request_time = time.time()

elapsed_request_time = end_request_time - start_request_time

log_latency(elapsed_request_time) end_time = time.time()

elapsed_time = end_time - start_time # Calculate the average latency

avg_latency = sum(latencies) / len(latencies) return avg_latencyIn this example, we define a function called measure_latency() which takes the number of requests as an argument, and measures the time it takes for each request to be processed using the time library. We then log the latency for each request for debugging and performance analysis purposes, and calculate the average latency by dividing the sum of the latencies by the number of requests.

CPU utilization, memory usage, disk I/O, and network traffic are key performance metrics that are used to measure the resource utilization of a computer system. Here’s a brief overview of how these metrics are calculated:

- CPU utilization: CPU utilization is a measure of how much of the CPU’s processing capacity is being used. It can be calculated as the ratio of the time the CPU spends executing instructions to the total time available. This metric can be obtained by measuring the number of CPU cycles used over a specific interval of time. On Unix-based systems, this information can be obtained using the “top” or “vmstat” commands, while on Windows systems, it can be obtained using the Task Manager or Performance Monitor.

- Memory usage: Memory usage is a measure of how much of the system’s memory is being used. This metric can be obtained by measuring the amount of physical memory (RAM) being used and the amount of virtual memory being swapped to disk. On Unix-based systems, this information can be obtained using the “free” or “vmstat” commands, while on Windows systems, it can be obtained using the Task Manager or Performance Monitor.

- Disk I/O: Disk I/O is a measure of how much data is being read from and written to the disk. This metric can be obtained by measuring the number of disk reads and writes and the amount of data being transferred. On Unix-based systems, this information can be obtained using the “iostat” command, while on Windows systems, it can be obtained using the Performance Monitor.

- Network traffic: Network traffic is a measure of how much data is being transmitted over the network. This metric can be obtained by measuring the amount of data being sent and received, the number of network packets, and the network bandwidth utilization. On Unix-based systems, this information can be obtained using the “ifconfig” or “netstat” commands, while on Windows systems, it can be obtained using the Performance Monitor or the “netstat” command.

CPU Utilization: CPU utilization is a measure of how much of the CPU’s processing power is being used.

Implementation of CPU utilization in system design using Python:

import psutil

# Get the current CPU utilization

cpu_percent = psutil.cpu_percent(interval=1)In this implementation, we use the psutil library to get the current CPU utilization. We specify an interval of 1 second for the measurement.

Memory Usage: Memory usage is a measure of how much memory is being used by a system.

Implementation of memory usage in system design using Python:

import psutil

# Get the current memory usage

mem = psutil.virtual_memory()

mem_percent = mem.percentIn this implementation, we use the psutil library to get the current memory usage. We use the virtual_memory() function to get information about the system's virtual memory, and then extract the memory usage percentage.

Disk I/O: Disk I/O is a measure of the amount of input/output operations being performed on a disk.

Implementation of disk I/O in system design using Python:

import psutil

# Get the current disk I/O

disk_io = psutil.disk_io_counters()

read_bytes = disk_io.read_bytes

write_bytes = disk_io.write_bytesIn this implementation, we use the psutil library to get the current disk I/O. We use the disk_io_counters() function to get information about the system's disk I/O, and then extract the number of bytes read and written.

- Network Traffic: Network traffic is a measure of the amount of data being sent and received over a network.

Implementation of network traffic in system design using Python:

import psutil

# Get the current network traffic

net_io = psutil.net_io_counters()

bytes_sent = net_io.bytes_sent

bytes_recv = net_io.bytes_recvIn this implementation, we use the psutil library to get the current network traffic. We use the net_io_counters() function to get information about the system's network traffic, and then extract the number of bytes sent and received.

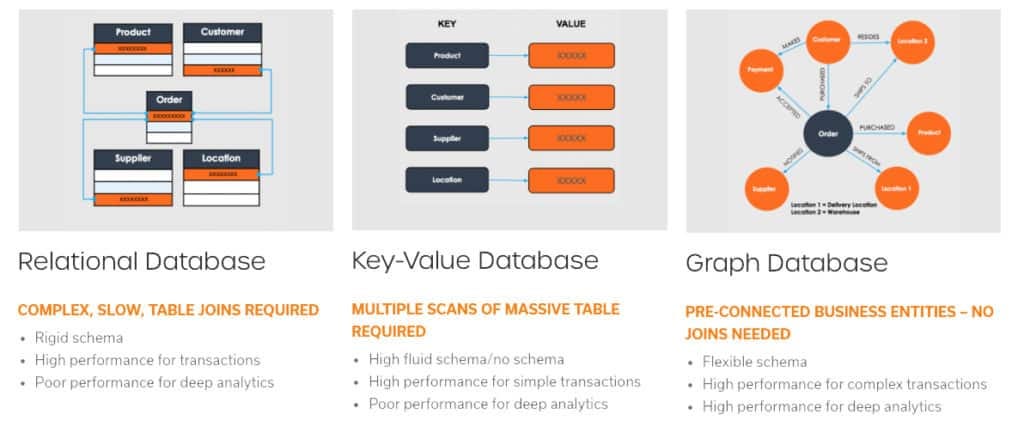

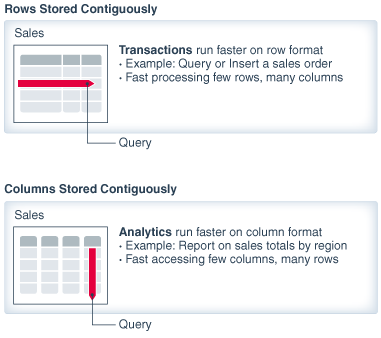

There are three types of Databases —